TransUNet Code Walkthrough

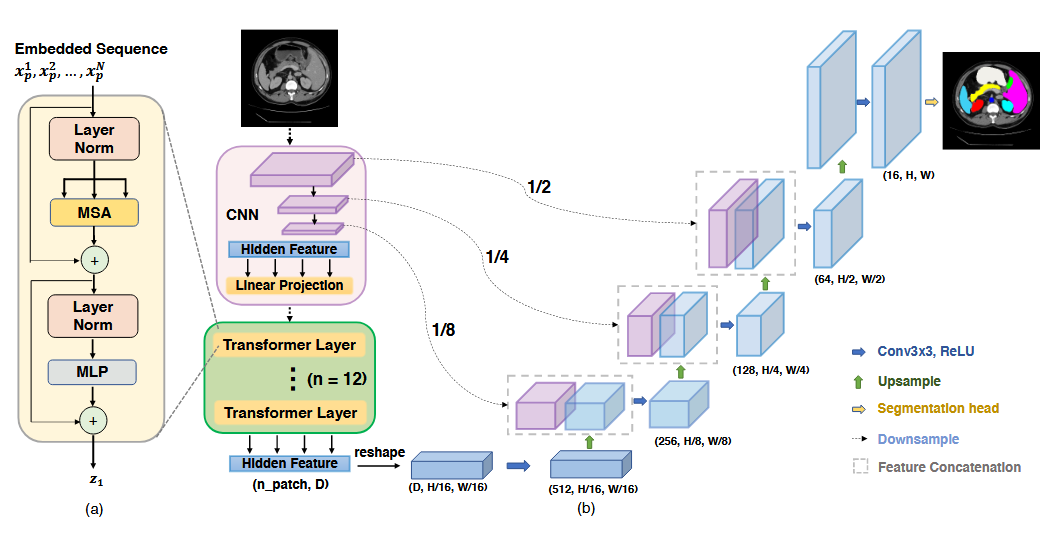

TransUNet

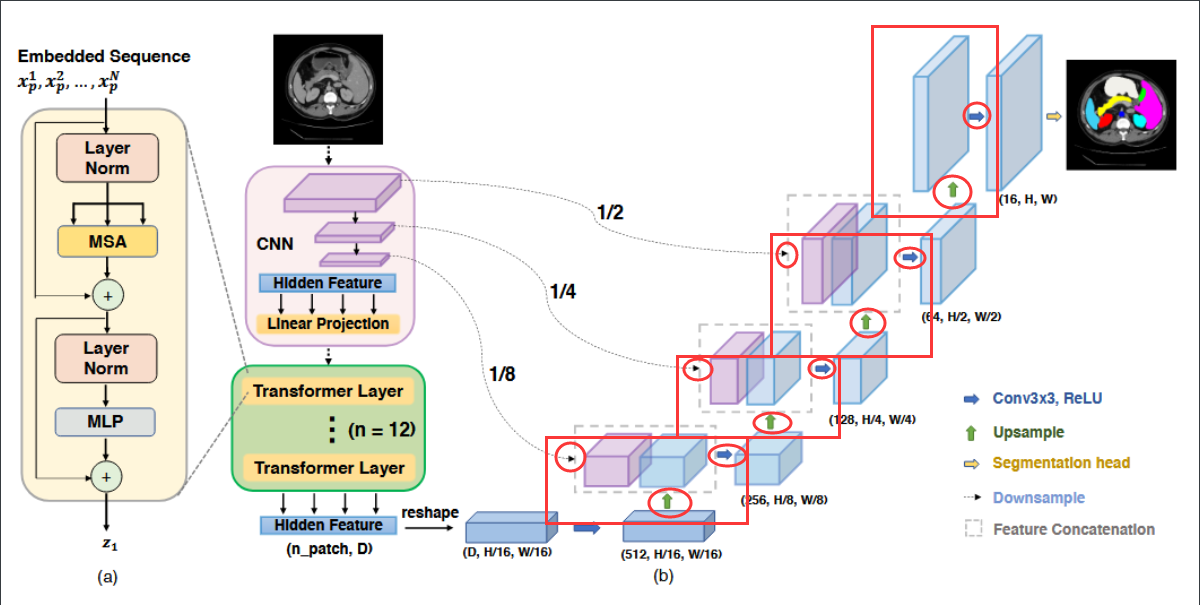

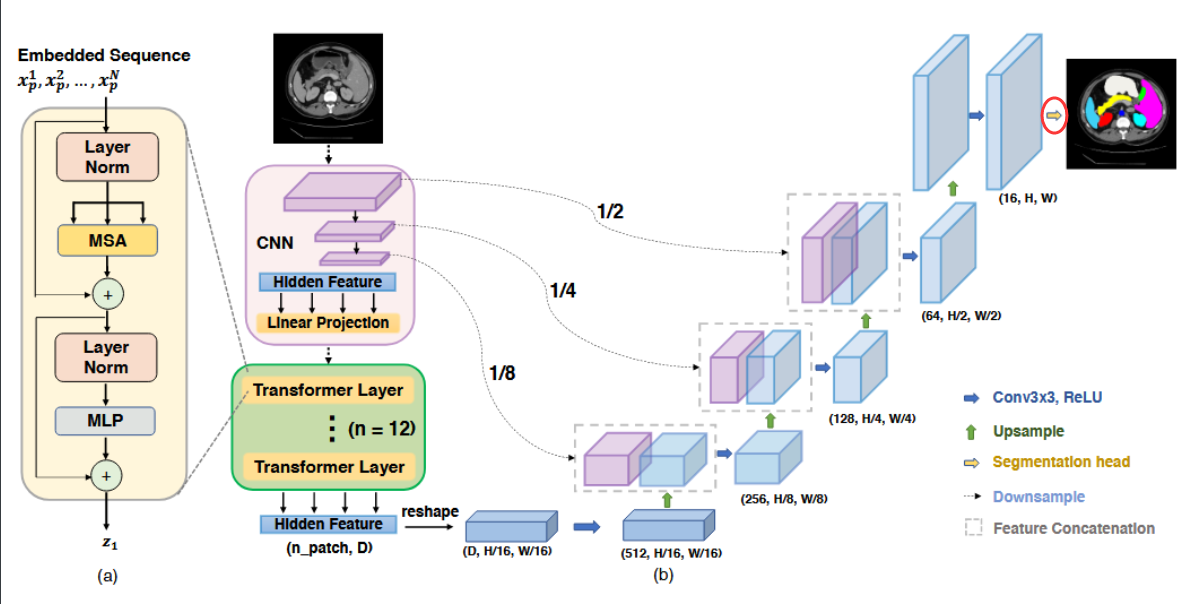

The architecture resembles U-Net and brings in the Transformer idea, hence the name TransUNet.

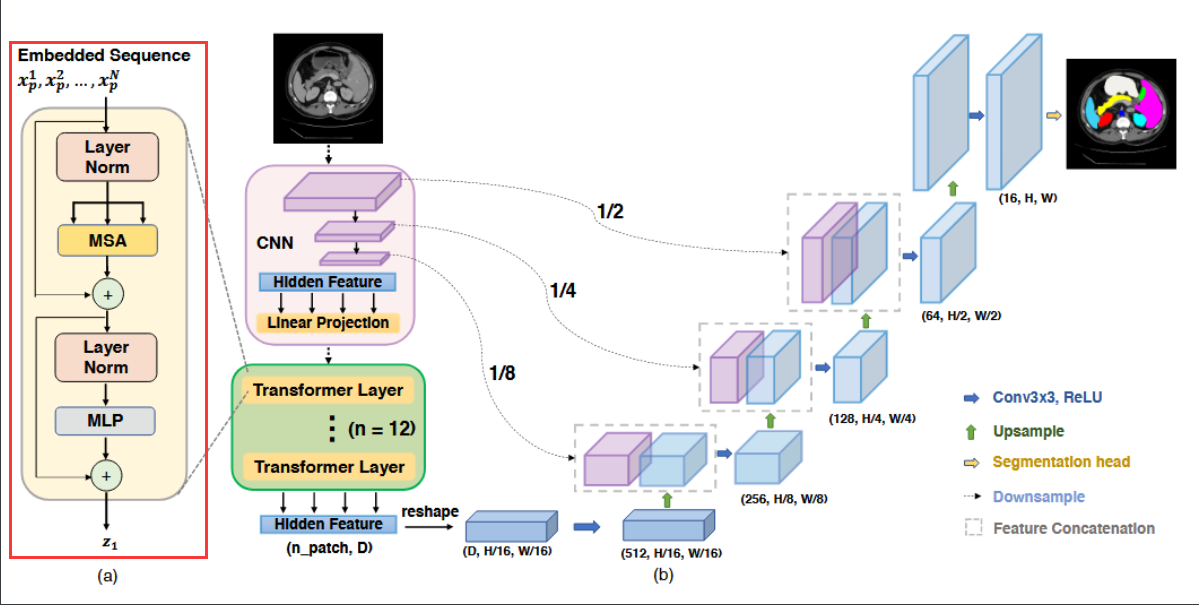

The story starts with the VisionTransformer class.

    class VisionTransformer(nn.Module):
        def __init__(self, config, img_size=224, num_classes=21843, zero_head=False, vis=False):
            super(VisionTransformer, self).__init__()
            self.num_classes = num_classes
            self.zero_head = zero_head
            self.classifier = config.classifier  # 'token'
            self.transformer = Transformer(config, img_size, vis)
            self.decoder = DecoderCup(config)
            self.segmentation_head = SegmentationHead(
                in_channels=config['decoder_channels'][-1],  # 16, from (256, 128, 64, 16)
                out_channels=config['n_classes'],  # 9
                kernel_size=3,
            )
            self.config = config

        def forward(self, x):
            if x.size()[1] == 1:  # grayscale input (e.g. CT images): copy along the channel axis, 1 -> 3 channels
                x = x.repeat(1, 3, 1, 1)  # (B, 3, H, W)
            x, attn_weights, features = self.transformer(x)  # (B, n_patch, hidden); attn_weights is never used afterwards
            x = self.decoder(x, features)  # decode
            logits = self.segmentation_head(x)
            return logits
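To make the data flow of `forward` easier to follow, here is a minimal shape-tracing sketch in plain Python (no PyTorch needed). The function name `trace_transunet_shapes` and its parameters are hypothetical; the default values (196 patches, hidden size 768, 16 decoder output channels, 9 classes) are taken from the comments in the code above, and it assumes that the decoder restores the full input resolution.

```python
def trace_transunet_shapes(batch, in_channels, img_size,
                           patch_grid=14, hidden=768,
                           decoder_out_channels=16, n_classes=9):
    """Return (stage, shape) pairs for one forward pass (illustrative only)."""
    stages = [("input", (batch, in_channels, img_size, img_size))]
    if in_channels == 1:
        # Grayscale input is tiled along the channel axis, like x.repeat(1, 3, 1, 1).
        in_channels = 3
    stages.append(("after repeat", (batch, in_channels, img_size, img_size)))
    # The Transformer encoder emits one hidden vector per patch: (B, n_patch, hidden).
    stages.append(("transformer", (batch, patch_grid * patch_grid, hidden)))
    # Assumption: the decoder upsamples back to the input resolution.
    stages.append(("decoder", (batch, decoder_out_channels, img_size, img_size)))
    # The segmentation head maps decoder channels to per-class logits.
    stages.append(("segmentation_head", (batch, n_classes, img_size, img_size)))
    return stages


for stage, shape in trace_transunet_shapes(batch=2, in_channels=1, img_size=224):
    print(f"{stage:>18}: {shape}")
```

For a batch of two grayscale 224x224 images the last entry is `(2, 9, 224, 224)`, i.e. one logit map per class.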

As the code shows, the Transformer class plays the role of the encoder, so we look at it next.

Transformer class

    class Transformer(nn.Module):
        def __init__(self, config, img_size, vis):
            super(Transformer, self).__init__()
            self.embeddings = Embeddings(config, img_size=img_size)
            self.encoder = Encoder(config, vis)

        def forward(self, input_ids):
            embedding_output, features = self.embeddings(input_ids)
            encoded, attn_weights = self.encoder(embedding_output)  # (B, n_patch, hidden)
            return encoded, attn_weights, features  # features: the extracted CNN feature maps

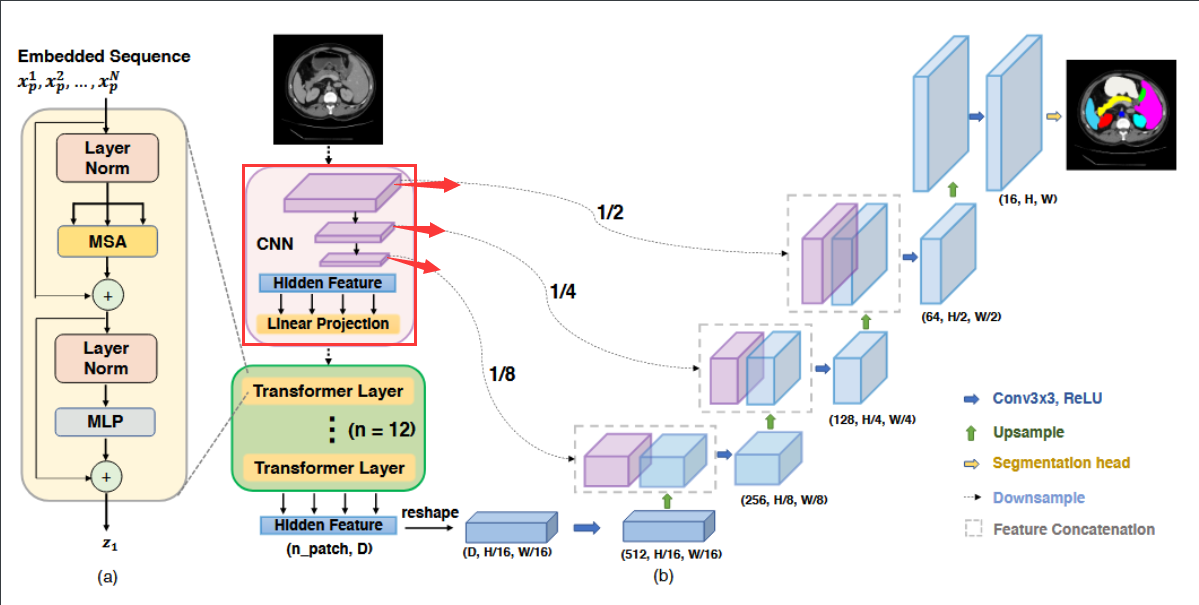

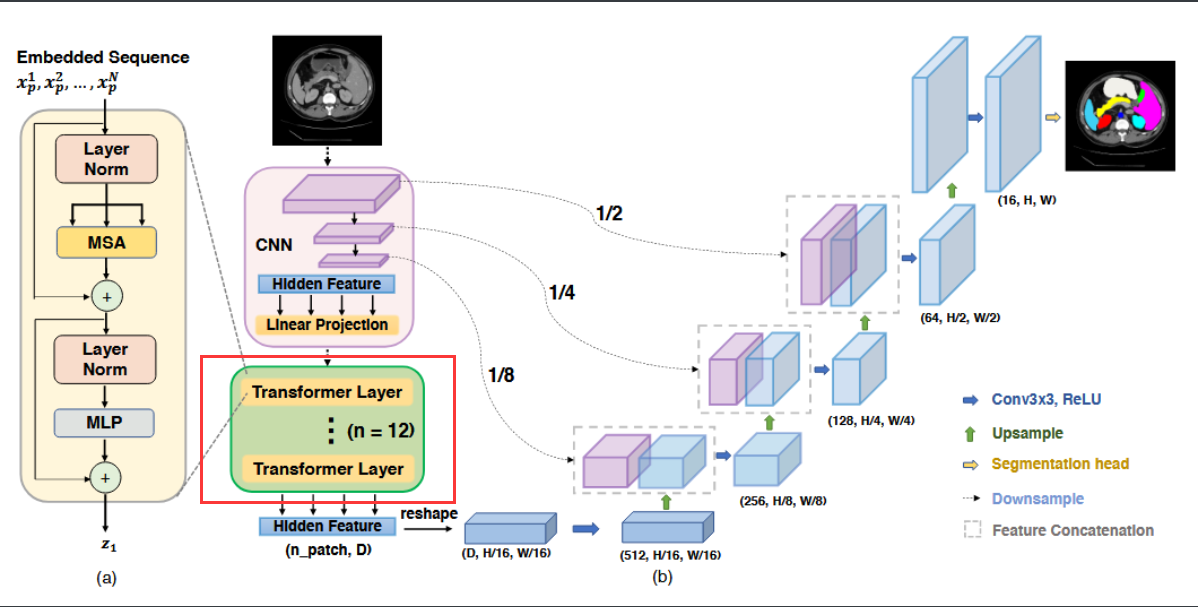

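The Transformer class contains the Embeddings and Encoder classes. Embeddings turns the image into a sequence of embedded tokens, and Encoder implements the MSA (Multi-head Self-Attention) pipeline, i.e. the part inside the green box in the figure.

Embeddings class

The three cut-out branches in the figure (the feature maps handed over to the decoder) are produced inside the Embeddings class.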

    class Embeddings(nn.Module):
        """Construct the embeddings from patch, position embeddings.

        Converts the input image into a sequence of embedding vectors.
        """
        def __init__(self, config, img_size, in_channels=3):
            super(Embeddings, self).__init__()
            self.hybrid = None
            self.config = config
            img_size = _pair(img_size)

            if config.patches.get("grid") is not None:  # hybrid model with a ResNet backbone
                grid_size = config.patches["grid"]  # e.g. (14, 14)
                patch_size = (img_size[0] // 16 // grid_size[0], img_size[1] // 16 // grid_size[1])
                patch_size_real = (patch_size[0] * 16, patch_size[1] * 16)
                n_patches = (img_size[0] // patch_size_real[0]) * (img_size[1] // patch_size_real[1])
                self.hybrid = True
            else:
                patch_size = _pair(config.patches["size"])
                n_patches = (img_size[0] // patch_size[0]) * (img_size[1] // patch_size[1])
                self.hybrid = False

            if self.hybrid:
                self.hybrid_model = ResNetV2(block_units=config.resnet.num_layers, width_factor=config.resnet.width_factor)  # ((3, 4, 9), 1)
                in_channels = self.hybrid_model.width * 16  # 64 * 16 = 1024
            self.patch_embeddings = Conv2d(in_channels=in_channels,
                                           out_channels=config.hidden_size,
                                           kernel_size=patch_size,
                                           stride=patch_size)  # (in=1024, out=768, kernel=1, stride=1)
            self.position_embeddings = nn.Parameter(torch.zeros(1, n_patches, config.hidden_size))  # (1, 196, 768)

            self.dropout = Dropout(config.transformer["dropout_rate"])

        def forward(self, x):
            if self.hybrid:
                x, features = self.hybrid_model(x)  # [1, 1024, 14, 14]
                # read on from here
            else:
                features = None
            x = self.patch_embeddings(x)  # (B, hidden, n_patches^(1/2), n_patches^(1/2)), e.g. [1, 768, 14, 14]
            x = x.flatten(2)  # flatten from dim 2 onward, i.e. merge H and W
            x = x.transpose(-1, -2)  # (B, n_patches, hidden)

            embeddings = x + self.position_embeddings  # add position information
            embeddings = self.dropout(embeddings)  # dropout against overfitting
            return embeddings, features  # features come from ResNetV2; this is the default and can be configured
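The patch arithmetic in `__init__` is easy to check by hand. The sketch below reproduces just that arithmetic in plain Python; the helper name `count_patches` is hypothetical, and the example values (a 224x224 image, a 14x14 grid, 16x16 patches) are the ones suggested by the comments in the code above.

```python
def count_patches(img_size, grid=None, patch=None):
    """Mirror the patch-size arithmetic of Embeddings.__init__.

    img_size, grid and patch are (height, width) tuples.
    Returns (patch_size, n_patches).
    """
    h, w = img_size
    if grid is not None:
        # Hybrid branch: the ResNet backbone already downsamples by 16,
        # so the patch size is measured on the downsampled feature map.
        patch_size = (h // 16 // grid[0], w // 16 // grid[1])
        patch_size_real = (patch_size[0] * 16, patch_size[1] * 16)
        n_patches = (h // patch_size_real[0]) * (w // patch_size_real[1])
    else:
        # Pure ViT branch: patches are cut directly from the image.
        patch_size = patch
        n_patches = (h // patch[0]) * (w // patch[1])
    return patch_size, n_patches


print(count_patches((224, 224), grid=(14, 14)))   # hybrid branch
print(count_patches((224, 224), patch=(16, 16)))  # pure ViT branch
```

Both calls give 196 patches. In the hybrid case the patch size on the feature map is (1, 1), which is why `patch_embeddings` ends up as a 1x1 convolution there.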

    CONFIGS = {
        'ViT-B_16': configs.get_b16_config(),
        'ViT-B_32': configs.get_b32_config(),
        'ViT-L_16': configs.get_l16_config(),
        'ViT-L_32': configs.get_l32_config(),
        'ViT-H_14': configs.get_h14_config(),
        'R50-ViT-B_16': configs.get_r50_b16_config(),
        'R50-ViT-L_16': configs.get_r50_l16_config(),
        'testing': configs.get_testing(),
    }
    # The authors provide 7 feature-extraction configurations (plus a testing one); the default is R50-ViT-B_16.
    # The features produced by the stem and the first two stages are appended to the features list.

For an explanation of the ViT variants, see: 【计算机视觉 | 目标检测】术语理解6:ViT 变种( ViT-H、ViT-L & ViT-B)、bbox(边界框)、边界框的绘制(含源代码)-CSDN博客

ResNetV2 class (the CNN part of the encoder)

    class ResNetV2(nn.Module):
        """Implementation of Pre-activation (v2) ResNet model."""

        def __init__(self, block_units, width_factor):
            super().__init__()
            width = int(64 * width_factor)
            self.width = width

            self.root = nn.Sequential(OrderedDict([
                ('conv', StdConv2d(3, width, kernel_size=7, stride=2, bias=False, padding=3)),
                ('gn', nn.GroupNorm(32, width, eps=1e-6)),
                ('relu', nn.ReLU(inplace=True)),
                # ('pool', nn.MaxPool2d(kernel_size=3, stride=2, padding=0))
            ]))

            self.body = nn.Sequential(OrderedDict([
                ('block1', nn.Sequential(OrderedDict(
                    [('unit1', PreActBottleneck(cin=width, cout=width*4, cmid=width))] +
                    [(f'unit{i:d}', PreActBottleneck(cin=width*4, cout=width*4, cmid=width)) for i in range(2, block_units[0] + 1)],
                ))),
                ('block2', nn.Sequential(OrderedDict(
                    [('unit1', PreActBottleneck(cin=width*4, cout=width*8, cmid=width*2, stride=2))] +
                    [(f'unit{i:d}', PreActBottleneck(cin=width*8, cout=width*8, cmid=width*2)) for i in range(2, block_units[1] + 1)],
                ))),
                ('block3', nn.Sequential(OrderedDict(
                    [('unit1', PreActBottleneck(cin=width*8, cout=width*16, cmid=width*4, stride=2))] +
                    [(f'unit{i:d}', PreActBottleneck(cin=width*16, cout=width*16, cmid=width*4)) for i in range(2, block_units[2] + 1)],
                ))),
            ]))

        def forward(self, x):
            features = []
            b, c, in_size, _ = x.size()
            x = self.root(x)
            features.append(x)
            # the output of the root module is the first entry of the features list
            x = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)(x)
            for i in range(len(self.body)-1):
                x = self.body[i](x)
                right_size = int(in_size / 4 / (i+1))
                if x.size()[2] != right_size:
                    # zero-pad the feature map up to the expected size
                    pad = right_size - x.size()[2]
                    assert pad < 3 and pad > 0, "x {} should {}".format(x.size(), right_size)
                    feat = torch.zeros((b, x.size()[1], right_size, right_size), device=x.device)
                    feat[:, :, 0:x.size()[2], 0:x.size()[3]] = x[:]
                else:
                    feat = x
                features.append(feat)
                # the outputs of the first two blocks are appended to the features list
            x = self.body[-1](x)
            return x, features[::-1]
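The spatial sizes in `forward`, including the reason for the zero-padding branch, can be worked out with the usual convolution output-size formula. The sketch below does this in plain Python. The helper names are hypothetical, and since `PreActBottleneck` is not shown here, the sketch assumes that its strided 3x3 convolution uses `padding=1`.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet_feature_sizes(in_size=224):
    """Return (final_size, skip_sizes) following the steps of ResNetV2.forward."""
    skip_sizes = []
    x = conv_out(in_size, kernel=7, stride=2, padding=3)  # root conv
    skip_sizes.append(x)
    x = conv_out(x, kernel=3, stride=2, padding=0)        # max pool without padding
    for i, stride in enumerate((1, 2)):                   # block1, block2
        # Assumption: the 3x3 conv that carries the stride uses padding=1.
        x = conv_out(x, kernel=3, stride=stride, padding=1)
        right_size = in_size // 4 // (i + 1)
        # If x is smaller than right_size, the original code zero-pads the
        # stored skip feature up to right_size (x itself is left unchanged).
        skip_sizes.append(right_size)
    x = conv_out(x, kernel=3, stride=2, padding=1)        # block3
    return x, skip_sizes[::-1]


print(resnet_feature_sizes(224))
```

For a 224x224 input the unpadded max pool yields 55 rather than 56, so the block1 output (55x55) is zero-padded to 56x56 before it is stored. The skip features end up at 28, 56 and 112 pixels, and the final feature map at 14.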

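Once feature extraction is done, the output has shape [1, 1024, 14, 14]. A convolution reduces the channel dimension (1024 to 768), position information is added, and the result is passed on to the MSA layers.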
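As a small illustration of the flatten, transpose and position-embedding steps, the sketch below performs them on nested Python lists instead of tensors. The function name `feature_map_to_tokens` is hypothetical, the batch dimension is dropped, and the toy sizes are chosen for readability.

```python
def feature_map_to_tokens(fmap, pos_emb):
    """Turn a [C][H][W] feature map into [H*W][C] tokens plus position embeddings."""
    channels = len(fmap)
    height, width = len(fmap[0]), len(fmap[0][0])
    # Equivalent of x.flatten(2): merge H and W, giving [C][H*W].
    flat = [[fmap[c][i][j] for i in range(height) for j in range(width)]
            for c in range(channels)]
    # Equivalent of x.transpose(-1, -2): one row per patch, giving [H*W][C].
    tokens = [[flat[c][n] for c in range(channels)]
              for n in range(height * width)]
    # Element-wise addition of the position embeddings.
    return [[t + p for t, p in zip(token, pos)]
            for token, pos in zip(tokens, pos_emb)]


# Toy example: 2 channels on a 2x2 grid, so 4 tokens of dimension 2.
fmap = [[[1, 2], [3, 4]],
        [[10, 20], [30, 40]]]
pos_emb = [[0, 0]] * 4
print(feature_map_to_tokens(fmap, pos_emb))
```

Each output row collects the values of all channels at one spatial location, which is what a "token" means in this setting.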

Encoder class

    class Encoder(nn.Module):
        def __init__(self, config, vis):
            super(Encoder, self).__init__()
            self.vis = vis
            self.layer = nn.ModuleList()
            self.encoder_norm = LayerNorm(config.hidden_size, eps=1e-6)
            for _ in range(config.transformer["num_layers"]):  # config.transformer["num_layers"] = 12
                layer = Block(config, vis)
                self.layer.append(copy.deepcopy(layer))

        def forward(self, hidden_states):
            attn_weights = []  # attention weights
            for layer_block in self.layer:
                hidden_states, weights = layer_block(hidden_states)
                if self.vis:  # vis stays False throughout
                    attn_weights.append(weights)
            encoded = self.encoder_norm(hidden_states)  # final normalization
            return encoded, attn_weights  # attn_weights is never used downstream
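The `Attention` class that produces these weights is not reproduced in this walkthrough. As a rough guide to what `weights` holds, here is a single-head scaled dot-product attention in plain Python. It is the standard textbook formulation, not the repository's code; the real model uses several heads and learned query, key and value projections.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q, k, v):
    """q, k, v: lists of token vectors. Returns (output, attention_weights)."""
    dim = len(q[0])
    # Similarity of every query with every key, scaled by sqrt(dim).
    scores = [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(dim) for kj in k]
              for qi in q]
    # Each row becomes a probability distribution over the tokens.
    weights = [softmax(row) for row in scores]
    # Output token = weighted average of the value vectors.
    out = [[sum(w * vj[c] for w, vj in zip(row, v)) for c in range(len(v[0]))]
           for row in weights]
    return out, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(tokens, tokens, tokens)
print(weights)
```

Every row of `weights` sums to 1. With `vis=True` the encoder would collect one such matrix per head and per layer.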

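Block class: the attention mechanism

Each Block is one Transformer layer: an MSA step followed by an MLP, each with its own LayerNorm and residual connection. MSA stands for Multi-head Self-Attention.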

    class Block(nn.Module):
        def __init__(self, config, vis):
            super(Block, self).__init__()
            self.hidden_size = config.hidden_size  # 768
            self.attention_norm = LayerNorm(config.hidden_size, eps=1e-6)
            self.ffn_norm = LayerNorm(config.hidden_size, eps=1e-6)
            self.ffn = Mlp(config)
            self.attn = Attention(config, vis)

        def forward(self, x):
            h = x
            x = self.attention_norm(x)
            x, weights = self.attn(x)  # returns the attention output and the attention weights
            x = x + h

            h = x
            x = self.ffn_norm(x)
            x = self.ffn(x)
            x = x + h
            return x, weights

        def load_from(self, weights, n_block):
            # copy the pretrained weights of encoder block n_block into this module
            ROOT = f"Transformer/encoderblock_{n_block}"
            with torch.no_grad():
                query_weight = np2th(weights[pjoin(ROOT, ATTENTION_Q, "kernel")]).view(self.hidden_size, self.hidden_size).t()
                key_weight = np2th(weights[pjoin(ROOT, ATTENTION_K, "kernel")]).view(self.hidden_size, self.hidden_size).t()
                value_weight = np2th(weights[pjoin(ROOT, ATTENTION_V, "kernel")]).view(self.hidden_size, self.hidden_size).t()
                out_weight = np2th(weights[pjoin(ROOT, ATTENTION_OUT, "kernel")]).view(self.hidden_size, self.hidden_size).t()

                query_bias = np2th(weights[pjoin(ROOT, ATTENTION_Q, "bias")]).view(-1)
                key_bias = np2th(weights[pjoin(ROOT, ATTENTION_K, "bias")]).view(-1)
                value_bias = np2th(weights[pjoin(ROOT, ATTENTION_V, "bias")]).view(-1)
                out_bias = np2th(weights[pjoin(ROOT, ATTENTION_OUT, "bias")]).view(-1)

                self.attn.query.weight.copy_(query_weight)
                self.attn.key.weight.copy_(key_weight)
                self.attn.value.weight.copy_(value_weight)
                self.attn.out.weight.copy_(out_weight)
                self.attn.query.bias.copy_(query_bias)
                self.attn.key.bias.copy_(key_bias)
                self.attn.value.bias.copy_(value_bias)
                self.attn.out.bias.copy_(out_bias)

                mlp_weight_0 = np2th(weights[pjoin(ROOT, FC_0, "kernel")]).t()
                mlp_weight_1 = np2th(weights[pjoin(ROOT, FC_1, "kernel")]).t()
                mlp_bias_0 = np2th(weights[pjoin(ROOT, FC_0, "bias")]).t()
                mlp_bias_1 = np2th(weights[pjoin(ROOT, FC_1, "bias")]).t()

                self.ffn.fc1.weight.copy_(mlp_weight_0)
                self.ffn.fc2.weight.copy_(mlp_weight_1)
                self.ffn.fc1.bias.copy_(mlp_bias_0)
                self.ffn.fc2.bias.copy_(mlp_bias_1)

                self.attention_norm.weight.copy_(np2th(weights[pjoin(ROOT, ATTENTION_NORM, "scale")]))
                self.attention_norm.bias.copy_(np2th(weights[pjoin(ROOT, ATTENTION_NORM, "bias")]))
                self.ffn_norm.weight.copy_(np2th(weights[pjoin(ROOT, MLP_NORM, "scale")]))
                self.ffn_norm.bias.copy_(np2th(weights[pjoin(ROOT, MLP_NORM, "bias")]))
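The `forward` method of `Block` follows the pre-norm residual pattern: normalize, apply the sub-layer, then add the untouched input back. The sketch below shows that pattern on a single token vector in plain Python. The names are hypothetical, the `layer_norm` here omits the learnable scale and bias that `nn.LayerNorm` has, and the two sub-layers are passed in as ordinary functions.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize one vector to zero mean and unit variance (no learnable parameters)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def pre_norm_block(x, attn, ffn):
    """x = x + attn(norm(x)), then x = x + ffn(norm(x))."""
    h = x
    x = attn(layer_norm(x))
    x = [a + b for a, b in zip(x, h)]  # first residual connection
    h = x
    x = ffn(layer_norm(x))
    return [a + b for a, b in zip(x, h)]  # second residual connection


# Stand-in sub-layers: with zero outputs the block reduces to the identity.
zero = lambda v: [0.0] * len(v)
print(pre_norm_block([1.0, 2.0, 3.0], attn=zero, ffn=zero))
```

Because the residual path bypasses both the normalization and the sub-layer, a block whose sub-layers output zeros passes its input through unchanged.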

DecoderBlock class

    class DecoderBlock(nn.Module):
        def __init__(
                self,
                in_channels,
                out_channels,
                skip_channels=0,
                use_batchnorm=True,
        ):
            super().__init__()
            self.conv1 = Conv2dReLU(
                in_channels + skip_channels,
                out_channels,
                kernel_size=3,
                padding=1,
                use_batchnorm=use_batchnorm,
            )
            self.conv2 = Conv2dReLU(
                out_channels,
                out_channels,
                kernel_size=3,
                padding=1,
                use_batchnorm=use_batchnorm,
            )
            self.up = nn.UpsamplingBilinear2d(scale_factor=2)

        def forward(self, x, skip=None):
            x = self.up(x)  # double the spatial resolution
            if skip is not None:
                x = torch.cat([x, skip], dim=1)  # concatenate the skip feature along the channel axis
            # in the last decoder step skip is None
            x = self.conv1(x)
            x = self.conv2(x)
            return x
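To see how a stack of such blocks brings the 14x14 encoder output back to 224x224, the sketch below traces sizes and channel counts in plain Python. The function name `trace_decoder` is hypothetical. The decoder channels (256, 128, 64, 16) come from the comment in `VisionTransformer`, and the skip channels (512, 256, 64) follow from the ResNetV2 widths shown earlier. The starting value of 512 input channels is an assumption, since `DecoderCup` is not reproduced in this walkthrough.

```python
def trace_decoder(size=14, in_channels=512,
                  decoder_channels=(256, 128, 64, 16),
                  skip_channels=(512, 256, 64, 0)):
    """Return one (spatial_size, conv1_in_channels, out_channels) tuple per block."""
    trace = []
    for out_ch, skip_ch in zip(decoder_channels, skip_channels):
        size *= 2                          # self.up doubles height and width
        conv1_in = in_channels + skip_ch   # torch.cat along the channel axis
        trace.append((size, conv1_in, out_ch))
        in_channels = out_ch               # the next block consumes this output
    return trace


for step in trace_decoder():
    print(step)
```

With these values the four blocks produce sizes 28, 56, 112 and 224. The last block has no skip connection (0 skip channels), matching the `skip is None` case in `forward`.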

SegmentationHead class

    class SegmentationHead(nn.Sequential):

        def __init__(self, in_channels, out_channels, kernel_size=3, upsampling=1):
            conv2d = nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size, padding=kernel_size // 2)
            upsampling = nn.UpsamplingBilinear2d(scale_factor=upsampling) if upsampling > 1 else nn.Identity()
            # What nn.Identity() does:
            # nn.Identity is an identity mapping. It passes its input straight through to the
            # output without changing anything, so it acts as a "no-op" (NOP) layer.
            super().__init__(conv2d, upsampling)

In practice upsampling=1 here, so the upsampling step does nothing; this corresponds to the last step in the figure.
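A quick way to convince yourself that the head changes only the channel count is to compute its output shape by hand. The sketch below does so in plain Python; the function name `head_output_shape` is hypothetical, and the example values (16 input channels, 9 classes, 224x224) are the ones used earlier in this walkthrough.

```python
def head_output_shape(shape, out_channels, kernel_size=3, upsampling=1):
    """Output shape (B, C, H, W) of a conv with padding=kernel_size // 2, then optional upsampling."""
    batch, _, height, width = shape
    padding = kernel_size // 2
    # Stride-1 convolution: for odd kernels this padding keeps H and W unchanged.
    height = height + 2 * padding - kernel_size + 1
    width = width + 2 * padding - kernel_size + 1
    # upsampling <= 1 corresponds to nn.Identity, i.e. no change.
    scale = upsampling if upsampling > 1 else 1
    return (batch, out_channels, height * scale, width * scale)


print(head_output_shape((1, 16, 224, 224), out_channels=9))
```

With the default `upsampling=1` the spatial size stays at 224x224 and only the channels change from 16 to 9.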

This completes the walkthrough of the model.
