Experiment 3

Assignment 1

(1) Experiment content

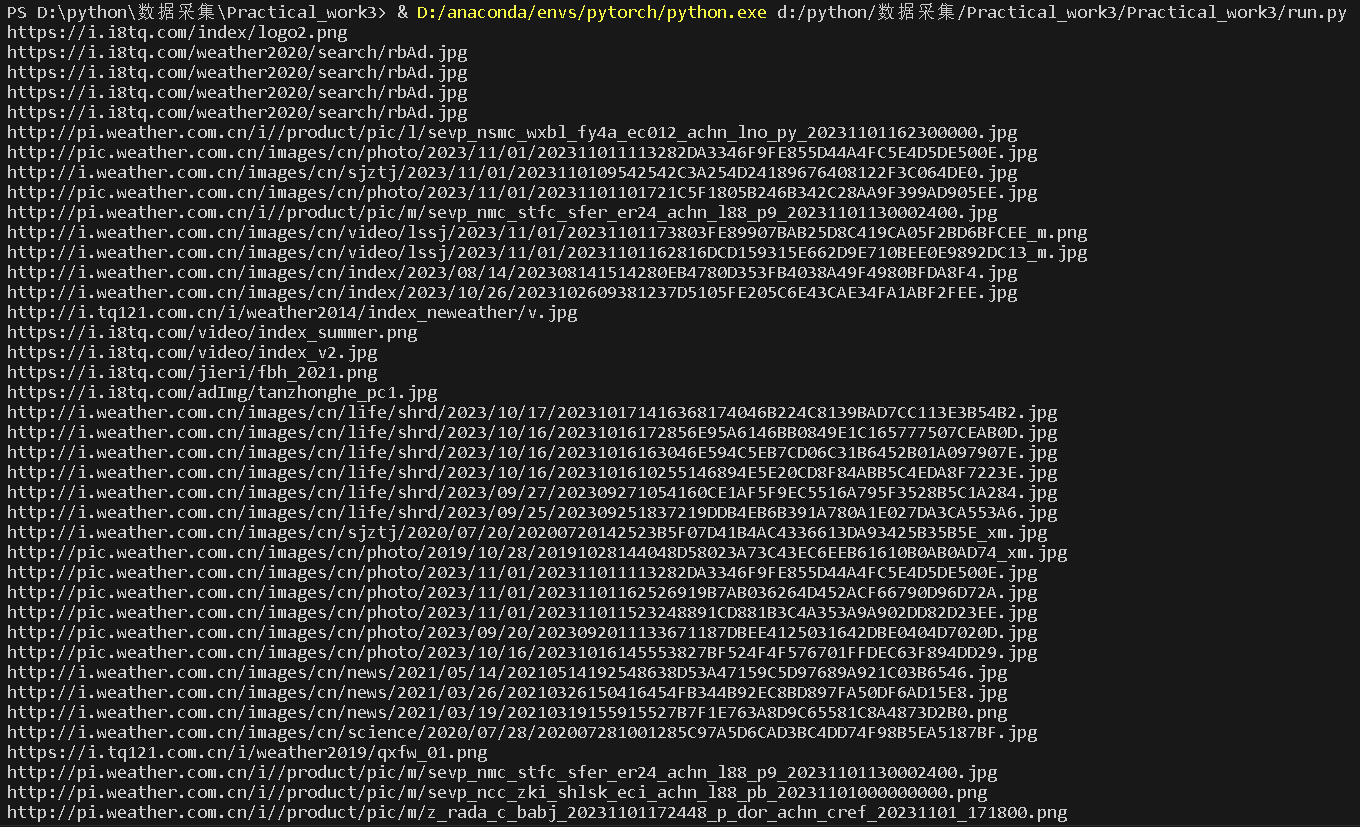

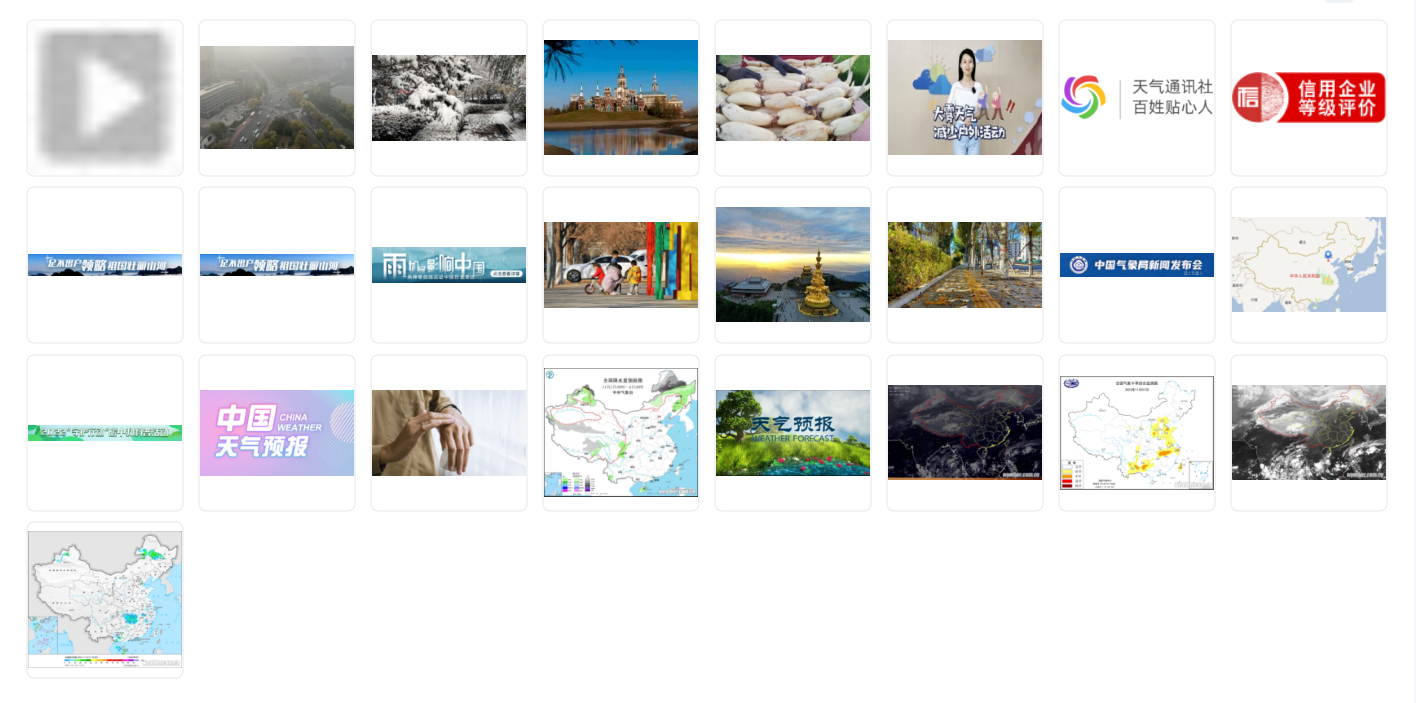

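- Requirement: pick a website (for example, the China Weather Network) and crawl all of its images, implementing both a single-threaded and a multi-threaded crawler with the Scrapy framework.

- Output: print the URL of every downloaded image to the console, save the images in an images subfolder, and provide screenshots.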

item.py

    import scrapy


    class work1_Item(scrapy.Item):
        img_url = scrapy.Field()  # absolute URL of one image found on the page

pipeline.py (single-threaded)

    import pathlib
    import shutil
    import urllib.request

    from Practical_work3.items import work1_Item


    class work1_Pipeline:
        count = 0
        # Images go to <home>/Desktop/images; pathlib builds the path portably
        picture_path = pathlib.Path.home() / 'Desktop' / 'images'

        def open_spider(self, spider):
            # Start every run with an empty images folder
            if self.picture_path.exists():
                shutil.rmtree(self.picture_path)
            self.picture_path.mkdir(parents=True)

        # Single-threaded: each image is downloaded inside process_item
        def process_item(self, item, spider):
            if isinstance(item, work1_Item):
                url = item['img_url']
                print(url)
                img_data = urllib.request.urlopen(url).read()
                img_path = self.picture_path / f'{self.count}.jpg'
                with open(img_path, 'wb') as fp:
                    fp.write(img_data)
                self.count += 1
            return item
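Both pipelines save every file as `.jpg`, so any PNG or GIF on the page ends up with a misleading extension. A minimal sketch that takes the extension from the URL path instead; `image_filename` and the accepted-extension set are hypothetical choices, and the `.jpg` fallback is kept for URLs without an extension.

```python
import os
from urllib.parse import urlparse

# Hypothetical whitelist of extensions treated as images
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".gif", ".webp", ".bmp"}


def image_filename(index: int, url: str, default_ext: str = ".jpg") -> str:
    """Build '<index><ext>' from the URL path (query string ignored).

    Falls back to default_ext when the path has no recognised image extension.
    """
    ext = os.path.splitext(urlparse(url).path)[1].lower()
    if ext not in IMAGE_EXTS:
        ext = default_ext
    return f"{index}{ext}"


print(image_filename(0, "http://example.com/img/logo.png?v=1"))  # 0.png
```

In a pipeline, `self.picture_path / image_filename(self.count, url)` would replace the hard-coded `f'{self.count}.jpg'`.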

Pipeline class (multi-threaded)

    import pathlib
    import shutil
    import threading
    import urllib.request

    from Practical_work3.items import work1_Item


    class work1_Pipeline:
        count = 0
        picture_path = pathlib.Path.home() / 'Desktop' / 'images'

        def open_spider(self, spider):
            self.threads = []  # download threads started by this pipeline
            # Start every run with an empty images folder
            if self.picture_path.exists():
                shutil.rmtree(self.picture_path)
            self.picture_path.mkdir(parents=True)

        # Multi-threaded: each image is downloaded in its own thread
        def process_item(self, item, spider):
            if isinstance(item, work1_Item):
                url = item['img_url']
                print(url)
                # Reserve the file index here, before the thread starts, so two
                # threads can never race on self.count or write the same file
                index = self.count
                self.count += 1
                # Threads are non-daemon by default, so setDaemon(False) is not needed
                t = threading.Thread(target=self.download_img, args=(url, index))
                t.start()
                self.threads.append(t)
            return item

        def download_img(self, url, index):
            img_data = urllib.request.urlopen(url).read()
            img_path = self.picture_path / f'{index}.jpg'
            with open(img_path, 'wb') as fp:
                fp.write(img_data)

        def close_spider(self, spider):
            # Wait for every download to finish before Scrapy shuts down
            for t in self.threads:
                t.join()
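When downloads run on several threads, each file index should be fixed before the thread starts rather than read from a shared counter inside the thread, and one thread per image can mean hundreds of threads on a large page. As an alternative (not the approach used in this report), the URLs could be collected first and handed to a bounded thread pool. A sketch, where `download_all` and its `fetch` parameter are hypothetical names:

```python
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Iterable, List


def download_all(urls: Iterable[str], out_dir: Path,
                 fetch: Callable[[str], bytes], max_workers: int = 8) -> List[Path]:
    """Fetch every URL on a thread pool and save it as <index>.jpg in out_dir."""
    out_dir.mkdir(parents=True, exist_ok=True)

    def save(index: int, url: str) -> Path:
        # The index is chosen before the task is submitted, so no two
        # threads ever share a counter or a file name
        path = out_dir / f"{index}.jpg"
        path.write_bytes(fetch(url))
        return path

    # At most max_workers downloads run at once; leaving the with-block
    # waits until every submitted task has finished
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(save, i, url) for i, url in enumerate(urls)]
    # result() returns each saved path, or re-raises that download's error
    return [future.result() for future in futures]
```

With real downloads, `fetch` could be `lambda u: urllib.request.urlopen(u).read()`; passing it in keeps the function testable without network access.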

Spider.py

    import scrapy

    from Practical_work3.items import work1_Item


    class Work1Spider(scrapy.Spider):
        name = 'work1'
        # allowed_domains = ['www.weather.com.cn']
        start_urls = ['http://www.weather.com.cn/']

        def parse(self, response):
            # src attributes of images nested inside links
            for src in response.xpath('//a/img/@src').getall():
                item = work1_Item()
                # Resolve relative and protocol-relative src values against the page URL
                item['img_url'] = response.urljoin(src)
                yield item
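Image `src` attributes are often relative (`/path/a.png`) or protocol-relative (`//host/a.png`), and `urllib.request.urlopen` cannot open such values (it raises `ValueError`). Scrapy's `response.urljoin(src)` resolves them against the page URL, much like `urllib.parse.urljoin(response.url, src)`. The sketch below uses the standard-library function with hypothetical `src` values to show the three cases:

```python
from urllib.parse import urljoin

BASE = "http://www.weather.com.cn/"

# Hypothetical src values, one of each common kind
samples = {
    "protocol-relative": "//img.example.com/logo.png",
    "root-relative": "/m2/i/banner.jpg",
    "document-relative": "images/a.gif",
}

absolute = {kind: urljoin(BASE, src) for kind, src in samples.items()}
for kind, url in absolute.items():
    print(f"{kind:18} {url}")
```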

run.py

    from scrapy import cmdline

    cmdline.execute("scrapy crawl work1".split())

Results:

(2) Reflections

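- The multi-threaded version was noticeably faster than the single-threaded one. I first implemented multithreading directly in the pipeline class, and later found that concurrency can also be tuned in settings.py by changing CONCURRENT_REQUESTS. Strictly speaking, that setting does not add threads: Scrapy keeps several requests in flight at once on a single thread (the Twisted event loop), and the setting only affects requests sent through Scrapy itself, not the urllib downloads made inside the pipeline.

- This was my first hands-on experience with the Scrapy framework, and I learned its basic usage.

- The auto-generated allowed_domains list names the domains the spider may crawl; requests to other domains are filtered out. It is not needed here, so it is left commented out in each spider.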
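For requests that do go through Scrapy (start URLs, yielded `Request`s, or the built-in `ImagesPipeline`), concurrency is controlled by two settings. A `settings.py` excerpt; the values 32 and 16 are illustrative, not tuned for this site:

```python
# settings.py (excerpt)
# Upper bound on requests Scrapy keeps in flight at once (default: 16)
CONCURRENT_REQUESTS = 32
# Per-domain cap (default: 8); a single-site crawl is limited by this one as well
CONCURRENT_REQUESTS_PER_DOMAIN = 16
```

Raising only `CONCURRENT_REQUESTS` has little effect on a crawl of one site, because the per-domain cap is reached first.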

Assignment 2

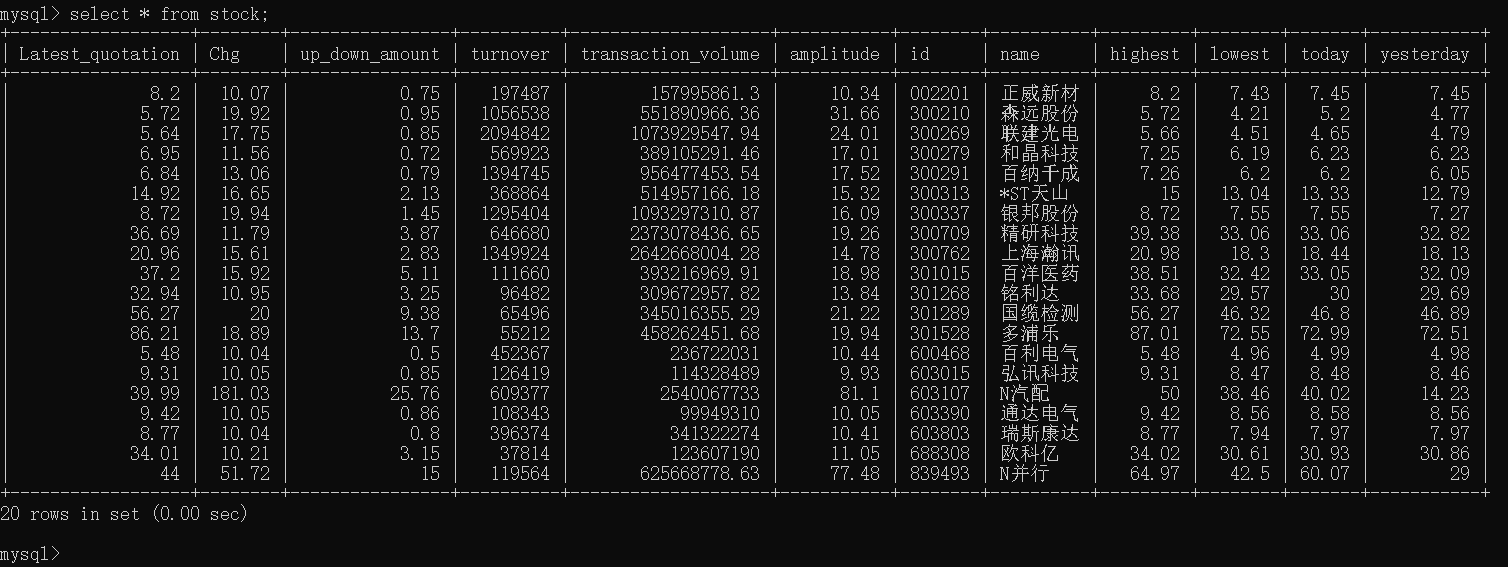

(1) Experiment content

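- Requirement: become proficient with serializing and outputting data through Scrapy Items and Pipelines; use the Scrapy + XPath + MySQL storage approach to crawl stock information (Eastmoney: https://www.eastmoney.com/).

- Output: store the data in MySQL and output it in the format below; the column headers should be English names of your own design.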

item.py

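    import scrapy


    class work2_Item(scrapy.Item):
        # Field names are the Eastmoney API field codes
        f2 = scrapy.Field()   # latest price
        f3 = scrapy.Field()   # change (%)
        f4 = scrapy.Field()   # change amount
        f5 = scrapy.Field()   # trading volume
        f6 = scrapy.Field()   # trading value
        f7 = scrapy.Field()   # amplitude (%)
        f12 = scrapy.Field()  # stock code
        f14 = scrapy.Field()  # stock name
        f15 = scrapy.Field()  # high
        f16 = scrapy.Field()  # low
        f17 = scrapy.Field()  # open
        f18 = scrapy.Field()  # previous close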

pipeline.py

    import pymysql

    from Practical_work3.items import work2_Item


    class work2_Pipeline:
        def open_spider(self, spider):
            # Replace the credentials with your own MySQL account
            self.db = pymysql.connect(host='127.0.0.1', user='root', password='your_password',
                                      port=3306, charset='utf8', database='scrapy')
            self.cursor = self.db.cursor()
            self.cursor.execute('DROP TABLE IF EXISTS stock')
            self.cursor.execute(
                """CREATE TABLE stock(Latest_quotation Double, Chg Double, up_down_amount Double,
                   turnover Double, transaction_volume Double, amplitude Double,
                   id varchar(12) PRIMARY KEY, name varchar(32), highest Double,
                   lowest Double, today Double, yesterday Double)""")

        def process_item(self, item, spider):
            if isinstance(item, work2_Item):
                # %s placeholders: pymysql quotes each value itself instead of
                # the values being formatted into the SQL string by hand
                sql = 'INSERT INTO stock VALUES (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)'
                fields = ['f2', 'f3', 'f4', 'f5', 'f6', 'f7', 'f12', 'f14', 'f15', 'f16', 'f17', 'f18']
                self.cursor.execute(sql, [item[f] for f in fields])
                self.db.commit()
            return item

        def close_spider(self, spider):
            self.cursor.close()
            self.db.close()
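Building an INSERT statement with `%` string formatting breaks as soon as a value contains a quote, and it is open to SQL injection. DB-API drivers accept the values separately: pymysql uses `%s` placeholders in `cursor.execute(sql, args)`, while sqlite3 uses `?`. The sketch below uses sqlite3 only so that it runs without a MySQL server; the table and row are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE stock(id TEXT PRIMARY KEY, name TEXT, price REAL)")

# Hypothetical row whose name contains an apostrophe
row = ("000001", "Xi'an Tech", 10.5)

# String formatting: the apostrophe ends the SQL string literal early
bad_sql = "INSERT INTO stock VALUES ('%s','%s',%f)" % row
try:
    cur.execute(bad_sql)
    bad_ok = True
except sqlite3.Error as exc:
    bad_ok = False
    print("formatted SQL failed:", exc)

# Placeholders: the driver passes the values separately, so quotes are harmless
cur.execute("INSERT INTO stock VALUES (?, ?, ?)", row)
conn.commit()
print(cur.execute("SELECT * FROM stock").fetchall())
```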

spider.py

    import json
    import re

    import scrapy

    from Practical_work3.items import work2_Item


    class Work2Spider(scrapy.Spider):
        name = 'work2'
        # allowed_domains = ['25.push2.eastmoney.com']
        start_urls = ['http://25.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124021313927342030325_1696658971596&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f2,f3,f4,f5,f6,f7,f12,f14,f15,f16,f17,f18&_=1696658971636']

        # API field codes and the column each one holds (for reference)
        columns = {'f2': 'latest price', 'f3': 'change (%)', 'f4': 'change amount',
                   'f5': 'trading volume', 'f6': 'trading value', 'f7': 'amplitude (%)',
                   'f12': 'code', 'f14': 'name', 'f15': 'high', 'f16': 'low',
                   'f17': 'open', 'f18': 'previous close'}

        def parse(self, response):
            data = response.text
            # The response is JSONP; grab the contents of the "diff" array
            diff = re.findall(r'"diff":\[(.*?)\]', data, re.S)
            # Each {...} inside it is one stock record
            for one_data in re.findall(r'\{(.*?)\}', diff[0], re.S):
                data_dic = json.loads('{' + one_data + '}')
                item = work2_Item()  # a new item for every stock, not one shared item
                for k, v in data_dic.items():
                    item[k] = v
                yield item
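The spider extracts records with two regular expressions, and `\{(.*?)\}` would cut a record short if it ever contained a nested object. A sketch of an alternative: strip the JSONP callback wrapper (the `cb=jQuery...` parameter in the URL) and parse the whole body with `json.loads`. The helper names `parse_jsonp` and `rows_from_response`, and the assumption that the records sit at `data.diff`, are illustrative; adjust the path if the response is shaped differently.

```python
import json
import re

# Field order matches the columns of the stock table
FIELDS = ["f2", "f3", "f4", "f5", "f6", "f7", "f12", "f14", "f15", "f16", "f17", "f18"]


def parse_jsonp(text: str) -> dict:
    """Strip a 'callback(...)' or 'callback(...);' wrapper and parse the JSON inside."""
    match = re.match(r"^\s*[\w$.]+\((.*)\)\s*;?\s*$", text, re.S)
    if match is None:
        raise ValueError("not a JSONP response")
    return json.loads(match.group(1))


def rows_from_response(text: str) -> list:
    """Return one tuple per stock, ordered like the stock table's columns."""
    payload = parse_jsonp(text)
    return [tuple(record.get(f) for f in FIELDS) for record in payload["data"]["diff"]]
```

Inside `parse`, `rows_from_response(response.text)` would yield rows ready for a parameterized INSERT.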

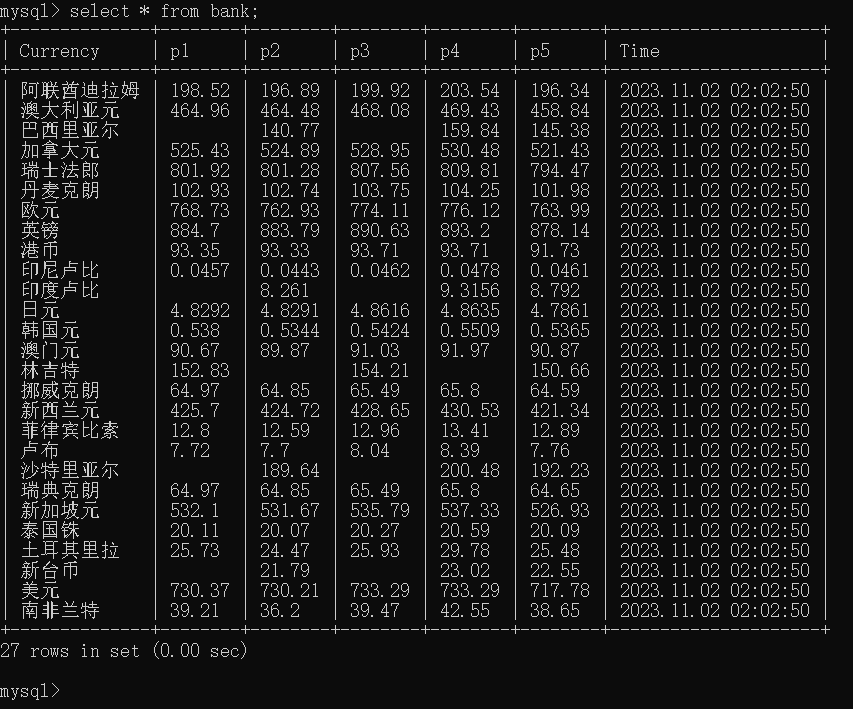

Results:

(2) Reflections

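(1) This was my first hands-on experience with MySQL, and I learned how to connect to a MySQL database from Python.

(2) The page loads its data dynamically with JavaScript, and Scrapy only downloads the raw HTML without running scripts. In the end I captured the underlying API request with the browser's developer tools and requested the data from it directly.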

Assignment 3

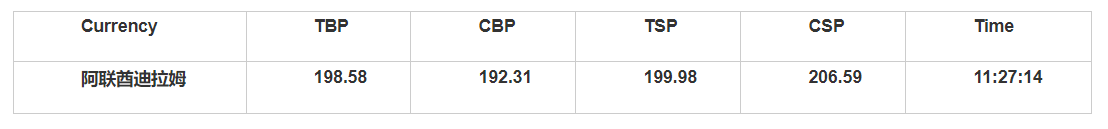

(1) Experiment content

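- Requirement: become proficient with serializing and outputting data through Scrapy Items and Pipelines; use the Scrapy + XPath + MySQL storage approach to crawl data from a foreign exchange website.

- Output: as follows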

item.py

    import scrapy


    class work3_Item(scrapy.Item):
        name = scrapy.Field()    # currency name (1st column)
        price1 = scrapy.Field()  # 2nd to 6th columns: the published rates
        price2 = scrapy.Field()
        price3 = scrapy.Field()
        price4 = scrapy.Field()
        price5 = scrapy.Field()
        date = scrapy.Field()    # publication date (7th column)

pipelines.py

    import pymysql

    from Practical_work3.items import work3_Item


    class work3_Pipeline:
        def open_spider(self, spider):
            # Replace the credentials with your own MySQL account
            self.db = pymysql.connect(host='127.0.0.1', user='root', password='your_password',
                                      port=3306, charset='utf8', database='scrapy')
            self.cursor = self.db.cursor()
            self.cursor.execute('DROP TABLE IF EXISTS bank')
            self.cursor.execute(
                """CREATE TABLE bank(Currency varchar(32), p1 varchar(17), p2 varchar(17),
                   p3 varchar(17), p4 varchar(17), p5 varchar(17), Time varchar(32))""")

        def process_item(self, item, spider):
            if isinstance(item, work3_Item):
                # %s placeholders: pymysql quotes each value itself
                sql = 'INSERT INTO bank VALUES (%s,%s,%s,%s,%s,%s,%s)'
                keys = ['name', 'price1', 'price2', 'price3', 'price4', 'price5', 'date']
                self.cursor.execute(sql, [item[k] for k in keys])
                self.db.commit()
            return item

        def close_spider(self, spider):
            self.cursor.close()
            self.db.close()

spider.py

    import scrapy

    from Practical_work3.items import work3_Item


    class Work3Spider(scrapy.Spider):
        name = 'work3'
        # allowed_domains = ['www.boc.cn']
        start_urls = ['https://www.boc.cn/sourcedb/whpj/']

        def parse(self, response):
            keys = ['name', 'price1', 'price2', 'price3', 'price4', 'price5', 'date']
            # No tbody in the path: the page source does not contain it
            for row in response.xpath('//table[@align="left"]/tr'):
                cells = row.xpath('./td')
                if not cells:  # skip rows without <td> cells, such as the header row
                    continue
                item = work3_Item()
                # string(.) gives the cell's text without its tags; zip pairs the
                # first seven cells with the seven keys and ignores the last column
                for key, cell in zip(keys, cells):
                    item[key] = cell.xpath('string(.)').get().strip()
                yield item
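`str.strip(chars)` treats its argument as a set of characters to trim, not as a substring, so `.strip('<td class="pjrq"></td>')` only works on this page because the cell contents (Chinese names, digits, dots, colons) happen not to contain any of those characters. A small standard-library sketch that shows the pitfall and a tag-removing alternative; `cell_text` is a hypothetical helper for simple single cells, not a general HTML parser (in Scrapy, `cell.xpath('string(.)').get()` does the job).

```python
import html
import re


def cell_text(cell_html: str) -> str:
    """Visible text of one table cell: drop tags, decode entities, trim whitespace."""
    return html.unescape(re.sub(r"<[^>]+>", "", cell_html)).strip()


# Every leading/trailing character found in the argument is removed, letter by letter
pitfall = "<td>dollars</td>".strip('<td class="pjrq"></td>')
print(pitfall)                                         # o
print(cell_text('<td class="pjrq">2023.10.20</td>'))  # 2023.10.20
```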

Results:

(2) Reflections

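(1) At first I copied the XPath straight from the browser, but it returned no data. I assumed this page also loaded its data dynamically, which turned out not to be the case. After a lot of trial and error I printed the page source and searched it, and found that the source contained no tbody tag. Once I removed that step from the XPath, the data was scraped successfully. I later learned that the tbody tag shown in the browser is inserted by the browser itself, so it is best to leave tbody out of XPath expressions.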

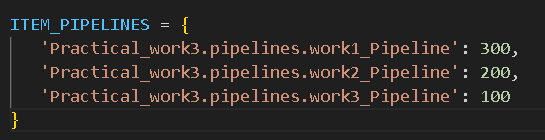
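The effect can be reproduced without a browser. This sketch uses the limited XPath support in the standard library's `ElementTree` as a stand-in for Scrapy selectors, on a hypothetical, simplified page source that, like the HTML the server sends, has no `<tbody>`:

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified page source; like the server's HTML, it has no <tbody>
SOURCE = """
<html><body>
  <table align='left'>
    <tr><th>Currency</th><th>Rate</th></tr>
    <tr><td>AAA</td><td>1.00</td></tr>
    <tr><td>BBB</td><td>2.00</td></tr>
  </table>
</body></html>
""".strip()

root = ET.fromstring(SOURCE)

# Path copied from the browser's DOM, where <tbody> was inserted automatically
with_tbody = root.findall(".//table[@align='left']/tbody/tr")
# Same path written against the source the server actually sends
without_tbody = root.findall(".//table[@align='left']/tr")

print(len(with_tbody), len(without_tbody))  # 0 3
```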

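(2) Because all three spiders live in the same project, I initially enabled every pipeline class in settings.py, which caused errors. In the computer lab I worked around this by commenting out the other two pipelines each time I ran a spider. I later learned that it is enough to check the item's type in each pipeline's process_item method; then all pipelines can be enabled at the same time. I also learned how Scrapy passes items along: each item first goes to the pipeline with the highest priority (the lowest number in ITEM_PIPELINES), and the other pipelines do not receive it yet. When that pipeline finishes, return item hands the item to the pipeline with the next priority, and so on. That is why the pipeline classes Scrapy generates end with return item even when there is no next pipeline: if the project is later extended with new pipelines and a return item is missing, those pipelines never receive the item. It is therefore best to return item from every pipeline.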

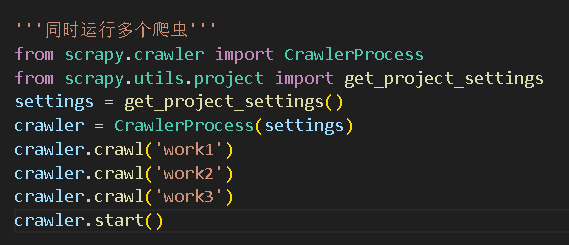
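The ordering and hand-off described above can be simulated in plain Python. In this sketch, `Work1Item`, `Work3Item`, the two pipeline classes, and `run_pipelines` are simplified stand-ins for illustration, not Scrapy's own classes or engine; each pipeline handles only its own item type and returns every item so the next pipeline receives it.

```python
# In settings.py the real equivalent would look like (module path assumed):
# ITEM_PIPELINES = {
#     'Practical_work3.pipelines.work1_Pipeline': 300,
#     'Practical_work3.pipelines.work3_Pipeline': 302,
# }


class Work1Item(dict):
    """Stand-in for work1_Item (a plain dict subclass, no Scrapy needed)."""


class Work3Item(dict):
    """Stand-in for work3_Item."""


class Work1Pipeline:
    def __init__(self, log):
        self.log = log

    def process_item(self, item, spider):
        if isinstance(item, Work1Item):  # only handle our own item type
            self.log.append(("work1", item["img_url"]))
        return item                      # hand the item on to the next pipeline


class Work3Pipeline:
    def __init__(self, log):
        self.log = log

    def process_item(self, item, spider):
        if isinstance(item, Work3Item):
            self.log.append(("work3", item["name"]))
        return item


def run_pipelines(items, pipeline_priorities):
    """Pass each item through the pipelines, lowest priority number first."""
    ordered = [p for p, _ in sorted(pipeline_priorities, key=lambda pair: pair[1])]
    for item in items:
        for pipeline in ordered:
            item = pipeline.process_item(item, spider=None)


log = []
run_pipelines(
    [Work1Item(img_url="http://example.com/a.png"), Work3Item(name="USD")],
    [(Work3Pipeline(log), 302), (Work1Pipeline(log), 300)],
)
print(log)
```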

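(3) Enabling several pipelines at once raised a follow-up question: can several spiders be started at once? It turns out they can. The modified run.py is shown below: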

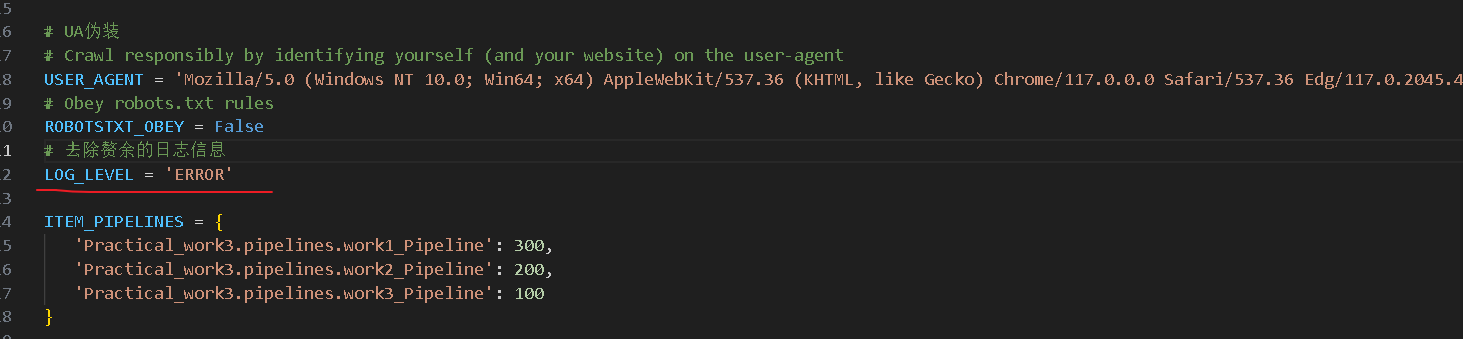

(4) Scrapy's verbose log output can be suppressed not only from the command line but also by adding a single line to settings.py.
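On the command line, `scrapy crawl work1 --nolog` disables logging for one run. The settings.py counterpart is a one-line excerpt; `ERROR` is one reasonable choice, and `LOG_ENABLED = False` would switch logging off entirely:

```python
# settings.py (excerpt)
# Show only ERROR and CRITICAL messages; Scrapy's default level is DEBUG
LOG_LEVEL = "ERROR"
```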

Zhejiang Public Security Network Registration No. 33010602011771
