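There is already plenty of material about Redis online, but I decided to work through it step by step myself and get a real feel for this remarkable open-source project. Anything this popular must have something special going for it. For a KV store, the first concerns are naturally Replication, Performance, Persistence and Single Point of Failure, so before installing anything I read the official site carefully to understand these characteristics.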

Replication

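On replication, the official site makes the following points. First, Redis uses a master-slave architecture (does that mean users have to solve the single-point-of-failure problem themselves?). Second, Redis can be treated as a read/write-split architecture: the master handles writes and the slaves handle reads. Third, replication is non-blocking on the master side, so the master keeps serving reads and writes while slaves synchronize; on the slave side it is blocking, and a slave cannot serve read requests until its first synchronization has completed.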
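The read/write split described above can be pictured as a small client-side router that sends every write to the master and spreads reads over the slaves. This is a hypothetical sketch, not part of Redis or of any real client: `ReadWriteRouter` is an invented name, plain dicts stand in for server connections so it runs without a Redis server, and `replicate()` is a crude stand-in for real replication.

```python
import itertools
from typing import Dict, List, Optional


class ReadWriteRouter:
    """Hypothetical router: writes go to the master, reads rotate over slaves.

    Plain dicts stand in for Redis connections (an assumption for the sketch).
    """

    def __init__(self, master: Dict[str, str], slaves: List[Dict[str, str]]):
        self.master = master
        self.slaves = slaves
        self._next_slave = itertools.cycle(range(len(slaves)))

    def set(self, key: str, value: str) -> None:
        # All writes are sent to the master only.
        self.master[key] = value

    def get(self, key: str) -> Optional[str]:
        # Reads go to the next slave in round-robin order.
        return self.slaves[next(self._next_slave)].get(key)

    def replicate(self) -> None:
        # Crude stand-in for replication: copy the master's dataset
        # to every slave. Real Redis ships writes asynchronously.
        for slave in self.slaves:
            slave.clear()
            slave.update(self.master)


master: Dict[str, str] = {}
slaves: List[Dict[str, str]] = [{}, {}]
router = ReadWriteRouter(master, slaves)
router.set("foo", "bar")
print(router.get("foo"))  # None: the slave has not caught up yet
router.replicate()
print(router.get("foo"))  # bar
```

The first read shows the catch of this design: until replication delivers a write, a read routed to a slave can miss it.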

Performance

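Performance cannot be settled by reading about concepts. Redis has a large and rich set of clients; I will run some tests once the environment is installed.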

Persistence

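Persistence raises a great many questions. Newer versions of Redis support swapping pages out to disk (virtual memory), which in short lets Redis hold a dataset larger than system memory; this is an essential difference from memcached, but how well it performs is something only measurements can tell. In addition, to keep persistence from hurting performance too much, most KV stores use delayed commits, which means in-memory data can be lost if the machine crashes. The usual remedy is to add a log, but its impact on performance cannot be ignored either.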

Single Point of Failure

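In my view, a master-slave system inevitably has a single point of failure, and that is exactly what vendors offering continuously available services care most about. Yet a quick search turned up very little discussion of Redis's single point of failure. I hope to find an answer as I get to know Redis better.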

In short, going into the research with questions in mind keeps it focused. After a first look at Redis's features, I listed the following questions:

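1. Redis's commands, and how to call them through the Java client and its interfaces

2. Installing Redis and the details of its config file

3. In a master-slave setup, if scalability comes only from adding slaves, will the master server eventually become the bottleneck?

4. Does Redis performance grow in proportion to the number of slaves? If so, why?

5. How does Redis persistence work? If the master has to hold a full copy of the data, is there a scalability problem?

6. How to deploy a Redis cluster suitable for enterprise products (that is, how to solve the single point of failure)

7. A comprehensive performance evaluation of Redis, including paging (virtual memory) and the log (append-only) mode, plus a side-by-side comparison with traditional databases and memcached

8. Based on the performance tests, how to optimize Redis through configuration and deployment

9. Finally, if circumstances allow, study the source code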

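Innovation is taking a bedsheet you have slept on for two months, giving it a shake, flipping it over and spreading it back on the bed.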

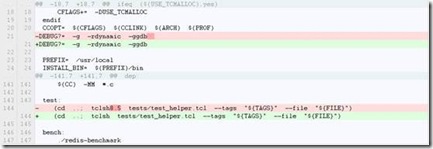

Install & Configuration

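Not much to say here: the usual three steps of download, unzip and make, and Redis is sitting quietly on the disk, ready to use. One note: make reported an error, which is fixed by opening src/makefile and making the following change.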

Next, read through Redis's conf file. Several settings in it are particularly important.

```
# Accept connections on the specified port, default is 6379.
port 6379
```

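Which network interface to listen on. By default Redis accepts connections on all interfaces; uncommenting bind restricts it to a single address.

```
# If you want you can bind a single interface, if the bind option is not
# specified all the interfaces will listen for incoming connections.
# bind 127.0.0.1
```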

Client idle timeout, 300 seconds by default:

```
# Close the connection after a client is idle for N seconds (0 to disable)
timeout 300
```

Log level, verbose by default:

```
# Set server verbosity to 'debug'
# it can be one of:
# debug (a lot of information, useful for development/testing)
# verbose (many rarely useful info, but not a mess like the debug level)
# notice (moderately verbose, what you want in production probably)
# warning (only very important / critical messages are logged)
loglevel verbose
```
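The four levels form an ordered scale, and a message is logged only when its level is at least the configured one. A minimal sketch of that filtering rule, assuming the ordering debug < verbose < notice < warning given in the comments above; `should_log` is a hypothetical helper, not a Redis function.

```python
# Hypothetical model of loglevel filtering; not Redis source code.
LEVELS = ["debug", "verbose", "notice", "warning"]  # least to most severe


def should_log(message_level: str, configured_level: str = "verbose") -> bool:
    """Return True if a message at message_level passes the configured loglevel."""
    return LEVELS.index(message_level) >= LEVELS.index(configured_level)


# With 'loglevel verbose', debug messages are dropped but notices are kept.
print(should_log("debug"))   # False
print(should_log("notice"))  # True
```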

Name of the log file:

```
# Specify the log file name. Also 'stdout' can be used to force
# Redis to log on the standard output. Note that if you use standard
# output for logging but daemonize, logs will be sent to /dev/null
logfile stdout
```

Number of logical databases. Every connection starts on DB 0 and can switch to another one with SELECT <dbid>, where dbid ranges from 0 to databases-1.

```
# Set the number of databases. The default database is DB 0, you can select
# a different one on a per-connection basis using SELECT <dbid> where
# dbid is a number between 0 and 'databases'-1
databases 16
```
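The per-connection SELECT behaviour can be pictured as a list of independent keyspaces plus a current index that each connection carries. This is a toy model under that assumption: `Connection` and its methods are illustrative names, and the real server keeps far more state per client.

```python
from typing import Dict, List, Optional

DATABASES = 16  # mirrors 'databases 16' above
# One independent keyspace per logical database (toy model, not Redis internals).
keyspaces: List[Dict[str, str]] = [{} for _ in range(DATABASES)]


class Connection:
    """Hypothetical client connection: each one starts on DB 0."""

    def __init__(self) -> None:
        self.db = 0

    def select(self, dbid: int) -> None:
        # Valid ids run from 0 to DATABASES - 1, as the config comment says.
        if not 0 <= dbid < DATABASES:
            raise ValueError("invalid DB index")
        self.db = dbid

    def set(self, key: str, value: str) -> None:
        keyspaces[self.db][key] = value

    def get(self, key: str) -> Optional[str]:
        return keyspaces[self.db].get(key)


a, b = Connection(), Connection()
a.set("foo", "bar")   # written to DB 0
b.select(1)
print(b.get("foo"))   # None: DB 1 is a separate keyspace
```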

Snapshot policy. Snapshotting is one of Redis's persistence mechanisms: Redis writes a snapshot of memory to disk based on how many changes have been made and how much time has passed. save 900 1 means that once at least 900 seconds have passed since the last save and at least one key has changed, a snapshot is written to disk. Both thresholds in a single save line must be met, while several save lines are combined with OR, so whichever line is satisfied first triggers the save. The official site describes it like this:

By default Redis saves snapshots of the dataset on disk, in a binary file called dump.rdb. You can configure Redis to have it save the dataset every N seconds if there are at least M changes in the dataset, or you can manually call the SAVE or BGSAVE commands.

For example, this configuration will make Redis automatically dump the dataset to disk every 60 seconds if at least 1000 keys changed: `save 60 1000`

The corresponding section of the conf file:

```
################################ SNAPSHOTTING #################################
#
# Save the DB on disk:
#
# save <seconds> <changes>
#
# Will save the DB if both the given number of seconds and the given
# number of write operations against the DB occurred.
#
# In the example below the behaviour will be to save:
# after 900 sec (15 min) if at least 1 key changed
# after 300 sec (5 min) if at least 10 keys changed
# after 60 sec if at least 10000 keys changed
#
# Note: you can disable saving at all commenting all the "save" lines.
save 900 1
save 300 10
save 60 10000
```
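The combination rule can be written down directly: a single save line fires only when both its time and its change thresholds are met, and any firing line triggers a snapshot. A minimal sketch of that rule, assuming the three default save lines above; `should_snapshot` is a hypothetical helper, not Redis's source code.

```python
from typing import List, Tuple

# (seconds, changes) pairs mirroring the three save lines above.
SAVE_RULES: List[Tuple[int, int]] = [(900, 1), (300, 10), (60, 10000)]


def should_snapshot(seconds_since_last_save: int, dirty_keys: int,
                    rules: List[Tuple[int, int]] = SAVE_RULES) -> bool:
    """Hypothetical model of the save rules.

    Within one rule both thresholds must hold (AND);
    across rules any single match is enough (OR).
    """
    return any(seconds_since_last_save >= secs and dirty_keys >= changes
               for secs, changes in rules)


print(should_snapshot(900, 1))  # True: first rule satisfied
print(should_snapshot(899, 5))  # False: no rule has both thresholds met
```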

Whether to compress dump.rdb:

```
# Compress string objects using LZF when dump .rdb databases?
# For default that's set to 'yes' as it's almost always a win.
# If you want to save some CPU in the saving child set it to 'no' but
# the dataset will likely be bigger if you have compressible values or keys.
rdbcompression yes
```

Name of the DB file:

```
# The filename where to dump the DB
dbfilename dump.rdb
```

Directory of the DB file:

```
# The working directory.
#
# The DB will be written inside this directory, with the filename specified
# above using the 'dbfilename' configuration directive.
#
# Also the Append Only File will be created inside this directory.
#
# Note that you must specify a directory here, not a file name.
dir ./
```

Replication is one of Redis's most important features, and this section must be configured if the server is meant to be a slave. A slave generally serves two purposes: redundant backup and load balancing. But, as the official site says, a slave does not serve requests until its first synchronization finishes, and every write the master accepts leaves the slave briefly inconsistent with the master. If the slave serves reads during such a window, how is the validity of the data guaranteed?

To make this server a slave, fill in the master's IP and port:

```
################################# REPLICATION #################################

# Master-Slave replication. Use slaveof to make a Redis instance a copy of
# another Redis server. Note that the configuration is local to the slave
# so for example it is possible to configure the slave to save the DB with a
# different interval, or to listen to another port, and so on.
#
# slaveof <masterip> <masterport>
```
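The inconsistency window raised above can be reproduced with a toy model in which the master applies a write at once and the slave applies it only when queued writes are delivered. Everything here (`Master`, `Slave`, `deliver`) is hypothetical and in-memory; it models asynchronous replication in general, not Redis's actual protocol.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class Slave:
    """Hypothetical slave holding its own copy of the data."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self.data.get(key)


class Master:
    """Hypothetical master that ships writes to one slave asynchronously."""

    def __init__(self, slave: Slave) -> None:
        self.data: Dict[str, str] = {}
        self.slave = slave
        self.pending: Deque[Tuple[str, str]] = deque()  # writes not yet sent

    def set(self, key: str, value: str) -> None:
        self.data[key] = value             # applied on the master immediately
        self.pending.append((key, value))  # reaches the slave later

    def deliver(self) -> None:
        # Apply queued writes on the slave, in order.
        while self.pending:
            key, value = self.pending.popleft()
            self.slave.data[key] = value


slave = Slave()
master = Master(slave)
master.set("foo", "v1")
master.deliver()
master.set("foo", "v2")
print(slave.get("foo"))  # v1: stale until the next delivery
master.deliver()
print(slave.get("foo"))  # v2
```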

If the master is password-protected, configure the password here:

```
# If the master is password protected (using the "requirepass" configuration
# directive below) it is possible to tell the slave to authenticate before
# starting the replication synchronization process, otherwise the master will
# refuse the slave request.
#
# masterauth <master-password>
```

How the slave responds to client requests while replication is in progress or after it has lost its connection to the master. With yes it keeps replying, possibly with out-of-date data (or with an empty dataset during the first synchronization); with no it replies with the error "SYNC with master in progress" to every command except INFO and SLAVEOF.

```
# When a slave lost the connection with the master, or when the replication
# is still in progress, the slave can act in two different ways:
#
# 1) if slave-serve-stale-data is set to 'yes' (the default) the slave will
# still reply to client requests, possibly with out of date data, or the
# data set may just be empty if this is the first synchronization.
#
# 2) if slave-serve-stale-data is set to 'no' the slave will reply with
# an error "SYNC with master in progress" to all the kind of commands
# but to INFO and SLAVEOF.
#
slave-serve-stale-data yes
```
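For a plain read, the two behaviours of slave-serve-stale-data boil down to a simple decision. A sketch under that reading of the comments above, covering only GET-style reads; `slave_get` and `SyncInProgressError` are hypothetical names, and only the error text is taken from the config comment.

```python
from typing import Dict, Optional


class SyncInProgressError(Exception):
    """Stands in for the error reply described in the config comment."""


def slave_get(key: str, data: Dict[str, str], in_sync: bool,
              serve_stale_data: bool = True) -> Optional[str]:
    """Hypothetical model of a read on a slave that may be disconnected or mid-sync."""
    if in_sync or serve_stale_data:
        # Possibly out-of-date value, or None if nothing has arrived yet.
        return data.get(key)
    raise SyncInProgressError("SYNC with master in progress")


print(slave_get("foo", {"foo": "old"}, in_sync=False))  # old
print(slave_get("foo", {}, in_sync=False))              # None
```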

Some commonly used limits. The first is the maximum number of clients that can be connected at the same time:

```
################################### LIMITS ####################################

# Set the max number of connected clients at the same time. By default there
# is no limit, and it's up to the number of file descriptors the Redis process
# is able to open. The special value '0' means no limits.
# Once the limit is reached Redis will close all the new connections sending
# an error 'max number of clients reached'.
#
# maxclients 128
```

The maximum amount of system memory Redis may use, in bytes (268435456 bytes = 256 MB):

```
# Don't use more memory than the specified amount of bytes.
# When the memory limit is reached Redis will try to remove keys with an
# EXPIRE set. It will try to start freeing keys that are going to expire
# in little time and preserve keys with a longer time to live.
# Redis will also try to remove objects from free lists if possible.
#
# If all this fails, Redis will start to reply with errors to commands
# that will use more memory, like SET, LPUSH, and so on, and will continue
# to reply to most read-only commands like GET.
#
# WARNING: maxmemory can be a good idea mainly if you want to use Redis as a
# 'state' server or cache, not as a real DB. When Redis is used as a real
# database the memory usage will grow over the weeks, it will be obvious if
# it is going to use too much memory in the long run, and you'll have the time
# to upgrade. With maxmemory after the limit is reached you'll start to get
# errors for write operations, and this may even lead to DB inconsistency.
#
maxmemory 268435456
```

What Redis does once the memory limit is reached (the eviction policy):

```
# MAXMEMORY POLICY: how Redis will select what to remove when maxmemory
# is reached? You can select among six behaviors:
#
# volatile-lru -> remove the key with an expire set using an LRU algorithm
# allkeys-lru -> remove any key accordingly to the LRU algorithm
# volatile-random -> remove a random key with an expire set
# allkeys-random -> remove a random key, any key
# volatile-ttl -> remove the key with the nearest expire time (minor TTL)
# noeviction -> don't expire at all, just return an error on write operations
#
# Note: with all the kind of policies, Redis will return an error on write
# operations, when there are not suitable keys for eviction.
#
# At the date of writing this commands are: set setnx setex append
# incr decr rpush lpush rpushx lpushx linsert lset rpoplpush sadd
# sinter sinterstore sunion sunionstore sdiff sdiffstore zadd zincrby
# zunionstore zinterstore hset hsetnx hmset hincrby incrby decrby
# getset mset msetnx exec sort
#
# The default is:
#
# maxmemory-policy volatile-lru

# LRU and minimal TTL algorithms are not precise algorithms but approximated
# algorithms (in order to save memory), so you can select as well the sample
# size to check. For instance for default Redis will check three keys and
# pick the one that was used less recently, you can change the sample size
# using the following configuration directive.
#
# maxmemory-samples 3
```
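The sampling idea in the last comment can be sketched as follows: instead of finding the globally least recently used key, take maxmemory-samples random candidates and evict the least recently used among them. This illustrates the approximation only and is not Redis's implementation; `evict_one`, the access-time dict and the set of expiring keys are assumptions made for the sketch, and only the two LRU policies are modelled.

```python
import random
from typing import Dict, Optional, Set


def evict_one(last_access: Dict[str, float], expiring: Set[str],
              policy: str = "volatile-lru", samples: int = 3,
              rng: Optional[random.Random] = None) -> Optional[str]:
    """Hypothetical sampled-LRU victim selection.

    last_access maps key -> last access time; expiring holds the keys
    with an EXPIRE set. Returns None when no key is eligible, the case
    in which Redis answers write commands with an error.
    """
    rng = rng or random.Random()
    if policy == "volatile-lru":
        candidates = [k for k in last_access if k in expiring]
    elif policy == "allkeys-lru":
        candidates = list(last_access)
    else:
        raise ValueError("only the two LRU policies are sketched here")
    if not candidates:
        return None
    sample = rng.sample(candidates, min(samples, len(candidates)))
    # Evict the least recently used key within the sample only.
    return min(sample, key=lambda k: last_access[k])


access = {"a": 1.0, "b": 5.0, "c": 3.0, "d": 4.0}
print(evict_one(access, {"b", "c"}))  # c: oldest of the keys with an expire
```

A larger sample makes the choice closer to true LRU at the cost of more work per eviction, which is the trade-off the maxmemory-samples directive exposes.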

Append-only mode (AOF) is Redis's second persistence mode besides snapshotting: every command that changes the dataset is recorded in a log file, and whenever Redis restarts it first replays the commands in the AOF to rebuild its state.

```
############################## APPEND ONLY MODE ###############################

# By default Redis asynchronously dumps the dataset on disk. If you can live
# with the idea that the latest records will be lost if something like a crash
# happens this is the preferred way to run Redis. If instead you care a lot
# about your data and don't want to that a single record can get lost you should
# enable the append only mode: when this mode is enabled Redis will append
# every write operation received in the file appendonly.aof. This file will
# be read on startup in order to rebuild the full dataset in memory.
#
# Note that you can have both the async dumps and the append only file if you
# like (you have to comment the "save" statements above to disable the dumps).
# Still if append only mode is enabled Redis will load the data from the
# log file at startup ignoring the dump.rdb file.
#
# IMPORTANT: Check the BGREWRITEAOF to check how to rewrite the append
# log file in background when it gets too big.
```

Whether to enable append-only mode:

```
appendonly no
```
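The append-then-replay idea fits in a few lines: each write command is appended to a log before being applied, and a restart replays the log from the beginning to rebuild the dataset. In this toy version the log is a list of Python tuples (an assumption; the real appendonly.aof stores commands in the Redis protocol format), only SET and DEL are supported, and `ToyAOFStore` is a hypothetical name.

```python
from typing import Dict, List, Tuple

Command = Tuple[str, ...]


class ToyAOFStore:
    """Hypothetical in-memory store that logs every write before applying it."""

    def __init__(self, log: List[Command]) -> None:
        self.log = log
        self.data: Dict[str, str] = {}
        # On startup, rebuild the state by replaying the existing log.
        for cmd in log:
            self._apply(cmd)

    def _apply(self, cmd: Command) -> None:
        if cmd[0] == "SET":
            self.data[cmd[1]] = cmd[2]
        elif cmd[0] == "DEL":
            self.data.pop(cmd[1], None)

    def execute(self, *cmd: str) -> None:
        self.log.append(cmd)  # append to the log first...
        self._apply(cmd)      # ...then change the in-memory dataset


log: List[Command] = []
store = ToyAOFStore(log)
store.execute("SET", "foo", "1")
store.execute("SET", "foo", "2")
store.execute("DEL", "bar")
restarted = ToyAOFStore(log)  # simulate a restart with the same log
print(restarted.data)         # {'foo': '2'}
```

The log keeps both SET commands even though only the last one matters, which is why the config comment points to BGREWRITEAOF for compacting the file once it grows too big.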

Name of the append-only file:

```
# The name of the append only file (default: "appendonly.aof")
# appendfilename appendonly.aof
```

There are three fsync modes. The usual choice is everysec, which flushes the log file to disk once per second:

```
# The fsync() call tells the Operating System to actually write data on disk
# instead to wait for more data in the output buffer. Some OS will really flush
# data on disk, some other OS will just try to do it ASAP.
#
# Redis supports three different modes:
#
# no: don't fsync, just let the OS flush the data when it wants. Faster.
# always: fsync after every write to the append only log . Slow, Safest.
# everysec: fsync only if one second passed since the last fsync. Compromise.
#
# The default is "everysec" that's usually the right compromise between
# speed and data safety. It's up to you to understand if you can relax this to
# "no" that will let the operating system flush the output buffer when
# it wants, for better performances (but if you can live with the idea of
# some data loss consider the default persistence mode that's snapshotting),
# or on the contrary, use "always" that's very slow but a bit safer than
# everysec.
#
# If unsure, use "everysec".
# appendfsync always
appendfsync everysec
# appendfsync no
```
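The three modes differ only in when fsync() is called after a write reaches the append-only file. A sketch of that decision, assuming timestamps in seconds are passed in explicitly; `AofFsyncPolicy` is a hypothetical class that models the rule stated in the comments, not Redis's code, whose timing details differ.

```python
from typing import Optional


class AofFsyncPolicy:
    """Hypothetical model of the appendfsync rule (not Redis source code)."""

    def __init__(self, mode: str = "everysec") -> None:
        if mode not in ("always", "everysec", "no"):
            raise ValueError("mode must be always, everysec or no")
        self.mode = mode
        self.last_fsync: Optional[float] = None

    def should_fsync(self, now: float) -> bool:
        """Decide, for a write at time `now`, whether to fsync afterwards."""
        if self.mode == "always":
            return True
        if self.mode == "no":
            return False  # leave flushing to the operating system
        # everysec: fsync only if a second has passed since the last fsync
        if self.last_fsync is None or now - self.last_fsync >= 1.0:
            self.last_fsync = now
            return True
        return False


p = AofFsyncPolicy("everysec")
print([p.should_fsync(t) for t in (0.0, 0.4, 0.9, 1.0, 1.5, 2.1)])
# [True, False, False, True, False, True]
```

Writes between two fsyncs sit in the OS buffer, so with everysec a crash can lose roughly the last second of writes, while always pays for an fsync on every write.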

Whether to stop calling fsync() in the main process while a background save or AOF rewrite is running:

```
# When the AOF fsync policy is set to always or everysec, and a background
# saving process (a background save or AOF log background rewriting) is
# performing a lot of I/O against the disk, in some Linux configurations
# Redis may block too long on the fsync() call. Note that there is no fix for
# this currently, as even performing fsync in a different thread will block
# our synchronous write(2) call.
#
# In order to mitigate this problem it's possible to use the following option
# that will prevent fsync() from being called in the main process while a
# BGSAVE or BGREWRITEAOF is in progress.
#
# This means that while another child is saving the durability of Redis is
# the same as "appendfsync no", that in practical terms means that it is
# possible to lose up to 30 seconds of log in the worst scenario (with the
# default Linux settings).
#
# If you have latency problems turn this to "yes". Otherwise leave it as
# "no" that is the safest pick from the point of view of durability.
no-appendfsync-on-rewrite no
```
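Put simply, this option adds one more condition to the fsync decision: while a BGSAVE or BGREWRITEAOF child process is running, setting it to yes skips fsync() in the main process, trading durability for latency. A minimal sketch of that condition; `fsync_allowed` is a hypothetical helper modelling the comment above, not Redis's code.

```python
def fsync_allowed(policy_wants_fsync: bool, child_running: bool,
                  no_appendfsync_on_rewrite: bool) -> bool:
    """Hypothetical rule: should the main process call fsync() now?

    policy_wants_fsync: what 'appendfsync always/everysec' would decide.
    child_running: a BGSAVE or BGREWRITEAOF child is doing heavy disk I/O.
    """
    if child_running and no_appendfsync_on_rewrite:
        # Behave like 'appendfsync no' until the child finishes:
        # no long block on fsync(), at the risk of losing recent writes.
        return False
    return policy_wants_fsync


print(fsync_allowed(True, child_running=True, no_appendfsync_on_rewrite=True))   # False
print(fsync_allowed(True, child_running=True, no_appendfsync_on_rewrite=False))  # True
```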

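With the configuration done, the next step is to install Redis on the servers.

Set up one master and one slave.

After setting up the master-slave relationship in the config files, start the master and the slave. When the slave starts, the master logs: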

```
[1585] 02 Mar 02:33:24 - Accepted 10.224.57.64:59503
[1585] 02 Mar 02:33:24 * Slave ask for synchronization
[1585] 02 Mar 02:33:24 * Starting BGSAVE for SYNC
[1585] 02 Mar 02:33:24 * Background saving started by pid 19263
[19263] 02 Mar 02:33:24 * DB saved on disk
[1585] 02 Mar 02:33:24 * Background saving terminated with success
[1585] 02 Mar 02:33:24 * Synchronization with slave succeeded
[1585] 02 Mar 02:33:27 - DB 0: 15 keys (0 volatile) in 16 slots HT.
[1585] 02 Mar 02:33:27 - 0 clients connected (1 slaves), 800088 bytes in use
```

The slave logs:

```
[32767] 02 Mar 02:32:14 * The server is now ready to accept connections on port 6379
[32767] 02 Mar 02:32:14 - 0 clients connected (0 slaves), 790448 bytes in use
[32767] 02 Mar 02:32:14 * Connecting to MASTER...
[32767] 02 Mar 02:32:14 * MASTER <-> SLAVE sync started: SYNC sent
[32767] 02 Mar 02:32:14 * MASTER <-> SLAVE sync: receiving 164 bytes from master
[32767] 02 Mar 02:32:14 * MASTER <-> SLAVE sync: Loading DB in memory
[32767] 02 Mar 02:32:14 * MASTER <-> SLAVE sync: Finished with success
[32767] 02 Mar 02:32:19 - DB 0: 15 keys (0 volatile) in 16 slots HT.
[32767] 02 Mar 02:32:19 - 1 clients connected (0 slaves), 800024 bytes in use
```

This shows the deployment succeeded.

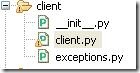

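Since I am using Redis 2.2.1 and the Java client did not seem to keep up with it, I downloaded a Python client for testing instead. The client is very simple and consists of three files.

Test with the following code, first writing to and reading from the master: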

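```python
import redis

# Connect to the master; 'master-host' is a placeholder for its address.
# decode_responses=True returns str instead of bytes on Python 3.
r = redis.Redis(host='master-host', port=6379, decode_responses=True)
for i in range(1, 5):
    r.set('foo' + str(i) + 'master', 'bar' + str(i) + 'master')  # or r['foo'] = 'bar'
for i in range(1, 5):
    result = r.get('foo' + str(i) + 'master')  # or r['foo']
    print(result)
```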

Output:

```
bar1master
bar2master
bar3master
bar4master
```

Then read the same keys from the slave:

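```python
import redis
# Connect to the slave; 'slave-host' is a placeholder for its address
r = redis.Redis(host='slave-host', port=6379, decode_responses=True)
for i in range(1, 5):
    result = r.get('foo' + str(i) + 'master')  # or r['foo']
    print(result)
```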

Output:

```
bar1master
bar2master
bar3master
bar4master
```

This shows that writes on the master have been replicated to the slave.

Now do it the other way round, writing to the slave:

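```python
import redis

# Connect to the slave; 'slave-host' is a placeholder for its address.
# Redis 2.2 slaves accept writes; from 2.6 on slaves are read-only by default.
r = redis.Redis(host='slave-host', port=6379, decode_responses=True)
for i in range(1, 5):
    r.set('foo' + str(i) + 'slave', 'bar' + str(i) + 'slave')  # or r['foo'] = 'bar'
for i in range(1, 5):
    result = r.get('foo' + str(i) + 'slave')  # or r['foo']
    print(result)
```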

Output:

```
bar1slave
bar2slave
bar3slave
bar4slave
```

Then read the same keys from the master:

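```python
import redis
# Connect to the master; 'master-host' is a placeholder for its address
r = redis.Redis(host='master-host', port=6379, decode_responses=True)
for i in range(1, 5):
    result = r.get('foo' + str(i) + 'slave')  # or r['foo']
    print(result)
```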

Output:

```
None
None
None
None
```

This shows that writes made on the slave are not replicated to the master: replication only flows from master to slave.