Deploying Kylin 3.1.3

3 Software Installation and Configuration

3.2 Basic Configuration

Install the JDK and its devel package

# yum install java-1.8.0-openjdk*

[root@localhost ~]# useradd -d /home/grid -m grid

[root@localhost ~]# usermod -G root grid

[root@localhost ~]# passwd grid

[root@localhost ~]# hostnamectl set-hostname master

[root@localhost ~]# su - grid

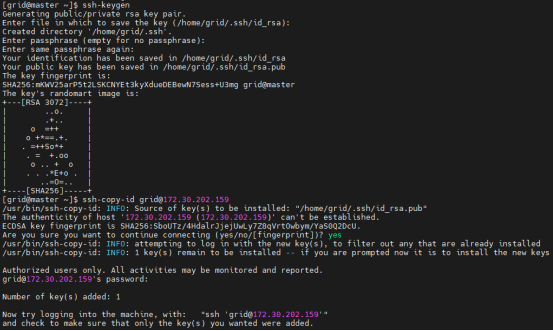

[grid@localhost ~]$ ssh-keygen

[grid@localhost ~]$ ssh-copy-id grid@172.30.202.159

3.3 Setting Up Hadoop

Installation

[grid@bogon ~]$ tar -xvf hadoop-2.7.1.tar.gz

[grid@bogon ~]$ cd hadoop-2.7.1

[grid@bogon hadoop-2.7.1]$ mkdir -p tmp hdfs/ hdfs/data hdfs/name/

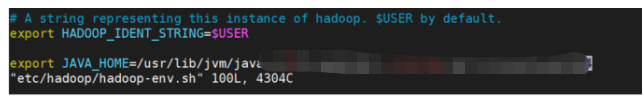

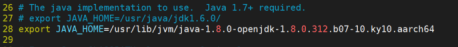

Configure hadoop-env.sh

The OpenJDK devel package must be installed beforehand.

[grid@bogon hadoop-2.7.1]$ vim etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

Configure core-site.xml

Set the default file system and Hadoop's common directory.

[grid@bogon hadoop-2.7.1]$ vim etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/grid/hadoop-2.7.1/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>
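After editing a site file it can help to read a value back before starting any daemon. Once the environment is set up, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop actually uses; before that, a small helper can read the file directly. The sketch below is only an illustration: `get_prop` is a hypothetical name, not part of Hadoop, and it assumes one tag per line, as in the listing above.

```shell
# Hypothetical helper (not part of Hadoop): print the <value> that follows
# a given <name> in a Hadoop-style *-site.xml file.
# Assumes each <name> and <value> tag sits on its own line.
get_prop() {
  awk -v n="$2" '
    # Remember that the requested property name has been seen.
    index($0, "<name>" n "</name>") { found = 1; next }
    # The first real <value> line after it holds the setting.
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$1"
}

# Example call from the hadoop-2.7.1 directory:
# get_prop etc/hadoop/core-site.xml fs.defaultFS
```

Commented-out values such as `<!--value>...</value-->` are skipped, because they do not contain a literal `<value>` tag.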

Configure hdfs-site.xml

[grid@bogon hadoop-2.7.1]$ vim etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/grid/hadoop-2.7.1/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/grid/hadoop-2.7.1/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>172.30.202.159:9001</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address</name>

<value>172.30.202.159:10000</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

Configure mapred-site.xml

[grid@localhost hadoop-2.7.1]$ cp etc/hadoop/mapred-site.xml.template etc/hadoop/mapred-site.xml

[grid@localhost hadoop-2.7.1]$ vim etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>172.30.202.159:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>172.30.202.159:19888</value>

</property>

</configuration>

Configure yarn-site.xml

[grid@localhost hadoop-2.7.1]$ vim etc/hadoop/yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>172.30.202.159:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>172.30.202.159:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>172.30.202.159:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>172.30.202.159:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>172.30.202.159:8088</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>8192</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

Configure slaves (workers)

[grid@localhost hadoop-2.7.1]$ vim etc/hadoop/slaves

master

Configure the PATH environment variable

[root@ bogon]# vim /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.312.b07-10.ky10.x86_64

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export HADOOP_HOME=/home/grid/hadoop-2.7.1

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/lib

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export LD_LIBRARY_PATH=$HADOOP_HOME/lib/native

Apply the variables: source /etc/profile
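Once the profile has been sourced, it is worth confirming that the variables really are set in the current shell, since a missing one tends to surface later as a confusing startup error. The following is a minimal sketch; `missing_vars` is a hypothetical helper name, not something Hadoop provides.

```shell
# Hypothetical helper (not part of Hadoop): print each named variable
# that is unset or empty in the current shell.
missing_vars() {
  for v in "$@"; do
    # Indirect lookup of the variable whose name is stored in $v.
    # Only pass trusted variable names, since this uses eval.
    eval "val=\${$v:-}"
    [ -n "$val" ] || printf '%s\n' "$v"
  done
}

# Example call, using names exported in /etc/profile:
# missing_vars JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR
```

No output means every listed variable has a value.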

Stop the firewall

systemctl stop firewalld.service

Initialize the NameNode

Format the NameNode before starting it for the first time.

[grid@localhost hadoop-2.7.1]$ hdfs namenode -format

[grid@localhost hadoop-2.7.1]$ sbin/hadoop-daemon.sh start namenode

Leave safe mode:

[grid@master hadoop-2.7.1]$ hdfs dfsadmin -safemode leave

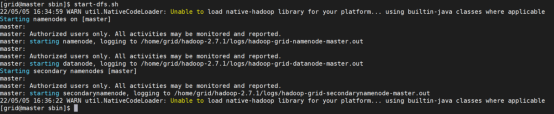

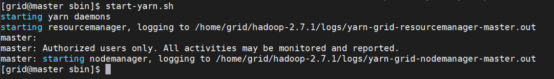

Startup

Reboot the server

[grid@master hadoop-2.7.1]$ cd sbin/

[grid@localhost hadoop-2.7.1]$ start-dfs.sh

[grid@localhost hadoop-2.7.1]$ start-yarn.sh

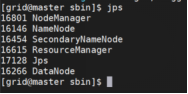

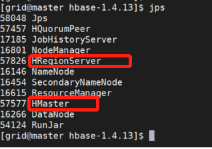

Check the Java processes on the master node

Run jps to list the processes that started successfully

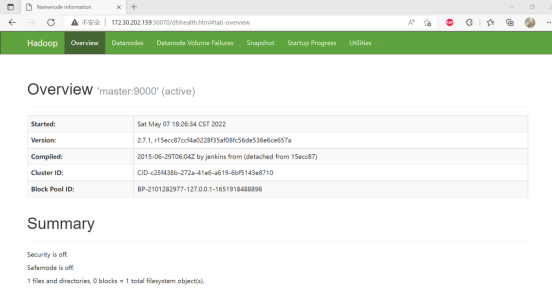
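On a single-node setup like this one, start-dfs.sh and start-yarn.sh would normally leave NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager running. The sketch below compares jps output against such a list; `missing_daemons` is a hypothetical helper name, and it relies on jps printing one `<pid> <ClassName>` pair per line.

```shell
# Hypothetical helper: read jps output on stdin and print every expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
  input=$(cat)
  for d in "$@"; do
    # Compare the second field exactly, so that NameNode does not
    # accidentally match SecondaryNameNode.
    printf '%s\n' "$input" \
      | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
      || printf '%s\n' "$d"
  done
}

# Example call on the master node:
# jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager
```

Any name that is printed points at a daemon whose log is worth checking.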

Open in a browser: http://[ip]:50070

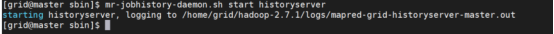

Start the history server

[grid@master hadoop-2.7.1]$ mr-jobhistory-daemon.sh start historyserver

3.4 Setting Up Hive

Download Hive

[grid@master ~]$ wget https://archive.apache.org/dist/hive/hive-1.2.1/apache-hive-1.2.1-bin.tar.gz

[grid@master ~]$ tar -xvf apache-hive-1.2.1-bin.tar.gz

[grid@master ~]$ cd apache-hive-1.2.1-bin

Remove the system's MariaDB and install MySQL

Uninstall MariaDB

[root@master ~]# yum remove -y `rpm -aq mariadb*`

[root@master ~]# rm -rf /etc/my.cnf

[root@master ~]# rm -rf /var/lib/mysql/

Install MySQL

The installation process is not described in detail here.

[root@master ~]# systemctl start mysqld

[root@master ~]# mysql_secure_installation

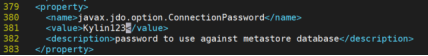

Set the root password to Kylin123

Configure environment variables

[root@master ~]# vim /etc/profile

export HIVE_HOME=/home/grid/apache-hive-1.2.1-bin

export PATH=$PATH:$HIVE_HOME/bin

[grid@master ~]$ source /etc/profile

Configure hive-env.sh

[root@master conf]# cd /home/grid/apache-hive-1.2.1-bin/conf

[root@master conf]# cp hive-env.sh.template hive-env.sh

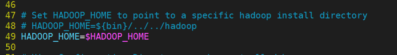

[root@master conf]# vim hive-env.sh

HADOOP_HOME=$HADOOP_HOME

Configure hive-site.xml

[grid@master conf]$ cp hive-default.xml.template hive-site.xml

[grid@master apache-hive-1.2.1-bin]$ vim conf/hive-site.xml

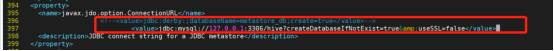

<property>

<name>javax.jdo.option.ConnectionURL</name>

<!--value>jdbc:derby:;databaseName=metastore_db;create=true</value-->

<value>jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>
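hive-site.xml is parsed as XML, so a literal `&` inside a value, such as the separator between the two JDBC URL parameters, has to be written as `&amp;`; otherwise the file will not parse. As a small illustration, the hypothetical helper below (`xml_escape` is not part of Hive) escapes a string before it is pasted into a `<value>` element.

```shell
# Hypothetical helper: escape the characters that are special in XML text
# so that a string can be placed inside a <value> element.
xml_escape() {
  # The ampersand must be handled first, otherwise the entities produced
  # by the later substitutions would be escaped a second time.
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Example call:
# xml_escape 'jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true&useSSL=false'
```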

…

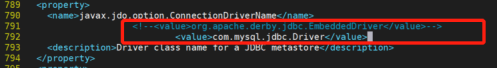

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<!--value>org.apache.derby.jdbc.EmbeddedDriver</value-->

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

…

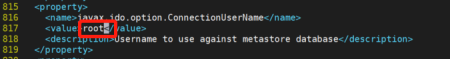

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

…

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>Kylin123</value> <!-- the password set when the database was initialized -->

<description>password to use against metastore database</description>

</property>

…

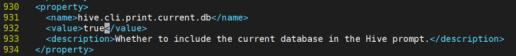

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

<description>Whether to include the current database in the Hive prompt.</description>

</property>

…

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp/hive</value>

<description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir:

${hive.exec.scratchdir}/<username> is created, with

${hive.scratch.dir.permission}.</description>

</property>

…

<property>

<name>hive.exec.local.scratchdir</name>

<!--value>${system:java.io.tmpdir}/${system:user.name}</value-->

<value>/tmp/hive/local</value>

<description>Local scratch space for Hive jobs</description>

</property>

…

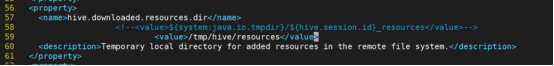

<property>

<name>hive.downloaded.resources.dir</name>

<!--<value>${system:java.io.tmpdir}/${hive.session.id}_resources</value>-->

<value>/tmp/hive/resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

…

Add the JDBC driver

Copy the attached driver mysql-connector-java.jar to /home/grid/apache-hive-1.2.1-bin/lib/

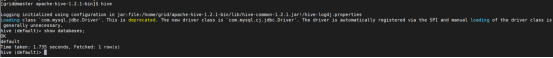

Test the service

Start the server in the background

[grid@master hive]$ nohup hive --service metastore > /tmp/grid/apache-hive-1.2.1-bin_metastore.log 2>&1 &

3.5 Setting Up HBase

Download the software

[grid@master ~]$ wget https://archive.apache.org/dist/hbase/1.4.13/hbase-1.4.13-bin.tar.gz

[grid@master ~]$ tar -xvf hbase-1.4.13-bin.tar.gz

Configure environment variables

[root@master apache-kylin]# vim /etc/profile

export HBASE_HOME=/home/grid/hbase-1.4.13

export PATH=$PATH:$HBASE_HOME/bin

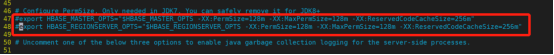

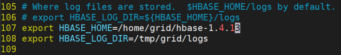

Configure hbase-env.sh

[grid@master ~]$ cd hbase-1.4.13/

[grid@master hbase-1.4.13]$ vim conf/hbase-env.sh

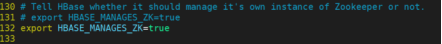

Comment out:

Add:

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.312.b07-10.ky10.x86_64

export HBASE_HOME=/home/grid/hbase-1.4.13

export HBASE_LOG_DIR=/tmp/grid/logs

export HBASE_MANAGES_ZK=true

Configure hbase-site.xml

[grid@master hbase-1.4.13]$ vim conf/hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.master</name>

<value>master:60000</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/grid/hbase-1.4.13/zookeeper</value>

</property>

</configuration>

Configure regionservers

[grid@master hbase-1.4.13]$ vim conf/regionservers

master

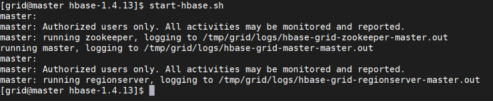

Startup

[grid@master hbase-1.4.13]$ start-hbase.sh

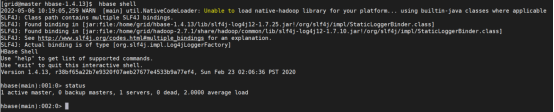

Check the Java processes:

Check the status

[grid@master hbase]$ hbase shell

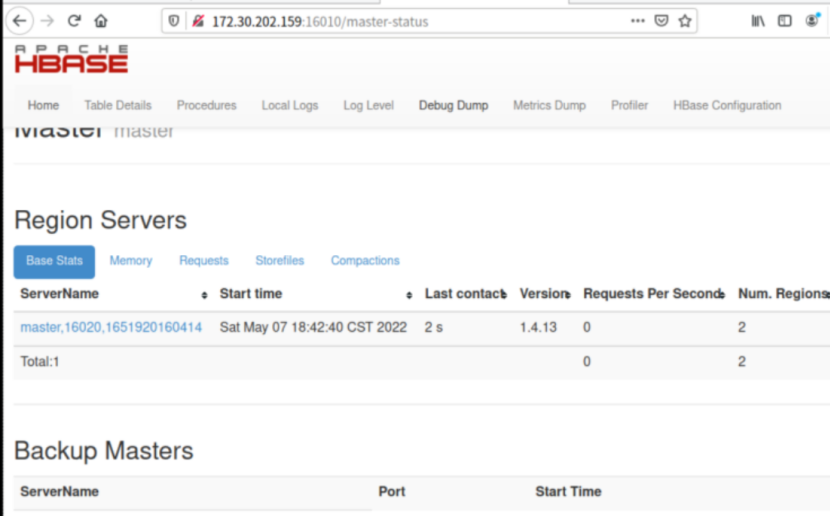

Access in a browser:

3.6 Setting Up Apache Kylin

Download and install

[grid@master ~]$ wget https://archive.apache.org/dist/kylin/apache-kylin-3.1.3/apache-kylin-3.1.3-bin-hbase1x.tar.gz

[grid@master ~]$ tar -xvf apache-kylin-3.1.3-bin-hbase1x.tar.gz

[grid@master ~]$ cd apache-kylin-3.1.3-bin-hbase1x

Configure environment variables

[root@master ~]# vim /etc/profile

export hive_dependency=/home/grid/apache-hive-1.2.1-bin/conf:/home/grid/apache-hive-1.2.1-bin/lib/*:/home/grid/apache-hive-1.2.1-bin/hcatalog/share/hcatalog/hive-hcatalog-core-1.2.1.jar

[grid@master ~]$ source /etc/profile

Modify kylin.sh

[grid@master apache-kylin-3.1.3-bin-hbase1x]$ vim bin/kylin.sh

# Add the KYLIN_HOME path

export KYLIN_HOME=/home/grid/apache-kylin-3.1.3-bin-hbase1x

# Add $hive_dependency to the path

export HBASE_CLASSPATH_PREFIX=${tomcat_root}/bin/bootstrap.jar:${tomcat_root}/bin/tomcat-juli.jar:${tomcat_root}/lib/*:$hive_dependency:${HBASE_CLASSPATH_PREFIX}

Modify kylin.properties

[grid@master apache-kylin-3.1.3-bin-hbase1x]$ vim conf/kylin.properties

kylin.storage.hbase.cluster-fs=hdfs://master:9000/hbase
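kylin.properties ships with many commented-out entries, so it is easy to edit a line that is still behind a `#`. The sketch below reads back the active value of a key; `get_kv` is a hypothetical helper name, not a Kylin tool, and it assumes plain `key=value` lines with no spaces around the `=`.

```shell
# Hypothetical helper: print the active value of a key in a
# Java-style .properties file.
# Assumes plain key=value lines with no spaces around "=".
get_kv() {
  awk -F= -v k="$2" '
    # Ignore commented-out entries.
    /^[[:space:]]*#/ { next }
    # Keep the last matching assignment, which is the one that wins.
    $1 == k { sub(/^[^=]*=/, ""); v = $0 }
    END { if (v != "") print v }
  ' "$1"
}

# Example call from the Kylin directory:
# get_kv conf/kylin.properties kylin.storage.hbase.cluster-fs
```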

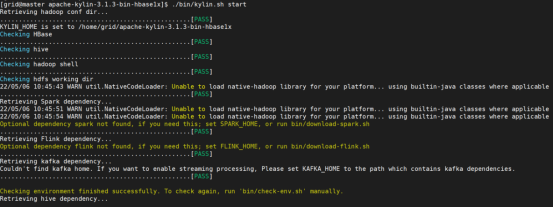

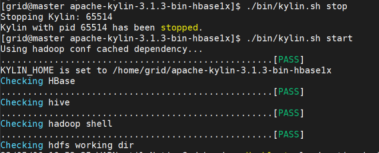

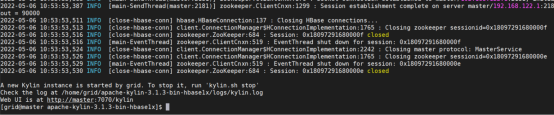

Start the service on master

[grid@master apache-kylin-3.1.3-bin-hbase1x]$ ./bin/kylin.sh start

4 Verification

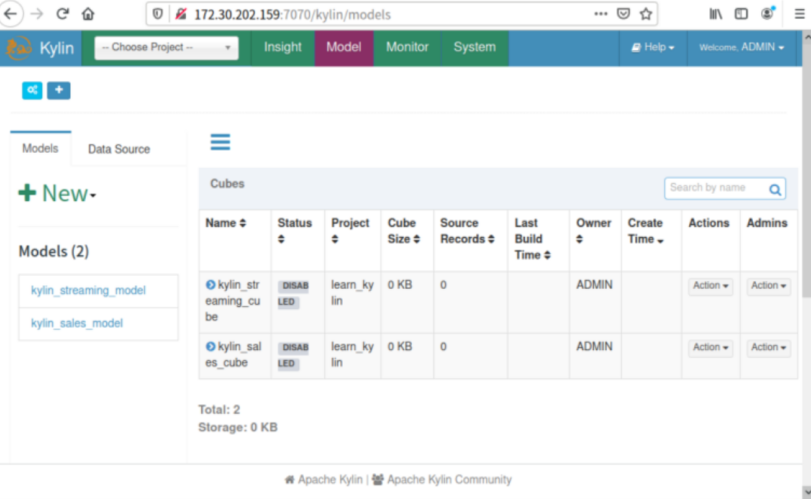

Using the bundled sample as an example:

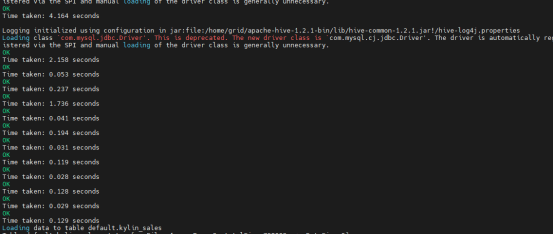

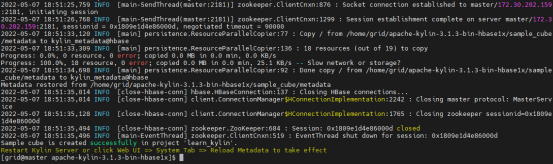

[grid@master kylin]$ ./bin/sample.sh

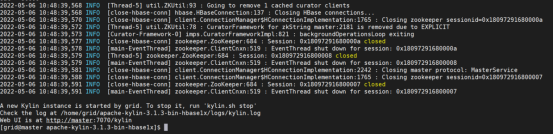

Restart the service

[grid@master kylin]$ ./bin/kylin.sh stop

[grid@master kylin]$ ./bin/kylin.sh start

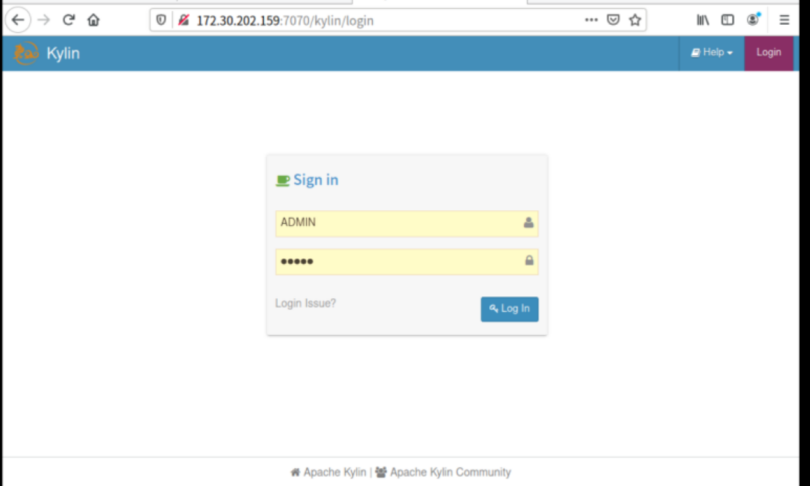

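Log in at the following URL with ADMIN/KYLIN as the username/password, then select the 'learn_kylin' project from the project drop-down list in the upper-left corner.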

http://172.30.202.159:7070/kylin

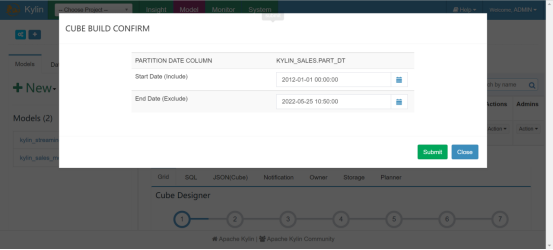

Select the sample cube 'kylin_sales_cube', click 'Actions' -> 'Build', and choose an end date.

The build then failed.

https://ask.csdn.net/questions/7720718?answer=53794154&spm=1001.2014.3001.5504

I kept jumping back and forth between the two platforms.
