DenseNet Implementation

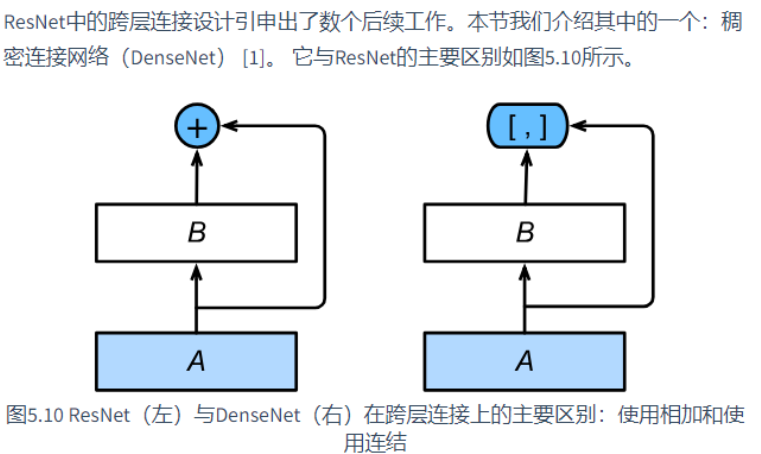

Dense Blocks

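DenseNet uses the "batch normalization, activation, convolution" structure from the improved version of ResNet. We first implement this structure in the conv_block function.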

```python
import time
import torch
from torch import nn, optim
import torch.nn.functional as F

import sys
sys.path.append("./Dive-into-DL-PyTorch-master/Dive-into-DL-PyTorch-master/code/")
import d2lzh_pytorch as d2l

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

def conv_block(in_channels, out_channels):
    # BN -> ReLU -> 3x3 conv; padding=1 keeps height and width unchanged
    blk = nn.Sequential(nn.BatchNorm2d(in_channels),
                        nn.ReLU(),
                        nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
    return blk
```
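A quick sanity check, assuming only PyTorch is installed (conv_block is repeated so the snippet runs on its own): because the 3×3 convolution uses padding=1, conv_block keeps the height and width unchanged and only changes the number of channels. The input size below is an arbitrary choice for illustration.

```python
import torch
from torch import nn

def conv_block(in_channels, out_channels):
    # BN -> ReLU -> 3x3 conv, same structure as above
    return nn.Sequential(
        nn.BatchNorm2d(in_channels),
        nn.ReLU(),
        nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),
    )

blk = conv_block(3, 10)
x = torch.rand(4, 3, 8, 8)  # arbitrary batch: 4 images, 3 channels, 8x8
y = blk(x)
print(y.shape)  # torch.Size([4, 10, 8, 8])
```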

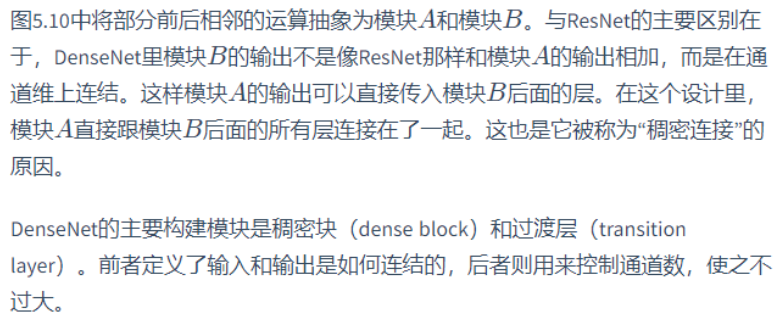

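A dense block consists of several conv_block units, each with the same number of output channels. In the forward pass, however, we concatenate each unit's input and output along the channel dimension.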

```python
class DenseBlock(nn.Module):
    def __init__(self, num_convs, in_channels, out_channels):
        super(DenseBlock, self).__init__()
        net = []
        for i in range(num_convs):
            # the i-th unit sees the original input plus the outputs of the i units before it
            in_c = in_channels + i * out_channels
            net.append(conv_block(in_c, out_channels))
        self.net = nn.ModuleList(net)
        # compute the number of output channels
        self.out_channels = in_channels + num_convs * out_channels

    def forward(self, x):
        for blk in self.net:
            y = blk(x)
            # concatenate input and output along the channel dimension
            x = torch.cat((x, y), dim=1)
        return x
```
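To see how the channel count grows, the sketch below builds a dense block with 2 conv units of 10 output channels on a 3-channel input. The definitions are repeated (with a list comprehension in place of the explicit loop) so it runs on its own; the sizes are arbitrary. The output should have 3 + 2 × 10 = 23 channels, while height and width stay the same.

```python
import torch
from torch import nn

def conv_block(in_channels, out_channels):
    return nn.Sequential(
        nn.BatchNorm2d(in_channels),
        nn.ReLU(),
        nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),
    )

class DenseBlock(nn.Module):
    def __init__(self, num_convs, in_channels, out_channels):
        super().__init__()
        # unit i receives in_channels + i * out_channels input channels
        self.net = nn.ModuleList([
            conv_block(in_channels + i * out_channels, out_channels)
            for i in range(num_convs)
        ])
        self.out_channels = in_channels + num_convs * out_channels

    def forward(self, x):
        for blk in self.net:
            y = blk(x)
            x = torch.cat((x, y), dim=1)  # concatenate along channels
        return x

blk = DenseBlock(2, 3, 10)
X = torch.rand(4, 3, 8, 8)
Y = blk(X)
print(Y.shape)  # torch.Size([4, 23, 8, 8])
```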


Transition Layers

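Each dense block increases the number of channels, so stacking too many of them would make the model overly complex. A transition layer keeps this in check: a 1×1 convolution reduces the number of channels, and an average pooling layer with stride 2 halves the height and width.

```python
def transition_block(in_channels, out_channels):
    blk = nn.Sequential(nn.BatchNorm2d(in_channels),
                        nn.ReLU(),
                        nn.Conv2d(in_channels, out_channels, kernel_size=1),  # 1x1 conv changes the channel count
                        nn.AvgPool2d(kernel_size=2, stride=2))                # halves height and width
    return blk
```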
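A shape check for the transition layer, assuming only PyTorch (the function is repeated so the snippet is self-contained). Applied to a 23-channel 8×8 feature map, an arbitrary example size standing in for a dense block's output, a transition layer with 10 output channels should reduce the channels to 10 and halve the height and width.

```python
import torch
from torch import nn

def transition_block(in_channels, out_channels):
    return nn.Sequential(
        nn.BatchNorm2d(in_channels),
        nn.ReLU(),
        nn.Conv2d(in_channels, out_channels, kernel_size=1),  # 1x1 conv: change channel count only
        nn.AvgPool2d(kernel_size=2, stride=2),                # halve height and width
    )

Y = torch.rand(4, 23, 8, 8)  # stand-in for a dense block's output
blk = transition_block(23, 10)
Z = blk(Y)
print(Z.shape)  # torch.Size([4, 10, 4, 4])
```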

The DenseNet Model

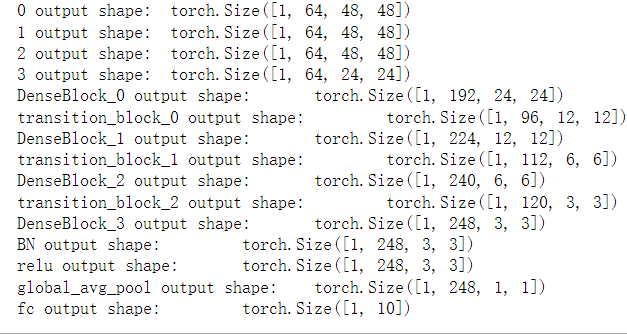

Like ResNet, DenseNet begins with a single convolutional layer followed by a max-pooling layer.

```python
net = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
                    nn.BatchNorm2d(64),
                    nn.ReLU(),
                    nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
```
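This stem halves the spatial size twice. The sketch below works through the arithmetic with the usual output-size formula floor((n + 2p - k) / s) + 1 (no dilation) for the 96×96 single-channel input used at the end of this section, then confirms it with a real forward pass. The helper out_size is a hypothetical name introduced only for this illustration.

```python
import torch
from torch import nn

def out_size(n, k, s, p):
    # hypothetical helper: output height/width of a conv or pooling layer (no dilation)
    return (n + 2 * p - k) // s + 1

h = out_size(96, k=7, s=2, p=3)  # 7x7 conv, stride 2 -> 48
h = out_size(h, k=3, s=2, p=1)   # 3x3 max pool, stride 2 -> 24
print(h)  # 24

stem = nn.Sequential(
    nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
    nn.BatchNorm2d(64),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
)
x = torch.rand(1, 1, 96, 96)
print(stem(x).shape)  # torch.Size([1, 64, 24, 24])
```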

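Where ResNet next uses 4 residual blocks, DenseNet uses 4 dense blocks. As with ResNet, we can set how many convolutional layers each dense block uses. Here we set it to 4, consistent with the ResNet-18 from the previous section. The number of output channels of each convolutional layer in a dense block (the growth rate) is set to 32, so each dense block adds 4 × 32 = 128 channels.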
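The channel bookkeeping can be traced in plain Python, without building any layers. This sketch mirrors the construction loop below: each dense block adds num_convs × growth_rate channels, and each transition layer (except after the last dense block) halves both the channel count and the spatial size. It assumes the 24×24 feature map the stem produces for a 96×96 input.

```python
num_channels, growth_rate = 64, 32
num_convs_in_dense_blocks = [4, 4, 4, 4]
size = 24  # assumed height/width after the stem for a 96x96 input

for i, num_convs in enumerate(num_convs_in_dense_blocks):
    num_channels += num_convs * growth_rate  # dense block: +128 channels
    print(f"DenseBlock_{i}: {num_channels} channels, {size}x{size}")
    if i != len(num_convs_in_dense_blocks) - 1:
        num_channels //= 2  # transition layer halves the channels
        size //= 2          # and its average pool halves height/width
        print(f"transition_block_{i}: {num_channels} channels, {size}x{size}")

print(num_channels)  # 248
```

The sequence is 192 → 96 → 224 → 112 → 240 → 120 → 248 channels, so the final fully connected layer sees 248 features.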

```python
num_channels, growth_rate = 64, 32  # num_channels is the current number of channels
num_convs_in_dense_blocks = [4, 4, 4, 4]

for i, num_convs in enumerate(num_convs_in_dense_blocks):
    DB = DenseBlock(num_convs, num_channels, growth_rate)
    net.add_module('DenseBlock_%d' % i, DB)
    # number of output channels of the dense block just added
    num_channels = DB.out_channels
    # between dense blocks, add a transition layer that halves the number of channels
    if i != len(num_convs_in_dense_blocks) - 1:  # not the last dense block
        net.add_module('transition_block_%d' % i,
                       transition_block(num_channels, num_channels // 2))
        num_channels = num_channels // 2
```

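Finally, as in ResNet, a batch normalization layer and a ReLU are followed by a global average pooling layer and a fully connected layer that produces the output.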

```python
net.add_module('BN', nn.BatchNorm2d(num_channels))
net.add_module('relu', nn.ReLU())
# output of GlobalAvgPool2d: (Batch, num_channels, 1, 1)
net.add_module('global_avg_pool', d2l.GlobalAvgPool2d())
net.add_module('fc', nn.Sequential(d2l.FlattenLayer(), nn.Linear(num_channels, 10)))
```

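To check the network, we feed a single-channel 96×96 input through it and print the output shape of each layer.

```python
x = torch.rand((1, 1, 96, 96))
for name, layer in net.named_children():
    x = layer(x)
    print(name, 'output shape:\t', x.shape)
```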
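If the d2lzh_pytorch package is not on the path, a self-contained variant can swap in PyTorch built-ins: nn.AdaptiveAvgPool2d(1) in place of d2l.GlobalAvgPool2d and nn.Flatten() in place of d2l.FlattenLayer. This substitution is an assumption of this sketch rather than the original code; both pairs produce the same shapes here ((N, C, 1, 1) and (N, C) respectively). The builder function densenet is a hypothetical name.

```python
import torch
from torch import nn

def conv_block(in_channels, out_channels):
    return nn.Sequential(nn.BatchNorm2d(in_channels), nn.ReLU(),
                         nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))

class DenseBlock(nn.Module):
    def __init__(self, num_convs, in_channels, out_channels):
        super().__init__()
        self.net = nn.ModuleList([conv_block(in_channels + i * out_channels, out_channels)
                                  for i in range(num_convs)])
        self.out_channels = in_channels + num_convs * out_channels

    def forward(self, x):
        for blk in self.net:
            x = torch.cat((x, blk(x)), dim=1)  # concatenate along channels
        return x

def transition_block(in_channels, out_channels):
    return nn.Sequential(nn.BatchNorm2d(in_channels), nn.ReLU(),
                         nn.Conv2d(in_channels, out_channels, kernel_size=1),
                         nn.AvgPool2d(kernel_size=2, stride=2))

def densenet(num_classes=10, growth_rate=32, num_convs_in_dense_blocks=(4, 4, 4, 4)):
    # hypothetical builder: same layers as the text, without the d2l helpers
    net = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
                        nn.BatchNorm2d(64), nn.ReLU(),
                        nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
    num_channels = 64
    for i, num_convs in enumerate(num_convs_in_dense_blocks):
        db = DenseBlock(num_convs, num_channels, growth_rate)
        net.add_module('DenseBlock_%d' % i, db)
        num_channels = db.out_channels
        if i != len(num_convs_in_dense_blocks) - 1:
            net.add_module('transition_block_%d' % i,
                           transition_block(num_channels, num_channels // 2))
            num_channels //= 2
    net.add_module('BN', nn.BatchNorm2d(num_channels))
    net.add_module('relu', nn.ReLU())
    net.add_module('global_avg_pool', nn.AdaptiveAvgPool2d(1))  # replaces d2l.GlobalAvgPool2d
    net.add_module('fc', nn.Sequential(nn.Flatten(),            # replaces d2l.FlattenLayer
                                       nn.Linear(num_channels, num_classes)))
    return net

model = densenet()
out = model(torch.rand(1, 1, 96, 96))
print(out.shape)  # torch.Size([1, 10])
```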

Zhejiang Public Security Internet Filing No. 33010602011771