W4算法基于背景建模的运动目标检测

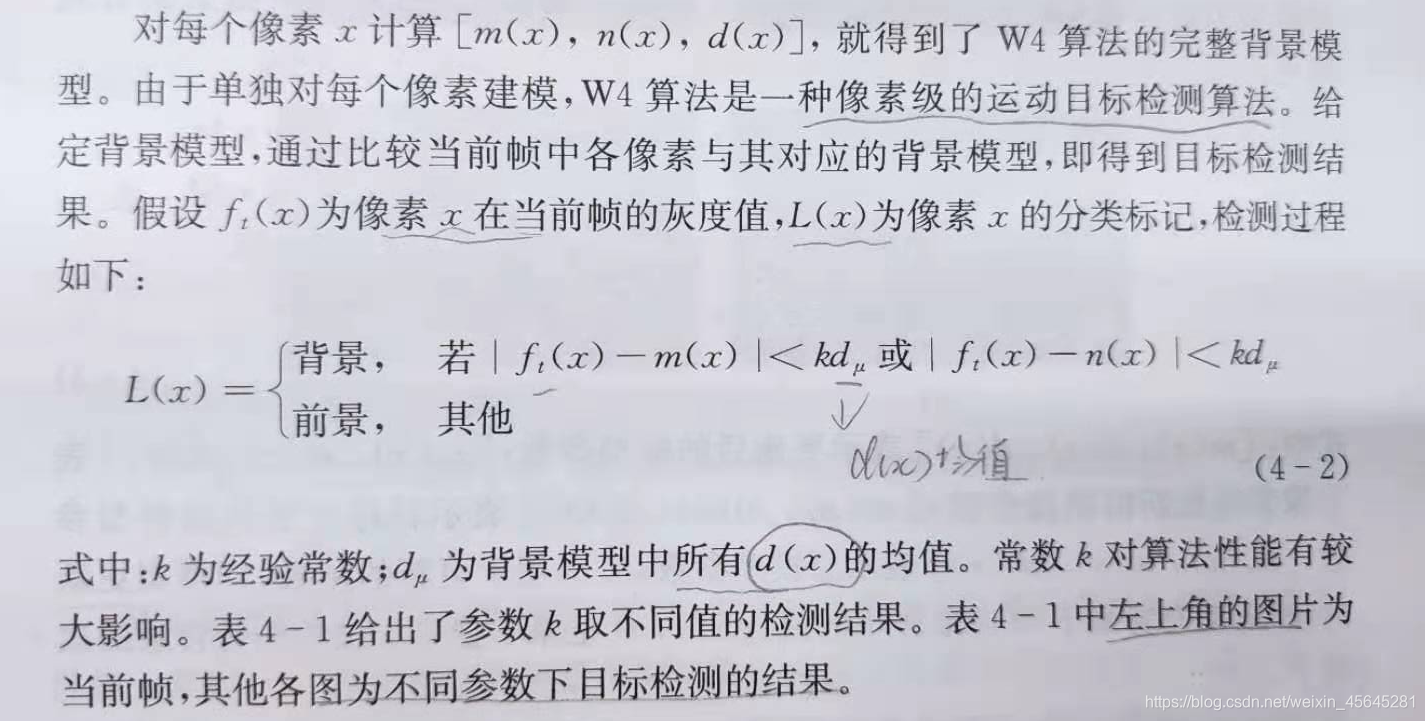

W4算法对每个像素点统计三个特征值:最小灰度值、最大灰度值以及最大帧间差分值。所有像素点的特征值就构成了背景模型,比较当前帧与背景模型就能检测出运动的目标。具体步骤可分为三部分:模型初始化、目标检测以及模型更新。

模型初始化:首先遍历初始数帧,并对每个像素点计算灰度中值和标准差,然后计算每个像素点帧间灰度变化的最大值、最小值以及最大帧间差分值。计算时间过长,没有进行优化。

目标检测:

#include <iostream>

#include<opencv2/opencv.hpp>

#include <vector>

using namespace cv;

using namespace std;

//每个像素的灰度中值

bool comp(int x, int y)

{

return x > y;

}

void sort_vector(vector <int> v)

{

sort(v.begin(), v.end(), comp);

}

//每个像素的灰度值标准差

double mean(vector <int> v) {

double ans = 0.0;

for (int i = 0; i < v.size(); i++) {

ans += v[i];

}

ans = ans / v.size();

return ans;

}

double vector_stddev(vector <int> v) {

double a1 = mean(v);

double a2 = 0.0;

for (int i = 0; i < v.size(); i++) {

a2 = (a1 - v[i]) * (a1 - v[i]);

}

a2 = sqrt(a2 / v.size());

return a2;

}

//相邻帧像素灰度差分值

int vector_diff(vector <int> v) {

vector<int> temp;

for (int i = 0; i < v.size()-1; i++) {

int result = abs(v[i + 1] - v[i]);

temp.push_back(result);

}

int max = *max_element(temp.begin(), temp.end()); ;

return max;

}

//相邻帧像素灰度差分值的平均值

double mean_diff(vector <int> v) {

vector<int> temp;

for (int i = 0; i < v.size() - 1; i++) {

int result = abs(v[i + 1] - v[i]);

temp.push_back(result);

}

double a1 = mean(temp);

return a1;

}

int main()

{

VideoCapture capture;

Mat frame,framegray,result;

//保存目标轨迹

std::vector<Point> pt;

//capture.open(0);

frame = capture.open("D:/testimage/video/fish.mp4"); //F:/opencv/sources/amples/data/vtest.avi D:/testimage/CXK.avi D:/testimage/video/fish.mp4

if (!capture.isOpened())

{

printf("can not open camera \n");

return -1;

}

int iVideoTime = capture.get(CAP_PROP_FRAME_COUNT); // capture.get(CAP_PROP_FPS);

int Frame_start= capture.get(CAP_PROP_POS_FRAMES);

capture.read(frame);

vector <double> all_diff;

cvtColor(frame, result, COLOR_RGB2GRAY);

//namedWindow("input", WINDOW_AUTOSIZE);

for (int i = 0; i < frame.rows; i++)

{

vector <int> v_median;

for (int j = 0; j < frame.cols; j++)

{

//temp1.push_back(gray_value);

while (capture.read(frame) && Frame_start < 20)

{

cvtColor(frame, framegray, COLOR_RGB2GRAY);

int gray_value = framegray.at<uchar>(i, j);

//cout << gray_value << endl;

v_median.push_back(gray_value);

Frame_start++;

//imshow("input", frame);

//waitKey(10);

}

/*判断当前像素与灰度中值差值的绝对值是否小于2倍标准差*/

double temp = vector_stddev(v_median);

double error = abs(framegray.at<uchar>(i, j) - v_median[v_median.size() / 2.0]) - 2 * temp;

if (error < 0) {

int diff = vector_diff(v_median);

double Mean_diff = mean_diff(v_median);

cout << "d(x)相邻帧像素差分值:" << diff << endl;

cout << "d(x)相邻帧像素差分值的平均值:" << Mean_diff << endl;

sort_vector(v_median);

cout << "m(x)最大值:" << v_median[0] << endl;

cout << "n(x)最小值:" << v_median[v_median.size() - 1] << endl;

cout << "坐标(" << i << "," << j << ")的灰度中值:" << v_median[v_median.size() / 2.0] << endl;

cout << "坐标(" << i << "," << j << ")的灰度标准差:" << temp << endl;

cout << "error:" << error << endl;

int gray_value = framegray.at<uchar>(i, j);

if (abs(gray_value - v_median[0]) < 2 * Mean_diff || abs(gray_value - v_median[v_median.size() - 1]) < 2 * Mean_diff) {

result.at<uchar>(i, j) = 0;

}

else

{

result.at<uchar>(i, j) = 255;

}

}

Frame_start = 0;

frame = capture.open("D:/testimage/video/fish.mp4");

cvtColor(frame, framegray, COLOR_RGB2GRAY);

}

cout << "--------"<< i <<"---------" << endl;

Frame_start = 0;

frame = capture.open("D:/testimage/video/fish.mp4");

cvtColor(frame, framegray, COLOR_RGB2GRAY);

}

namedWindow("result", WINDOW_AUTOSIZE);

imshow("result", result);

capture.release();

system("pause");

return 0;

}

浙公网安备 33010602011771号

浙公网安备 33010602011771号