add tcp_add_backlog

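1. When TCP runs in a lossy environment (between 1% and 10% packet loss), many SACK blocks can be exchanged, and on a busy sender these SACK blocks can be dropped if they have to be queued into the socket backlog.

The main cause is the poor performance of RACK/SACK processing, but we can still try to avoid dropping this valuable information, because losing it can lead to spurious timeouts and retransmissions.

The drops are caused by an overestimated skb->truesize, which comes from:

- drivers allocating about 2048 bytes (or more) as a fragment to hold an Ethernet frame;

- various pskb_may_pull() calls that bring the headers into skb->head. These calls may already have pulled the entire frame content, but skb->truesize cannot be lowered, because the stack does not know the true size of each fragment.

Backlog drops are even more visible on bidirectional flows, since their sk_rmem_alloc can be quite large.

So we give the backlog some extra room, because only the socket owner can selectively take action to lower memory usage, for example by collapsing the receive queues or partially pruning the out-of-order (OFO) queue.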

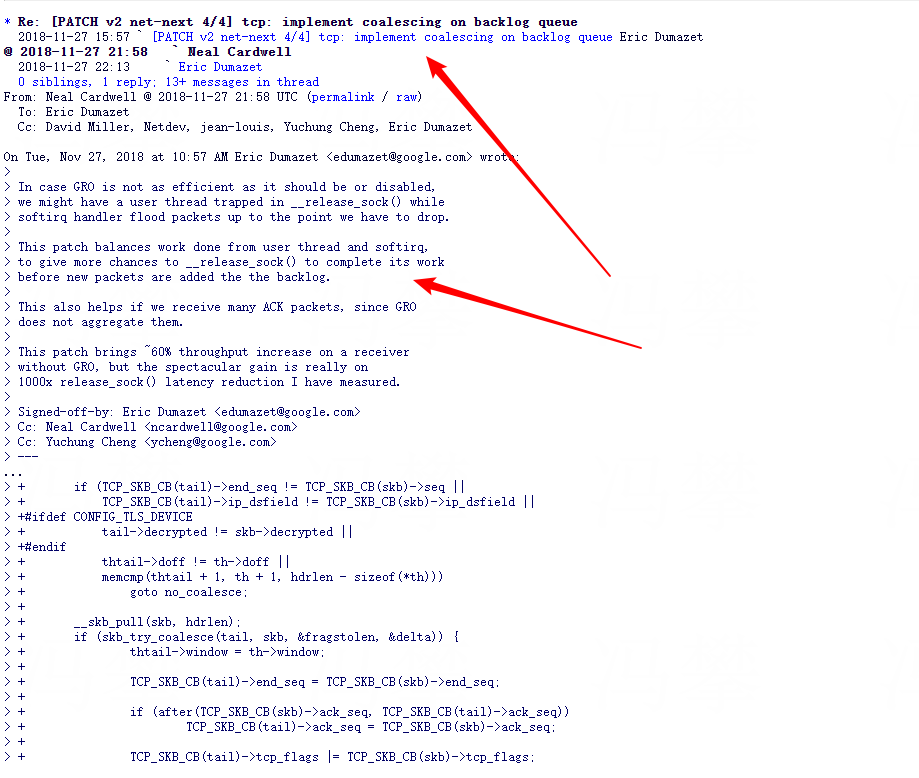
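The effect of truesize overestimation can be illustrated with a toy user-space model. Everything here is hypothetical: `struct toy_skb`, the 576-byte per-skb overhead, the 128-byte head and the 66-byte frame are made-up numbers, while the 2048-byte fragment follows the driver behaviour described above. `toy_condense()` mirrors the check in the original patch (recompute truesize only when no data is left in fragments); it is not the kernel's `skb_condense()`.

```c
#include <stdbool.h>

/* Hypothetical per-skb overhead (struct sk_buff + skb_shared_info);
 * the real value depends on architecture and kernel config. */
#define TOY_SKB_OVERHEAD 576u
/* Assumed driver behaviour: one 2048-byte fragment per received frame. */
#define TOY_FRAG_SIZE 2048u

struct toy_skb {
	unsigned int len;       /* frame bytes */
	unsigned int data_len;  /* bytes still living in fragments */
	unsigned int head_size; /* bytes allocated for the linear head */
	unsigned int truesize;  /* memory charged to the socket */
};

/* Driver receive: the whole frame sits in a fragment and is charged as one. */
static struct toy_skb toy_rx(unsigned int frame_len, unsigned int head_size)
{
	struct toy_skb skb = {
		.len = frame_len,
		.data_len = frame_len,
		.head_size = head_size,
		.truesize = TOY_SKB_OVERHEAD + head_size + TOY_FRAG_SIZE,
	};
	return skb;
}

/* Like pskb_may_pull(): move up to n bytes from fragments into the head. */
static void toy_pull(struct toy_skb *skb, unsigned int n)
{
	unsigned int moved = n < skb->data_len ? n : skb->data_len;

	skb->data_len -= moved;
	/* truesize is NOT lowered: the stack does not know the fragment size */
}

/* Like the original patch: if no data is left in fragments, fix truesize. */
static void toy_condense(struct toy_skb *skb)
{
	if (skb->data_len == 0)
		skb->truesize = TOY_SKB_OVERHEAD + skb->head_size;
}

/* Count how many identical small frames fit under a byte budget. */
static unsigned int toy_fit(unsigned int budget, bool condense)
{
	unsigned int used = 0, count = 0;

	for (;;) {
		struct toy_skb skb = toy_rx(66, 128);

		toy_pull(&skb, 66); /* headers pulled: here that is the whole frame */
		if (condense)
			toy_condense(&skb);
		if (used + skb.truesize > budget)
			return count;
		used += skb.truesize;
		count++;
	}
}
```

With a 64 KB budget, `toy_fit(65536, false)` admits 23 such frames (2752 bytes charged each), while `toy_fit(65536, true)` admits 93 (704 bytes each).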

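2. If GRO (Generic Receive Offload) is not as efficient as it should be, or is disabled, a user thread can end up trapped in __release_sock() while the softirq handler keeps flooding it with packets, up to the point where we have to drop them.

This patch balances the work done by the user thread and by the softirq, giving __release_sock() more chances to finish its work before new packets are added to the backlog. It also helps when we receive many ACK packets, because GRO does not aggregate them.

This patch (tcp: implement coalescing on backlog queue) brings about a 60% throughput increase on a receiver with GRO disabled, but the truly spectacular gain is the 1000x reduction in release_sock() latency that I measured.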
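The merge rules used by the coalescing code below can be summarized with a stripped-down model. `struct toy_seg` and the `F_*` constants are illustrative stand-ins (the flag values follow the TCP header bit layout); the TCP-options `memcmp()`, the TLS check and the actual payload merge performed by `skb_try_coalesce()` are deliberately left out.

```c
#include <stdbool.h>
#include <stdint.h>

/* TCP flag bits, same layout as byte 13 of the TCP header. */
#define F_FIN 0x01
#define F_SYN 0x02
#define F_RST 0x04
#define F_PSH 0x08
#define F_ACK 0x10
#define F_URG 0x20
#define F_ECE 0x40
#define F_CWR 0x80

/* Hypothetical stand-in for an skb sitting in the backlog. */
struct toy_seg {
	uint32_t seq, end_seq, ack_seq;
	uint8_t flags, dsfield;
	uint32_t gso_segs;
};

/* Mirrors the header checks in tcp_add_backlog() (options compare omitted). */
static bool toy_can_coalesce(const struct toy_seg *tail, const struct toy_seg *skb)
{
	if (tail->end_seq != skb->seq)
		return false;                   /* not contiguous */
	if (tail->dsfield != skb->dsfield)
		return false;                   /* different DSCP/ECN byte */
	if ((tail->flags | skb->flags) & (F_SYN | F_RST | F_URG))
		return false;
	if (!((tail->flags & skb->flags) & F_ACK))
		return false;                   /* both must carry ACK */
	if ((tail->flags ^ skb->flags) & (F_ECE | F_CWR))
		return false;                   /* ECN flags must match */
	return true;
}

static void toy_merge(struct toy_seg *tail, const struct toy_seg *skb)
{
	uint32_t segs = tail->gso_segs + skb->gso_segs;

	tail->end_seq = skb->end_seq;
	/* wrap-safe "skb->ack_seq is not before tail->ack_seq" */
	if ((int32_t)(skb->ack_seq - tail->ack_seq) >= 0)
		tail->ack_seq = skb->ack_seq;
	tail->flags |= skb->flags;              /* e.g. propagate FIN */
	tail->gso_segs = segs < 0xFFFF ? segs : 0xFFFF;
}

/* Append skb to a backlog array; returns the new number of entries. */
static unsigned int toy_backlog_add(struct toy_seg *q, unsigned int n,
				    const struct toy_seg *skb)
{
	if (n > 0 && toy_can_coalesce(&q[n - 1], skb)) {
		toy_merge(&q[n - 1], skb);
		return n;
	}
	q[n] = *skb;
	return n + 1;
}
```

Feeding ten contiguous 1448-byte ACK segments into an empty array leaves a single entry with `gso_segs == 10`, so the socket owner has one entry to process instead of ten; a segment that leaves a sequence gap starts a new entry.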

```c
bool tcp_add_backlog(struct sock *sk, struct sk_buff *skb)
{
	u32 limit = READ_ONCE(sk->sk_rcvbuf) + READ_ONCE(sk->sk_sndbuf);
	struct skb_shared_info *shinfo;
	const struct tcphdr *th;
	struct tcphdr *thtail;
	struct sk_buff *tail;
	unsigned int hdrlen;
	bool fragstolen;
	u32 gso_segs;
	int delta;

	/* In case all data was pulled from skb frags (in __pskb_pull_tail()),
	 * we can fix skb->truesize to its real value to avoid future drops.
	 * This is valid because skb is not yet charged to the socket.
	 * It has been noticed pure SACK packets were sometimes dropped
	 * (if cooked by drivers without copybreak feature).
	 */
	skb_condense(skb);

	skb_dst_drop(skb);

	if (unlikely(tcp_checksum_complete(skb))) {
		bh_unlock_sock(sk);
		__TCP_INC_STATS(sock_net(sk), TCP_MIB_CSUMERRORS);
		__TCP_INC_STATS(sock_net(sk), TCP_MIB_INERRS);
		return true;
	}

	/* Attempt coalescing to last skb in backlog, even if we are
	 * above the limits.
	 * This is okay because skb capacity is limited to MAX_SKB_FRAGS.
	 */
	th = (const struct tcphdr *)skb->data;
	hdrlen = th->doff * 4;
	shinfo = skb_shinfo(skb);

	/* Treat a non-GSO skb as one segment carrying its whole payload. */
	if (!shinfo->gso_size)
		shinfo->gso_size = skb->len - hdrlen;

	if (!shinfo->gso_segs)
		shinfo->gso_segs = 1;

	tail = sk->sk_backlog.tail;
	if (!tail)
		goto no_coalesce;
	thtail = (struct tcphdr *)tail->data;

	if (TCP_SKB_CB(tail)->end_seq != TCP_SKB_CB(skb)->seq ||
	    TCP_SKB_CB(tail)->ip_dsfield != TCP_SKB_CB(skb)->ip_dsfield ||
	    ((TCP_SKB_CB(tail)->tcp_flags |
	      TCP_SKB_CB(skb)->tcp_flags) & (TCPHDR_SYN | TCPHDR_RST | TCPHDR_URG)) ||
	    !((TCP_SKB_CB(tail)->tcp_flags &
	      TCP_SKB_CB(skb)->tcp_flags) & TCPHDR_ACK) ||
	    ((TCP_SKB_CB(tail)->tcp_flags ^
	      TCP_SKB_CB(skb)->tcp_flags) & (TCPHDR_ECE | TCPHDR_CWR)) ||
#ifdef CONFIG_TLS_DEVICE
	    tail->decrypted != skb->decrypted ||
#endif
	    thtail->doff != th->doff ||
	    memcmp(thtail + 1, th + 1, hdrlen - sizeof(*th)))
		goto no_coalesce;

	__skb_pull(skb, hdrlen);
	if (skb_try_coalesce(tail, skb, &fragstolen, &delta)) {
		TCP_SKB_CB(tail)->end_seq = TCP_SKB_CB(skb)->end_seq;

		if (likely(!before(TCP_SKB_CB(skb)->ack_seq, TCP_SKB_CB(tail)->ack_seq))) {
			TCP_SKB_CB(tail)->ack_seq = TCP_SKB_CB(skb)->ack_seq;
			thtail->window = th->window;
		}

		/* We have to update both TCP_SKB_CB(tail)->tcp_flags and
		 * thtail->fin, so that the fast path in tcp_rcv_established()
		 * is not entered if we append a packet with a FIN.
		 * SYN, RST, URG are not present.
		 * ACK is set on both packets.
		 * PSH : we do not really care in TCP stack,
		 *       at least for 'GRO' packets.
		 */
		thtail->fin |= th->fin;
		TCP_SKB_CB(tail)->tcp_flags |= TCP_SKB_CB(skb)->tcp_flags;

		if (TCP_SKB_CB(skb)->has_rxtstamp) {
			TCP_SKB_CB(tail)->has_rxtstamp = true;
			tail->tstamp = skb->tstamp;
			skb_hwtstamps(tail)->hwtstamp = skb_hwtstamps(skb)->hwtstamp;
		}

		/* Not as strict as GRO. We only need to carry mss max value */
		skb_shinfo(tail)->gso_size = max(shinfo->gso_size,
						 skb_shinfo(tail)->gso_size);

		gso_segs = skb_shinfo(tail)->gso_segs + shinfo->gso_segs;
		skb_shinfo(tail)->gso_segs = min_t(u32, gso_segs, 0xFFFF);

		sk->sk_backlog.len += delta;
		__NET_INC_STATS(sock_net(sk),
				LINUX_MIB_TCPBACKLOGCOALESCE);
		kfree_skb_partial(skb, fragstolen);
		return false;
	}
	/* Not merged: restore the TCP header and queue skb on its own. */
	__skb_push(skb, hdrlen);

no_coalesce:
	/* Only socket owner can try to collapse/prune rx queues
	 * to reduce memory overhead, so add a little headroom here.
	 * Few sockets backlog are possibly concurrently non empty.
	 */
	limit += 64*1024;

	if (unlikely(sk_add_backlog(sk, skb, limit))) {
		bh_unlock_sock(sk);
		__NET_INC_STATS(sock_net(sk), LINUX_MIB_TCPBACKLOGDROP);
		return true;
	}
	return false;
}
```

Adding an skb to the backlog list cannot shrink the memory used by the receive queues (sk_receive_queue and out_of_order_queue) through collapse/prune operations; only the socket owner can do that. Therefore, before enqueuing, tcp_add_backlog() adds an extra 64 KB on top of the sum of the receive and send buffer sizes, leaving enough headroom for the enqueue to succeed in most cases.
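The admission test itself is short. The sketch below reproduces its shape in user space: as in `sk_add_backlog()`, the bytes already queued (backlog length plus `sk_rmem_alloc`) are compared against the limit before the new skb is charged. `struct toy_sock` and the example buffer sizes are assumptions; the pfmemalloc check and all locking are omitted.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical snapshot of the socket fields involved in the check. */
struct toy_sock {
	uint32_t rcvbuf;      /* sk_rcvbuf */
	uint32_t sndbuf;      /* sk_sndbuf */
	uint32_t rmem_alloc;  /* bytes already charged to the receive queues */
	uint32_t backlog_len; /* sum of truesize of skbs in the backlog */
};

/* Limit used by tcp_add_backlog(): rcvbuf + sndbuf + 64 KB of headroom. */
static uint32_t toy_backlog_limit(const struct toy_sock *sk)
{
	return sk->rcvbuf + sk->sndbuf + 64 * 1024;
}

/* Same shape as sk_rcvqueues_full() + sk_add_backlog(); true means dropped. */
static bool toy_add_backlog(struct toy_sock *sk, uint32_t truesize)
{
	uint32_t qsize = sk->backlog_len + sk->rmem_alloc;

	if (qsize > toy_backlog_limit(sk))
		return true;            /* would count as LINUX_MIB_TCPBACKLOGDROP */
	sk->backlog_len += truesize;
	return false;
}
```

With sk_rcvbuf = 128 KB, sk_sndbuf = 16 KB, 128 KB already charged to the receive queue and 2752-byte skbs, 30 skbs are accepted before the first drop; without the extra 64 KB, only 6 would be.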

As discussed in an earlier post on when the backlog list is processed, this processing is triggered from both tcp_sendmsg and tcp_recvmsg.
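As a reminder of where the backlog fits, here is a toy, single-threaded model of the ownership protocol: the softirq path processes a packet directly unless a process context (for example tcp_sendmsg() or tcp_recvmsg()) holds the socket, in which case the packet is deferred to the backlog and drained when the lock is released. All names are hypothetical; real locking, `sk_backlog_rcv()` and the byte-based limit are not modelled (a fixed packet-count cap stands in for the limit).

```c
#include <stdbool.h>

#define TOY_BACKLOG_MAX 64

/* Hypothetical model: one socket, a "user owns it" flag, and a backlog. */
struct toy_sock {
	bool owned_by_user;
	int backlog[TOY_BACKLOG_MAX];
	int backlog_n;
	int processed;          /* packets handled by the protocol */
};

static void toy_do_rcv(struct toy_sock *sk, int pkt)
{
	(void)pkt;
	sk->processed++;
}

/* Softirq side: process now, or defer to the backlog if a user owns the socket. */
static bool toy_softirq_rx(struct toy_sock *sk, int pkt)
{
	if (!sk->owned_by_user) {
		toy_do_rcv(sk, pkt);
		return true;
	}
	if (sk->backlog_n == TOY_BACKLOG_MAX)
		return false;           /* dropped */
	sk->backlog[sk->backlog_n++] = pkt;
	return true;
}

/* lock_sock() analogue: the syscall path takes ownership. */
static void toy_lock_sock(struct toy_sock *sk)
{
	sk->owned_by_user = true;
}

/* release_sock() analogue: drain the backlog, then give up ownership. */
static void toy_release_sock(struct toy_sock *sk)
{
	for (int i = 0; i < sk->backlog_n; i++)
		toy_do_rcv(sk, sk->backlog[i]);
	sk->backlog_n = 0;
	sk->owned_by_user = false;
}
```

In the real kernel the drain loop runs with bottom halves enabled, which is exactly why a flood of new packets can keep __release_sock() busy, as described in point 2 above.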

The code above evolved from two patches: patch 1, "tcp: add tcp_add_backlog()", and patch 2, "tcp: implement coalescing on backlog queue".

The original commit:

https://git.kernel.org/pub/scm/linux/kernel/git/netdev/net-next.git/commit/net/ipv4?id=c9c3321257e1b95be9b375f811fb250162af8d39

```diff
From: Eric Dumazet <edumazet@google.com>

When TCP operates in lossy environments (between 1 and 10 % packet
losses), many SACK blocks can be exchanged, and I noticed we could
drop them on busy senders, if these SACK blocks have to be queued
into the socket backlog.

While the main cause is the poor performance of RACK/SACK processing,
we can try to avoid these drops of valuable information that can lead to
spurious timeouts and retransmits.

Cause of the drops is the skb->truesize overestimation caused by :

- drivers allocating ~2048 (or more) bytes as a fragment to hold an
  Ethernet frame.

- various pskb_may_pull() calls bringing the headers into skb->head
  might have pulled all the frame content, but skb->truesize could
  not be lowered, as the stack has no idea of each fragment truesize.

The backlog drops are also more visible on bidirectional flows, since
their sk_rmem_alloc can be quite big.

Let's add some room for the backlog, as only the socket owner
can selectively take action to lower memory needs, like collapsing
receive queues or partial ofo pruning.

Signed-off-by: Eric Dumazet <edumazet@google.com>
Cc: Yuchung Cheng <ycheng@google.com>
Cc: Neal Cardwell <ncardwell@google.com>
---
 include/net/tcp.h   |  1 +
 net/ipv4/tcp_ipv4.c | 33 +++++++++++++++++++++++++++++----
 net/ipv6/tcp_ipv6.c |  5 +----
 3 files changed, 31 insertions(+), 8 deletions(-)

diff --git a/include/net/tcp.h b/include/net/tcp.h
index 25d64f6de69e1f639ed1531bf2d2df3f00fd76a2..5f5f09f6e019682ef29c864d2f43a8f247fcdd9a 100644
--- a/include/net/tcp.h
+++ b/include/net/tcp.h
@@ -1163,6 +1163,7 @@ static inline void tcp_prequeue_init(struct tcp_sock *tp)
 }
 
 bool tcp_prequeue(struct sock *sk, struct sk_buff *skb);
+bool tcp_add_backlog(struct sock *sk, struct sk_buff *skb);
 
 #undef STATE_TRACE
 
diff --git a/net/ipv4/tcp_ipv4.c b/net/ipv4/tcp_ipv4.c
index ad41e8ecf796bba1bd6d9ed155ca4a57ced96844..53e80cd004b6ce401c3acbb4b243b243c5c3c4a3 100644
--- a/net/ipv4/tcp_ipv4.c
+++ b/net/ipv4/tcp_ipv4.c
@@ -1532,6 +1532,34 @@ bool tcp_prequeue(struct sock *sk, struct sk_buff *skb)
 }
 EXPORT_SYMBOL(tcp_prequeue);
 
+bool tcp_add_backlog(struct sock *sk, struct sk_buff *skb)
+{
+	u32 limit = sk->sk_rcvbuf + sk->sk_sndbuf;
+
+	/* Only socket owner can try to collapse/prune rx queues
+	 * to reduce memory overhead, so add a little headroom here.
+	 * Few sockets backlog are possibly concurrently non empty.
+	 */
+	limit += 64*1024;
+
+	/* In case all data was pulled from skb frags (in __pskb_pull_tail()),
+	 * we can fix skb->truesize to its real value to avoid future drops.
+	 * This is valid because skb is not yet charged to the socket.
+	 * It has been noticed pure SACK packets were sometimes dropped
+	 * (if cooked by drivers without copybreak feature).
+	 */
+	if (!skb->data_len)
+		skb->truesize = SKB_TRUESIZE(skb_end_offset(skb));
+
+	if (unlikely(sk_add_backlog(sk, skb, limit))) {
+		bh_unlock_sock(sk);
+		__NET_INC_STATS(sock_net(sk), LINUX_MIB_TCPBACKLOGDROP);
+		return true;
+	}
+	return false;
+}
+EXPORT_SYMBOL(tcp_add_backlog);
+
 /*
  *	From tcp_input.c
  */
@@ -1662,10 +1690,7 @@ process:
 	if (!sock_owned_by_user(sk)) {
 		if (!tcp_prequeue(sk, skb))
 			ret = tcp_v4_do_rcv(sk, skb);
-	} else if (unlikely(sk_add_backlog(sk, skb,
-					   sk->sk_rcvbuf + sk->sk_sndbuf))) {
-		bh_unlock_sock(sk);
-		__NET_INC_STATS(net, LINUX_MIB_TCPBACKLOGDROP);
+	} else if (tcp_add_backlog(sk, skb)) {
 		goto discard_and_relse;
 	}
 	bh_unlock_sock(sk);
```

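In a kernel source tree, search the history for "tcp: implement coalescing on backlog queue" (for example with `git log --grep`) to see how this function was updated afterwards.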

The backlog limit itself goes back to an earlier patch series; related mailing-list threads:

- Re: [PATCH V3 1/8] net: add limit for socket backlog, by Arnaldo Carvalho de Melo, 2010-03-05 13:00 UTC
- [PATCH V2 1/7] net: add limit for socket backlog, by Zhu Yi, 2010-03-03 8:36 UTC

The patch description reads:

We got system OOM while running some UDP netperf testing on the loopback device. The case is multiple senders sent stream UDP packets to a single receiver via loopback on local host. Of course, the receiver is not able to handle all the packets in time. But we surprisingly found that these packets were not discarded due to the receiver's sk->sk_rcvbuf limit. Instead, they are kept queuing to sk->sk_backlog and finally ate up all the memory. We believe this is a secure hole that a none privileged user can crash the system.

The root cause for this problem is, when the receiver is doing __release_sock() (i.e. after userspace recv, kernel udp_recvmsg -> skb_free_datagram_locked -> release_sock), it moves skbs from backlog to sk_receive_queue with the softirq enabled. In the above case, multiple busy senders will almost make it an endless loop. The skbs in the backlog end up eat all the system memory.

The issue is not only for UDP. Any protocols using socket backlog is potentially affected. The patch adds limit for socket backlog so that the backlog size cannot be expanded endlessly.
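The failure mode described in that patch can be reproduced with a toy simulation: when packets arrive faster than the receiver drains them, an unlimited backlog grows without bound, while a capped one stays bounded and drops the excess. This is only an illustration; the limit here counts packets rather than bytes, and all numbers are made up.

```c
#include <stdint.h>

/* Result of a toy run: what is left queued and what the limit rejected. */
struct toy_result {
	uint64_t queued;
	uint64_t dropped;
};

/* Each tick, `in` packets arrive and the receiver drains up to `out`.
 * limit == 0 means "no backlog limit" (the behaviour before the patch). */
static struct toy_result toy_simulate(unsigned int ticks, unsigned int in,
				      unsigned int out, uint64_t limit)
{
	struct toy_result r = { 0, 0 };

	for (unsigned int t = 0; t < ticks; t++) {
		for (unsigned int i = 0; i < in; i++) {
			if (limit && r.queued >= limit)
				r.dropped++;
			else
				r.queued++;
		}
		r.queued = r.queued > out ? r.queued - out : 0;
	}
	return r;
}
```

For example, `toy_simulate(1000, 10, 8, 0)` ends with 2000 queued packets and no drops, and keeps growing with more ticks, whereas `toy_simulate(1000, 10, 8, 100)` settles at 92 queued packets after 1908 drops.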

A later discussion (November 2018) on making room in the backlog by dropping packets from its head:

>> On Wed, Nov 21, 2018 at 9:52 AM, Eric Dumazet <edumazet@google.com> wrote:
>>> Under high stress, and if GRO or coalescing does not help,
>>> we better make room in backlog queue to be able to keep latest
>>> packet coming.
>>>
>>> This generally helps fast recovery, given that we often receive
>>> packets in order.
>>
>> I like the benefit of fast recovery but I am a bit leery about head
>> drop causing HoLB on large read, while tail drops can be repaired by
>> RACK and TLP already. Hmm -
>
> This is very different pattern here.
>
> We have a train of packets coming, the last packet is not a TLP probe...
>
> Consider this train coming from an old stack without burst control nor pacing.
>
> This patch guarantees last packet will be processed, and either :
>
> 1) We are a receiver, we will send a SACK. Sender will typically start recovery
>
> 2) We are a sender, we will process the most recent ACK sent by the receiver.
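The disagreement above is about drop policy. A toy fixed-capacity queue (hypothetical; it counts packets rather than bytes and ignores coalescing) shows the difference: tail drop keeps the oldest packets and rejects the newest, while head drop evicts the oldest so that the most recent packet, such as the latest SACK or ACK, is always kept.

```c
#include <stdbool.h>

#define TOY_CAP 4

/* Hypothetical fixed-capacity backlog holding packet numbers. */
struct toy_backlog {
	int pkt[TOY_CAP];
	int head;   /* index of the oldest packet */
	int n;      /* number of queued packets */
};

/* Tail drop: when full, the arriving packet is discarded. */
static bool toy_enqueue_tail_drop(struct toy_backlog *q, int pkt)
{
	if (q->n == TOY_CAP)
		return false;
	q->pkt[(q->head + q->n) % TOY_CAP] = pkt;
	q->n++;
	return true;
}

/* Head drop: when full, discard the oldest packet to make room. */
static void toy_enqueue_head_drop(struct toy_backlog *q, int pkt)
{
	if (q->n == TOY_CAP) {
		q->head = (q->head + 1) % TOY_CAP;
		q->n--;
	}
	q->pkt[(q->head + q->n) % TOY_CAP] = pkt;
	q->n++;
}

/* Most recently queued packet (queue must be non-empty). */
static int toy_newest(const struct toy_backlog *q)
{
	return q->pkt[(q->head + q->n - 1) % TOY_CAP];
}
```

Enqueuing packets 1 to 10 into a capacity-4 queue leaves packets 1 to 4 with tail drop and packets 7 to 10 with head drop, which is the "last packet will be processed" guarantee argued for above, at the cost of the head-of-line blocking concern raised in the reply.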
