chisel analysis, part 3: traffic flow buffering

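Our service currently uses chisel to proxy intranet traffic to an Internet-facing node in the cloud. Local traffic is read from the tun interface, encapsulated, and sent to the cloud through chisel's SSH tunnel.

This post looks mainly at the traffic flow on the chisel server side:

how the client and server are designed for reading and writing, and how mux and demux work.

After the client receives a request from the browser, the request goes through a series of steps and enters the pipeRemote logic: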

    // newMux returns a mux that runs over the given connection.
    func newMux(p packetConn) *mux {
        m := &mux{
            conn:             p,
            incomingChannels: make(chan NewChannel, chanSize),
            globalResponses:  make(chan interface{}, 1),
            incomingRequests: make(chan *Request, chanSize),
            errCond:          newCond(),
        }
        if debugMux {
            m.chanList.offset = atomic.AddUint32(&globalOff, 1)
        }

        go m.loop()
        return m
    }

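- sshConn := p.sshTun.getSSH(ctx) obtains the current SSH connection.

- dst, reqs, err := sshConn.OpenChannel("chisel", []byte(p.remote.Remote())) opens a channel for the current request and gives it a channel id, so that the request can later find its own channel inside the shared TCP stream.

- s, r := cio.Pipe(src, dst) pipes traffic in both directions between the browser (src) and the remote chisel server (dst).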

    func Pipe(src io.ReadWriteCloser, dst io.ReadWriteCloser) (int64, int64) {
        var sent, received int64
        var wg sync.WaitGroup
        var o sync.Once
        close := func() {
            src.Close()
            dst.Close()
        }
        wg.Add(2)
        go func() {
            received, _ = io.Copy(src, dst)
            o.Do(close)
            wg.Done()
        }()
        go func() {
            sent, _ = io.Copy(dst, src)
            o.Do(close)
            wg.Done()
        }()
        wg.Wait()
        return sent, received
    }

Pipe calls io.Copy to forward traffic between src and dst. For details on io.Copy, see the separate io.Copy analysis.
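The two-goroutine io.Copy pattern can be tried in isolation. The following is a minimal sketch that uses in-memory net.Pipe connections in place of the browser socket and the SSH channel. The names pipe and relay are made up for this illustration and are not part of chisel.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"sync"
)

// pipe copies in both directions and closes both ends once either side ends.
func pipe(src, dst io.ReadWriteCloser) (sent, received int64) {
	var wg sync.WaitGroup
	var once sync.Once
	closeBoth := func() {
		src.Close()
		dst.Close()
	}
	wg.Add(2)
	go func() {
		defer wg.Done()
		received, _ = io.Copy(src, dst)
		once.Do(closeBoth)
	}()
	go func() {
		defer wg.Done()
		sent, _ = io.Copy(dst, src)
		once.Do(closeBoth)
	}()
	wg.Wait()
	return sent, received
}

// relay sends msg from a fake browser through pipe to a fake echo server and
// returns the echoed reply plus the byte counts reported by pipe.
func relay(msg string) (string, int64, int64) {
	browser, src := net.Pipe() // src is the end the proxy holds
	dst, server := net.Pipe()  // dst stands in for the SSH channel

	go func() { // echo server: read the message once and send it back
		buf := make([]byte, len(msg))
		if _, err := io.ReadFull(server, buf); err == nil {
			server.Write(buf)
		}
	}()

	type counts struct{ sent, received int64 }
	done := make(chan counts, 1)
	go func() {
		s, r := pipe(src, dst)
		done <- counts{s, r}
	}()

	browser.Write([]byte(msg))
	reply := make([]byte, len(msg))
	io.ReadFull(browser, reply)
	browser.Close() // ends the src->dst copy, which then closes both ends
	c := <-done
	return string(reply), c.sent, c.received
}

func main() {
	reply, sent, received := relay("hello")
	fmt.Println(reply, sent, received)
}
```

Closing either end is enough to unblock both copies, which is why the sync.Once wrapper around the close function matters.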

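Multiple channels share a single TCP SSH stream, so which channel does a given packet on that stream belong to? The answer is the same as for TCP message framing (splitting and coalescing of messages).

In other words, the channel wraps its own Read and Write functions. The channel's Write prepends a header that frames the data, then calls the channel's conn to send the packet over TCP.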
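The framing idea can be sketched as follows. This is an illustration only, assuming the 9-byte data header described in the WriteExtended comments (1 byte message type, 4 bytes channel id, 4 bytes data length). The helper names encodeData and decodeData are hypothetical; the value 94 is SSH_MSG_CHANNEL_DATA from RFC 4254.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

const (
	msgChannelData = 94 // SSH_MSG_CHANNEL_DATA (RFC 4254)
	headerLen      = 9  // 1 byte type + 4 bytes channel id + 4 bytes length
)

// encodeData frames payload so the receiver can tell which channel owns it.
func encodeData(chanID uint32, payload []byte) []byte {
	pkt := make([]byte, headerLen+len(payload))
	pkt[0] = msgChannelData
	binary.BigEndian.PutUint32(pkt[1:], chanID)
	binary.BigEndian.PutUint32(pkt[5:], uint32(len(payload)))
	copy(pkt[headerLen:], payload)
	return pkt
}

// decodeData is the inverse: it returns the channel id and the payload.
func decodeData(pkt []byte) (uint32, []byte, error) {
	if len(pkt) < headerLen || pkt[0] != msgChannelData {
		return 0, nil, errors.New("not a channel data packet")
	}
	id := binary.BigEndian.Uint32(pkt[1:])
	n := binary.BigEndian.Uint32(pkt[5:])
	if uint32(len(pkt)-headerLen) != n {
		return 0, nil, errors.New("length field does not match payload")
	}
	return id, pkt[headerLen:], nil
}

func main() {
	pkt := encodeData(7, []byte("abc"))
	id, data, err := decodeData(pkt)
	fmt.Println(len(pkt), id, string(data), err)
}
```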

The channel's io.ReadWriteCloser implementation

Channel initialization

    func (m *mux) newChannel(chanType string, direction channelDirection, extraData []byte) *channel {
        ch := &channel{
            remoteWin:        window{Cond: newCond()},
            myWindow:         channelWindowSize,
            pending:          newBuffer(), // intermediate buffer for channel reads
            extPending:       newBuffer(),
            direction:        direction,
            incomingRequests: make(chan *Request, chanSize),
            msg:              make(chan interface{}, chanSize),
            chanType:         chanType,
            extraData:        extraData,
            mux:              m,
            packetPool:       make(map[uint32][]byte),
        }
        fmt.Printf("addr:%p newChannel:%#v\n\n", ch, *ch)
        ch.localId = m.chanList.add(ch)
        fmt.Printf("addr:%p newChannel:%#v ---> localid:%d \n\n", ch, *ch, ch.localId)
        return ch
    }

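The channel's Write interface

    func (ch *channel) Write(data []byte) (int, error) {
        if !ch.decided {
            return 0, errUndecided
        }
        return ch.WriteExtended(data, 0)
    }

Write calls WriteExtended to write data to the stream.

ch.remoteWin.reserve(space) reserves space from the channel's window, where space = min(ch.maxRemotePayload, len(data)). So the channel, at the application layer, has its own window similar to the one in TCP (a send window and a receive window).

As the library comment puts it: reserve reserves win from the available window capacity. If no capacity remains, reserve will block. reserve may return less than requested.

packet = make([]byte, want) allocates the channel's packet buffer when the cached one is too small; the for len(data) > 0 loop then sends the data, blocking as needed.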

    // WriteExtended writes data to a specific extended stream. These streams are
    // used, for example, for stderr.
    func (ch *channel) WriteExtended(data []byte, extendedCode uint32) (n int, err error) {
        if ch.sentEOF {
            return 0, io.EOF
        }
        // 1 byte message type, 4 bytes remoteId, 4 bytes data length
        opCode := byte(msgChannelData)
        headerLength := uint32(9)
        if extendedCode > 0 {
            headerLength += 4
            opCode = msgChannelExtendedData
        }

        ch.writeMu.Lock()
        packet := ch.packetPool[extendedCode]
        // We don't remove the buffer from packetPool, so
        // WriteExtended calls from different goroutines will be
        // flagged as errors by the race detector.
        ch.writeMu.Unlock()

        for len(data) > 0 {
            space := min(ch.maxRemotePayload, len(data))
            if space, err = ch.remoteWin.reserve(space); err != nil {
                return n, err
            }
            if want := headerLength + space; uint32(cap(packet)) < want {
                packet = make([]byte, want)
            } else {
                packet = packet[:want]
            }

            todo := data[:space]

            packet[0] = opCode
            binary.BigEndian.PutUint32(packet[1:], ch.remoteId)
            if extendedCode > 0 {
                binary.BigEndian.PutUint32(packet[5:], uint32(extendedCode))
            }
            binary.BigEndian.PutUint32(packet[headerLength-4:], uint32(len(todo)))
            copy(packet[headerLength:], todo)
            if err = ch.writePacket(packet); err != nil {
                return n, err
            }

            n += len(todo)
            data = data[len(todo):]
        }

        ch.writeMu.Lock()
        // Keep the channel's scratch buffer so later writes do not have to
        // allocate and free it again with make.
        ch.packetPool[extendedCode] = packet
        ch.writeMu.Unlock()

        return n, err
    }
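The blocking behaviour of reserve can be illustrated with a small credit window built on sync.Cond. This is a simplified sketch, not the library's implementation: it leaves out the closed state and the error return that the real reserve has, and newWindow is a name chosen here for the example.

```go
package main

import (
	"fmt"
	"sync"
)

// window is a simplified send window: a byte credit guarded by a condition variable.
type window struct {
	mu   sync.Mutex
	cond *sync.Cond
	win  uint32
}

func newWindow(initial uint32) *window {
	w := &window{win: initial}
	w.cond = sync.NewCond(&w.mu)
	return w
}

// add returns credit to the window, as happens when the peer sends a window adjust.
func (w *window) add(n uint32) {
	w.mu.Lock()
	w.win += n
	w.mu.Unlock()
	w.cond.Broadcast()
}

// reserve blocks while the window is empty, then takes at most want bytes.
// It may return less than requested.
func (w *window) reserve(want uint32) uint32 {
	w.mu.Lock()
	defer w.mu.Unlock()
	for w.win == 0 {
		w.cond.Wait()
	}
	if want > w.win {
		want = w.win
	}
	w.win -= want
	return want
}

func main() {
	w := newWindow(4)
	fmt.Println(w.reserve(10)) // only part of the request fits
	go w.add(3)                // credit arrives later from another goroutine
	fmt.Println(w.reserve(10)) // blocks until add has run
}
```

A writer that gets less than it asked for simply loops, which is what the for len(data) > 0 loop in WriteExtended does.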

Finally, the packet is sent through the stream interface of the mux's conn, which is a handshakeTransport.

    // writePacket sends a packet. If the packet is a channel close, it updates
    // sentClose. This method takes the lock c.writeMu.
    func (ch *channel) writePacket(packet []byte) error {
        ch.writeMu.Lock()
        if ch.sentClose {
            ch.writeMu.Unlock()
            return io.EOF
        }
        ch.sentClose = (packet[0] == msgChannelClose)
        err := ch.mux.conn.writePacket(packet)
        ch.writeMu.Unlock()
        return err
    }

This follows from the initialization logic:

    // NewClientConn establishes an authenticated SSH connection using c
    // as the underlying transport. The Request and NewChannel channels
    // must be serviced or the connection will hang.
    func NewClientConn(c net.Conn, addr string, config *ClientConfig) (Conn, <-chan NewChannel, <-chan *Request, error) {
        // ... (elided in this excerpt)
        conn := &connection{
            sshConn: sshConn{conn: c, user: fullConf.User},
        }

        if err := conn.clientHandshake(addr, &fullConf); err != nil {
            // ... (error handling elided in this excerpt)
        }
        conn.mux = newMux(conn.transport) // ----> conn.mux.conn = conn.transport
        return conn, conn.mux.incomingChannels, conn.mux.incomingRequests, nil
    }

That is:

    c.transport = newClientTransport(
        newTransport(c.sshConn.conn, config.Rand, true /* is client */),
        c.clientVersion, c.serverVersion, config, dialAddress, c.sshConn.RemoteAddr())

The construction chain is c.transport <----- newClientTransport <---- newHandshakeTransport <--- newTransport

keyconn = transport ---> t.conn = keyconn ---> c.transport = t

    func newTransport(rwc io.ReadWriteCloser, rand io.Reader, isClient bool) *transport {
        t := &transport{
            bufReader: bufio.NewReader(rwc), // c.sshConn.conn
            bufWriter: bufio.NewWriter(rwc), // c.sshConn.conn
            rand:      rand,
            reader: connectionState{
                // ... (elided in this excerpt)
            },
            // ... (elided in this excerpt)
        }
        // ... (elided in this excerpt)
    }

    func newClientTransport(conn keyingTransport, clientVersion, serverVersion []byte, config *ClientConfig, dialAddr string, addr net.Addr) *handshakeTransport {
        t := newHandshakeTransport(conn, &config.Config, clientVersion, serverVersion)
        t.dialAddress = dialAddr
        t.remoteAddr = addr
        t.hostKeyCallback = config.HostKeyCallback
        t.bannerCallback = config.BannerCallback
        if config.HostKeyAlgorithms != nil {
            t.hostKeyAlgorithms = config.HostKeyAlgorithms
        } else {
            t.hostKeyAlgorithms = supportedHostKeyAlgos
        }
        go t.readLoop()
        go t.kexLoop()
        return t
    }

So ch.mux.conn.writePacket calls the handshakeTransport method:

    func (t *handshakeTransport) writePacket(p []byte) error {
        t.mu.Lock()
        defer t.mu.Unlock()
        if t.writeError != nil {
            return t.writeError
        }

        if t.sentInitMsg != nil {
            // Copy the packet so the writer can reuse the buffer.
            cp := make([]byte, len(p))
            copy(cp, p)
            t.pendingPackets = append(t.pendingPackets, cp)
            return nil
        }

        if t.writeBytesLeft > 0 {
            t.writeBytesLeft -= int64(len(p))
        } else {
            t.requestKeyExchange()
        }

        if t.writePacketsLeft > 0 {
            t.writePacketsLeft--
        } else {
            t.requestKeyExchange()
        }

        if err := t.pushPacket(p); err != nil {
            t.writeError = err
        }

        return nil
    }

handshakeTransport.writePacket in turn calls pushPacket:

    func (t *handshakeTransport) pushPacket(p []byte) error {
        if debugHandshake {
            t.printPacket(p, true)
        }
        return t.conn.writePacket(p)
    }

which calls writePacket on the transport created by newTransport:

    func (t *transport) writePacket(packet []byte) error {
        if debugTransport {
            t.printPacket(packet, true)
        }
        return t.writer.writePacket(t.bufWriter, t.rand, packet)
    }

which calls (s *connectionState) writePacket(w *bufio.Writer, rand io.Reader, packet []byte) error.

Viewed procedurally, that is the whole write path.

The channel's Read interface

    func (ch *channel) Read(data []byte) (int, error) {
        if !ch.decided {
            return 0, errUndecided
        }
        return ch.ReadExtended(data, 0)
    }

Read calls ReadExtended to read data from the stream.

n, err = c.pending.Read(data) is followed by c.adjustWindow(uint32(n)): after n bytes have been read, the peer is told that this channel's receive window has grown by n.
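The read-then-credit step can be sketched with a small wrapper around any io.Reader. This is an illustration under assumptions: creditReader, its adjust hook, and drain are hypothetical names, and the hook only counts bytes, whereas the real adjustWindow sends a window-adjust message to the peer.

```go
package main

import (
	"fmt"
	"io"
	"strings"
)

// creditReader reports every consumed byte count through adjust, mirroring how
// ReadExtended calls adjustWindow after reading from the pending buffer.
type creditReader struct {
	r      io.Reader
	adjust func(n uint32) // hypothetical hook standing in for the window-adjust message
}

func (c *creditReader) Read(p []byte) (int, error) {
	n, err := c.r.Read(p)
	if n > 0 {
		c.adjust(uint32(n))
	}
	return n, err
}

// drain reads s in chunks of chunk bytes (chunk must be > 0) and returns the
// total credit handed back to the sender.
func drain(s string, chunk int) uint32 {
	var credited uint32
	cr := &creditReader{
		r:      strings.NewReader(s),
		adjust: func(n uint32) { credited += n },
	}
	buf := make([]byte, chunk)
	for {
		if _, err := cr.Read(buf); err != nil {
			return credited
		}
	}
}

func main() {
	fmt.Println(drain("hello world", 4))
}
```

The total credit always equals the number of bytes consumed, so the sender's window is refilled exactly as fast as the application reads.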

    func (m *mux) newChannel(chanType string, direction channelDirection, extraData []byte) *channel {
        ch := &channel{
            remoteWin:        window{Cond: newCond()},
            myWindow:         channelWindowSize,
            pending:          newBuffer(),
            extPending:       newBuffer(),
            direction:        direction,
            incomingRequests: make(chan *Request, chanSize),
            msg:              make(chan interface{}, chanSize),
            chanType:         chanType,
            extraData:        extraData,
            mux:              m,
            packetPool:       make(map[uint32][]byte),
        }
        return ch
    }

When the mux creates a channel, it gives the channel a pending read buffer. Data from the TCP stream is written into pending, and at that point Read has data to return.

So when is the data written into pending: newBuffer()?

    // newMux returns a mux that runs over the given connection.
    func newMux(p packetConn) *mux {
        m := &mux{
            conn:             p,
            incomingChannels: make(chan NewChannel, chanSize),
            globalResponses:  make(chan interface{}, 1),
            incomingRequests: make(chan *Request, chanSize),
            errCond:          newCond(),
        }
        if debugMux {
            m.chanList.offset = atomic.AddUint32(&globalOff, 1)
        }

        go m.loop()
        return m
    }

When the mux is created, it starts a loop goroutine that keeps reading packets from the mux's conn and dispatches each one to the channel it belongs to.

    // loop runs the connection machine. It will process packets until an
    // error is encountered. To synchronize on loop exit, use mux.Wait.
    func (m *mux) loop() {
        var err error
        for err == nil {
            err = m.onePacket()
        }
        // ... (elided in this excerpt)
    }

    // onePacket reads and processes one packet.
    func (m *mux) onePacket() error {
        packet, err := m.conn.readPacket()
        switch packet[0] {
        case msgChannelOpen:
            return m.handleChannelOpen(packet)
        case msgGlobalRequest, msgRequestSuccess, msgRequestFailure:
            return m.handleGlobalPacket(packet)
        }

        id := binary.BigEndian.Uint32(packet[1:])
        ch := m.chanList.getChan(id)
        fmt.Printf("choose ch id:%d process %+#v\n", id, ch)
        return ch.handlePacket(packet)
    }

    func (ch *channel) handlePacket(packet []byte) error {
        fmt.Printf(" ch handle packet:%d \n", packet[0])
        switch packet[0] {
        case msgChannelData, msgChannelExtendedData:
            return ch.handleData(packet)
        case msgChannelClose:
            ch.sendMessage(channelCloseMsg{PeersID: ch.remoteId})
            ch.mux.chanList.remove(ch.localId)
            ch.close()
            return nil
        case msgChannelEOF:
            // ... (elided in this excerpt)
            return nil
        }

        decoded, err := decode(packet)
        switch msg := decoded.(type) {
        case *channelOpenFailureMsg:
        case *channelOpenConfirmMsg:
        case *windowAdjustMsg:
        case *channelRequestMsg:
        default:
            ch.msg <- msg
            // ... (elided in this excerpt)
        }
        return nil
    }
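The dispatch step in onePacket, reading the channel id from bytes 1 to 4 and handing the packet to that channel, can be sketched without any SSH machinery. This is a minimal illustration assuming the 9-byte data header; frame and demux are hypothetical helpers, and a plain map of byte slices stands in for the per-channel pending buffers.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

const headerLen = 9 // 1 byte type + 4 bytes channel id + 4 bytes length

// frame builds a data packet for the given channel id.
func frame(id uint32, payload string) []byte {
	pkt := make([]byte, headerLen+len(payload))
	pkt[0] = 94 // SSH_MSG_CHANNEL_DATA (RFC 4254)
	binary.BigEndian.PutUint32(pkt[1:], id)
	binary.BigEndian.PutUint32(pkt[5:], uint32(len(payload)))
	copy(pkt[headerLen:], payload)
	return pkt
}

// demux appends each packet's payload to the buffer of the channel named in
// its header. Packets too short to hold a header are skipped.
func demux(packets [][]byte) map[uint32][]byte {
	pending := make(map[uint32][]byte)
	for _, pkt := range packets {
		if len(pkt) < headerLen {
			continue
		}
		id := binary.BigEndian.Uint32(pkt[1:])
		pending[id] = append(pending[id], pkt[headerLen:]...)
	}
	return pending
}

func main() {
	// Two channels interleaved on one stream.
	out := demux([][]byte{frame(1, "he"), frame(2, "XY"), frame(1, "llo")})
	fmt.Println(string(out[1]), string(out[2]))
}
```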

Now look at how packet data is handled: it is written into the pending buffer.

    func (ch *channel) handleData(packet []byte) error {
        headerLen := 9
        isExtendedData := packet[0] == msgChannelExtendedData
        // ... (elided in this excerpt)
        ch.pending.write(data)
        return nil
    }
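The hand-off between handleData (the writer) and Read (the reader) can be pictured as a blocking byte buffer. The sketch below is a simplification under assumptions: it keeps one growing slice guarded by a sync.Cond, while the library's buffer is organised differently internally, and collect is a helper invented for the demo.

```go
package main

import (
	"fmt"
	"io"
	"sync"
)

// buffer is a simplified stand-in for the channel's pending buffer.
type buffer struct {
	mu     sync.Mutex
	cond   *sync.Cond
	data   []byte
	closed bool
}

func newBuffer() *buffer {
	b := &buffer{}
	b.cond = sync.NewCond(&b.mu)
	return b
}

// write appends data and wakes a blocked reader (the handleData side).
func (b *buffer) write(p []byte) {
	b.mu.Lock()
	b.data = append(b.data, p...)
	b.mu.Unlock()
	b.cond.Signal()
}

// eof marks the end of the stream; readers drain what is left, then get io.EOF.
func (b *buffer) eof() {
	b.mu.Lock()
	b.closed = true
	b.mu.Unlock()
	b.cond.Broadcast()
}

// Read blocks until data is available or eof has been signalled.
func (b *buffer) Read(p []byte) (int, error) {
	b.mu.Lock()
	defer b.mu.Unlock()
	for len(b.data) == 0 {
		if b.closed {
			return 0, io.EOF
		}
		b.cond.Wait()
	}
	n := copy(p, b.data)
	b.data = b.data[n:]
	return n, nil
}

// collect writes the chunks from one goroutine and reads them back from another.
func collect(chunks ...string) string {
	b := newBuffer()
	go func() {
		for _, c := range chunks {
			b.write([]byte(c))
		}
		b.eof()
	}()
	out, _ := io.ReadAll(b)
	return string(out)
}

func main() {
	fmt.Println(collect("he", "llo"))
}
```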

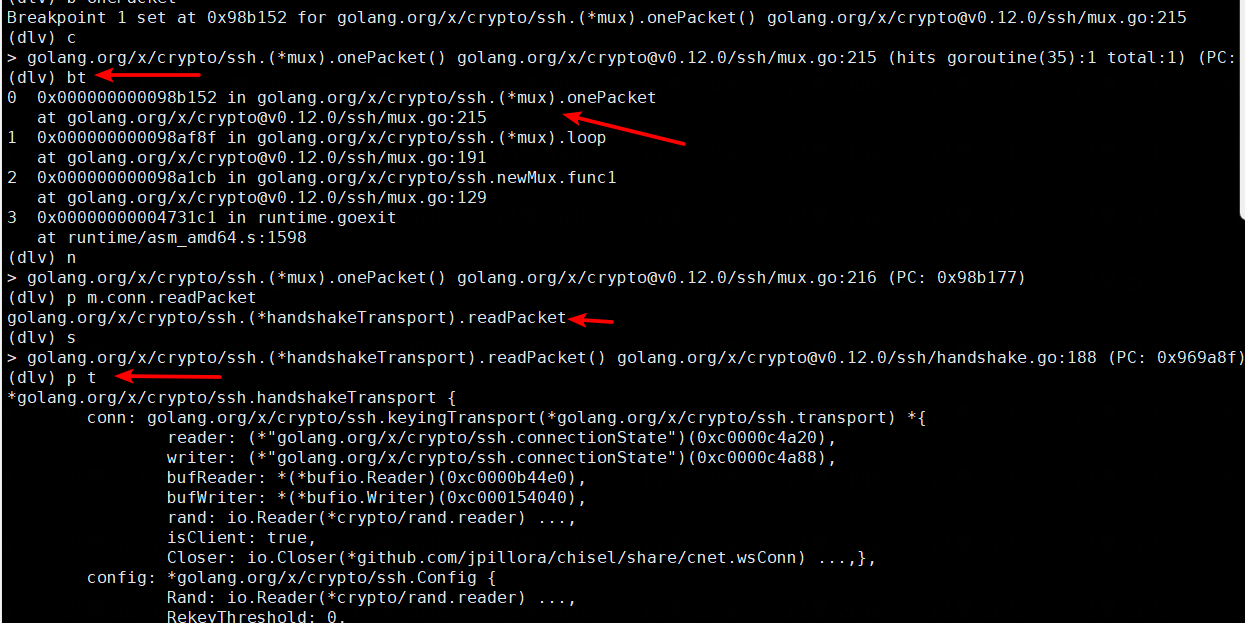

Looking more closely: m.conn.readPacket() actually calls (t *handshakeTransport) readPacket(), and that method reads from the handshakeTransport's incoming chan.

So who writes the data into incoming?

    func (t *handshakeTransport) readPacket() ([]byte, error) {
        p, ok := <-t.incoming
        if !ok {
            return nil, t.readError
        }
        return p, nil
    }

During initialization:

    func newClientTransport(conn keyingTransport, clientVersion, serverVersion []byte, config *ClientConfig, dialAddr string, addr net.Addr) *handshakeTransport {
        t := newHandshakeTransport(conn, &config.Config, clientVersion, serverVersion)
        // ... (elided in this excerpt)
        go t.readLoop()
        go t.kexLoop()
        return t
    }

go t.readLoop(): this goroutine reads data through t.conn.readPacket(), which ends up in the transport's t.reader.readPacket(t.bufReader).

go t.kexLoop(): this goroutine handles the SSH handshake (key exchange).

    func (t *handshakeTransport) readLoop() {
        first := true
        for {
            p, err := t.readOnePacket(first)
            first = false
            if err != nil {
                t.readError = err
                close(t.incoming)
                break
            }
            if p[0] == msgIgnore || p[0] == msgDebug {
                continue
            }
            t.incoming <- p
        }

        // Stop writers too.
        t.recordWriteError(t.readError)

        // Unblock the writer should it wait for this.
        close(t.startKex)

        // Don't close t.requestKex; it's also written to from writePacket.
    }
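The producer and consumer relationship between readLoop and readPacket can be reduced to a few lines. This is a sketch under assumptions: the transport type, the next callback, and run are hypothetical, and the channel buffer size of 1 is arbitrary. The point it shows is that the error is stored before the channel is closed, so the consumer can safely read it after seeing the close.

```go
package main

import (
	"fmt"
	"io"
)

// transport is a cut-down stand-in for handshakeTransport.
type transport struct {
	incoming chan []byte
	readErr  error
}

// readLoop pulls packets from next and feeds them to incoming until next fails.
func (t *transport) readLoop(next func() ([]byte, error)) {
	for {
		p, err := next()
		if err != nil {
			t.readErr = err // stored before close, so readers see it afterwards
			close(t.incoming)
			return
		}
		t.incoming <- p
	}
}

// readPacket returns the next packet, or the stored error once incoming is closed.
func (t *transport) readPacket() ([]byte, error) {
	p, ok := <-t.incoming
	if !ok {
		return nil, t.readErr
	}
	return p, nil
}

// run feeds the given packets through readLoop and collects what readPacket
// returns, plus the text of the final error.
func run(packets ...string) ([]string, string) {
	i := 0
	next := func() ([]byte, error) {
		if i == len(packets) {
			return nil, io.EOF
		}
		i++
		return []byte(packets[i-1]), nil
	}
	t := &transport{incoming: make(chan []byte, 1)}
	go t.readLoop(next)

	var got []string
	for {
		p, err := t.readPacket()
		if err != nil {
			return got, err.Error()
		}
		got = append(got, string(p))
	}
}

func main() {
	got, errText := run("a", "b")
	fmt.Println(got, errText)
}
```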
