Kernel panic - not syncing: softlockup: hung tasks

Notes on a crash I ran into recently.

Analysis with crash gives the following:

    crash> sys
          KERNEL: vmlinux
        DUMPFILE: kernel_dump_file_debug  [PARTIAL DUMP]
            CPUS: 32
            DATE: Thu Jul  8 16:06:13 2021
          UPTIME: 12 days, 01:19:36
    LOAD AVERAGE: 4.57, 5.64, 5.97
           TASKS: 832
        NODENAME: localhost
         RELEASE: 2.6.39-gentoo-r3-wafg2-47137
         VERSION: #18 SMP Wed Dec 30 21:37:53 JST 2020
         MACHINE: x86_64  (2599 Mhz)
          MEMORY: 128 GB
           PANIC: "[1039338.727675] Kernel panic - not syncing: softlockup: hung tasks"

    crash> bt
    PID: 22501  TASK: ffff881ff4340690  CPU: 1   COMMAND: "xxxxproess"
     #0 [ffff88107fc238b0] machine_kexec at ffffffff810243b6
     #1 [ffff88107fc23920] crash_kexec at ffffffff810773b9
     #2 [ffff88107fc239f0] panic at ffffffff815f35e0
     #3 [ffff88107fc23a70] watchdog_timer_fn at ffffffff81089a38
     #4 [ffff88107fc23aa0] __run_hrtimer.clone.28 at ffffffff8106303a
     #5 [ffff88107fc23ad0] hrtimer_interrupt at ffffffff81063541
     #6 [ffff88107fc23b30] smp_apic_timer_interrupt at ffffffff81020b92
     #7 [ffff88107fc23b50] apic_timer_interrupt at ffffffff815f6553
     #8 [ffff88107fc23bb8] igb_xmit_frame_ring at ffffffffa006a754 [igb]
     #9 [ffff88107fc23c70] igb_xmit_frame at ffffffffa006ada4 [igb]
    #10 [ffff88107fc23ca0] dev_hard_start_xmit at ffffffff814d588d
    #11 [ffff88107fc23d10] sch_direct_xmit at ffffffff814e87f7
    #12 [ffff88107fc23d60] dev_queue_xmit at ffffffff814d5c2e
    #13 [ffff88107fc23db0] transmit_skb at ffffffffa0111032 [wafg2]
    #14 [ffff88107fc23dc0] forward_skb at ffffffffa01113b4 [wafg2]
    #15 [ffff88107fc23df0] dev_rx_skb at ffffffffa0111875 [wafg2]
    #16 [ffff88107fc23e40] igb_poll at ffffffffa006d6fc [igb]
    #17 [ffff88107fc23f10] net_rx_action at ffffffff814d437a
    #18 [ffff88107fc23f60] __do_softirq at ffffffff8104f3bf
    #19 [ffff88107fc23fb0] call_softirq at ffffffff815f6d9c
    --- <IRQ stack> ---
    #20 [ffff881f2ebcfae0] __skb_queue_purge at ffffffff8153af65
    #21 [ffff881f2ebcfb00] do_softirq at ffffffff8100d1c4
    #22 [ffff881f2ebcfb20] _local_bh_enable_ip.clone.8 at ffffffff8104f311
    #23 [ffff881f2ebcfb30] local_bh_enable at ffffffff8104f336
    #24 [ffff881f2ebcfb40] inet_csk_listen_stop at ffffffff8152a94b
    #25 [ffff881f2ebcfb80] tcp_close at ffffffff8152c8aa
    #26 [ffff881f2ebcfbb0] inet_release at ffffffff8154a44d
    #27 [ffff881f2ebcfbd0] sock_release at ffffffff814c409f
    #28 [ffff881f2ebcfbf0] sock_close at ffffffff814c4111
    #29 [ffff881f2ebcfc00] fput at ffffffff810d4c85
    #30 [ffff881f2ebcfc50] filp_close at ffffffff810d1ea0
    #31 [ffff881f2ebcfc80] put_files_struct at ffffffff8104d4d9
    #32 [ffff881f2ebcfcd0] exit_files at ffffffff8104d5b4
    #33 [ffff881f2ebcfcf0] do_exit at ffffffff8104d821
    #34 [ffff881f2ebcfd70] do_group_exit at ffffffff8104df5c
    #35 [ffff881f2ebcfda0] get_signal_to_deliver at ffffffff810570b2
    #36 [ffff881f2ebcfe20] do_signal at ffffffff8100ae52
    #37 [ffff881f2ebcff20] do_notify_resume at ffffffff8100b47e
    #38 [ffff881f2ebcff50] int_signal at ffffffff815f5e63
        RIP: 00007fd9e52e1cdd  RSP: 00007fd9a7cfa370  RFLAGS: 00000293
        RAX: 000000000000001b  RBX: 00000000000000fb  RCX: ffffffffffffffff
        RDX: 000000000000001b  RSI: 00007fd96a77e05e  RDI: 00000000000000fb
        RBP: 00007fd9a8513e80   R8: 00000000007a7880   R9: 0000000000000000
        R10: 0000000000000000  R11: 0000000000000293  R12: 000000000000001b
        R13: 00007fd96a77e05e  R14: 000000000000001b  R15: 0000000000735240
        ORIG_RAX: 0000000000000001  CS: 0033  SS: 002b

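First, figure out how the message “Kernel panic - not syncing: softlockup: hung tasks” comes about and what it actually means. In other words, let's translate this conclusion!

Lockups come in two kinds: soft lockup and hard lockup.

A soft lockup means a kernel bug keeps a CPU looping in kernel mode for more than n seconds (n is a configurable parameter), so other tasks get no chance to run. Implementation: the kernel runs one watchdog thread per CPU, watchdog/x, which refreshes a per-CPU timestamp every time it gets scheduled. A per-CPU timer (watchdog_timer_fn in the backtrace above) periodically compares that timestamp with the current time. If the watchdog thread has not run for a long time, the timestamp goes stale, and once it is older than the threshold the kernel reports a soft lockup.

A hard lockup happens when all interrupts on a CPU stay disabled for longer than a threshold (a few seconds). Interrupts raised by external devices cannot be serviced during that time, and the kernel treats this as a hard lockup.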
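The timestamp comparison described above can be sketched in userspace C. This is only a minimal model under my own assumptions: the struct and function names are made up for illustration, time is plain seconds, and the 60 second threshold in the usage note is an assumed value, not something read from this dump.

```c
#include <assert.h>

/* Hypothetical userspace model of the per-CPU soft-lockup check.
 * None of these names are kernel API. */
struct cpu_watchdog {
    unsigned long touch_ts;  /* last time the watchdog thread ran, in seconds */
    unsigned long thresh;    /* allowed stall in seconds (the "n" above) */
};

/* Called by the watchdog thread every time it manages to run. */
void watchdog_touch(struct cpu_watchdog *wd, unsigned long now)
{
    wd->touch_ts = now;
}

/* Called from the periodic timer. Returns the stall length in seconds,
 * or 0 if the watchdog thread ran recently enough. */
unsigned long softlockup_duration(const struct cpu_watchdog *wd,
                                  unsigned long now)
{
    assert(now >= wd->touch_ts);  /* time is assumed to be monotonic */
    if (now > wd->touch_ts + wd->thresh)
        return now - wd->touch_ts;
    return 0;
}
```

With an assumed threshold of 60 and the last touch at t=100, a check at t=160 still returns 0, while a check at t=167 returns 67, which is the shape of the "stuck for 67s" message in the log further down.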

A ‘softlockup’ is defined as a bug that causes the kernel to loop in kernel mode for more than 20 seconds (see “Implementation” below for details), without giving other tasks a chance to run. The current stack trace is displayed upon detection and, by default, the system will stay locked up. Alternatively, the kernel can be configured to panic; a sysctl, “kernel.softlockup_panic”, a kernel parameter, “softlockup_panic” (see “Documentation/kernel-parameters.txt” for details), and a compile option, “BOOTPARAM_SOFTLOCKUP_PANIC”, are provided for this.

A ‘hardlockup’ is defined as a bug that causes the CPU to loop in kernel mode for more than 10 seconds (see “Implementation” below for details), without letting other interrupts have a chance to run. Similarly to the softlockup case, the current stack trace is displayed upon detection and the system will stay locked up unless the default behavior is changed, which can be done through a sysctl, ‘hardlockup_panic’, a compile time knob, “BOOTPARAM_HARDLOCKUP_PANIC”, and a kernel parameter, “nmi_watchdog”.

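So the question is why the CPU never got around to scheduling anything else. I took a look and honestly have no idea yet! Off to lunch, to be continued this afternoon.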

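The crash commands used so far:

- bt -slf shows the source file of each function and the full contents of every frame, so you can cross-check against the source and the disassembly and inspect details such as function arguments

- For the details just run help bt; it works much like gdb

- dis [-r][-l][-u][-b [num]] [address | symbol | (expression)] [count]

- Short for disassemble. It disassembles an instruction or a function into assembly.

- sym command

sym [-l] | [-M] | [-m module] | [-p|-n] | [-q string] | [symbol | vaddr]

Translates a symbol into its virtual address, or a virtual address into its symbol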

- struct shows the definition (layout) of a data structure

      crash> struct -o request_sock
      struct request_sock {
         [0] struct request_sock *dl_next;
         [8] u16 mss;
        [10] u8 retrans;
        [11] u8 cookie_ts;
        [12] u32 window_clamp;
        [16] u32 rcv_wnd;
        [20] u32 ts_recent;
        [24] unsigned long expires;
        [32] const struct request_sock_ops *rsk_ops;
        [40] struct sock *sk;
        [48] u32 secid;
        [52] u32 peer_secid;
      }
      SIZE: 56

- Memory usage looks normal so far

      crash> kmem -i
                       PAGES        TOTAL      PERCENTAGE
          TOTAL MEM  33001378     125.9 GB         ----
               FREE  31408525     119.8 GB   95% of TOTAL MEM
               USED   1592853       6.1 GB    4% of TOTAL MEM
             SHARED    107702     420.7 MB    0% of TOTAL MEM
            BUFFERS      3207      12.5 MB    0% of TOTAL MEM
             CACHED    721460       2.8 GB    2% of TOTAL MEM
               SLAB    472316       1.8 GB    1% of TOTAL MEM

         TOTAL SWAP         0            0         ----
          SWAP USED         0            0  100% of TOTAL SWAP
          SWAP FREE         0            0    0% of TOTAL SWAP

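The offsets printed by struct -o request_sock earlier are simply what the compiler computes for that layout. As a rough illustration, here is a userspace mirror of the struct checked with offsetof. The mirror type and the member_addr helper are my own names, pointer targets are left opaque, and the numbers only hold on an LP64 x86_64 build like the one in this dump.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Userspace mirror of the layout shown by "struct -o request_sock".
 * Only member sizes and alignment matter here, not the pointed-to types. */
struct request_sock_mirror {
    struct request_sock_mirror *dl_next;
    uint16_t      mss;
    uint8_t       retrans;
    uint8_t       cookie_ts;
    uint32_t      window_clamp;
    uint32_t      rcv_wnd;
    uint32_t      ts_recent;
    unsigned long expires;
    const void   *rsk_ops;
    void         *sk;
    uint32_t      secid;
    uint32_t      peer_secid;
};

/* Compile-time checks against the offsets crash printed (LP64 assumed). */
static_assert(offsetof(struct request_sock_mirror, mss) == 8, "mss");
static_assert(offsetof(struct request_sock_mirror, expires) == 24, "expires");
static_assert(offsetof(struct request_sock_mirror, sk) == 40, "sk");
static_assert(sizeof(struct request_sock_mirror) == 56, "size");

/* Address of a member given the object's base address: base + offset. */
uint64_t member_addr(uint64_t base, size_t member_offset)
{
    return base + member_offset;
}
```

So when only a raw address of a request_sock is known, the sk pointer lives at that address plus 40, and rd on that location gives the struct sock address to feed into the next struct command.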

    crash> bt -T
    PID: 22501  TASK: ffff881ff4340690  CPU: 1   COMMAND: "先休息"
      [ffff881f2ebcf3e0] put_dec at ffffffff8127ac94
      [ffff881f2ebcf3f0] put_dec at ffffffff8127ac94
      [ffff881f2ebcf410] number.clone.1 at ffffffff8127b9a1
      [ffff881f2ebcf450] number.clone.1 at ffffffff8127b9a1
      [ffff881f2ebcf460] put_dec at ffffffff8127ac94
      [ffff881f2ebcf480] number.clone.1 at ffffffff8127b9a1
      [ffff881f2ebcf490] __kmalloc_node_track_caller at ffffffff810ce77f
      [ffff881f2ebcf4c0] number.clone.1 at ffffffff8127b9a1
      [ffff881f2ebcf4d0] get_partial_node at ffffffff810cc603
      [ffff881f2ebcf500] number.clone.1 at ffffffff8127b9a1
      [ffff881f2ebcf510] get_partial_node at ffffffff810cc603
      [ffff881f2ebcf550] vsnprintf at ffffffff8127c36f
      [ffff881f2ebcf590] arch_local_irq_save at ffffffff810709ee
      [ffff881f2ebcf5b0] _raw_spin_unlock_irqrestore at ffffffff815f5156
      [ffff881f2ebcf5d0] _raw_spin_unlock_irqrestore at ffffffff815f5156
      [ffff881f2ebcf5e0] console_unlock at ffffffff8104b2bf
      [ffff881f2ebcf620] vprintk at ffffffff8104b706
      [ffff881f2ebcf660] vprintk at ffffffff8104b706
      [ffff881f2ebcf690] common_interrupt at ffffffff815f54ce
      [ffff881f2ebcf700] swiotlb_dma_mapping_error at ffffffff8128a0d3
      [ffff881f2ebcf720] igb_xmit_frame_ring at ffffffffa006a3c1 [igb]
      [ffff881f2ebcf740] swiotlb_dma_mapping_error at ffffffff8128a0d3
      [ffff881f2ebcf760] igb_xmit_frame_ring at ffffffffa006a3c1 [igb]
      [ffff881f2ebcf778] swiotlb_map_page at ffffffff8128a87e
      [ffff881f2ebcf790] local_bh_enable at ffffffff8104f336
      [ffff881f2ebcf7f0] igb_xmit_frame at ffffffffa006ada4 [igb]
      [ffff881f2ebcf820] dev_hard_start_xmit at ffffffff814d588d
      [ffff881f2ebcf880] _raw_spin_lock at ffffffff815f50fc
      [ffff881f2ebcf890] sch_direct_xmit at ffffffff814e881f
      [ffff881f2ebcf8c0] _local_bh_enable_ip.clone.8 at ffffffff8104f2b9
      [ffff881f2ebcf8d0] local_bh_enable at ffffffff8104f336
      [ffff881f2ebcf8e0] dev_queue_xmit at ffffffff814d5dc0
      [ffff881f2ebcf930] mac_build_and_send_pkt at ffffffffa010dca6 [wafg2]
      [ffff881f2ebcf950] ip_finish_output2 at ffffffff8152568a
      [ffff881f2ebcf980] ip_finish_output at ffffffff81525792
      [ffff881f2ebcf9a0] ip_output at ffffffff815261a7

    crash> log
    [1039338.458914] Second detect insufficient ring room. Requested: 22.
    [1039338.459474] Second detect insufficient ring room. Requested: 22.
    [1039338.460095] Second detect insufficient ring room. Requested: 22.
    [1039338.460628] Second detect insufficient ring room. Requested: 22.
    [1039338.461218] Second detect insufficient ring room. Requested: 22.
    [1039338.461792] Second detect insufficient ring room. Requested: 22.
    [1039338.462317] Second detect insufficient ring room. Requested: 22.
    [1039338.462936] Second detect insufficient ring room. Requested: 22.
    [1039338.463755] Second detect insufficient ring room. Requested: 22.
    [1039338.646254] Second detect insufficient ring room. Requested: 22.
    [1039338.646769] Second detect insufficient ring room. Requested: 22.
    [1039338.647356] Second detect insufficient ring room. Requested: 22.
    [1039338.679837] Second detect insufficient ring room. Requested: 22.
    [1039338.680431] Second detect insufficient ring room. Requested: 22.
    [1039338.680961] Second detect insufficient ring room. Requested: 22.
    [1039338.681491] Second detect insufficient ring room. Requested: 22.
    [1039338.682080] Second detect insufficient ring room. Requested: 22.
    [1039338.682696] Second detect insufficient ring room. Requested: 22.
    [1039338.683330] Second detect insufficient ring room. Requested: 22.
    [1039338.683845] Second detect insufficient ring room. Requested: 22.
    [1039338.684741] Second detect insufficient ring room. Requested: 22.
    [1039338.685251] Second detect insufficient ring room. Requested: 22.
    [1039338.727267] BUG: soft lockup - CPU#1 stuck for 67s! [wafd:22501]
    [1039338.727277] CPU 1
    [1039338.727285]
    [1039338.727292] RIP: 0010:[<ffffffffa006a754>]  [<ffffffffa006a754>] igb_xmit_frame_ring+0x744/0xd10 [igb]
    [1039338.727301] RSP: 0018:ffff88107fc23be8  EFLAGS: 00000216
    [1039338.727303] RAX: 0000000000000000 RBX: ffffffff8106230e RCX: 0000000000000100
    [1039338.727305] RDX: 00000000000000f3 RSI: 0000000000000000 RDI: 0000000062300000
    [1039338.727307] RBP: ffff88107fc23c68 R08: 0000000ef7858840 R09: 00000000008d8000
    [1039338.727309] R10: 0000000000000000 R11: 00000000000010c0 R12: ffffffff815f6553
    [1039338.727311] R13: ffff88107fc23b58 R14: 0000000000000032 R15: ffff88103f64de00
    [1039338.727313] FS:  00007fd9a7d0b700(0000) GS:ffff88107fc20000(0000) knlGS:0000000000000000
    [1039338.727315] CS:  0010 DS: 0000 ES: 0000 CR0: 000000008005003b
    [1039338.727317] CR2: 00007fd96a7a0000 CR3: 000000000195f000 CR4: 00000000000406e0

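Disassembling only the function you want with objdump

    #!/bin/bash
    # Disassemble one function from vmlinux, or the whole image if no symbol is given.
    vmlinux=$1
    symbol=$2

    if [ -z "$vmlinux" ]; then
        echo "usage : $0 vmlinux symbol"
        exit 1
    fi

    if [ -z "$symbol" ]; then
        echo "dump all symbol"
        objdump -d "$vmlinux"
        exit
    fi

    # nm -n sorts symbols by address, so the symbol on the next line marks the end
    # of the function. Match the name exactly so that "foo" does not also hit "foo_bar".
    startaddress=$(nm -n "$vmlinux" | awk -v s="$symbol" '$3 == s {print "0x"$1; exit}')
    endaddress=$(nm -n "$vmlinux" | awk -v s="$symbol" 'found {print "0x"$1; exit} $3 == s {found=1}')

    echo "start-address: $startaddress, end-address: $endaddress"
    objdump -d "$vmlinux" --start-address="$startaddress" --stop-address="$endaddress"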

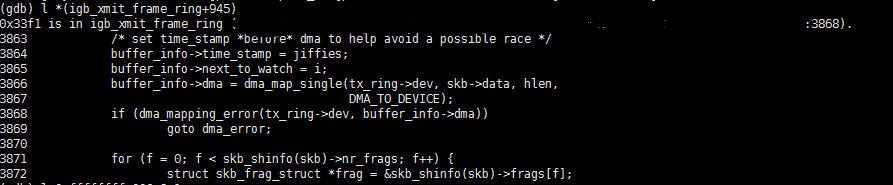

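Comparing against the code, this is most likely a DMA error hit while transmitting a packet.

But why? What caused it? How do I analyze this, just grind through the code?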

    crash> dis -l ffffffffa006a3c1
    0xffffffffa006a3c1 <igb_xmit_frame_ring+945>:   test   %eax,%eax
    crash> dis -l 0xffffffffa006a754
    0xffffffffa006a754 <igb_xmit_frame_ring+1860>:  add    $0x58,%rsp

    swiotlb_dma_mapping_error at ffffffff8128a0d3
    [ffff881f2ebcf720] igb_xmit_frame_ring at ffffffffa006a3c1 [igb]
    [ffff881f2ebcf740] swiotlb_dma_mapping_error at ffffffff8128a0d3
    [ffff881f2ebcf760] igb_xmit_frame_ring at ffffffffa006a3c1 [igb]
    [ffff881f2ebcf778] swiotlb_map_page at ffffffff8128a87e

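This is the result of running gdb on the driver.

PS: if the problem is in a driver, run gdb directly on the driver's .ko file. If the OOPS comes from the core kernel itself, gdb has to be run on the vmlinux file (in the root of the kernel build tree).

Alternatively, open the corresponding .o file in gdb, run disass on the function in question, and find the address that matches the offset; then use addr2line -a <address> -e <file> to locate the matching source line.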
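The symbol+offset notation that dis, sym and the log all use (igb_xmit_frame_ring+0x744 and so on) is just "address minus the start of the nearest preceding symbol". A small sketch of that lookup over an address-sorted table follows; the struct and function names are invented for the example, and it is not how crash itself is implemented.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct ksym {
    uint64_t    addr;   /* start address of the symbol */
    const char *name;
};

/* Find the last symbol whose start address is <= addr.
 * syms must be sorted by ascending address (the order nm -n prints).
 * Stores addr - symbol start in *offset and returns the symbol,
 * or returns NULL if addr lies below the first symbol. */
const struct ksym *lookup_symbol(const struct ksym *syms, size_t n,
                                 uint64_t addr, uint64_t *offset)
{
    size_t lo = 0, hi = n;   /* search window is [lo, hi) */

    assert(syms != NULL && offset != NULL);
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (syms[mid].addr <= addr)
            lo = mid + 1;    /* candidate is at mid or to its right */
        else
            hi = mid;
    }
    if (lo == 0)
        return NULL;
    *offset = addr - syms[lo - 1].addr;
    return &syms[lo - 1];
}
```

From the dump, 0xffffffffa006a754 is igb_xmit_frame_ring+0x744, so that function starts at 0xffffffffa006a010; feeding 0xffffffffa006a3c1 through the same table gives offset 945, matching the dis output. The offset is what you carry over to gdb or addr2line on the .ko or .o file, where the function sits at a different base address.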

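Judging from the log and the panic stack, the NIC was probably transmitting nonstop! But the root cause is still unknown!

The log shows that the problem comes from the TX ring running out of unused descriptors: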

    /* We need to check again in a case another CPU has just
     * made room available. */
    if (igb_desc_unused(tx_ring) < size)
        return -EBUSY;
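To make the check above concrete, here is a userspace model of how free descriptors in a circular TX ring are counted. The index names follow the igb driver's conventions as I understand them, but the struct is a stand-in of my own, and the ring size used in the usage note is an assumption rather than a value read from this vmcore.

```c
#include <assert.h>
#include <stdint.h>

/* Simplified TX ring bookkeeping; a userspace stand-in, not the driver's struct. */
struct tx_ring_model {
    uint16_t count;          /* total descriptors in the ring */
    uint16_t next_to_use;    /* producer index: next slot the driver fills */
    uint16_t next_to_clean;  /* consumer index: next slot to reclaim after TX */
};

/* Free descriptors. One slot is always kept empty so that
 * next_to_use == next_to_clean unambiguously means "ring empty". */
int desc_unused(const struct tx_ring_model *r)
{
    assert(r->count > 0);
    if (r->next_to_clean > r->next_to_use)
        return r->next_to_clean - r->next_to_use - 1;
    return r->count + r->next_to_clean - r->next_to_use - 1;
}

/* Mirrors the quoted check: a frame needing `size` descriptors is refused
 * when the ring cannot hold it. Returns 0 on success, -1 when busy. */
int maybe_stop_tx(const struct tx_ring_model *r, int size)
{
    return desc_unused(r) < size ? -1 : 0;
}
```

With an assumed 256-entry ring, next_to_use=10 and next_to_clean=20 leave only 9 free slots, so a request for 22 descriptors (the "Requested: 22" in the log) is rejected. If completed descriptors are never reclaimed, next_to_clean stops advancing and every later transmit fails the same way.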

    err = request_irq(adapter->msix_entries[vector].vector,
                      igb_msix_ring, 0, q_vector->name,
                      q_vector);

    static irqreturn_t igb_msix_ring(int irq, void *data)
    {
        struct igb_q_vector *q_vector = data;

        /* Write the ITR value calculated from the previous interrupt. */
        igb_write_itr(q_vector);

        napi_schedule(&q_vector->napi);

        return IRQ_HANDLED;
    }

    static inline void napi_schedule(struct napi_struct *n)
    {
        if (napi_schedule_prep(n))
            __napi_schedule(n);
    }

    void __napi_schedule(struct napi_struct *n)
    {
        unsigned long flags;

        local_irq_save(flags);
        ____napi_schedule(this_cpu_ptr(&softnet_data), n);
        local_irq_restore(flags);
    }

    /* Called with irq disabled */
    static inline void ____napi_schedule(struct softnet_data *sd,
                                         struct napi_struct *napi)
    {
        list_add_tail(&napi->poll_list, &sd->poll_list);
        __raise_softirq_irqoff(NET_RX_SOFTIRQ);
    }

We are transmitting here, yet the softirq that the hard interrupt ends up raising is NET_RX_SOFTIRQ. If data keeps pouring in without a break, could receive and transmit keep looping forever?

    void __qdisc_run(struct Qdisc *q)
    {
        int quota = weight_p;
        int packets;

        while (qdisc_restart(q, &packets)) {
            /*
             * Ordered by possible occurrence: Postpone processing if
             * 1. we've exceeded packet quota
             * 2. another process needs the CPU;
             */
            quota -= packets;
            if (quota <= 0 || need_resched()) {
                __netif_schedule(q);
                break;
            }
        }

        qdisc_run_end(q);
    }

The while loop keeps calling qdisc_restart(), which dequeues one skb and tries to send it through sch_direct_xmit(); sch_direct_xmit() calls dev_hard_start_xmit() to hand the packet to the driver for the actual transmit. Any skb that cannot be sent is requeued and will be sent later from the NET_TX softirq.
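The quota logic in that loop can be modelled in a few lines. This is a toy sketch, not kernel code: the queue is just a counter, restart_one is a made-up stand-in for qdisc_restart() that always sends exactly one packet, and the quota of 64 in the usage note is an assumed value for weight_p.

```c
#include <assert.h>
#include <stdbool.h>

struct qdisc_model {
    int  queued;       /* packets still waiting in the queue */
    int  sent;         /* packets handed to the driver so far */
    bool rescheduled;  /* true if the loop deferred the rest to the softirq */
};

/* Stand-in for qdisc_restart(): send one packet if there is one.
 * Returns nonzero while the queue still holds packets. */
int restart_one(struct qdisc_model *q, int *packets)
{
    *packets = 0;
    if (q->queued == 0)
        return 0;
    q->queued--;
    q->sent++;
    *packets = 1;
    return q->queued;
}

/* Same control flow as the loop quoted above, with the scheduler check
 * reduced to a flag passed in by the caller. */
void qdisc_run_model(struct qdisc_model *q, int quota, bool need_resched)
{
    int packets;

    assert(quota > 0);
    while (restart_one(q, &packets)) {
        quota -= packets;
        if (quota <= 0 || need_resched) {
            q->rescheduled = true;   /* corresponds to __netif_schedule(q) */
            break;
        }
    }
}
```

With 100 queued packets and a quota of 64, one run sends 64 packets and defers the remaining 36, so this loop is bounded on its own. That suggests the 67 second stall is more likely spread over the whole softirq path in the backtrace (igb_poll, the wafg2 forwarding code, then transmit) than caused by this single loop, though that is only my reading.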

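At the moment packets are received and sent in bypass mode, driven by interrupts. Analysis continues.

After comparing things on our side, the current conclusion is that all NIC interrupts are landing on the first CPU: the box has 16 cores, but only one of them is handling packets! Enabling RSS should be enough.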
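For reference, the idea behind RSS is that the NIC hashes each flow's addresses and ports and uses the hash to pick an RX queue through an indirection table, with each queue's interrupt bound to a different CPU. The sketch below only illustrates that mapping. It deliberately swaps in FNV-1a for the keyed Toeplitz hash that real hardware uses, and the 128-entry table and 8 queues in the usage note are assumptions of mine.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* IPv4 flow tuple; a hypothetical type for this example. */
struct flow4 {
    uint32_t saddr, daddr;
    uint16_t sport, dport;
};

/* FNV-1a over the 12 bytes of the tuple. This is a stand-in: real RSS
 * hardware computes a Toeplitz hash with a secret key. */
uint32_t flow_hash(const struct flow4 *f)
{
    const uint32_t words[3] = {
        f->saddr, f->daddr, ((uint32_t)f->sport << 16) | f->dport
    };
    uint32_t h = 2166136261u;

    for (size_t i = 0; i < 3; i++) {
        for (int b = 0; b < 4; b++) {
            h ^= (words[i] >> (8 * b)) & 0xffu;
            h *= 16777619u;
        }
    }
    return h;
}

/* Pick an RX queue via the indirection table: hash -> table slot -> queue. */
unsigned rss_queue(const struct flow4 *f, const uint8_t *indir, size_t indir_len)
{
    assert(indir != NULL && indir_len > 0);
    return indir[flow_hash(f) % indir_len];
}
```

Packets of one flow always land on the same queue, so per-flow ordering is kept, while different flows spread over all the queues. If the table only ever points at queue 0, or the device runs with a single queue, every packet is processed on one CPU, which is the situation described above.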

I will keep digging into this vmcore later.

In a vmcore, a double pointer can be inspected as follows.

To see what a double pointer finally points to, first use rd to fetch the value of the first-level pointer, then use struct <struct name> <addr> to dump the actual contents.

    crash> files 1076
    PID: 1076   TASK: ffff882034f68000  CPU: 9   COMMAND: "python"
    ROOT: /    CWD: /
     FD       FILE            DENTRY           INODE       TYPE PATH
      0 ffff88203480a300 ffff88107f4e00c0 ffff88103f99b700 CHR  /dev/null
      1 ffff88203480a300 ffff88107f4e00c0 ffff88103f99b700 CHR  /dev/null
      2 ffff88203480a300 ffff88107f4e00c0 ffff88103f99b700 CHR  /dev/null
      3 ffff881036921e00 ffff8810375449c0 ffff88107f4526b0 SOCK
      4 ffff881036921ec0 ffff881037544480 ffff882078c00980 UNKN [eventpoll]
      5 ffff881037149bc0 ffff8810377c3800 ffff88107f452ef0 SOCK
      6 ffff881037149ec0 ffff8810377c20c0 ffff88107f42fbf0 SOCK
      7 ffff8810371480c0 ffff8810377c3740 ffff88107f42f930 SOCK
      8 ffff881037148c00 ffff8810377c2cc0 ffff88107f7fe330 SOCK
      9 ffff8810371495c0 ffff8810377c3a40 ffff88107f7fa970 SOCK
     10 ffff8820364ae600 ffff88201d7223c0 ffff88203bcd73b0 SOCK
     11 ffff8820364aea80 ffff88201d723680 ffff88203bcd7670 SOCK
     12 ffff8820364af200 ffff88201d723740 ffff88203bcd7930 SOCK
     13 ffff8820364af380 ffff88201d723800 ffff88203bcd7bf0 SOCK
     14 ffff88203eba15c0 ffff88201ccc4240 ffff88203be53730 SOCK
     15 ffff88203eba1140 ffff88201ccc4300 ffff88203be539f0 SOCK
     16 ffff88203eba0300 ffff88201ccc43c0 ffff88203be53cb0 SOCK
     17 ffff88203eba0a80 ffff88201ccc4480 ffff88203be53f70 SOCK
     18 ffff88203eba1740 ffff88201ccc4540 ffff88203be54230 SOCK
     19 ffff88203eba0000 ffff88201ccc4600 ffff88203be544f0 SOCK
     20 ffff88203eba1500 ffff88201ccc46c0 ffff88203be547b0 SOCK
     21 ffff88203eba0600 ffff88201ccc4780 ffff88203be54a70 SOCK
     22 ffff88203eba1b00 ffff88201ccc4840 ffff88203be54d30 SOCK
     23 ffff88203eba18c0 ffff88201ccc4900 ffff88203be54ff0 SOCK
     24 ffff88203eba0fc0 ffff88201ccc49c0 ffff88203be552b0 SOCK
     25 ffff88203aac9440 ffff88201ccc4a80 ffff88203be55570 SOCK
     26 ffff88203aac8c00 ffff88201ccc4b40 ffff88203be55830 SOCK
     27 ffff88203aac86c0 ffff88201ccc4c00 ffff88203be55af0 SOCK
     28 ffff88203aac8780 ffff88201ccc4cc0 ffff88203be55db0 SOCK
     29 ffff88203aac95c0 ffff88201ccc4d80 ffff88203be56070 SOCK
     30 ffff88203aac83c0 ffff88201ccc4e40 ffff88203be56330 SOCK
     31 ffff88203aac9680 ffff88201ccc4f00 ffff88203be565f0 SOCK
     32 ffff88203aac9800 ffff88201ccc4fc0 ffff88203be568b0 SOCK
     33 ffff88203aac8d80 ffff88201ccc5080 ffff88203be56b70 SOCK
     34 ffff88203aac8900 ffff88201ccc5140 ffff88203be56e30 SOCK
     35 ffff88203aac8f00 ffff88201ccc5200 ffff88203be570f0 SOCK
     36 ffff88203aac8180 ffff88201ccc52c0 ffff88203be573b0 SOCK

    crash> struct task_struct.files ffff882034f68000
      files = 0xffff8820779739c0

    crash> struct files_struct 0xffff8820779739c0
    struct files_struct {
      count = {
        counter = 3
      },
      fdt = 0xffff8820779739d0,
      fdtab = {
        max_fds = 64,
        fd = 0xffff882077973a58,
        close_on_exec = 0xffff882077973a48,
        open_fds = 0xffff882077973a50,
        rcu = {
          next = 0x0,
          func = 0xffffffff810e801b <free_fdtable_rcu>
        },
        next = 0x0
      },
      file_lock = {
        {
          rlock = {
            raw_lock = {
              slock = 3753500601
            }
          }
        }
      },
      next_fd = 37,
      close_on_exec_init = {
        fds_bits = {0}
      },
      open_fds_init = {
        fds_bits = {137438953471}
      },
      fd_array = {0xffff88203480a300, 0xffff88203480a300, 0xffff88203480a300, 0xffff881036921e00,
        0xffff881036921ec0, 0xffff881037149bc0, 0xffff881037149ec0, 0xffff8810371480c0,
        0xffff881037148c00, 0xffff8810371495c0, 0xffff8820364ae600, 0xffff8820364aea80,
        0xffff8820364af200, 0xffff8820364af380, 0xffff88203eba15c0, 0xffff88203eba1140,
        0xffff88203eba0300, 0xffff88203eba0a80, 0xffff88203eba1740, 0xffff88203eba0000,
        0xffff88203eba1500, 0xffff88203eba0600, 0xffff88203eba1b00, 0xffff88203eba18c0,
        0xffff88203eba0fc0, 0xffff88203aac9440, 0xffff88203aac8c00, 0xffff88203aac86c0,
        0xffff88203aac8780, 0xffff88203aac95c0, 0xffff88203aac83c0, 0xffff88203aac9680,
        0xffff88203aac9800, 0xffff88203aac8d80, 0xffff88203aac8900, 0xffff88203aac8f00,
        0xffff88203aac8180, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0,
        0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0}
    }

    crash> struct files_struct
    struct files_struct {
        atomic_t count;
        struct fdtable *fdt;
        struct fdtable fdtab;
        spinlock_t file_lock;
        int next_fd;
        struct embedded_fd_set close_on_exec_init;
        struct embedded_fd_set open_fds_init;
        struct file *fd_array[64];
    }
    SIZE: 704

    crash> struct files_struct.fdt 0xffff8820779739c0
      fdt = 0xffff8820779739d0

    crash> struct fdtable 0xffff8820779739d0
    struct fdtable {
      max_fds = 64,
      fd = 0xffff882077973a58,
      close_on_exec = 0xffff882077973a48,
      open_fds = 0xffff882077973a50,
      rcu = {
        next = 0x0,
        func = 0xffffffff810e801b <free_fdtable_rcu>
      },
      next = 0x0
    }

    crash> rd 0xffff882077973a58
    ffff882077973a58:  ffff88203480a300    ...4 ...    fd[0]
    crash> rd 0xffff882077973a60
    ffff882077973a60:  ffff88203480a300    ...4 ...
    crash> rd 0xffff882077973a68
    ffff882077973a68:  ffff88203480a300    ...4 ...
    crash> rd 0xffff882077973a70
    ffff882077973a70:  ffff881036921e00    ...6....    fd[3]
    crash>
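The rd addresses used above follow from plain pointer arithmetic: fdt->fd is a struct file **, so slot i sits at fd + i * 8 on this 64-bit kernel, and one read of that slot yields the struct file * itself. A minimal sketch, with helper and type names that are mine and only there for illustration:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Address of slot i in an array of 64-bit pointers starting at fd_base.
 * This is the address to hand to rd in order to read fd[i]. */
uint64_t fd_slot_addr(uint64_t fd_base, size_t i)
{
    return fd_base + (uint64_t)i * sizeof(uint64_t);
}

/* Userspace analogue of the same double dereference. */
struct file_model { int id; };

struct fdtable_model {
    unsigned int        max_fds;
    struct file_model **fd;     /* pointer to an array of pointers */
};

/* First level: load fdt->fd[i]; the caller then dereferences the result. */
struct file_model *fd_lookup(const struct fdtable_model *fdt, unsigned int i)
{
    assert(fdt != NULL);
    if (i >= fdt->max_fds)
        return NULL;
    return fdt->fd[i];
}
```

With fd = 0xffff882077973a58 from the fdtable dump, slot 3 is at 0xffff882077973a70, which is the last rd shown above, and the value read there (ffff881036921e00) matches the FILE column for FD 3 in the files output.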

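Crash command list

| Command | Purpose |
|---|---|
| * | pointer-to shortcut |
| alias | command aliases |
| ascii | ASCII translation and chart |
| bpf | eBPF (extended Berkeley Packet Filter) |
| bt | backtrace |
| btop | convert a byte address to a page number |
| dev | device data |
| dis | disassemble |
| eval | calculator |
| exit | exit |
| extend | extend the command set |
| files | open files |
| foreach | run a command across multiple tasks |
| fuser | file users |
| gdb | run a gdb command |
| help | help |
| ipcs | System V IPC facilities |
| irq | IRQ data |
| kmem | kernel memory |
| list | linked list |
| log | system message buffer |
| mach | machine-specific data |
| mod | load module symbols |
| mount | mounted filesystem data |
| net | network commands |
| p | print data structures |
| ps | process status |
| pte | page table entry |
| ptob | convert a page number to a byte address |
| ptov | physical to virtual address |
| rd | read memory |
| repeat | repeat a command |
| runq | tasks on the run queues |
| search | search memory |
| set | set the task context and crash internal variables |
| sig | task signal information |
| struct | structure contents |
| swap | swap information |
| sym | symbol and virtual address translation |
| sys | system data |
| task | task_struct and thread_info contents |
| timer | timer queue |
| tree | radix tree and rb-tree |
| union | union contents |
| vm | virtual memory |
| vtop | virtual to physical address |
| waitq | tasks on a wait queue |
| whatis | symbol table lookup |
| wr | write memory |
| q | quit |

Table reproduced from: https://www.jianshu.com/p/ad03152a0a53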
