XDP/AF_XDP and eBPF

Reference book: https://files.cnblogs.com/files/codestack/OReilly-Linux-Observability-with-BPF-2019.rar

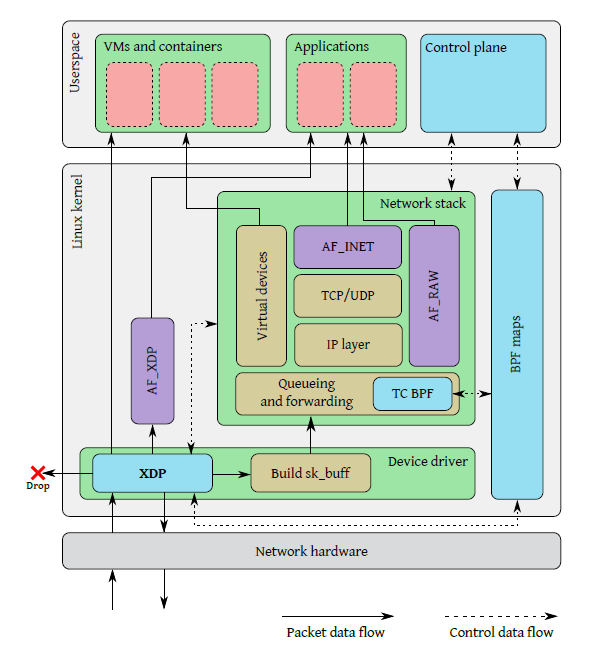

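XDP's overall design consists of the following parts:

- XDP driver hook: a hook point for XDP programs inside the NIC driver. An XDP program can parse a packet layer by layer, filter it by rule, encapsulate or decapsulate it, or modify fields and forward it.

- eBPF virtual machine: once the bytecode is loaded into the kernel, it runs on the eBPF virtual machine.

- BPF maps: key-value stores that serve as the communication channel between user-space programs and in-kernel XDP programs, and between in-kernel XDP programs themselves, similar to shared memory in inter-process communication.

- eBPF verifier: XDP programs are written by us, so how do we make sure one will not crash the kernel or cause other security problems once it is loaded? The verifier runs safety checks on the bytecode before it is loaded into the kernel: whether it contains loops, whether the program exceeds the length limit, whether any memory access is out of bounds, and whether it contains unreachable instructions.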

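When a packet arrives at the NIC, the XDP program runs before the kernel network stack allocates a buffer and stores the packet in an sk_buff structure. It reads the packet-processing rules that the user-space control plane wrote into BPF maps and acts on the packet accordingly: it can drop the packet outright, send it back out the same NIC, or hand it directly to an upper-layer application through the special AF_XDP socket.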
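The flow above can be sketched in plain user-space C. This is not a loadable XDP program (a real one is compiled to BPF bytecode and attached to the driver hook); it only models the decision logic. The names `xdp_like_filter`, `rule_table`, and `blocked_src` are hypothetical, and the rule table is a stand-in for a BPF map. The verdict values mirror `enum xdp_action` in `<linux/bpf.h>`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Verdicts mirroring enum xdp_action from <linux/bpf.h>. */
enum verdict { V_ABORTED = 0, V_DROP, V_PASS, V_TX, V_REDIRECT };

#define ETH_HDR_LEN   14
#define ETHERTYPE_IP4 0x0800
#define IP4_MIN_HDR   20

/* Hypothetical stand-in for a BPF map: source addresses to drop,
 * written by the control plane and read on the packet path. */
struct rule_table {
    uint32_t blocked_src[8];   /* IPv4 addresses in host byte order */
    size_t   count;
};

static int table_contains(const struct rule_table *t, uint32_t addr)
{
    for (size_t i = 0; i < t->count; i++)
        if (t->blocked_src[i] == addr)
            return 1;
    return 0;
}

/* Decide what to do with one frame. Every read is preceded by a length
 * check, the same discipline the verifier demands of a real XDP program. */
enum verdict xdp_like_filter(const uint8_t *data, const uint8_t *data_end,
                             const struct rule_table *rules)
{
    assert(data <= data_end);
    size_t avail = (size_t)(data_end - data);

    if (avail < ETH_HDR_LEN)
        return V_DROP;                       /* truncated Ethernet header */

    uint16_t ethertype = (uint16_t)((data[12] << 8) | data[13]);
    if (ethertype != ETHERTYPE_IP4)
        return V_PASS;                       /* not IPv4: leave it to the stack */

    if (avail < ETH_HDR_LEN + IP4_MIN_HDR)
        return V_DROP;                       /* truncated IPv4 header */

    /* The source address sits at bytes 12..15 of the IPv4 header. */
    const uint8_t *ip = data + ETH_HDR_LEN;
    uint32_t src = ((uint32_t)ip[12] << 24) | ((uint32_t)ip[13] << 16) |
                   ((uint32_t)ip[14] << 8)  |  (uint32_t)ip[15];

    return table_contains(rules, src) ? V_DROP : V_PASS;
}
```

Calling `xdp_like_filter(frame, frame + len, &rules)` returns `V_DROP` for a frame whose source address is in the table and `V_PASS` otherwise. In a real deployment the table would be a BPF map updated from user space while the program keeps running.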

XDP can (as of November 2019):

- Fast incoming packet filtering. XDP can inspect fields in incoming packets and take a simple action: DROP; TX, which sends the packet back out the interface it arrived on; REDIRECT to another interface; or PASS to the kernel stack for processing. XDP can also alter packet data, for example swapping MAC addresses, changing IP addresses, ports, or the ICMP type, and recalculating checksums. Obvious uses include:

- Firewalls (DROP)

- L2/L3 lookup & forward

- NAT: static NAT can be implemented indirectly with two XDP programs, each attached to its own interface and each processing traffic and forwarding it out via the other interface. Connection tracking is possible, but more complicated, because session-related data has to be preserved and exchanged in tables.
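Rewriting an IP address, as static NAT does, also means fixing the IPv4 header checksum. Below is a minimal user-space sketch of that step, assuming a 20-byte header without options. The function names are hypothetical; the arithmetic follows the one's-complement checksum of RFC 1071 and the incremental update of RFC 1624. A real XDP program would do the same thing on the packet buffer, after its bounds checks.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One's-complement sum of the header read as big-endian 16-bit words,
 * folded to 16 bits and inverted (IPv4 header checksum, RFC 1071). */
uint16_t ip4_checksum(const uint8_t *hdr, size_t len)
{
    assert(len % 2 == 0);          /* IPv4 headers are a multiple of 4 bytes */
    uint32_t sum = 0;
    for (size_t i = 0; i < len; i += 2)
        sum += (uint32_t)((hdr[i] << 8) | hdr[i + 1]);
    while (sum >> 16)
        sum = (sum & 0xFFFF) + (sum >> 16);
    return (uint16_t)~sum;
}

/* Incremental update for one changed 16-bit word (RFC 1624, eqn. 3):
 * HC' = ~(~HC + ~m + m'). */
uint16_t csum_update16(uint16_t old_csum, uint16_t old_word, uint16_t new_word)
{
    uint32_t sum = (uint16_t)~old_csum;
    sum += (uint16_t)~old_word;
    sum += new_word;
    while (sum >> 16)
        sum = (sum & 0xFFFF) + (sum >> 16);
    return (uint16_t)~sum;
}

/* Static NAT: rewrite the source address of a 20-byte IPv4 header in place
 * and patch the checksum without re-summing the whole header. */
void nat_rewrite_src(uint8_t hdr[20], uint32_t new_src)
{
    uint16_t csum = (uint16_t)((hdr[10] << 8) | hdr[11]);
    for (int i = 0; i < 2; i++) {
        uint16_t old_word = (uint16_t)((hdr[12 + 2 * i] << 8) | hdr[13 + 2 * i]);
        uint16_t new_word = (uint16_t)(new_src >> (16 - 16 * i));
        csum = csum_update16(csum, old_word, new_word);
        hdr[12 + 2 * i] = (uint8_t)(new_word >> 8);
        hdr[13 + 2 * i] = (uint8_t)new_word;
    }
    hdr[10] = (uint8_t)(csum >> 8);
    hdr[11] = (uint8_t)csum;
}
```

After `nat_rewrite_src`, running `ip4_checksum` over the full header (checksum field included) yields 0, which is how a receiver validates it. The incremental form touches only the changed words, which matters on a per-packet fast path.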

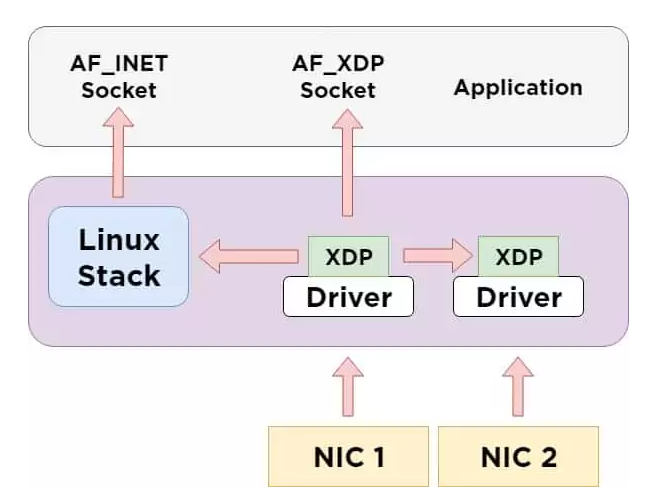

AF_XDP

- Unlike AF_PACKET, AF_XDP moves frames directly to user space without passing through the whole kernel network stack, so they arrive with minimal delay. AF_XDP does not bypass the kernel; it creates an in-kernel fast path.

- It also offers advantages such as zero-copy between kernel space and user space, and offloading of the XDP bytecode to the NIC. AF_XDP can run in interrupt mode as well as polling mode, whereas DPDK poll-mode drivers always poll, which keeps the CPU cores they run on at 100% utilization.
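The difference between the two receive styles can be sketched with ordinary POSIX calls. This is only an illustration on a generic file descriptor (a pipe works for trying it out), not AF_XDP-specific code; `recv_blocking` and `recv_busy` are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <poll.h>
#include <unistd.h>

/* Interrupt style: sleep in poll() until fd is readable, then read once.
 * The CPU is free for other work while nothing arrives. */
ssize_t recv_blocking(int fd, void *buf, size_t len, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    int ready = poll(&pfd, 1, timeout_ms);
    if (ready <= 0)
        return -1;                 /* timeout or error */
    return read(fd, buf, len);
}

/* Polling style: spin on a non-blocking read. Latency is low, but the
 * core is busy the whole time, whether or not data arrives. */
ssize_t recv_busy(int fd, void *buf, size_t len, unsigned long max_spins)
{
    int flags = fcntl(fd, F_GETFL);
    assert(flags != -1 && (flags & O_NONBLOCK));   /* caller must set O_NONBLOCK */

    for (unsigned long i = 0; i < max_spins; i++) {
        ssize_t n = read(fd, buf, len);
        if (n >= 0)
            return n;
        if (errno != EAGAIN && errno != EWOULDBLOCK)
            return -1;             /* real error */
    }
    errno = EAGAIN;
    return -1;                     /* nothing arrived within max_spins tries */
}
```

The trade-off is the one described above: the blocking variant gives the core back to the scheduler, while the spinning variant reacts sooner at the cost of a permanently busy core.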

AF_XDP socket

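```c
/* Pseudocode outline of AF_XDP socket setup; arguments are abbreviated. */
sfd = socket(PF_XDP, SOCK_RAW, 0);

/* Allocate the UMEM and register it with the socket
 * (the real call passes a struct xdp_umem_reg describing buffs). */
buffs = calloc(num_buffs, FRAME_SIZE);
setsockopt(sfd, SOL_XDP, XDP_UMEM_REG, buffs);

/* Set the size of each of the four rings. */
setsockopt(sfd, SOL_XDP, XDP_{RX|TX|UMEM_FILL|UMEM_COMPLETION}_RING, ring_size);

mmap(..., sfd, ......); /* map kernel rings */

/* Bind to one queue of one interface
 * (the real call takes a struct sockaddr_xdp). */
bind(sfd, "/dev/eth0", queue_id, ....);

for (;;) {
    read_process_send_messages(sfd);
}
```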

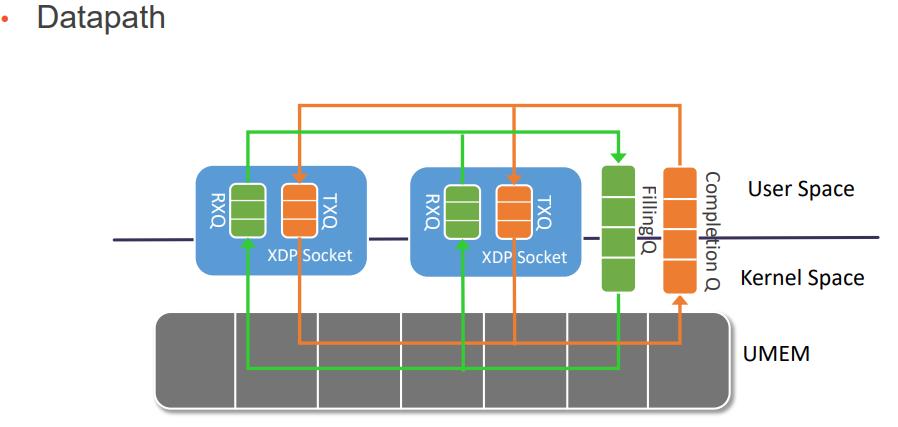

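So an AF_XDP socket is created with the familiar socket() system call from network programming; only the arguments need special values. Once created, each socket has its own RX ring and TX ring. Both rings hold descriptors, each of which contains the address in the UMEM where the frame is actually stored.

The UMEM also has two rings, the FILL ring and the COMPLETION ring. They closely resemble the receive and transmit rings that a traditional network I/O path fills in for DMA. On the RX path, the user application puts the addresses of receive buffers into the FILL ring; the kernel places each received packet at one of those addresses and puts the corresponding descriptor into the socket's RX ring.

The COMPLETION ring, however, does not hold the addresses of frames the application is "about to" send; it holds the addresses of frames whose transmission has completed. Those frames can then be reused for sending or receiving. The addresses of frames that are "about to" be sent go into the socket's TX ring, also filled in by the user application. The relationship between RX/TX rings and FILL/COMPLETION rings is many-to-one (n:1): multiple sockets, each with its own RX/TX rings, can share one UMEM and its FILL/COMPLETION rings.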
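The life cycle of a frame across the four rings can be modeled in a few dozen lines of user-space C. This is a toy model, not the kernel API: `xsk_model`, `kernel_receive`, and `kernel_transmit` are hypothetical names, the "kernel" is just a function call, and for brevity all four rings carry full descriptors (in the real interface the FILL and COMPLETION rings carry bare addresses). The `desc` fields match `struct xdp_desc` in `<linux/if_xdp.h>`.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define RING_SIZE  4u            /* must be a power of two */
#define FRAME_SIZE 2048u
#define NUM_FRAMES 4u

/* Same fields as struct xdp_desc: offset into the UMEM, length, flags. */
struct desc { uint64_t addr; uint32_t len; uint32_t options; };

/* Single-producer/single-consumer ring with free-running indices. */
struct ring {
    uint32_t producer, consumer;
    struct desc slots[RING_SIZE];
};

int ring_push(struct ring *r, struct desc d)
{
    if (r->producer - r->consumer == RING_SIZE)
        return -1;                                   /* full */
    r->slots[r->producer++ & (RING_SIZE - 1)] = d;
    return 0;
}

int ring_pop(struct ring *r, struct desc *out)
{
    if (r->producer == r->consumer)
        return -1;                                   /* empty */
    *out = r->slots[r->consumer++ & (RING_SIZE - 1)];
    return 0;
}

struct xsk_model {
    uint8_t umem[NUM_FRAMES * FRAME_SIZE];
    struct ring fill, completion;   /* belong to the UMEM */
    struct ring rx, tx;             /* belong to the socket */
};

/* Kernel side, RX: take a free frame from FILL, copy the packet in,
 * and announce it on RX. */
int kernel_receive(struct xsk_model *x, const void *pkt, uint32_t len)
{
    struct desc d;
    assert(len <= FRAME_SIZE);
    if (ring_pop(&x->fill, &d) != 0)
        return -1;                  /* user space posted no buffer: drop */
    memcpy(x->umem + d.addr, pkt, len);
    d.len = len;
    return ring_push(&x->rx, d);
}

/* Kernel side, TX: "send" one frame queued on TX, then hand its address
 * back through COMPLETION so user space can reuse it. */
int kernel_transmit(struct xsk_model *x)
{
    struct desc d;
    if (ring_pop(&x->tx, &d) != 0)
        return -1;                  /* nothing queued */
    d.len = 0;
    return ring_push(&x->completion, d);
}
```

A round trip looks like this: user space pushes a frame address onto `fill`, `kernel_receive` moves it to `rx` with data, user space pops it, processes it, pushes it onto `tx`, and after `kernel_transmit` the same address reappears on `completion`, ready to go back onto `fill`.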

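XDP, eBPF, maps, AF_PACKET, whatever else: in the end it all comes down to user space and the kernel sharing the same piece of memory, with both sides able to help themselves to it!

What is communication? It is data exchange, which is to say, shuffling memory around!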

References:

https://www.kernel.org/doc/html/v4.18/networking/af_xdp.html

https://www.dpdk.org/wp-content/uploads/sites/35/2018/10/pm-06-DPDK-PMD-for-AF_XDP.pdf

https://pantheon.tech/what-is-af_xdp/

https://www.kernel.org/doc/html/latest/networking/af_xdp.html
