tcp 接收被动关闭 fin

void tcp_rcv_established(struct sock *sk, struct sk_buff *skb, const struct tcphdr *th, unsigned int len)

主要是处理已经连理连接的输入的tcp数据包。tcp_rcv_established实际上包含了两条路径用于处理不同目的的数据包。

- 快速路径 使用快速路径只进行最少的处理,如处理数据段、发生ACK、存储时间戳等。

- 慢速路径 使用慢速路径可以处理乱序数据段、PAWS、socket内存管理和紧急数据等。

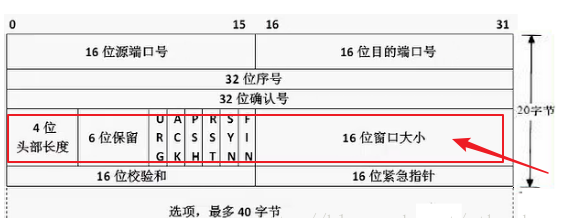

主要是:TCP首部中第4个32位字除去保留的bit位和预测标志一致,比如:skb包的序列号和sock结构下一个要接收到序号相等,并且skb包中的确认序列号是有效的,除了head_len,只有为1的bit位对应的是ACK标志其余为0,snd_wnd则是本端发送窗口的大小。就走快速路径;毕竟是预期的报文!!

但是如果设置了FIN标志的话,则检查预测标志时失败,所以会在慢速路径中处理FIN包。

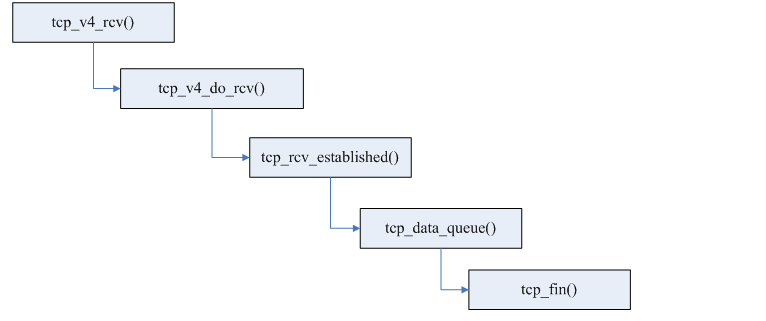

其流程大约是:

if (TCP_SKB_CB(skb)->tcp_flags & TCPHDR_FIN) tcp_fin(sk);

/* * Process the FIN bit. This now behaves as it is supposed to work * and the FIN takes effect when it is validly part of sequence * space. Not before when we get holes. * * If we are ESTABLISHED, a received fin moves us to CLOSE-WAIT * (and thence onto LAST-ACK and finally, CLOSE, we never enter * TIME-WAIT) * * If we are in FINWAIT-1, a received FIN indicates simultaneous * close and we go into CLOSING (and later onto TIME-WAIT) * * If we are in FINWAIT-2, a received FIN moves us to TIME-WAIT. */ static void tcp_fin(struct sock *sk)TCP_FIN_TIM { struct tcp_sock *tp = tcp_sk(sk); inet_csk_schedule_ack(sk);//表明需要发送ack sk->sk_shutdown |= RCV_SHUTDOWN; //表明rcv 关闭 关闭接收通道 sock_set_flag(sk, SOCK_DONE);//sock结构的标志,表示连接将要结束 switch (sk->sk_state) { case TCP_SYN_RECV: case TCP_ESTABLISHED: /* Move to CLOSE_WAIT */ tcp_set_state(sk, TCP_CLOSE_WAIT);//设置为 close_wait 状态 inet_csk(sk)->icsk_ack.pingpong = 1;//设置延迟发送ACK的标志----也就是进入延迟确认模式 -----期望发送数据或FIN时携带ACK确认 /*tcp_fin()中虽然设置了发送ACK的相关标志,但是要有一个引发ACK发送的操作,或者是给内核发送ACK的一个提示。 这个操作是在上层函数tcp_rcv_established()函数中进行的,通过间接调用__tcp_ack_snd_check()中完成 */ break; case TCP_CLOSE_WAIT: case TCP_CLOSING: /* Received a retransmission of the FIN, do * nothing. */ break; case TCP_LAST_ACK: /* RFC793: Remain in the LAST-ACK state. */ break; case TCP_FIN_WAIT1: /* This case occurs when a simultaneous close * happens, we must ack the received FIN and * enter the CLOSING state. */ tcp_send_ack(sk); tcp_set_state(sk, TCP_CLOSING); break; case TCP_FIN_WAIT2: /* Received a FIN -- send ACK and enter TIME_WAIT. */ tcp_send_ack(sk); tcp_time_wait(sk, TCP_TIME_WAIT, 0); break; default: /* Only TCP_LISTEN and TCP_CLOSE are left, in these * cases we should never reach this piece of code. */ pr_err("%s: Impossible, sk->sk_state=%d\n", __func__, sk->sk_state); break; } /* It _is_ possible, that we have something out-of-order _after_ FIN. * Probably, we should reset in this case. For now drop them. 清理乱序队列、状态更改时可能要唤醒相关进程 乱序队列数据直接干掉哦!!! 之前正常的序列还在 ---- */ __skb_queue_purge(&tp->out_of_order_queue); if (tcp_is_sack(tp))//开启了SACK选项 tcp_sack_reset(&tp->rx_opt);//清除SACK选项信息,因为对端已经不会再发送数据了 sk_mem_reclaim(sk); if (!sock_flag(sk, SOCK_DEAD)) { sk->sk_state_change(sk);//状态更改时可能要唤醒相关进程 ---sock_def_wakeup /* Do not send POLL_HUP for half duplex close. */ if (sk->sk_shutdown == SHUTDOWN_MASK || sk->sk_state == TCP_CLOSE) sk_wake_async(sk, SOCK_WAKE_WAITD, POLL_HUP); else sk_wake_async(sk, SOCK_WAKE_WAITD, POLL_IN); } }

如果收包队列中只有无数据的FIN包,则收包系统会调用返回0,这时应用进程就知道收到了FIN,对端关闭了连接。如果队列中有数据则系统调用会返回大于0的值 ;

然后 应用进程如果调用事件监听函数(如epoll_wait /poll)等待数据到来,这时会调用tcp_poll函数:

其实现如下:

/* * Wait for a TCP event. * * Note that we don't need to lock the socket, as the upper poll layers * take care of normal races (between the test and the event) and we don't * go look at any of the socket buffers directly. */ unsigned int tcp_poll(struct file *file, struct socket *sock, poll_table *wait) { unsigned int mask; struct sock *sk = sock->sk; const struct tcp_sock *tp = tcp_sk(sk); int state; sock_rps_record_flow(sk); sock_poll_wait(file, sk_sleep(sk), wait); state = sk_state_load(sk); if (state == TCP_LISTEN) return inet_csk_listen_poll(sk); /* Socket is not locked. We are protected from async events * by poll logic and correct handling of state changes * made by other threads is impossible in any case. */ mask = 0; /* * POLLHUP is certainly not done right. But poll() doesn't * have a notion of HUP in just one direction, and for a * socket the read side is more interesting. * * Some poll() documentation says that POLLHUP is incompatible * with the POLLOUT/POLLWR flags, so somebody should check this * all. But careful, it tends to be safer to return too many * bits than too few, and you can easily break real applications * if you don't tell them that something has hung up! * * Check-me. * * Check number 1. POLLHUP is _UNMASKABLE_ event (see UNIX98 and * our fs/select.c). It means that after we received EOF, * poll always returns immediately, making impossible poll() on write() * in state CLOSE_WAIT. One solution is evident --- to set POLLHUP * if and only if shutdown has been made in both directions. * Actually, it is interesting to look how Solaris and DUX * solve this dilemma. I would prefer, if POLLHUP were maskable, * then we could set it on SND_SHUTDOWN. BTW examples given * in Stevens' books assume exactly this behaviour, it explains * why POLLHUP is incompatible with POLLOUT. --ANK * * NOTE. Check for TCP_CLOSE is added. The goal is to prevent * blocking on fresh not-connected or disconnected socket. --ANK */ if (sk->sk_shutdown == SHUTDOWN_MASK || state == TCP_CLOSE) mask |= POLLHUP; if (sk->sk_shutdown & RCV_SHUTDOWN) mask |= POLLIN | POLLRDNORM | POLLRDHUP; /* Connected or passive Fast Open socket? */ if (state != TCP_SYN_SENT && (state != TCP_SYN_RECV || tp->fastopen_rsk)) { int target = sock_rcvlowat(sk, 0, INT_MAX); if (tp->urg_seq == tp->copied_seq && !sock_flag(sk, SOCK_URGINLINE) && tp->urg_data) target++; if (tp->rcv_nxt - tp->copied_seq >= target) mask |= POLLIN | POLLRDNORM; if (!(sk->sk_shutdown & SEND_SHUTDOWN)) { if (sk_stream_is_writeable(sk)) { mask |= POLLOUT | POLLWRNORM; } else { /* send SIGIO later */ sk_set_bit(SOCKWQ_ASYNC_NOSPACE, sk); set_bit(SOCK_NOSPACE, &sk->sk_socket->flags); /* Race breaker. If space is freed after * wspace test but before the flags are set, * IO signal will be lost. Memory barrier * pairs with the input side. */ smp_mb__after_atomic(); if (sk_stream_is_writeable(sk)) mask |= POLLOUT | POLLWRNORM; } } else mask |= POLLOUT | POLLWRNORM; if (tp->urg_data & TCP_URG_VALID) mask |= POLLPRI; } /* This barrier is coupled with smp_wmb() in tcp_reset() */ smp_rmb(); if (sk->sk_err || !skb_queue_empty(&sk->sk_error_queue)) mask |= POLLERR; return mask; }

if (sk->sk_shutdown & RCV_SHUTDOWN) mask |= POLLIN | POLLRDNORM | POLLRDHUP;

其代码中会判断 其 RCV_SHUTDOWN SEND_SHUTDOWN等状态 然后返回对应的掩码!!!

所以POLLRDHUP可以检测到文件是否挂起!!!-----也就是fd 是否被close

http代理服务器(3-4-7层代理)-网络事件库公共组件、内核kernel驱动 摄像头驱动 tcpip网络协议栈、netfilter、bridge 好像看过!!!!

但行好事 莫问前程

--身高体重180的胖子

浙公网安备 33010602011771号

浙公网安备 33010602011771号