Integrating Seata with Spring Cloud

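The article "Distributed Transactions" (《分布式事务》, https://blog.csdn.net/u011060911/article/details/122210788) systematically introduces the theory behind distributed transactions. This article uses code to show how to put that theory into practice in a project.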



Seata is a widely used distributed transaction framework. Our company's project happens to use it, so I wrote this article for reference.

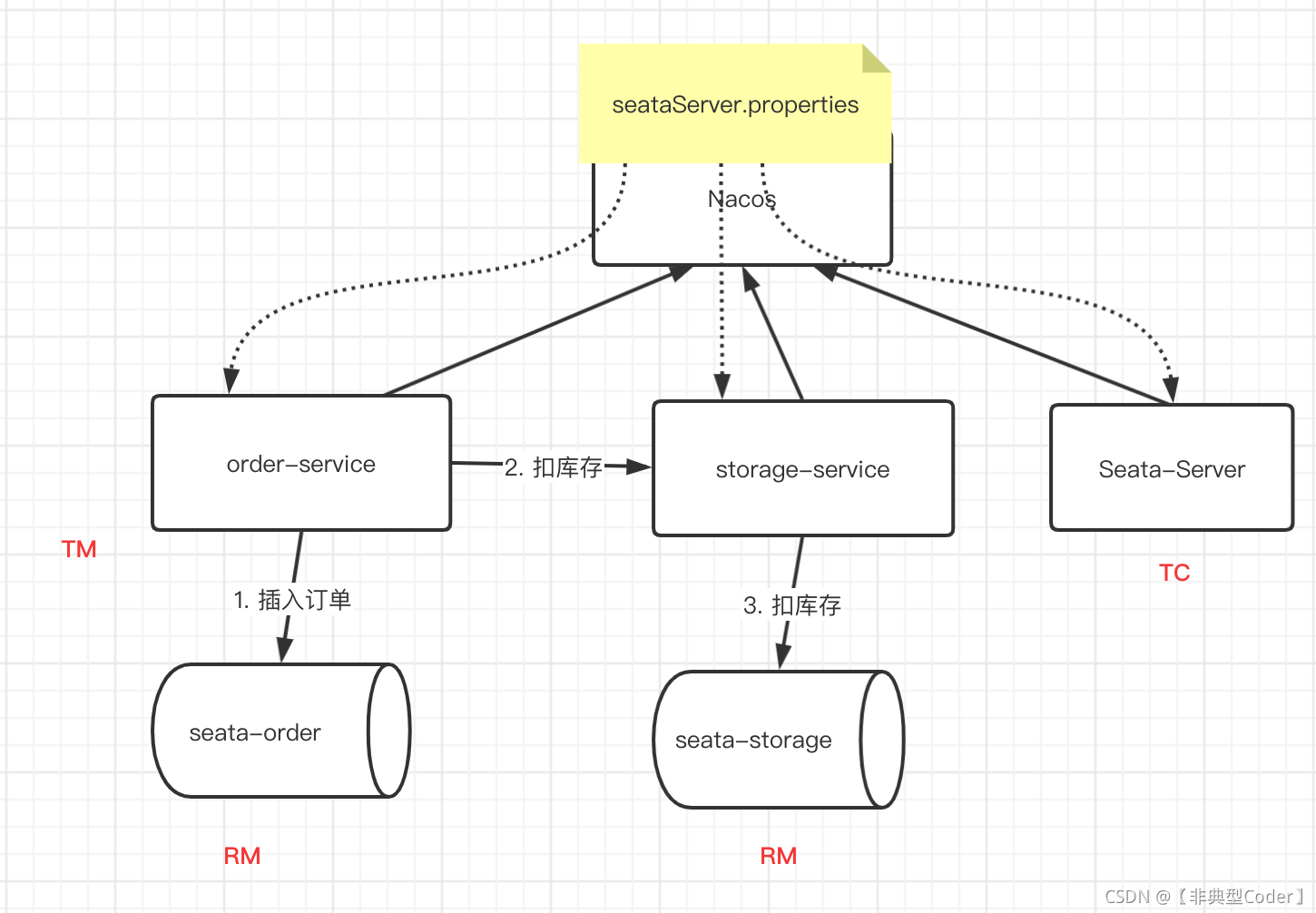

下面就带大家亲手搭建基于 SpringBoot + SpringCloud + Nacos + Seata 这样一套框架。服务见关系图如下:

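I recommend Seata 1.4.2: its configuration can be supplied to Nacos through a single dataId instead of one Nacos entry per key, which saves a lot of trouble and is less error-prone.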

1. Start seata-server

Edit the /conf/registry.conf file:

```
registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8849"
    group = "SEATA_GROUP"
    namespace = "baeba154-3521-468d-b5de-8efb86cbf3d9"
    cluster = "default"
    username = "nacos"
    password = "nacos"
  }
}

config {
  # file, nacos, apollo, zk, consul, etcd3
  type = "nacos"

  nacos {
    serverAddr = "127.0.0.1:8849"
    namespace = "baeba154-3521-468d-b5de-8efb86cbf3d9"
    group = "SEATA_GROUP"
    username = "nacos"
    password = "nacos"
    # this dataId must be created on Nacos
    dataId = "seataServer.properties"
  }
}
```
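With this config block, seata-server reads all of its settings from one Nacos entry: dataId seataServer.properties in group SEATA_GROUP of the given namespace. That entry is usually created in the Nacos console. As an alternative, here is a minimal Java 11 sketch that publishes it over HTTP. It assumes the Nacos 1.x Open API endpoint `POST /nacos/v1/cs/configs` with the form fields `dataId`, `group`, `tenant` (the namespace ID), `type` and `content`. The class name `NacosConfigPublisher` is hypothetical, and obtaining `accessToken` (needed only when Nacos authentication is enabled) is left out.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper: publish a properties file to Nacos via the 1.x Open API (assumed endpoint). */
final class NacosConfigPublisher {

    /** Builds the x-www-form-urlencoded body; "tenant" carries the namespace ID. */
    static String formBody(String dataId, String group, String namespace, String content) {
        Map<String, String> params = new LinkedHashMap<>(); // keeps field order stable
        params.put("dataId", dataId);
        params.put("group", group);
        params.put("tenant", namespace);
        params.put("type", "properties");
        params.put("content", content);
        return params.entrySet().stream()
                .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8) + "="
                        + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
    }

    /** Sends the body to POST /nacos/v1/cs/configs; accessToken may be null when auth is off. */
    static String publish(String serverAddr, String accessToken, String body) throws Exception {
        String url = "http://" + serverAddr + "/nacos/v1/cs/configs"
                + (accessToken == null ? ""
                        : "?accessToken=" + URLEncoder.encode(accessToken, StandardCharsets.UTF_8));
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        return response.body(); // expected to be "true" when the publish succeeds
    }
}
```

Building the body with the namespace and group from registry.conf keeps the console and the server configuration in sync; the file content itself is passed unchanged as `content`.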

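On Nacos, create the seataServer.properties configuration in the namespace and group set in the config block above. Its content can be copied from the template in the Seata GitHub repository (script/config-center/config.txt) and adjusted to your own environment: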

```properties
transport.type=TCP
transport.server=NIO
transport.heartbeat=true
transport.enableClientBatchSendRequest=true
transport.threadFactory.bossThreadPrefix=NettyBoss
transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker
transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler
transport.threadFactory.shareBossWorker=false
transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector
transport.threadFactory.clientSelectorThreadSize=1
transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread
transport.threadFactory.bossThreadSize=1
transport.threadFactory.workerThreadSize=default
transport.shutdown.wait=3
# transaction group -> cluster name: default
service.vgroupMapping.my_test_tx_group=default
service.vgroupMapping.order-service-group=default
service.vgroupMapping.storage-service-group=default
# the TC server address is found via service.<cluster name>.grouplist
service.default.grouplist=127.0.0.1:8091
service.enableDegrade=false
service.disableGlobalTransaction=false
client.rm.asyncCommitBufferLimit=10000
client.rm.lock.retryInterval=10
client.rm.lock.retryTimes=30
client.rm.lock.retryPolicyBranchRollbackOnConflict=true
client.rm.reportRetryCount=5
client.rm.tableMetaCheckEnable=false
client.rm.tableMetaCheckerInterval=60000
client.rm.sqlParserType=druid
client.rm.reportSuccessEnable=false
client.rm.sagaBranchRegisterEnable=false
client.rm.sagaJsonParser=fastjson
client.rm.tccActionInterceptorOrder=-2147482648
client.tm.commitRetryCount=5
client.tm.rollbackRetryCount=5
client.tm.defaultGlobalTransactionTimeout=60000
client.tm.degradeCheck=false
client.tm.degradeCheckAllowTimes=10
client.tm.degradeCheckPeriod=2000
client.tm.interceptorOrder=-2147482648
store.mode=db
store.lock.mode=file
store.session.mode=file
store.db.datasource=druid
store.db.dbType=mysql
store.db.driverClassName=com.mysql.jdbc.Driver
store.db.url=jdbc:mysql://127.0.0.1:3307/seata?useUnicode=true&rewriteBatchedStatements=true
store.db.user=root
store.db.password=123456
store.db.minConn=5
store.db.maxConn=30
store.db.globalTable=global_table
store.db.branchTable=branch_table
store.db.distributedLockTable=distributed_lock
store.db.queryLimit=100
store.db.lockTable=lock_table
store.db.maxWait=5000
server.recovery.committingRetryPeriod=1000
server.recovery.asynCommittingRetryPeriod=1000
server.recovery.rollbackingRetryPeriod=1000
server.recovery.timeoutRetryPeriod=1000
server.maxCommitRetryTimeout=-1
server.maxRollbackRetryTimeout=-1
server.rollbackRetryTimeoutUnlockEnable=false
server.distributedLockExpireTime=10000
client.undo.dataValidation=true
client.undo.logSerialization=jackson
client.undo.onlyCareUpdateColumns=true
server.undo.logSaveDays=7
server.undo.logDeletePeriod=86400000
client.undo.logTable=undo_log
client.undo.compress.enable=true
client.undo.compress.type=zip
client.undo.compress.threshold=64k
log.exceptionRate=100
transport.serialization=seata
transport.compressor=none
metrics.enabled=false
metrics.registryType=compact
metrics.exporterList=prometheus
metrics.exporterPrometheusPort=9898
tcc.fence.logTableName=tcc_fence_log
tcc.fence.cleanPeriod=1h
```
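The two comments in this file describe a two-step lookup: a client's transaction group is mapped to a cluster name through `service.vgroupMapping.<group>`, and the cluster name then leads to the TC address. The sketch below mirrors that lookup with `java.util.Properties`; the class name `TcAddressResolver` is hypothetical. Note that it models the file-style `service.<cluster>.grouplist` step. With registry type nacos, as configured here, the client instead asks Nacos for seata-server instances in that cluster, so `grouplist` only matters in file registry mode.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;
import java.util.stream.Collectors;

/** Hypothetical helper: the vgroup -> cluster -> address lookup described in seataServer.properties. */
final class TcAddressResolver {

    /** Loads properties text, e.g. the content of the seataServer.properties dataId. */
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for an in-memory StringReader
        }
        return props;
    }

    /** Step 1: tx-service-group -> cluster name via service.vgroupMapping.<group>. */
    static String clusterOf(Properties props, String txServiceGroup) {
        String cluster = props.getProperty("service.vgroupMapping." + txServiceGroup);
        if (cluster == null || cluster.isBlank()) {
            throw new IllegalStateException("no service.vgroupMapping entry for " + txServiceGroup);
        }
        return cluster.trim();
    }

    /** Step 2 (file registry mode): cluster name -> TC addresses via service.<cluster>.grouplist. */
    static List<String> tcAddresses(Properties props, String txServiceGroup) {
        String cluster = clusterOf(props, txServiceGroup);
        String grouplist = props.getProperty("service." + cluster + ".grouplist", "");
        return Arrays.stream(grouplist.split(","))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .collect(Collectors.toList());
    }
}
```

With the values above, both storage-service-group and order-service-group resolve to cluster default and then to 127.0.0.1:8091, while a group without a mapping fails immediately.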

Start seata-server:

```shell
./bin/seata-server.sh
```

2. Integrating Seata with Spring Cloud

Note that the Seata dependency must also be version 1.4 or later:

```xml
<dependency>
    <groupId>com.alibaba.nacos</groupId>
    <artifactId>nacos-client</artifactId>
    <version>1.2.0</version>
</dependency>
<dependency>
    <groupId>io.seata</groupId>
    <artifactId>seata-spring-boot-starter</artifactId>
    <version>1.4.2</version>
</dependency>
```

Application configuration (only one service is shown here; the other is similar):

```yaml
logging:
  level:
    io:
      seata: debug
server:
  port: 9092
spring:
  application:
    name: storage-service
  cloud:
    nacos:
      discovery:
        server-addr: 127.0.0.1:8849
        username: nacos
        password: nacos
        namespace: baeba154-3521-468d-b5de-8efb86cbf3d9
        group: SEATA_GROUP
  datasource:
    druid:
      driverClassName: com.mysql.cj.jdbc.Driver
      password: 123456
      url: jdbc:mysql://localhost:3307/seata_storage?serverTimezone=Asia/Shanghai&characterEncoding=utf-8&characterSetResults=utf8&useSSL=true&allowMultiQueries=true
      username: root
seata:
  application-id: ${spring.application.name}
  # corresponds to service.vgroupMapping.storage-service-group=default in seataServer.properties
  tx-service-group: storage-service-group
  enable-auto-data-source-proxy: false
  config:
    type: nacos
    nacos:
      server-addr: 127.0.0.1:8849
      username: nacos
      password: nacos
      namespace: baeba154-3521-468d-b5de-8efb86cbf3d9
      # microservices must be in the same group or they cannot discover each other; seata-server may be in a different group
      group: SEATA_GROUP
      dataId: "seataServer.properties"
  registry:
    type: nacos
    nacos:
      server-addr: 127.0.0.1:8849
      username: nacos
      password: nacos
      namespace: baeba154-3521-468d-b5de-8efb86cbf3d9
      # microservices must be in the same group or they cannot discover each other; seata-server may be in a different group
      group: SEATA_GROUP
```
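Because the registry type is nacos on both sides, the client locates the TC by asking Nacos for healthy instances of the seata-server service (the `application` name in registry.conf) in the cluster its tx-service-group maps to (default here). Issuing the same query by hand is a quick way to check the setup from outside the application. The sketch below builds it against the Nacos 1.x naming Open API, `GET /nacos/v1/ns/instance/list` with `serviceName`, `groupName`, `namespaceId`, `clusters` and `healthyOnly`. The class name `SeataServerLookup` is hypothetical, and `accessToken` is only needed when Nacos authentication is enabled.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: query Nacos for the seata-server instances a client would look up. */
final class SeataServerLookup {

    private static String enc(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }

    /** Builds GET /nacos/v1/ns/instance/list (assumed 1.x endpoint); accessToken may be null. */
    static URI instanceListUri(String serverAddr, String serviceName, String group,
                               String namespaceId, String cluster, String accessToken) {
        return URI.create("http://" + serverAddr + "/nacos/v1/ns/instance/list"
                + "?serviceName=" + enc(serviceName)
                + "&groupName=" + enc(group)
                + "&namespaceId=" + enc(namespaceId)
                + "&clusters=" + enc(cluster)
                + "&healthyOnly=true"
                + (accessToken == null ? "" : "&accessToken=" + enc(accessToken)));
    }

    /** Sends the query and returns the raw JSON response body. */
    static String fetch(URI uri) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
    }
}
```

An empty `hosts` array in the returned JSON points to a mismatch in namespace, group or cluster between what seata-server registered and what the query asks for.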

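3. Start the applications

After both services have started, the following endpoints of the order service (port 9091) exercise the rollback path and the commit path respectively:

http://localhost:9091/order/placeOrder/rollback

http://localhost:9091/order/placeOrder/commit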
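The two endpoints presumably run the same order flow, with the rollback variant failing after both services have written data. The toy below illustrates, in plain Java, the AT-mode idea this demonstrates: each branch saves a before-image of the data it changes (Seata keeps these in the undo_log table), a global commit simply discards those records, and a global rollback restores them in reverse order. It is a hypothetical in-memory model, not Seata's API; the keys `order_count` and `stock` are made up, and persistence, global locks and concurrency are ignored.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Map;

/**
 * Toy model of AT-mode two-phase behaviour (hypothetical, NOT Seata's API):
 * phase 1 applies each change immediately and records a before-image;
 * phase 2 either drops the records (commit) or replays them in reverse (rollback).
 */
final class ToyGlobalTransaction {

    private final Map<String, Integer> db;                 // stands in for the services' tables
    private final Deque<Runnable> undoLog = new ArrayDeque<>();

    ToyGlobalTransaction(Map<String, Integer> db) {
        this.db = db;
    }

    /** One branch write: remember the before-image, then apply the change. */
    void update(String key, int delta) {
        Integer before = db.get(key);
        undoLog.push(() -> {
            if (before == null) {
                db.remove(key);                            // the row did not exist before
            } else {
                db.put(key, before);
            }
        });
        db.put(key, (before == null ? 0 : before) + delta);
    }

    /** Global commit: changes are already applied, so only the undo records are discarded. */
    void commit() {
        undoLog.clear();
    }

    /** Global rollback: restore before-images, newest first. */
    void rollback() {
        while (!undoLog.isEmpty()) {
            undoLog.pop().run();
        }
    }

    /** Mimics /placeOrder/commit (fail = false) and /placeOrder/rollback (fail = true). */
    static void placeOrder(Map<String, Integer> db, boolean fail) {
        ToyGlobalTransaction tx = new ToyGlobalTransaction(db);
        try {
            tx.update("order_count", 1);   // order-service branch (hypothetical key)
            tx.update("stock", -1);        // storage-service branch (hypothetical key)
            if (fail) {
                throw new IllegalStateException("simulated failure after both branches");
            }
            tx.commit();
        } catch (RuntimeException e) {
            tx.rollback();
        }
    }
}
```

Starting from order_count = 0 and stock = 10, the failing call leaves both values unchanged, while the successful call ends with order_count = 1 and stock = 9.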


Zhejiang Public Security Network Record No. 33010602011771
