DAY-03

上午

selenium元素交互操作

——点击、清除

1 from selenium import webdriver 2 from selenium.webdriver import ActionChains # 破解滑动验证码的时候用的 可以拖动图片 3 from selenium.webdriver.common.keys import Keys # 键盘按键操作 4 import time 5 driver = webdriver.Chrome() 6 try: 7 driver.implicitly_wait(10) 8 driver.get('https://www.jd.com/') 9 10 #点击、清除 11 input = driver.find_element_by_id('key') 12 input.send_keys('人间失格') 13 14 #通过class查找搜索 15 search = driver.find_element_by_class_name('button') 16 search.click(); #点击搜索按钮 17 time.sleep(3) 18 19 input2 = driver.find_element_by_id('key') 20 input2.clear() #清空输入框 21 time.sleep(2) 22 23 input2.send_keys('三体') 24 input2.send_keys(Keys.ENTER) 25 time.sleep(5) 26 27 finally: 28 driver.close()

——Actions Chains

动作链对象,需要把driver驱动传给它。

动作链对象可以操作一系列设定好的动作行为

——frame的切换

1.方式一:起始目标向重点目标 瞬间移动

1 from selenium import webdriver 2 from selenium.webdriver import ActionChains # 破解滑动验证码的时候用的 可以拖动图片 3 from selenium.webdriver.common.keys import Keys # 键盘按键操作 4 import time 5 driver = webdriver.Chrome() 6 7 try: 8 driver.implicitly_wait(5) 9 driver.get('http://www.runoob.com/try/try.php?filename=jqueryui-api-droppable') 10 time.sleep(5) 11 12 #frame切换 13 driver.switch_to.frame('ifframeResault') 14 time.sleep(1) 15 16 #起始方块id:draggable 17 action = ActionChains(driver) 18 19 #目标方块id:droppable 20 source = driver.find_element_by_id('draggable') 21 22 target = driver.find_element_by_id('droppable') 23 24 # 起始目标向终点目标 瞬间移动 25 action.drag_and_drop(source,target).perform() 26 time.sleep(10) 27 28 finally: 29 driver.close()

2.方式二:缓慢移动

1 from selenium import webdriver 2 from selenium.webdriver import ActionChains # 破解滑动验证码的时候用的 可以拖动图片 3 from selenium.webdriver.common.keys import Keys # 键盘按键操作 4 import time 5 6 driver = webdriver.Chrome() 7 8 try: 9 driver.implicitly_wait(10) 10 driver.get('https://www.runoob.com/try/try.php?filename=jqueryui-api-droppable') 11 time.sleep(5) 12 13 # frame切换 14 driver.switch_to.frame('iframeResult') 15 time.sleep(1) 16 17 # 起始方块id:draggable 18 source = driver.find_element_by_id('draggable') 19 20 # 目标方块id:droppable 21 target = driver.find_element_by_id('droppable') 22 print(source.size) #大小 23 print(source.tag_name) #标签 24 print(source.text) #文本 25 print(source.location) #坐标:x,y 26 27 # 缓慢移动 28 # 找到移动距离 29 distance = target.location['x'] - source.location['x'] 30 31 # 按住起始目标 32 ActionChains(driver).click_and_hold(source).perform() 33 34 # 移动 35 s = 0 36 while s < distance: 37 ActionChains(driver).move_by_offset(xoffset=2, yoffset=0).perform() 38 s += 2 39 time.sleep(0.1) 40 41 # 松开目标 42 ActionChains(driver).release().perform() 43 time.sleep(10) 44 45 finally: 46 driver.close()

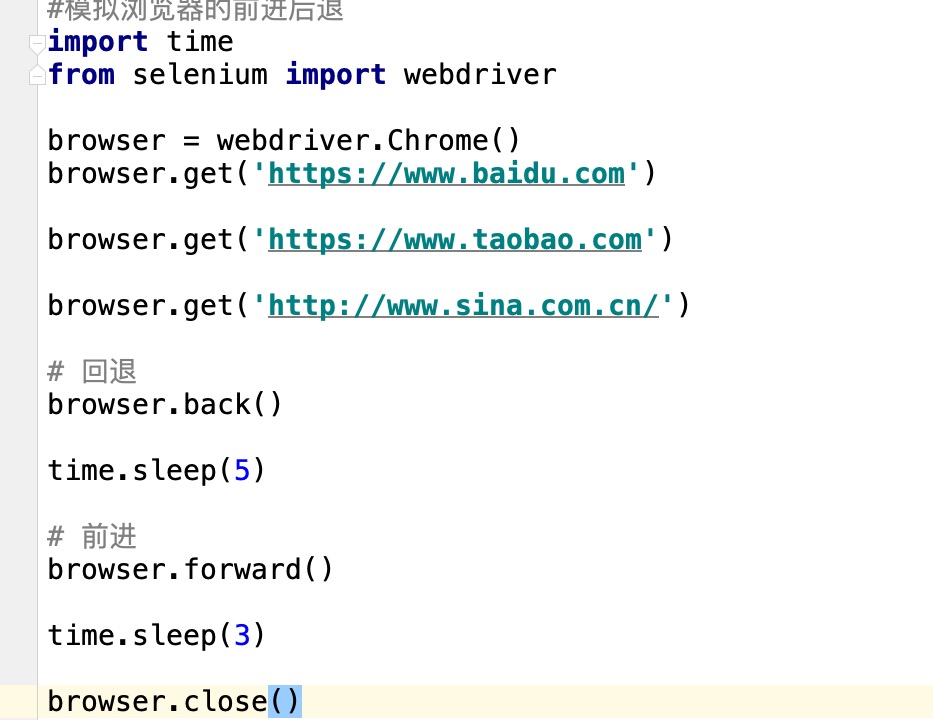

——执行js代码

1 from selenium import webdriver 2 import time 3 4 driver = webdriver.Chrome() 5 6 try: 7 driver.implicitly_wait(10) 8 driver.get('https://www.baidu.com/') 9 driver.execute_script( 10 ''' 11 alert("噜噜噜") 12 ''') 13 time.sleep(5) 14 15 16 finally: 17 driver.close()

爬取京东商品信息

1 from selenium import webdriver 2 from selenium.webdriver.common.keys import Keys # 键盘按键操作 3 import time 4 5 # 6 def get_good(driver): 7 num = 1 8 try: 9 time.sleep(5) 10 11 #下拉滑动5000px 12 js_code = ''' 13 window.scrollTo(0,5000) 14 ''' 15 driver.execute_script(js_code) 16 17 time.sleep(5) # 商品信息加载,等待5s 18 good_list = driver.find_elements_by_class_name('gl-item') 19 for good in good_list: 20 # 商品名称 21 good_name = good.find_element_by_css_selector('.p-name em').text 22 # 商品链接 23 good_url = good.find_element_by_css_selector('.p-name a').get_attribute('href') 24 # 商品价格 25 good_price = good.find_element_by_class_name('p-price').text 26 # 商品评价 27 good_commit = good.find_element_by_class_name('p-commit').text 28 29 good_content = f''' 30 num:{num} 31 商品名称:{good_name} 32 商品链接:{good_url} 33 商品价格:{good_price} 34 商品评论:{good_commit} 35 \n 36 ''' 37 print(good_content) 38 # 保存数据写入文件 39 with open('京东商品信息爬取.txt', 'a', encoding='utf-8') as f: 40 f.write(good_content) 41 num += 1 42 43 # 找到页面下一页点击 44 next_tag = driver.find_element_by_class_name('pn-next') 45 next_tag.click() 46 47 time.sleep(5) 48 #递归调用函数本身 49 get_good(driver) 50 51 finally: 52 driver.close() 53 54 if __name__ == '__main__': 55 driver = webdriver.Chrome() 56 try: 57 driver.implicitly_wait(10) 58 driver.get('https://www.jd.com/') 59 60 input = driver.find_element_by_id('key') 61 input.send_keys('人间失格') 62 input.send_keys(Keys.ENTER) 63 get_good(driver) 64 print('商品信息写入完成') 65 finally: 66 driver.close()

下午

BeautifulSoup4(BS4)

1.什么是bs4?

bs4是一个解析库,可以通过某种解析器来帮我们提取想要的数据。

2.为什么要使用bs4?

它可以通过简洁的语法快速提取用户想要的数据

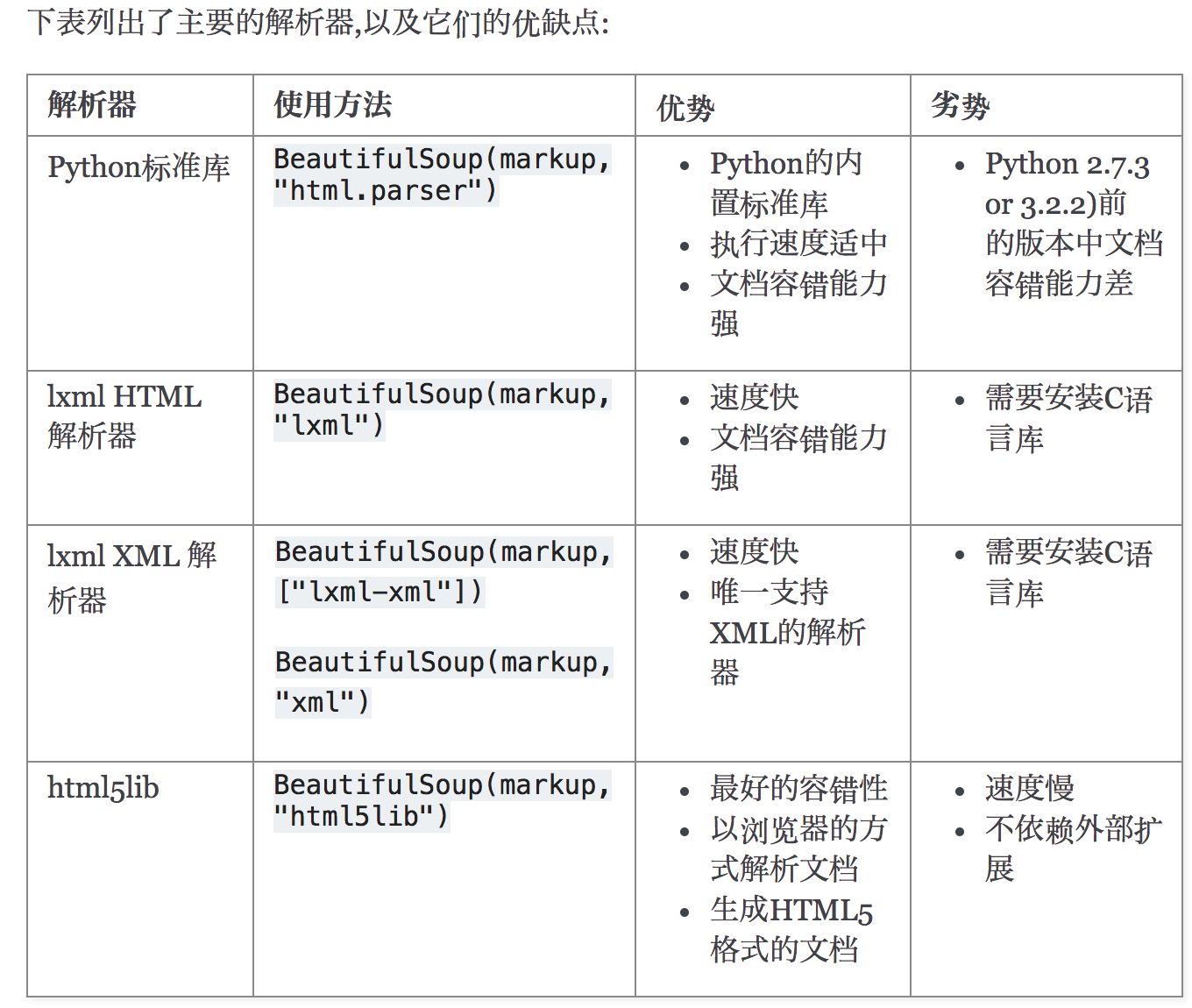

3.解析器的分类

首选:lxml XML

次选:Python标准库

4.bs4的安装与使用

安装解析器:pip install lxml

安装解析库:pip install bs4

html_doc = """ <html><head><title>The Dormouse's story</title></head> <body> <p class="sister"><b>$37</b></p> <p class="story" id="p">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" >Elsie</a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """ from bs4 import BeautifulSoup # python自带的解析库 # soup = BeautifulSoup(html_doc,'html.parser') #调用bs4得到一个对象 soup = BeautifulSoup(html_doc,'lxml') #bs4对象 print(soup) #bs4类型 print(type(soup)) #美化功能 html = soup.prettify()

bs4解析库--搜索文档树

'''''' ''' find:找第一个 find_all:找所有 标签查找与属性查找: name属性匹配: name 标签名 attrs 属性查找匹配 text 文本匹配 标签: - 正则过滤器 re模块匹配 - 列表过滤器 列表内的数据匹配 - bool过滤器 True匹配 - 方法过滤器 用于一些要的属性以及不需要的属性查找。 属性: - class_ - id ''' html_doc = """ <html><head><title>The Dormouse's story</title></head> <body> <p class="sister"><b>$37</b></p> <p class="story" id="p">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" >Elsie</a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """ from bs4 import BeautifulSoup soup = BeautifulSoup(html_doc,'lxml') # # name 标签名 # div = soup.find(name='div') # div_s = soup.find_all(name='div') # # attrs 属性查找匹配 # p = soup.find(attrs={"id":"p"}) # p = soup.find_all(attrs="id","p") # # text 文本匹配 # # name+attrs # p = soup.find(name='div',attrs={"id":"p"}) # # name+text # p = soup.find(name='div',text='j') # - 正则过滤器 import re # re模块匹配 #根据re模块匹配所有带 a 的节点 a = soup.find(name=re.compile('a')) print(a) # - 列表过滤器 # 列表内的数据匹配 print(soup.find(name=['a','p',re.compile('a')])) print(soup.find_all(name=['a','p',re.compile('a')])) # - bool过滤器 # True匹配 print(soup.find(name=True,attrs={"id":True})) print(soup.find_all(name=True,attrs={"id":True})) # - 方法过滤器 # 用于一些要的属性以及不需要的属性查找。 # print(soup.find_all(name='函数对象')) def have_id_not_class(tag): print(tag.name) if tag.name == 'p' and tag.has_attr("id") and not tag.has_attr("class"): return tag print(soup.find_all(name=have_id_not_class)) #补充 a = soup.find(id='link2') print(a) p = soup.find(class_='sister') print(p)

bs4解析库--遍历文档树

html_doc = """ <html><head><title>The Dormouse's story</title></head> <body> <p class="sister"><b>$37</b></p> <p class="story" id="p">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" >Elsie</a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """ from bs4 import BeautifulSoup soup = BeautifulSoup(html_doc,'lxml') # 1、直接使用 √ # print(soup.html) #寻找第一个a标签 # print(soup.a) #寻找第一个p标签 # print(soup.p) # 2、获取标签的名称 # print(soup.a.name) # 3、获取标签的属性 √ # print(soup.a.attrs) #获取a标签中所有的属性 # print(soup.a.attrs['href']) #获取a标签里href属性 # 4、获取标签的内容 √ # print(soup.p.txt) # 5、嵌套选择 # print(soup.html.body.p) # 6、子节点、子孙节点 # print(soup.p.children) #返回迭代器对象 # print(list(soup.p.children)) # 7、父节点、祖先节点 # print(soup.b.parent) #父节点 # print(soup.b.parents) #祖先节点 :<generator object parents at 0x000002999E869308> # 8、兄弟节点 # print(soup.a) # # 获取下一个兄弟节点 # print(soup.a.next_sibling) # # 获取下一个的所有兄弟节点,返回的是一个生成器 # print(soup.a.next_siblings) # print(list(soup.a.next_siblings)) # # 获取上一个兄弟节点 # print(soup.a.previous_sibling) # # 获取上一个的所有兄弟节点,返回的是一个生成器 # print(list(soup.a.previous_siblings))

补充知识点:

yeild值(把值放进生成器中)

def f():

# return 1

yield 1

yield 2

yield 3

g = f()

print(g)

for line in g:

print(line)

浙公网安备 33010602011771号

浙公网安备 33010602011771号