A Brief Look at Java Thread Pools

1. What is a thread pool, and why do we need one?

A thread pool is a container that holds threads: a number of reusable threads wait in this "container" to be scheduled to run tasks.

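As we know, the classic model describes a thread's lifecycle with five states: New, Runnable (ready), Running, Blocked, and Dead. (Java's own Thread.State enum is slightly different: NEW, RUNNABLE, BLOCKED, WAITING, TIMED_WAITING, and TERMINATED.) Without a pool, each task gets its own thread, which is destroyed as soon as the task finishes, and the next task has to create a new thread. A large share of CPU time therefore goes into creating and destroying threads, even though there is no real need to create a thread over and over. A thread pool avoids this by keeping N core threads alive. They can be started up front (for example when the application starts or a batch of tasks begins) or created as the first tasks arrive. When there is no work, these core threads stay idle, blocked while waiting for the next task, and by default they are not released. When a task is submitted, an existing thread from the pool simply picks it up, which saves the CPU time that creating and destroying a thread would cost.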
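To see this reuse in practice, here is a small sketch (the class name ThreadReuseDemo and the method runOnPool are made up for illustration) that hands 100 tasks to a pool created with Executors.newFixedThreadPool(2) and records the name of the thread that ran each task. Only two distinct thread names should show up, because the same two worker threads keep taking new tasks instead of a fresh thread being created per task.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class ThreadReuseDemo {
    // Runs `tasks` tiny tasks on a fixed pool of `poolSize` threads and
    // returns the set of distinct thread names that executed them.
    static Set<String> runOnPool(int poolSize, int tasks) throws InterruptedException {
        Set<String> names = ConcurrentHashMap.newKeySet(); // thread-safe set
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> names.add(Thread.currentThread().getName()));
        }
        pool.shutdown();                             // stop accepting new tasks
        pool.awaitTermination(10, TimeUnit.SECONDS); // wait for queued tasks to finish
        return names;
    }

    public static void main(String[] args) throws InterruptedException {
        Set<String> names = runOnPool(2, 100);
        // 100 tasks, but only the pool's 2 threads ever ran them
        System.out.println(names.size() + " threads served 100 tasks: " + names);
    }
}
```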

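We also use thread pools to make full use of the hardware and make the system more efficient. In addition, a pool gives us a single place to manage running threads, which would otherwise be cumbersome to manage one by one.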

2. The structure of Java thread pools

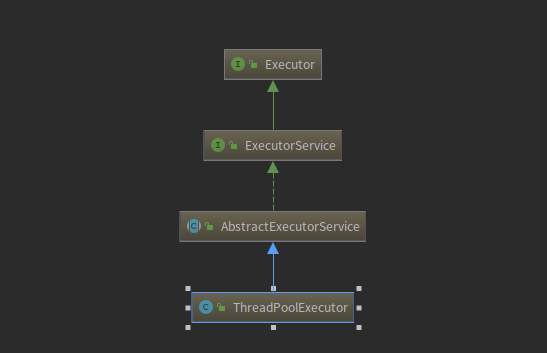

The figure above shows the thread pool hierarchy as it appears in the Java source.

Let's go through these four layers one by one:

Executor Interface

- It is the top-level interface of the thread pool hierarchy and declares just one method: void execute(Runnable).

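- This interface is usually called the executor: you run a task by handing it to an Executor. Its execute method runs the given task at some time in the future, not at a caller-specified time; as the Javadoc below says, the task may run in a new thread, a pooled thread, or the calling thread, depending on the implementation.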

```java
public interface Executor {

    /**
     * Executes the given command at some time in the future. The command
     * may execute in a new thread, in a pooled thread, or in the calling
     * thread, at the discretion of the {@code Executor} implementation.
     *
     * @param command the runnable task
     */
    void execute(Runnable command);
}
```
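To make the options in that Javadoc concrete, here are two minimal Executor implementations modeled on the examples in the JDK's Executor Javadoc: one runs the task directly in the calling thread, the other starts a new thread for every task with no pooling at all. The wrapper class ExecutorExamples and the helper threadNameUsedBy are hypothetical names used only for this sketch.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicReference;

public class ExecutorExamples {
    // Runs each task synchronously in the caller's own thread.
    static class DirectExecutor implements Executor {
        @Override
        public void execute(Runnable command) {
            command.run();
        }
    }

    // Starts a brand-new thread for every task: no pooling, no reuse.
    static class ThreadPerTaskExecutor implements Executor {
        @Override
        public void execute(Runnable command) {
            new Thread(command).start();
        }
    }

    // Hands one task to the executor and reports which thread ran it.
    static String threadNameUsedBy(Executor executor) throws InterruptedException {
        AtomicReference<String> name = new AtomicReference<>();
        CountDownLatch done = new CountDownLatch(1);
        executor.execute(() -> {
            name.set(Thread.currentThread().getName());
            done.countDown();
        });
        done.await(); // wait until the task has actually run, wherever that was
        return name.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("caller:         " + Thread.currentThread().getName());
        System.out.println("DirectExecutor: " + threadNameUsedBy(new DirectExecutor()));
        System.out.println("ThreadPerTask:  " + threadNameUsedBy(new ThreadPerTaskExecutor()));
    }
}
```

A thread pool such as ThreadPoolExecutor is the third option from the Javadoc: it hands the task to one of its existing, pooled threads.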

ExecutorService Interface

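- This interface extends Executor and, built around the concept of a pool, adds two groups of methods: control (submitting tasks and shutting the pool down) and status queries (whether the pool has shut down or terminated, plus task status and results obtained through Future).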

```java
public interface ExecutorService extends Executor {

    void shutdown();

    List<Runnable> shutdownNow();

    boolean isShutdown();

    boolean isTerminated();

    boolean awaitTermination(long timeout, TimeUnit unit)
        throws InterruptedException;

    <T> Future<T> submit(Callable<T> task);

    <T> Future<T> submit(Runnable task, T result);

    Future<?> submit(Runnable task);

    <T> List<Future<T>> invokeAll(Collection<? extends Callable<T>> tasks)
        throws InterruptedException;

    <T> List<Future<T>> invokeAll(Collection<? extends Callable<T>> tasks,
                                  long timeout, TimeUnit unit)
        throws InterruptedException;

    <T> T invokeAny(Collection<? extends Callable<T>> tasks)
        throws InterruptedException, ExecutionException;

    <T> T invokeAny(Collection<? extends Callable<T>> tasks,
                    long timeout, TimeUnit unit)
        throws InterruptedException, ExecutionException, TimeoutException;
}
```
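A short usage sketch of these methods, assuming Java 8 or later (ExecutorServiceDemo and sumWithInvokeAll are illustrative names): submit a Callable and read its result through the returned Future, run a batch of tasks with invokeAll, then close the pool with shutdown() and awaitTermination().

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

public class ExecutorServiceDemo {
    // Sums 1..n on a pool: one Callable per number, collected with invokeAll.
    static long sumWithInvokeAll(int n) throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            List<Callable<Long>> tasks = new ArrayList<>();
            for (long i = 1; i <= n; i++) {
                final long value = i;
                tasks.add(() -> value);
            }
            long sum = 0;
            // invokeAll blocks until every task has completed
            for (Future<Long> f : pool.invokeAll(tasks)) {
                sum += f.get(); // already done, so get() returns immediately
            }
            return sum;
        } finally {
            pool.shutdown();                            // reject new tasks; queued ones still run
            pool.awaitTermination(5, TimeUnit.SECONDS); // wait for the worker threads to exit
        }
    }

    public static void main(String[] args) throws Exception {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        // submit(Callable) returns a Future; get() blocks until the result is ready
        Future<String> future = pool.submit(() -> "hello from " + Thread.currentThread().getName());
        System.out.println(future.get());
        pool.shutdown();
        System.out.println("isShutdown: " + pool.isShutdown());
        System.out.println("terminated: " + pool.awaitTermination(1, TimeUnit.SECONDS));
        System.out.println("sum of 1..100: " + sumWithInvokeAll(100)); // prints 5050
    }
}
```

Calling shutdown() in a finally block is a common pattern: the threads created by the Executors factory methods are non-daemon threads, so a pool that is never shut down can keep the JVM alive after the work is done.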

AbstractExecutorService Abstract Class

- An abstract class that implements the ExecutorService interface.

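- It provides the default task-submission logic: it implements the submit(...) methods and the invokeXXX(...) methods (invokeAll and invokeAny), all built on top of execute(), which it leaves for subclasses to implement.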
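To see what this means in practice, here is a toy subclass (DirectExecutorService is a hypothetical name, not a JDK class) that writes only execute() plus the lifecycle methods; submit(...) and invokeAll(...) are inherited from AbstractExecutorService unchanged. It is a simplified sketch: tasks run synchronously in the caller's thread, so it treats the service as terminated as soon as it has been shut down.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.AbstractExecutorService;
import java.util.concurrent.Callable;
import java.util.concurrent.Future;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.TimeUnit;

// Toy ExecutorService (hypothetical, not a JDK class): runs every task in the caller's thread.
// Only execute() and the lifecycle methods are written here; submit(...), invokeAll(...)
// and invokeAny(...) all come from AbstractExecutorService.
public class DirectExecutorService extends AbstractExecutorService {
    private volatile boolean shutdown = false;

    @Override
    public void execute(Runnable command) {
        if (shutdown) {
            throw new RejectedExecutionException("executor has been shut down");
        }
        command.run(); // synchronous: the task finishes before execute() returns
    }

    @Override
    public void shutdown() {
        shutdown = true;
    }

    @Override
    public List<Runnable> shutdownNow() {
        shutdown = true;
        return Collections.emptyList(); // nothing is ever queued, so nothing to hand back
    }

    @Override
    public boolean isShutdown() {
        return shutdown;
    }

    // Simplification: ignores tasks that may still be running in other callers' threads.
    @Override
    public boolean isTerminated() {
        return shutdown;
    }

    @Override
    public boolean awaitTermination(long timeout, TimeUnit unit) {
        return shutdown; // same simplification as isTerminated()
    }

    public static void main(String[] args) throws Exception {
        DirectExecutorService service = new DirectExecutorService();

        Future<Integer> answer = service.submit(() -> 6 * 7); // inherited submit(Callable)
        System.out.println(answer.get());                      // prints 42

        List<Callable<String>> tasks = new ArrayList<>();
        tasks.add(() -> "a");
        tasks.add(() -> "b");
        for (Future<String> f : service.invokeAll(tasks)) {    // inherited invokeAll
            System.out.println(f.get());
        }
        service.shutdown();
    }
}
```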

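Let me ask a question here: having seen the structure above, in which method do you think the task, that is, the thread's work, actually gets executed?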

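The question comes down to the difference between submit and execute. The top-level interface defines only execute, the executor method, so why does submit exist at all? As we just saw, execute has no return value and accepts only a Runnable. We can hand over a task, but we have no way to learn its result or any problem that occurred while it ran; without that, I would say a thread pool loses much of its point.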
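The difference is easy to see in a small sketch (ExecuteVsSubmit and its helper resultOf are made-up names). execute() gives the caller nothing back, while submit() returns a Future whose get() yields either the task's result or, if the task threw, an ExecutionException whose cause is the original exception. With execute() on a ThreadPoolExecutor, such an exception instead escapes to the worker thread's uncaught-exception handler, which by default just prints the stack trace.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ExecuteVsSubmit {
    // Submits a task and waits for its outcome through the returned Future.
    static int resultOf(ExecutorService pool, Callable<Integer> task)
            throws InterruptedException, ExecutionException {
        Future<Integer> future = pool.submit(task);
        return future.get(); // blocks; a task failure is rethrown as ExecutionException
    }

    public static void main(String[] args) throws Exception {
        ExecutorService pool = Executors.newSingleThreadExecutor();

        // execute(Runnable) returns void: the caller gets no handle to the outcome.
        pool.execute(() -> System.out.println("execute: task ran, nothing came back"));

        // submit(Callable) returns a Future that carries the result...
        System.out.println("submit result: " + resultOf(pool, () -> 1 + 1));

        // ...or the exception the task threw, wrapped in an ExecutionException.
        Callable<Integer> failing = () -> {
            throw new IllegalStateException("boom");
        };
        try {
            resultOf(pool, failing);
        } catch (ExecutionException e) {
            System.out.println("submit captured: " + e.getCause());
        }
        pool.shutdown();
    }
}
```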

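Looking at the source of submit, we can see that it first wraps the task in a RunnableFuture (a FutureTask created by newTaskFor), hands that object to execute to run, and then returns the same object to the caller as a Future. A Future lets the caller block until the task has finished and then retrieve its result. So the task is still executed inside the executor's execute method; the point of submit is to extend execute by returning a handle to the task's outcome.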
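These three steps can be reproduced outside the JDK with a short sketch. SubmitOnTopOfExecute is a hypothetical helper, not a JDK class: it wraps any Executor and follows the same pattern as AbstractExecutorService.submit(Callable), except that it creates the FutureTask directly instead of calling the protected newTaskFor hook.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executor;
import java.util.concurrent.Future;
import java.util.concurrent.FutureTask;
import java.util.concurrent.RunnableFuture;

// Hypothetical helper that layers submit() on top of a plain Executor.
public class SubmitOnTopOfExecute {
    private final Executor executor;

    public SubmitOnTopOfExecute(Executor executor) {
        this.executor = executor;
    }

    public <T> Future<T> submit(Callable<T> task) {
        if (task == null) throw new NullPointerException();
        RunnableFuture<T> ftask = new FutureTask<>(task); // 1. wrap the task
        executor.execute(ftask);                           // 2. the work still happens in execute()
        return ftask;                                      // 3. return the same object as a Future
    }

    public static void main(String[] args) throws InterruptedException, ExecutionException {
        // An Executor that starts a new thread per task, like ThreadPerTaskExecutor in the Javadoc.
        SubmitOnTopOfExecute s = new SubmitOnTopOfExecute(r -> new Thread(r).start());
        Future<String> f = s.submit(() -> "computed in " + Thread.currentThread().getName());
        System.out.println(f.get()); // get() blocks until FutureTask.run() has finished
    }
}
```

Because FutureTask implements both Runnable (so execute() can run it) and Future (so the caller can wait on it), a single object serves both sides, which is why submit needs no execution logic of its own.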

public abstract class AbstractExecutorService implements ExecutorService {

// This source is too long to show conveniently here; it's easier to read it in your IDE