Deploying a Single-Node ZooKeeper on K8s and Monitoring It

0. Before you start

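1> This article does not monitor ZooKeeper on K8s with zookeeper-exporter, mainly because the metrics that exporter provides are incomplete. The full set of metrics ZooKeeper itself defines is listed here: https://github.com/apache/zookeeper/blob/master/zookeeper-server/src/main/java/org/apache/zookeeper/server/ServerMetrics.java. The ZooKeeper documentation also shows that, starting with ZooKeeper 3.6, an official monitoring approach is provided through a built-in Prometheus metrics provider (ZooKeeper docs: https://zookeeper.apache.org/doc/r3.6.4/zookeeperMonitor.html; source of the monitoring doc: https://github.com/apache/zookeeper/blob/master/zookeeper-docs/src/main/resources/markdown/zookeeperMonitor.md). This article therefore enables that provider and uses a ServiceMonitor to have Prometheus scrape ZooKeeper.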

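2> The official ZooKeeper image creates a user and group named zookeeper and runs ZooKeeper under them, whereas in the author's K8s environment the user and group are both root. As a result, mounting a custom ZooKeeper config file over the original one fails with a read-only file / permission denied error. The Deployment therefore has to specify the user and group that ZooKeeper runs as. To find these values, first deploy without specifying a user or group, then open a shell in the container and run the id zookeeper command.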

3> For Prometheus to monitor ZooKeeper, port 7000 (set by metricsProvider.httpPort in zoo.cfg) must be exposed; Prometheus scrapes the metrics from this port.

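4> Both the ZooKeeper deployment and the ServiceMonitor configuration were applied through Kuboard. If you use the command line or another UI, apply the same manifests in the equivalent way.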
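Points 1> and 3> rely on the HTTP endpoint that PrometheusMetricsProvider serves on port 7000. Before wiring up Prometheus it can help to look at that endpoint directly. The following is a minimal sketch, not part of the original setup: the helper names parse_prometheus_text and scrape are hypothetical, the parser is simplified (it ignores timestamps and does not handle every corner of the Prometheus text format), and the sample text in the demo is illustrative rather than real ZooKeeper output.

```python
import urllib.request


def parse_prometheus_text(text: str) -> dict:
    """Parse Prometheus text exposition format into {series: value} (simplified)."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines
        if not line or line.startswith("#"):
            continue
        if "}" in line:
            # Labelled series: label values may contain spaces, so split after the last "}"
            series, rest = line.rsplit("}", 1)
            series += "}"
        else:
            series, _, rest = line.partition(" ")
        # First token after the series is the value; an optional timestamp is ignored
        samples[series] = float(rest.split()[0])
    return samples


def scrape(host: str, port: int = 7000, timeout: float = 5.0) -> dict:
    """Fetch and parse http://host:port/metrics (port = metricsProvider.httpPort)."""
    url = f"http://{host}:{port}/metrics"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_prometheus_text(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Illustrative sample text only, not captured from a real server
    sample = "# TYPE znode_count gauge\nznode_count 5.0\n"
    print(parse_prometheus_text(sample))
```

With something like kubectl -n k8s-middleware port-forward deployment/zookeeper 7000:7000 running, scrape("localhost") should return the live series, including gauges such as znode_count if your ZooKeeper version exports them.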

1.1 Deploy the Deployment

```yaml
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: zk-deployment
  name: zookeeper
  namespace: k8s-middleware
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zk
  template:
    metadata:
      name: zk
      labels:
        app: zk
    spec:
      # The image runs ZooKeeper as the zookeeper user/group, so run the Pod
      # as that user/group (ids from `id zookeeper`) instead of the default
      securityContext:
        fsGroup: 1000
        runAsGroup: 1000
        runAsUser: 1000
      volumes:
        - name: localtime
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
        - configMap:
            defaultMode: 493
            name: zookeeper-configmap
          name: zkconf
      containers:
        - name: zookeeper
          image: zookeeper:3.6.2
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: localtime
              mountPath: /etc/localtime
              readOnly: true
            - mountPath: /conf/zoo.cfg
              name: zkconf
              subPath: zoo.cfg
```
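Two values in this Deployment are easy to misread: the securityContext ids come from the id zookeeper output described in point 2>, and defaultMode: 493 is a decimal file mode. The sketch below, with hypothetical helper names, turns id output into securityContext fields and renders a decimal mode as octal and rwx. The sample id line is an assumption chosen to match the 1000 values used above; check it against your own image.

```python
import re
import stat


def security_context_from_id(output: str) -> dict:
    """Map `id <user>` output (uid=..(..) gid=..(..) ...) to securityContext fields."""
    uid = re.search(r"\buid=(\d+)", output)
    gid = re.search(r"\bgid=(\d+)", output)
    if uid is None or gid is None:
        raise ValueError(f"unexpected id output: {output!r}")
    # runAsUser/runAsGroup set the process identity; fsGroup sets volume group ownership
    return {
        "runAsUser": int(uid.group(1)),
        "runAsGroup": int(gid.group(1)),
        "fsGroup": int(gid.group(1)),
    }


def describe_mode(mode: int) -> str:
    """Render a decimal file mode such as a ConfigMap defaultMode as octal and rwx."""
    # stat.filemode expects a full st_mode, so OR in the regular-file type bit
    return f"{mode} = {oct(mode)} = {stat.filemode(stat.S_IFREG | mode)[1:]}"


if __name__ == "__main__":
    # Assumed output of `id zookeeper` inside the container
    print(security_context_from_id("uid=1000(zookeeper) gid=1000(zookeeper) groups=1000(zookeeper)"))
    print(describe_mode(493))  # 493 = 0o755 = rwxr-xr-x
```

So 493 grants the owner rwx and everyone else r-x on the mounted zoo.cfg.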

```yaml
---
kind: Service
apiVersion: v1
metadata:
  name: zookeeper
  namespace: k8s-middleware
  labels:
    app: zk
spec:
  ports:
    - port: 2181
      name: client
      protocol: TCP
      targetPort: 2181
    - name: metrics
      port: 7000
      protocol: TCP
      targetPort: 7000
  clusterIP: None
  selector:
    app: zk
```
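Because the Service is headless (clusterIP: None), its DNS name resolves directly to the Pod IP rather than to a virtual IP. The sketch below, with a hypothetical helper name, builds the in-cluster addresses for each named port. The cluster domain cluster.local is the common default but is an assumption; your cluster may use a different one.

```python
def service_endpoints(name: str, namespace: str, ports: dict,
                      cluster_domain: str = "cluster.local") -> dict:
    """Return {port_name: "host:port"} for a Service's named ports.

    cluster_domain defaults to "cluster.local", which is an assumption.
    """
    host = f"{name}.{namespace}.svc.{cluster_domain}"
    return {port_name: f"{host}:{port}" for port_name, port in ports.items()}


if __name__ == "__main__":
    # Values taken from the Service manifest above
    for port_name, addr in service_endpoints(
            "zookeeper", "k8s-middleware", {"client": 2181, "metrics": 7000}).items():
        print(port_name, addr)
```

Clients inside the cluster would use the client address as their ZooKeeper connection string; the metrics port is what the ServiceMonitor refers to by its name, metrics.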

```yaml
---
apiVersion: v1
data:
  zoo.cfg: |-
    dataDir=/data
    dataLogDir=/datalog
    clientPort=2181
    tickTime=2000
    initLimit=10
    syncLimit=5
    autopurge.snapRetainCount=10
    autopurge.purgeInterval=24
    maxClientCnxns=600
    standaloneEnabled=true
    admin.enableServer=true
    server.1=localhost:2888:3888
    ## Metrics Providers
    # https://prometheus.io Metrics Exporter
    metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider
    metricsProvider.httpPort=7000
    metricsProvider.exportJvmInfo=true
kind: ConfigMap
metadata:
  name: zookeeper-configmap
  namespace: k8s-middleware
```
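zoo.cfg is a file of key=value lines, and the Prometheus endpoint on port 7000 depends on the two metricsProvider settings above. One pitfall is YAML block style: a folded scalar (>-) without blank lines between entries joins all lines into a single line, which silently breaks the config. A minimal sanity check, with hypothetical helper names and a simplified parser (it only handles key=value and # comments, not every Java properties rule):

```python
PROVIDER = "org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider"


def parse_zoo_cfg(text: str) -> dict:
    """Parse key=value lines, skipping blank lines and # comments."""
    cfg = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key=value line: {raw!r}")
        cfg[key.strip()] = value.strip()
    return cfg


def check_metrics(cfg: dict, expected_port: int = 7000) -> list:
    """Return a list of problems; an empty list means the metrics settings look right."""
    problems = []
    if cfg.get("metricsProvider.className") != PROVIDER:
        problems.append("metricsProvider.className is not the Prometheus provider")
    if cfg.get("metricsProvider.httpPort") != str(expected_port):
        problems.append(f"metricsProvider.httpPort should be {expected_port}")
    return problems


if __name__ == "__main__":
    good = f"dataDir=/data\nmetricsProvider.className={PROVIDER}\nmetricsProvider.httpPort=7000\n"
    print(check_metrics(parse_zoo_cfg(good)))
    # What a folded scalar without blank lines would produce: one long line
    folded = "dataDir=/data clientPort=2181 metricsProvider.httpPort=7000"
    print(check_metrics(parse_zoo_cfg(folded)))
```

The folded case parses as a single dataDir entry, so both metrics checks fail even though the text looks right at a glance.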

```yaml
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: zookeeper-prometheus
  namespace: k8s-middleware
spec:
  endpoints:
    - interval: 1m
      port: metrics
  namespaceSelector:
    matchNames:
      - k8s-middleware
  selector:
    matchLabels:
      app: zk
```
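A ServiceMonitor only picks up the Service if its label selector, namespace selector and endpoint port name all line up with the Service. The sketch below checks those three conditions on plain dicts that mirror the manifests above. The helper name is hypothetical, and it deliberately ignores matchExpressions, targetPort-based endpoints, and the Prometheus resource's own serviceMonitorSelector, which also has to match.

```python
def servicemonitor_matches(sm: dict, svc: dict) -> list:
    """Return reasons the ServiceMonitor would not select the Service (empty = OK)."""
    problems = []
    spec = sm["spec"]
    labels = svc["metadata"].get("labels", {})
    for key, value in spec["selector"].get("matchLabels", {}).items():
        if labels.get(key) != value:
            problems.append(f"Service lacks label {key}={value}")
    # Empty namespaceSelector means the ServiceMonitor's own namespace; any: true means all
    ns_sel = spec.get("namespaceSelector", {})
    svc_ns = svc["metadata"].get("namespace", "default")
    if not ns_sel.get("any", False):
        allowed = ns_sel.get("matchNames") or [sm["metadata"].get("namespace", "default")]
        if svc_ns not in allowed:
            problems.append(f"namespace {svc_ns} not selected")
    # endpoints[].port must be the *name* of a Service port
    port_names = {p.get("name") for p in svc["spec"]["ports"]}
    for ep in spec["endpoints"]:
        if ep.get("port") not in port_names:
            problems.append(f"endpoint port {ep.get('port')!r} is not a named Service port")
    return problems


# Dicts mirroring the Service and ServiceMonitor manifests above
SERVICE = {
    "metadata": {"name": "zookeeper", "namespace": "k8s-middleware", "labels": {"app": "zk"}},
    "spec": {"ports": [{"name": "client", "port": 2181}, {"name": "metrics", "port": 7000}]},
}
SERVICE_MONITOR = {
    "metadata": {"name": "zookeeper-prometheus", "namespace": "k8s-middleware"},
    "spec": {
        "endpoints": [{"interval": "1m", "port": "metrics"}],
        "namespaceSelector": {"matchNames": ["k8s-middleware"]},
        "selector": {"matchLabels": {"app": "zk"}},
    },
}

if __name__ == "__main__":
    print(servicemonitor_matches(SERVICE_MONITOR, SERVICE) or "ServiceMonitor selects the Service")
```

If this reports no problems but the target still does not appear in Prometheus, the next place to look is the Prometheus resource's ServiceMonitor selectors and its permissions in the k8s-middleware namespace.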

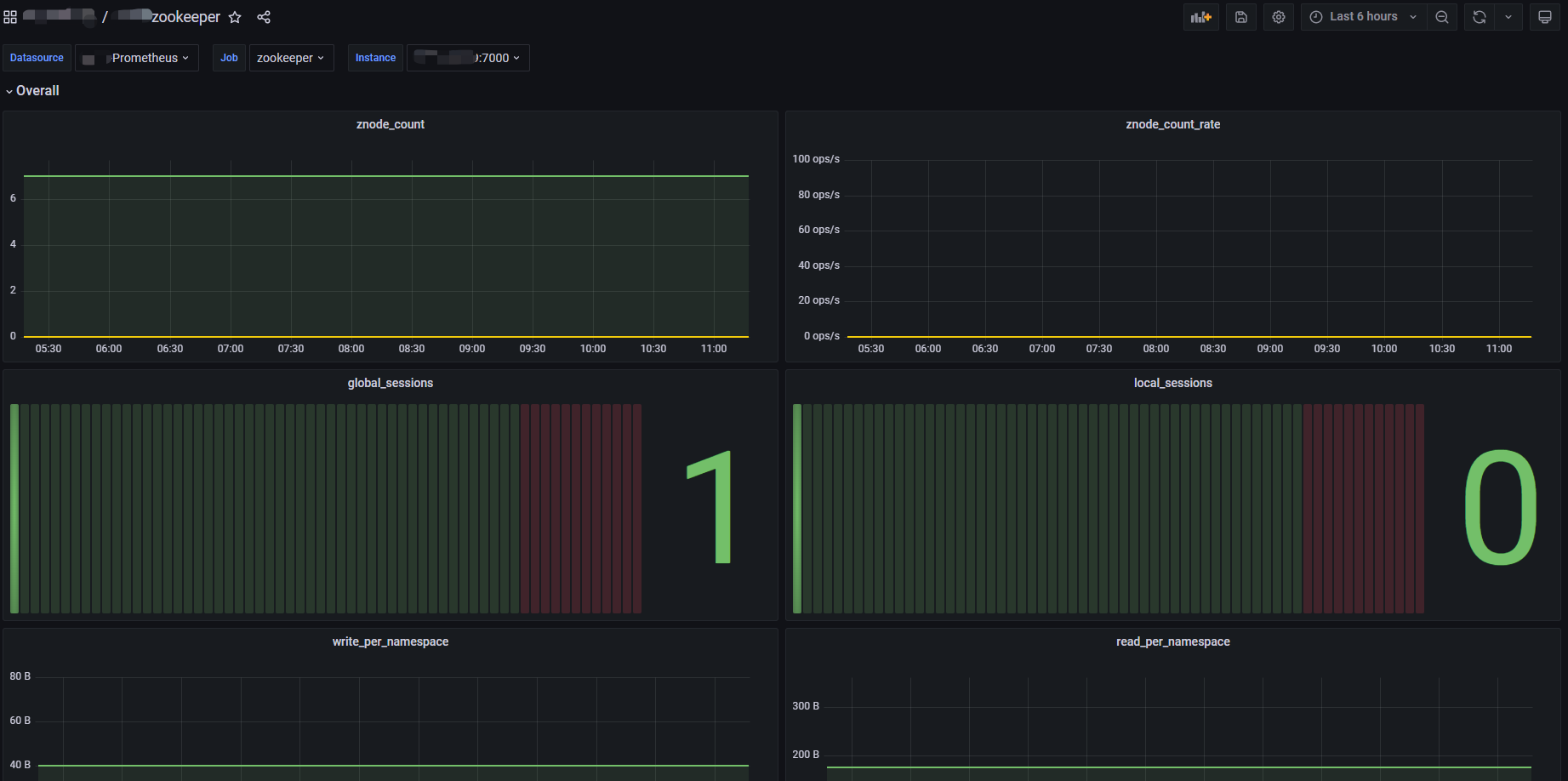

3. Configure the Grafana dashboard

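For the Grafana dashboard, this article uses dashboard ID 10465; see https://grafana.com/grafana/dashboards/10465-zookeeper-by-prometheus/ for details.

When using this dashboard, remember to change the data source in its variables to your own Prometheus data source.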
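Changing the data source can be done in the Grafana UI, but if you keep the downloaded dashboard JSON under version control you can also patch it before importing. A minimal sketch, assuming the dashboard's data source is selected through template variables of type datasource under templating.list; the data source name "Prometheus" and the helper name are hypothetical, and the exact shape of the current field differs between Grafana versions.

```python
import json


def set_datasource(dashboard: dict, ds_name: str) -> int:
    """Point every datasource-type template variable at ds_name; return how many changed."""
    changed = 0
    for var in dashboard.get("templating", {}).get("list", []):
        if var.get("type") == "datasource":
            # Preselect the data source; other variable types are left untouched
            var["current"] = {"text": ds_name, "value": ds_name}
            changed += 1
    return changed


if __name__ == "__main__":
    # Tiny stand-in for a downloaded dashboard JSON, not the real 10465 dashboard
    dash = {"templating": {"list": [{"name": "datasource", "type": "datasource"}]}}
    set_datasource(dash, "Prometheus")
    print(json.dumps(dash, indent=2))
```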
