Monitoring MySQL Outside a k8s Cluster with MySQL Exporter Deployed Inside the Cluster via Prometheus Operator

写在前面

在按照下面步骤操作之前,请先确保服务器已经部署k8s,prometheus,prometheus operator,关于这些环境的部署,可以自行查找相关资料安装部署,本文档便不在此赘述。

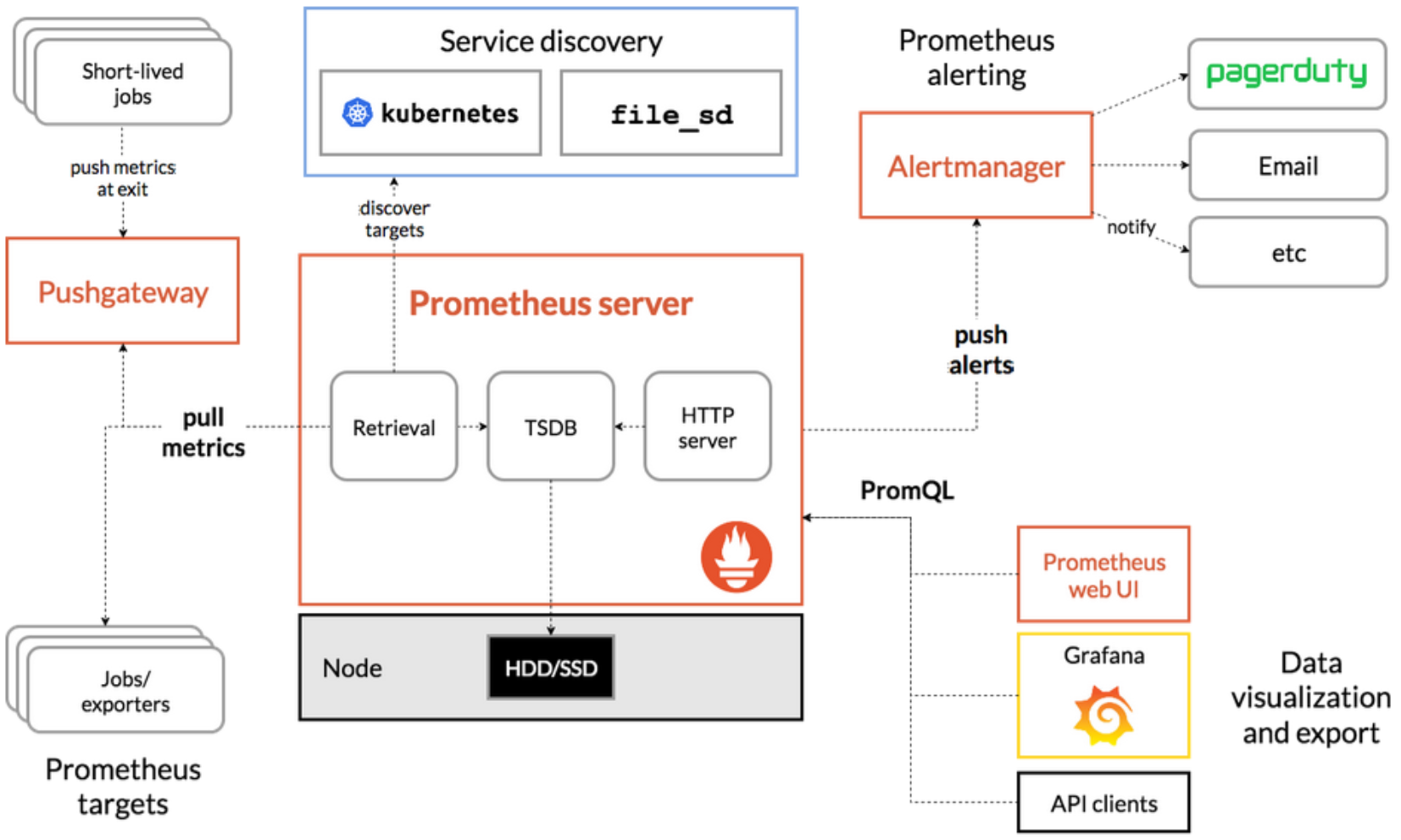

关于prometheus监控这部分,大致的系统架构图如下,感兴趣的同学可以自行研究一下,这里就不再具体说明。

1 Problem description

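Some business systems store their data in MySQL, and that database runs outside the k8s cluster while Prometheus Operator runs inside it. This raises the question of how to monitor a MySQL instance that lives outside the cluster. MySQL metrics can be exposed with the Prometheus mysqld_exporter (called mysql-exporter below). When MySQL is outside the cluster, the exporter still runs as a Deployment inside the cluster, configured with the IP address of the host running MySQL as its data source. The exporter connects to that external instance and exposes its metrics on port 9104, where Prometheus inside the cluster can scrape them.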

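2 Deployment

2.1 Create a monitoring user in MySQL and grant privileges

This step creates the user that mysql-exporter uses to connect to MySQL and grants it the privileges it needs. The SQL is as follows: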

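```sql
# Check the password validation policy so that the password below is accepted
SHOW VARIABLES LIKE 'validate_password%';

# Create the user (exporter in this example). The password must be at least
# validate_password length characters long. The sample password only satisfies the LOW
# policy: MEDIUM and STRONG also require upper- and lower-case letters, a digit and a
# special character. mysql_native_password is deprecated (disabled by default in
# MySQL 8.4, removed in 9.0).
CREATE USER 'exporter'@'%' IDENTIFIED WITH mysql_native_password BY 'admin@321';

# mysqld_exporter only needs read access: grant the privileges listed in its README
# instead of ALL PRIVILEGES ... WITH GRANT OPTION
GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'%';

FLUSH PRIVILEGES;
```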

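2.2 Create the mysql-exporter Deployment in the k8s cluster

Create the mysql-exporter container and pass it the connection details of the MySQL instance (the account created in the previous step) through the DATA_SOURCE_NAME environment variable, in the form `user:password@(host:port)/dbname`. The exporter listens on port 9104, which must be exposed. The manifests in this article assume the `prometheus-exporter` namespace already exists. DATA_SOURCE_NAME is only read by mysqld_exporter releases before v0.15.0, so use an image tag older than that (for example `v0.14.0`) with this configuration; later releases take the address and credentials from a my.cnf file or command-line flags instead.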

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysqld-exporter
  namespace: prometheus-exporter
  labels:
    app: mysqld-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysqld-exporter
  template:
    metadata:
      labels:
        app: mysqld-exporter
    spec:
      containers:
        - name: mysqld-exporter
          # v0.15.0 and later no longer read DATA_SOURCE_NAME, so pin a release that does
          image: prom/mysqld-exporter:v0.14.0
          imagePullPolicy: IfNotPresent
          env:
            # Address and credentials of the monitored database; here the MySQL instance is 10.26.124.16:3306
            - name: DATA_SOURCE_NAME
              value: "exporter:admin@321@(10.26.124.16:3306)/mysql"
          ports:
            - containerPort: 9104
```

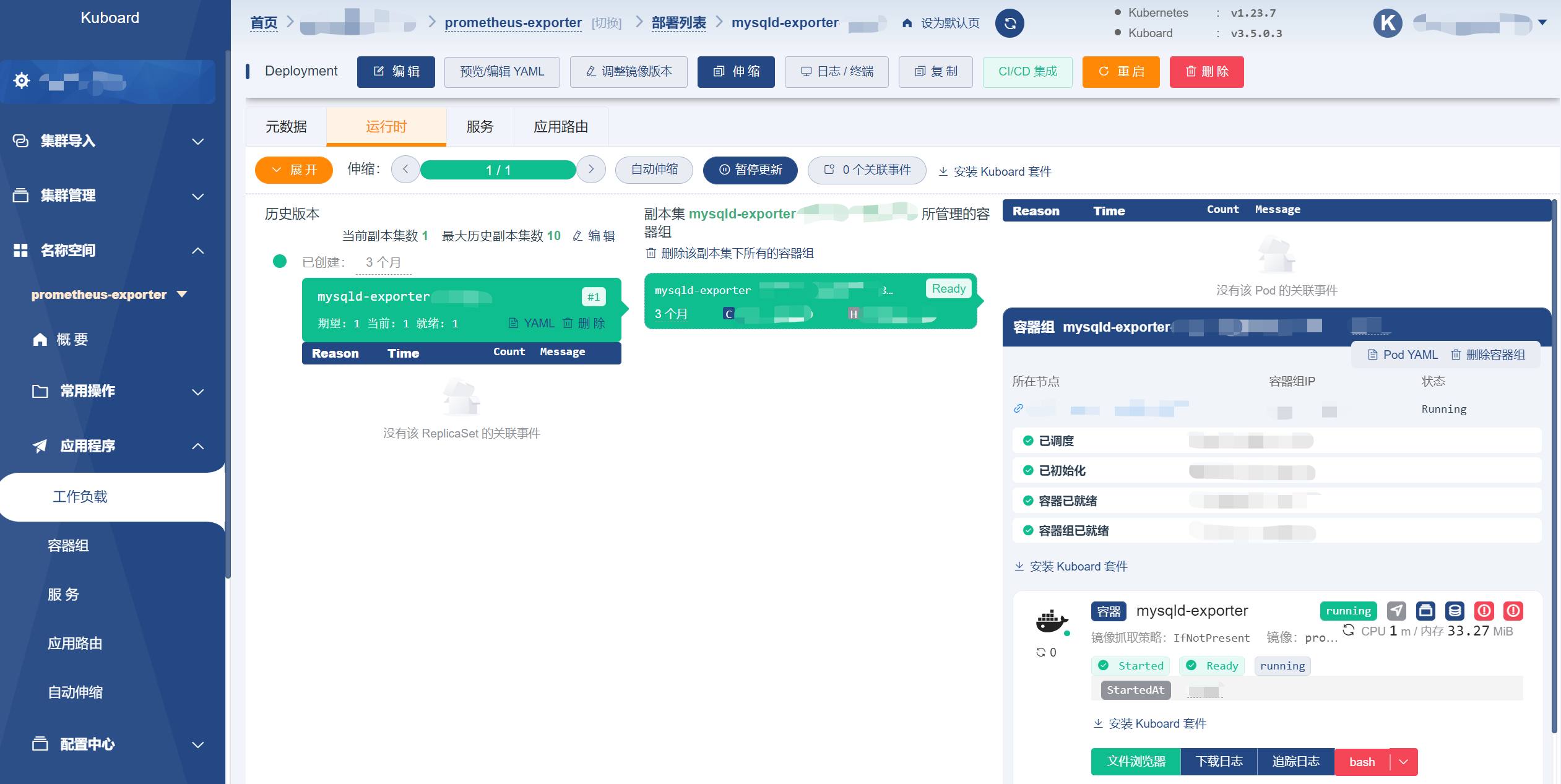

部署成功图如下:
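Writing the password into the Deployment in plain text exposes it to anyone who can read the manifest. One common alternative, sketched below, is to keep the DSN in a Kubernetes Secret and reference it with `secretKeyRef`. The Secret name `mysqld-exporter-dsn` is a hypothetical choice, and the second part is a fragment that replaces the container's `env:` list in the Deployment above, not a standalone manifest.

```yaml
---
# Hypothetical Secret holding the exporter's DSN (name chosen for illustration)
apiVersion: v1
kind: Secret
metadata:
  name: mysqld-exporter-dsn
  namespace: prometheus-exporter
type: Opaque
stringData:
  DATA_SOURCE_NAME: "exporter:admin@321@(10.26.124.16:3306)/mysql"
---
# Fragment (not a standalone manifest): env: list of the mysqld-exporter container
env:
  - name: DATA_SOURCE_NAME
    valueFrom:
      secretKeyRef:
        name: mysqld-exporter-dsn
        key: DATA_SOURCE_NAME
```

Alternatively, `envFrom` with a `secretRef` imports every key of the Secret as an environment variable.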

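2.3 Create the mysql-exporter Service in the k8s cluster

The Service selects the exporter Pod through its `app: mysqld-exporter` label and exposes port 9104 under the port name `metrics`, which the ServiceMonitor in the next step refers to.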

```yaml
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: mysqld-exporter
  name: mysqld-exporter
  namespace: prometheus-exporter
spec:
  type: ClusterIP
  ports:
    - name: metrics
      port: 9104
      protocol: TCP
      targetPort: 9104
  selector:
    app: mysqld-exporter
```

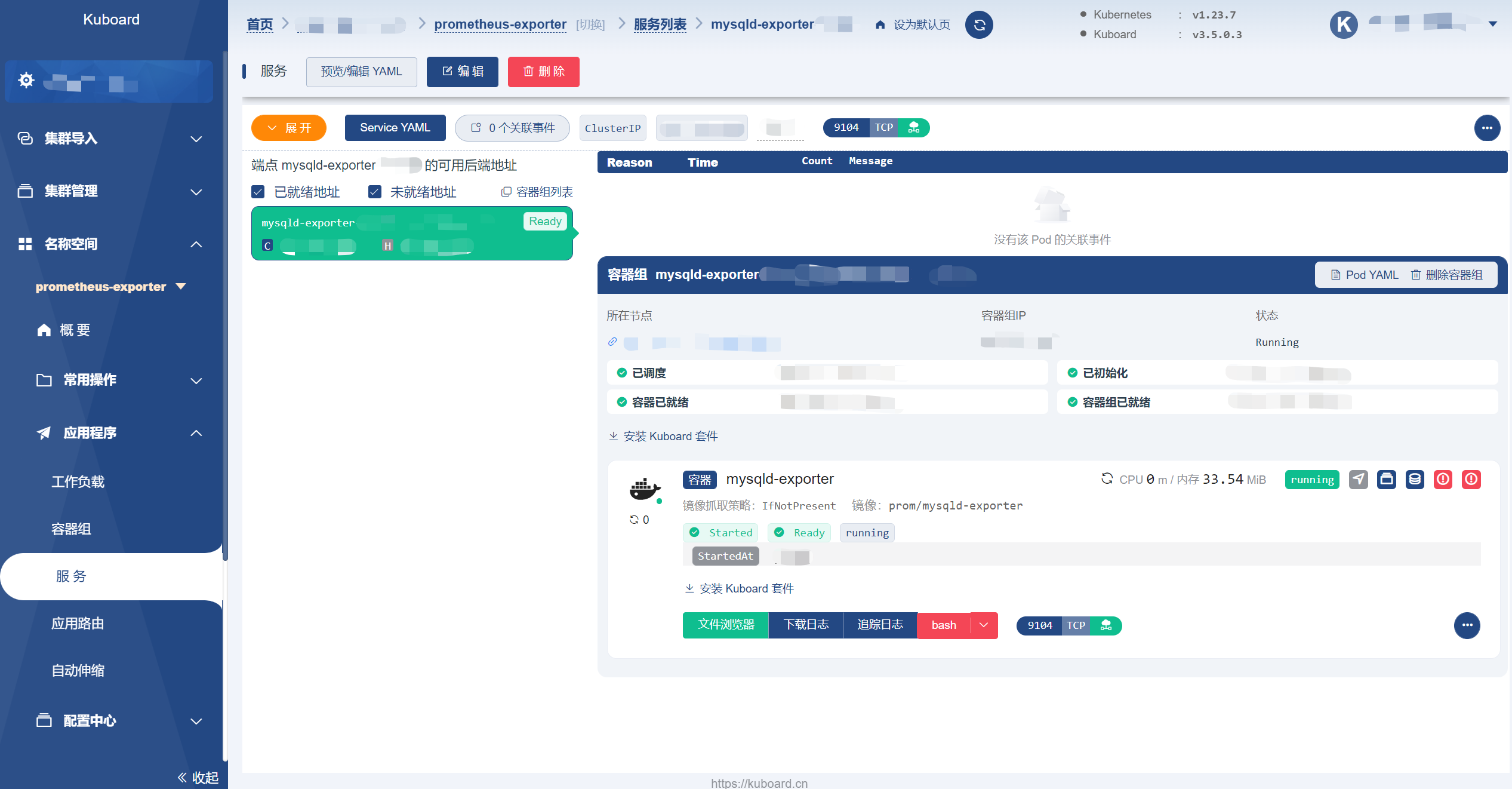

部署成功图如下:

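2.4 Create the ServiceMonitor

The ServiceMonitor tells Prometheus Operator to scrape the `metrics` port of the mysql-exporter Service. It is only picked up if the `serviceMonitorSelector` of the Prometheus custom resource matches its labels and the `serviceMonitorNamespaceSelector` includes the `prometheus-exporter` namespace, so adjust the labels below to your installation.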

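```yaml
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app: mysqld-exporter
    prometheus: k8s
  name: prometheus-mysqld-exporter
  namespace: prometheus-exporter
spec:
  endpoints:
    - interval: 1m
      port: metrics
      # Sent as ?target=10.26.124.16:3306. The /metrics endpoint of the pinned exporter does not
      # use it to choose the MySQL instance (DATA_SOURCE_NAME does), but Prometheus exposes it
      # as __param_target, which the relabeling below copies into the instance label
      params:
        target:
          - '10.26.124.16:3306'
      relabelings:
        - sourceLabels: [__param_target]
          targetLabel: instance
  namespaceSelector:
    matchNames:
      - prometheus-exporter
  selector:
    matchLabels:
      app: mysqld-exporter
```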

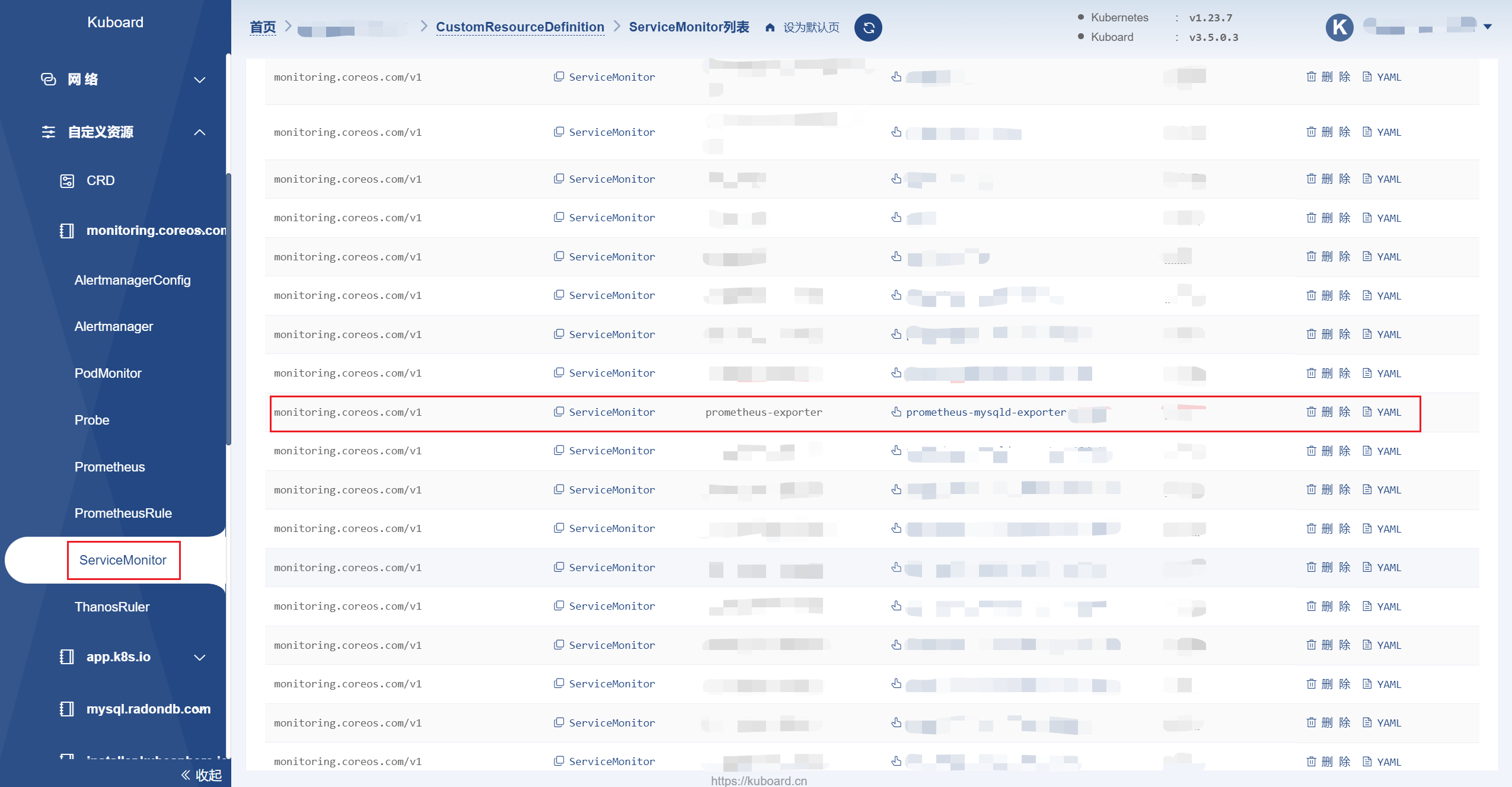

部署成功图如下:

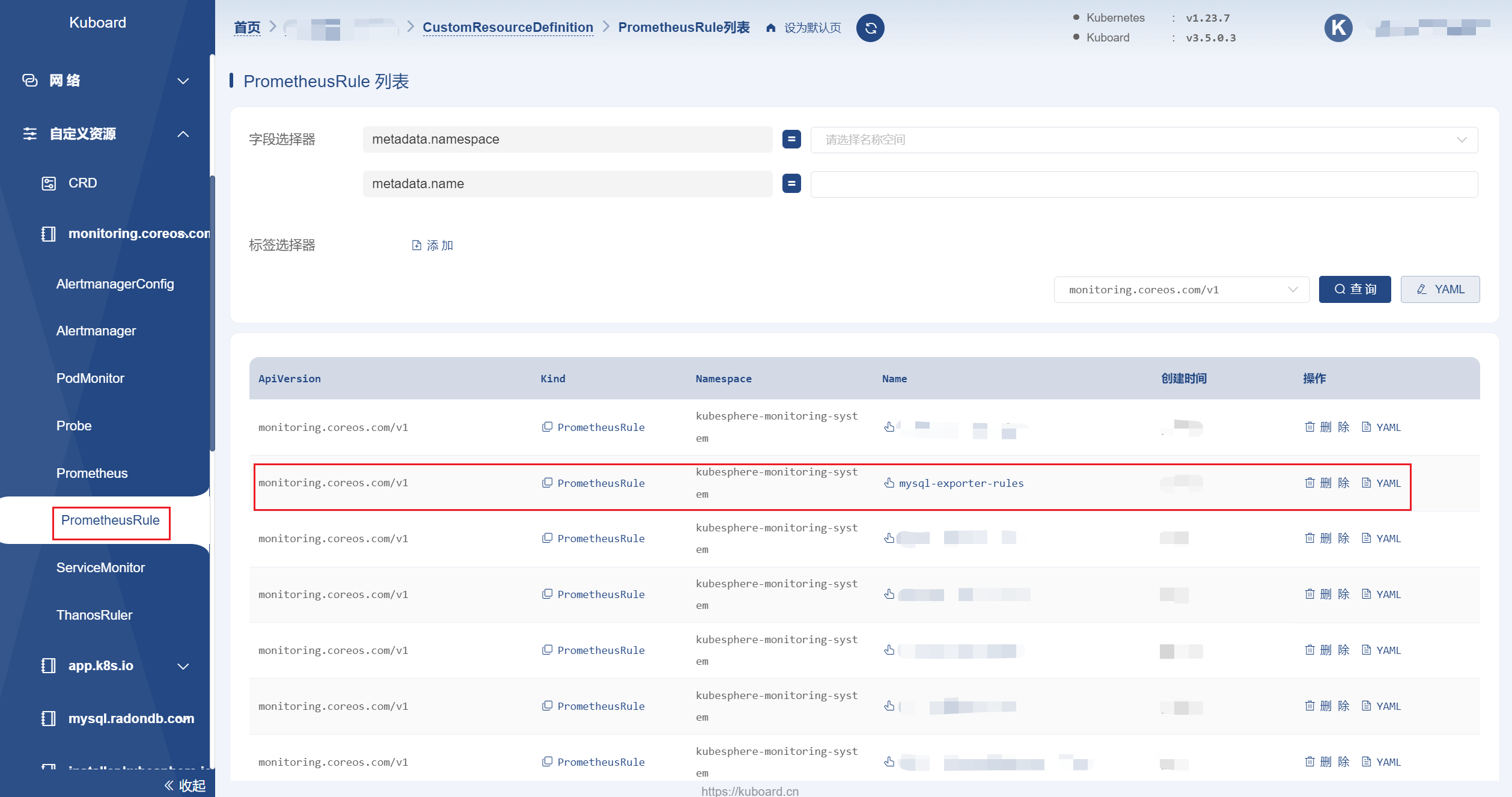
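The `target` parameter in this ServiceMonitor matches the multi-target mode introduced in mysqld_exporter v0.15.0: such releases serve `/probe?target=<host:port>` and read the credentials from the section of their my.cnf file named by `auth_module` (default `client`). If you run one of those releases instead of a DATA_SOURCE_NAME-based one, the endpoint could look roughly like the sketch below. It assumes the exporter was started with a my.cnf whose `[client]` section holds the exporter user and password; check the details against the README of the version you deploy.

```yaml
# Sketch for mysqld_exporter v0.15.0+ multi-target mode: replaces spec.endpoints
# of the ServiceMonitor (not a standalone manifest)
endpoints:
  - interval: 1m
    port: metrics
    path: /probe            # multi-target endpoint instead of the default /metrics
    params:
      target:
        - '10.26.124.16:3306'
      auth_module:
        - client            # my.cnf section holding the exporter's user and password (assumed)
    relabelings:
      - sourceLabels: [__param_target]
        targetLabel: instance
```

In this mode a single exporter can cover several external instances by adding one endpoint per `target`.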

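2.5 Add PrometheusRule alerting rules

The rules are only loaded if their labels (`prometheus: k8s`, `role: alert-rules`) match the `ruleSelector` of the Prometheus custom resource and their namespace (`kubesphere-monitoring-system` here, the monitoring namespace used by KubeSphere) is selected by its `ruleNamespaceSelector`.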

```yaml
---
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    prometheus: k8s
    role: alert-rules
  name: mysql-exporter-rules
  namespace: kubesphere-monitoring-system
spec:
  groups:
    - name: mysql-exporter
      rules:
        - alert: MysqlDown
          annotations:
            description: |-
              MySQL instance is down on {{ $labels.instance }}
              VALUE = {{ $value }}
              LABELS = {{ $labels }}
            summary: 'MySQL down (instance {{ $labels.instance }})'
          expr: mysql_up == 0
          for: 0m
          labels:
            severity: critical
        - alert: MysqlSlaveIoThreadNotRunning
          annotations:
            description: |-
              MySQL Slave IO thread not running on {{ $labels.instance }}
              VALUE = {{ $value }}
              LABELS = {{ $labels }}
            summary: 'MySQL Slave IO thread not running (instance {{ $labels.instance }})'
          expr: >-
            mysql_slave_status_master_server_id > 0 and on (instance)
            mysql_slave_status_slave_io_running == 0
          for: 0m
          labels:
            severity: critical
        - alert: MysqlSlaveSqlThreadNotRunning
          annotations:
            description: |-
              MySQL Slave SQL thread not running on {{ $labels.instance }}
              VALUE = {{ $value }}
              LABELS = {{ $labels }}
            summary: 'MySQL Slave SQL thread not running (instance {{ $labels.instance }})'
          expr: >-
            mysql_slave_status_master_server_id > 0 and on (instance)
            mysql_slave_status_slave_sql_running == 0
          for: 0m
          labels:
            severity: critical
```
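MysqlDown only fires while the exporter is scraped successfully and reports `mysql_up == 0`. If the exporter Pod itself is down or unreachable, the `mysql_*` series simply disappear and none of the rules above fires. A rule along the following lines could cover that gap. It is a sketch: the alert name is hypothetical, and it assumes the `job` label equals the Service name `mysqld-exporter`, which is Prometheus Operator's default when the ServiceMonitor sets no `jobLabel`. It would be appended to the `rules:` list of the `mysql-exporter` group.

```yaml
# Hypothetical extra rule for the rules: list of the mysql-exporter group
- alert: MysqldExporterDown
  annotations:
    description: |-
      No successful scrape of mysqld-exporter (job="mysqld-exporter"),
      so the MySQL alerts in this group cannot fire.
    summary: 'mysqld-exporter is down or not being scraped'
  # absent() returns a sample when no up series equals 1, i.e. the target
  # is failing (up == 0) or has vanished from service discovery
  expr: absent(up{job="mysqld-exporter"} == 1)
  for: 5m
  labels:
    severity: critical
```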

Screenshot of the successful deployment:

