Understanding Flink EventTime and Watermarks with a Demo (Project Source Included)

First, thanks to the blog post below. I have borrowed its figures, because I don't think there are better ones.

Blog link: https://blog.csdn.net/a6822342/article/details/78064815

English original: http://vishnuviswanath.com/flink_eventtime.html

Beginning

Scenario

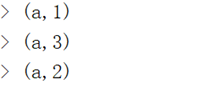

1. We create a sliding window of size 10 seconds that slides every 5 seconds.

2. Suppose that at 2020-04-28 17:00:00 our simplest streaming program has already been running stably and processing events for some time.

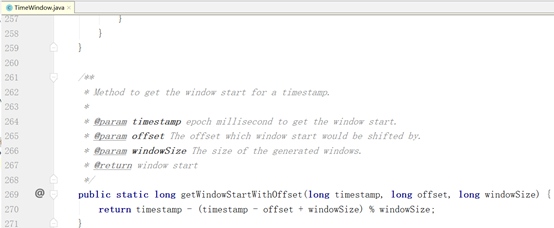

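3. Window start times are determined by Flink's window-start rule. Assume that, according to this rule, the sliding windows in this example cover the following time ranges (half-open intervals, closed on the left and open on the right):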

……

[17:00:00, 17:00:10)

[17:00:05, 17:00:15)

[17:00:10, 17:00:20)

[17:00:15, 17:00:25)

……
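The window-start rule from step 3 can be sketched in plain Scala. This is a minimal sketch assuming Flink 1.9 with the default zero window offset: `windowStart` mirrors the formula in Flink's `TimeWindow.getWindowStartWithOffset`, and `assignWindows` follows the loop in `SlidingEventTimeWindows.assignWindows`. The object name and the use of milliseconds relative to 17:00:00 are assumptions for readability; because 17:00:00 is a whole minute (a multiple of 5 seconds since the epoch), aligning relative to it yields the same windows as aligning to the epoch.

```scala
object SlidingWindowAssignment {
  // Start of the window of length `windowSize` that contains `timestamp`
  // (same formula as Flink's TimeWindow.getWindowStartWithOffset).
  def windowStart(timestamp: Long, offset: Long, windowSize: Long): Long =
    timestamp - (timestamp - offset + windowSize) % windowSize

  // All sliding windows [start, start + size) that contain `timestamp`:
  // begin at the latest slide-aligned start and step back by `slide`
  // while the window still covers the timestamp.
  def assignWindows(timestamp: Long, size: Long, slide: Long, offset: Long = 0L): List[(Long, Long)] = {
    val lastStart = windowStart(timestamp, offset, slide)
    Iterator.iterate(lastStart)(_ - slide)
      .takeWhile(start => start > timestamp - size)
      .map(start => (start, start + size))
      .toList
  }
}
```

For example, the event at 17:00:13 (13000 ms) lands in [17:00:10, 17:00:20) and [17:00:05, 17:00:15), while the event at 17:00:16 lands in [17:00:15, 17:00:25) and [17:00:10, 17:00:20).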

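4. At 17:00:13 the data source produces an event a, at 17:00:14 it produces another event a, and at 17:00:16 it produces a third event a. These three events are enough to illustrate all of the problems.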

Metric computation

Now we want to count event a in each of these sliding windows.

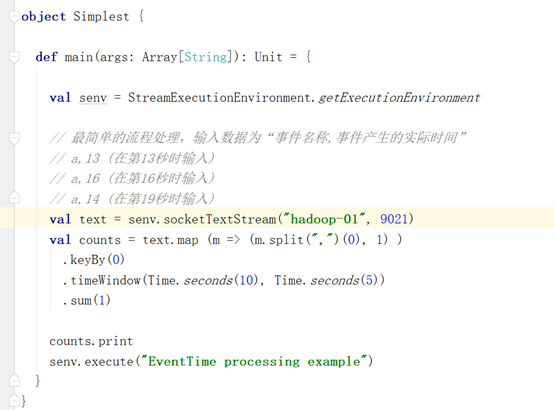

The simplest Flink stream processing

That is, the stream uses neither EventTime nor watermarks.

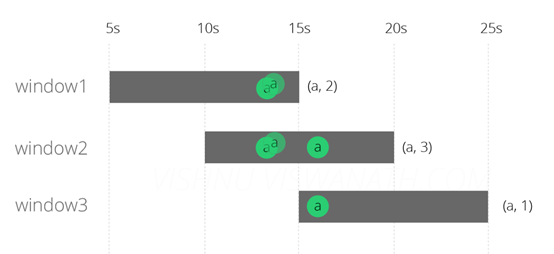

Ideal case

In the ideal case, the stream looks like this:

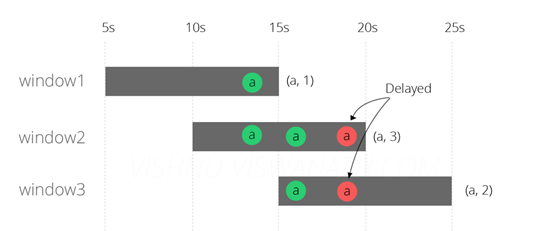

The real-world problem

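Now suppose the event a produced at 17:00:14 is held up by the network for 5 seconds, so it only reaches the streaming program at 17:00:19. Our simplest program cannot tell that this event arrived late: it places each event into windows by the time the event arrives, and computes the following results: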

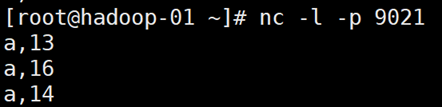

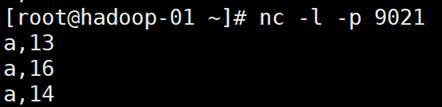

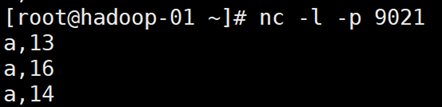
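To make the discrepancy concrete, the sketch below assigns the three events to the 10-second/5-second sliding windows twice: once by the time each event actually occurred (the correct answer) and once by the time it reached the program, which is effectively what a processing-time window does. The `Event` case class, its fields and the millisecond offsets relative to 17:00:00 are hypothetical modelling choices; the window rule is the zero-offset alignment formula used by Flink's sliding windows.

```scala
object ProcessingVsEventTime {
  val Size = 10000L // window size: 10 s
  val Slide = 5000L // slide: 5 s

  // Hypothetical record: when event "a" occurred vs. when it reached the job (ms after 17:00:00).
  case class Event(eventTime: Long, arrivalTime: Long)

  val events = List(
    Event(13000L, 13000L),
    Event(14000L, 19000L), // produced at 17:00:14, delivered 5 s late
    Event(16000L, 16000L)
  )

  // Starts of all sliding windows [start, start + Size) containing ts (zero offset).
  def windowStarts(ts: Long): List[Long] = {
    val lastStart = ts - (ts + Slide) % Slide
    Iterator.iterate(lastStart)(_ - Slide).takeWhile(_ > ts - Size).toList
  }

  // Count events per window start, placing each event by the chosen clock.
  def countBy(clock: Event => Long): Map[Long, Int] =
    events.flatMap(e => windowStarts(clock(e)))
      .groupBy(identity)
      .map { case (start, hits) => start -> hits.size }
}
```

By event time, the windows starting at 17:00:05, 17:00:10 and 17:00:15 hold 2, 3 and 1 events; by arrival time they hold 1, 3 and 2, so the delayed event is missing from the first window and wrongly counted in the third.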

Demo screenshots and source code

```scala
import org.apache.flink.api.scala._
import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
import org.apache.flink.streaming.api.windowing.time.Time

object Simplest {

  def main(args: Array[String]): Unit = {

    val senv = StreamExecutionEnvironment.getExecutionEnvironment
    // No time characteristic is set, so Flink 1.9 windows by processing time (its default).

    // Simplest stream processing. Input format: "event name,second at which the event actually occurred"
    // a,13  (typed in at second 13)
    // a,16  (typed in at second 16)
    // a,14  (typed in at second 19)
    val text = senv.socketTextStream("hadoop-01", 9021)
    val counts = text.map(m => (m.split(",")(0), 1))
      .keyBy(0)
      .timeWindow(Time.seconds(10), Time.seconds(5))
      .sum(1)

    counts.print
    senv.execute("EventTime processing example")
  }
}
```

See the end of the article for pom.xml.

Stream processing with EventTime

EventTime

Compared with the simplest stream above, the only thing we add is EventTime.

The original code changes in two places:

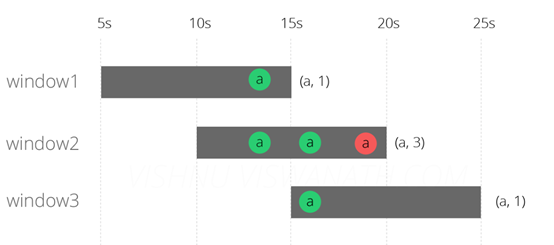

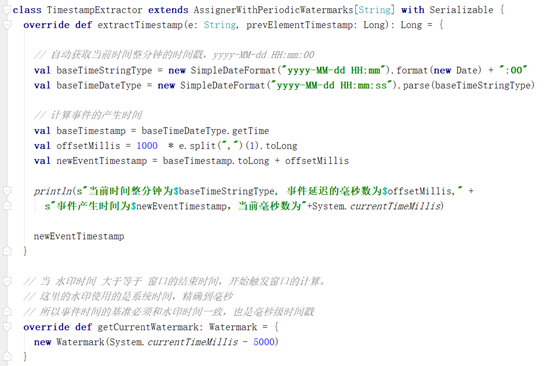

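1. We implement a timestamp extractor ourselves, which extracts each event's occurrence time from the event. Events have the format "a,<time at which the event occurred>"; in this demo that value is the second within the current minute, which the extractor adds to the start of the current minute. The extractor's extractTimestamp method returns this timestamp; the getCurrentWatermark method can be ignored for now.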

2. Switch the time characteristic to EventTime and register the timestamp extractor on the input stream.
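The timestamp arithmetic from step 1 can be pulled out into pure functions, which makes it easy to check without a running job. This is a hypothetical refactoring, not the author's code: `EventTimestampSketch`, `eventTimestamp` and `minuteBase` are illustrative names, and the base minute is passed in rather than read from the clock. `minuteBase` truncates with plain arithmetic on epoch milliseconds, which matches the `SimpleDateFormat` trick in the extractor only under the stated assumption that the local time zone's UTC offset is a whole number of minutes.

```scala
object EventTimestampSketch {
  // Demo input format: "<event name>,<second within the current minute>", e.g. "a,13".
  // Event time = start of the minute (epoch ms) + that many seconds.
  def eventTimestamp(line: String, baseMinuteMillis: Long): Long = {
    val seconds = line.split(",")(1).trim.toLong
    baseMinuteMillis + seconds * 1000L
  }

  // Start of the minute containing `nowMillis`, by plain arithmetic on epoch ms.
  // Assumes a time zone whose UTC offset is a whole number of minutes.
  def minuteBase(nowMillis: Long): Long = nowMillis - nowMillis % 60000L
}
```

Inside an extractor this would be called as `eventTimestamp(line, minuteBase(System.currentTimeMillis))`. With 1588064400000 (2020-04-28 17:00:00 in UTC+8) as the base, the line `a,13` maps to 1588064413000.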

Remaining problem

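With EventTime enabled, window 1 still counts 1, although the correct result is 2; windows 2 and 3 are now counted correctly. Window 1 ([17:00:05, 17:00:15)) is computed as soon as the watermark reaches its end, and at this stage the watermark simply follows the current time, so the window is computed at about 17:00:15, before the delayed event arrives at 17:00:19. That event is therefore dropped from window 1; but because it is now placed by its event time, it is no longer miscounted in window 3.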
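The difference between the runs comes down to when window 1 fires relative to when the late event arrives. The simulation below is a simplified model, not Flink code. It assumes the watermark at processing time t is exactly t minus a fixed delay (Flink actually emits periodic watermarks, every 200 ms by default), that allowed lateness is zero, and that an element is dropped for a window once the watermark has reached that window's max timestamp (end - 1 ms). `WatermarkSimulation`, `Event` and `counts` are hypothetical names; times are milliseconds relative to 17:00:00.

```scala
object WatermarkSimulation {
  val Size = 10000L // window size: 10 s
  val Slide = 5000L // slide: 5 s

  // Hypothetical record: when the event occurred vs. when it reached the job (ms after 17:00:00).
  case class Event(eventTime: Long, arrivalTime: Long)

  val events = List(
    Event(13000L, 13000L),
    Event(14000L, 19000L), // delayed by 5 s on the network
    Event(16000L, 16000L)
  )

  // Starts of all sliding windows [start, start + Size) containing ts (zero offset).
  def windowStarts(ts: Long): List[Long] = {
    val lastStart = ts - (ts + Slide) % Slide
    Iterator.iterate(lastStart)(_ - Slide).takeWhile(_ > ts - Size).toList
  }

  // Counts per window start. An element is accepted by a window only if the window's
  // max timestamp (end - 1) is still above the watermark when the element arrives.
  def counts(watermarkDelayMs: Long): Map[Long, Int] = {
    val accepted = for {
      e <- events
      watermark = e.arrivalTime - watermarkDelayMs // simplified: continuous watermark
      start <- windowStarts(e.eventTime)
      if start + Size - 1 > watermark
    } yield start
    accepted.groupBy(identity).map { case (start, hits) => start -> hits.size }
  }
}
```

`counts(0L)` models the EventTime-only run: the event stamped 17:00:14 arrives at 17:00:19, after window 1 has fired, so window 1 counts only 1 while windows 2 and 3 count 3 and 1. `counts(5000L)` models a 5-second watermark delay, under which the same event is still accepted and window 1 reaches 2.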

As shown in the figure:

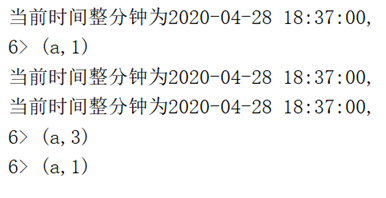

Demo screenshots and source code

```scala
import org.apache.flink.api.scala._
import org.apache.flink.streaming.api.TimeCharacteristic
import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
import org.apache.flink.streaming.api.windowing.time.Time

object SimplestWithEventTime {

  def main(args: Array[String]): Unit = {

    val senv = StreamExecutionEnvironment.getExecutionEnvironment

    // Stream with EventTime enabled.
    // EventTime handles late messages: a late message that belongs to an earlier window
    // is no longer counted in later windows.
    senv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)
    val input = senv.socketTextStream("hadoop-01", 9021)

    val text = input.assignTimestampsAndWatermarks(new TimestampExtractor)

    val counts = text.map(m => (m.split(",")(0), 1))
      .keyBy(0)
      .timeWindow(Time.seconds(10), Time.seconds(5))
      .sum(1)

    counts.print
    senv.execute("EventTime processing example")
  }
}
```

```scala
import java.text.SimpleDateFormat
import java.util.Date

import org.apache.flink.streaming.api.functions.AssignerWithPeriodicWatermarks
import org.apache.flink.streaming.api.watermark.Watermark

class TimestampExtractor extends AssignerWithPeriodicWatermarks[String] with Serializable {
  override def extractTimestamp(e: String, prevElementTimestamp: Long): Long = {

    // Timestamp of the current whole minute, yyyy-MM-dd HH:mm:00
    val baseTimeStringType = new SimpleDateFormat("yyyy-MM-dd HH:mm").format(new Date) + ":00"
    val baseTimeDateType = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss").parse(baseTimeStringType)

    // Event time = whole minute + the second given in the input ("a,13" -> HH:mm:13)
    val baseTimestamp = baseTimeDateType.getTime
    val offsetMillis = 1000 * e.split(",")(1).toLong
    val newEventTimestamp = baseTimestamp + offsetMillis

    println(s"Current whole minute: $baseTimeStringType, event offset within the minute (ms): $offsetMillis, " +
      s"event time: $newEventTimestamp, current time (ms): " + System.currentTimeMillis)

    newEventTimestamp
  }

  // A window is computed once the watermark reaches the window's end time
  // (strictly, its max timestamp, end - 1 ms).
  // The watermark here comes from the system clock in milliseconds,
  // so event timestamps must also be millisecond epoch timestamps on the same basis.
  // EventTime-only stage: watermark = current time, with no allowance for late events.
  // The watermark stage changes this to System.currentTimeMillis - 5000.
  override def getCurrentWatermark: Watermark = {
    new Watermark(System.currentTimeMillis)
  }
}
```

Stream processing with EventTime and watermarks

Watermarks

Compared with the EventTime-only stream above, we now also add a watermark.

Only one change to the code above is needed:

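1. In the timestamp extractor, change getCurrentWatermark so that the watermark is the current time minus 5 seconds. Each window is then computed about 5 seconds later than before, which gives late events roughly 5 extra seconds to arrive.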
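A quick check of the timing with the 5-second delay, written as a small Scala sketch rather than Flink code. It assumes, as described in step 1, that the watermark equals the current wall-clock time minus 5000 ms, and that Flink's event-time trigger fires a window once the watermark reaches the window's max timestamp (end - 1 ms). Periodic watermarks are emitted every 200 ms by default, so the real firing can happen up to one interval later. `FiringTimeSketch` and its methods are hypothetical names; times are milliseconds relative to 17:00:00.

```scala
object FiringTimeSketch {
  val WatermarkDelayMs = 5000L // watermark = current time - 5 s

  // The window [start, end) fires once watermark >= end - 1, i.e. once
  // now - WatermarkDelayMs >= end - 1, so no earlier than this processing time.
  def earliestFiringTime(windowEndMs: Long): Long = windowEndMs - 1 + WatermarkDelayMs

  // An element can still be added to the window only if it arrives before the window fires.
  def arrivesInTime(arrivalMs: Long, windowEndMs: Long): Boolean =
    arrivalMs < earliestFiringTime(windowEndMs)
}
```

For window 1, [17:00:05, 17:00:15), the earliest firing time is 17:00:19.999, so the event produced at 17:00:14 and delivered at 17:00:19 still makes it in, while an event reaching the job at 17:00:20 or later would be dropped from that window. The delay is therefore a trade-off between completeness and how long each result is held back.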

Results

Demo screenshots and source code

End

pom.xml

```xml
<!--
Licensed to the Apache Software Foundation (ASF) under one
or more contributor license agreements. See the NOTICE file
distributed with this work for additional information
regarding copyright ownership. The ASF licenses this file
to you under the Apache License, Version 2.0 (the
"License"); you may not use this file except in compliance
with the License. You may obtain a copy of the License at

  http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing,
software distributed under the License is distributed on an
"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
KIND, either express or implied. See the License for the
specific language governing permissions and limitations
under the License.
-->
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>my-flink-project</groupId>
  <artifactId>my-flink-project</artifactId>
  <version>0.1</version>
  <packaging>jar</packaging>

  <name>Flink Quickstart Job</name>
  <url>http://www.myorganization.org</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <flink.version>1.9.1</flink.version>
    <java.version>1.8</java.version>
    <scala.binary.version>2.11</scala.binary.version>
    <maven.compiler.source>${java.version}</maven.compiler.source>
    <maven.compiler.target>${java.version}</maven.compiler.target>
  </properties>

  <repositories>
    <repository>
      <id>apache.snapshots</id>
      <name>Apache Development Snapshot Repository</name>
      <url>https://repository.apache.org/content/repositories/snapshots/</url>
      <releases>
        <enabled>false</enabled>
      </releases>
      <snapshots>
        <enabled>true</enabled>
      </snapshots>
    </repository>
  </repositories>

  <dependencies>
    <!-- Apache Flink dependencies -->
    <!-- These dependencies are provided, because they should not be packaged into the JAR file. -->
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-java</artifactId>
      <version>${flink.version}</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-streaming-scala_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-scala_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
    </dependency>

    <!-- Add connector dependencies here. They must be in the default scope (compile). -->

    <!-- Example:

    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-connector-kafka-0.10_${scala.binary.version}</artifactId>
      <version>${flink.version}</version>
    </dependency>
    -->

    <!-- Add logging framework, to produce console output when running in the IDE. -->
    <!-- These dependencies are excluded from the application JAR by default. -->
    <dependency>
      <groupId>org.slf4j</groupId>
      <artifactId>slf4j-log4j12</artifactId>
      <version>1.7.7</version>
      <scope>runtime</scope>
    </dependency>
    <dependency>
      <groupId>log4j</groupId>
      <artifactId>log4j</artifactId>
      <version>1.2.17</version>
      <scope>runtime</scope>
    </dependency>
  </dependencies>

  <build>
    <plugins>

      <!-- Java Compiler -->
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-compiler-plugin</artifactId>
        <version>3.1</version>
        <configuration>
          <source>${java.version}</source>
          <target>${java.version}</target>
        </configuration>
      </plugin>

      <!-- We use the maven-shade plugin to create a fat jar that contains all necessary dependencies. -->
      <!-- Change the value of <mainClass>...</mainClass> if your program entry point changes. -->
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-shade-plugin</artifactId>
        <version>3.0.0</version>
        <executions>
          <!-- Run shade goal on package phase -->
          <execution>
            <phase>package</phase>
            <goals>
              <goal>shade</goal>
            </goals>
            <configuration>
              <artifactSet>
                <excludes>
                  <exclude>org.apache.flink:force-shading</exclude>
                  <exclude>com.google.code.findbugs:jsr305</exclude>
                  <exclude>org.slf4j:*</exclude>
                  <exclude>log4j:*</exclude>
                </excludes>
              </artifactSet>
              <filters>
                <filter>
                  <!-- Do not copy the signatures in the META-INF folder.
                  Otherwise, this might cause SecurityExceptions when using the JAR. -->
                  <artifact>*:*</artifact>
                  <excludes>
                    <exclude>META-INF/*.SF</exclude>
                    <exclude>META-INF/*.DSA</exclude>
                    <exclude>META-INF/*.RSA</exclude>
                  </excludes>
                </filter>
              </filters>
              <transformers>
                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                  <mainClass>myflink.StreamingJob</mainClass>
                </transformer>
              </transformers>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>

    <pluginManagement>
      <plugins>

        <!-- This improves the out-of-the-box experience in Eclipse by resolving some warnings. -->
        <plugin>
          <groupId>org.eclipse.m2e</groupId>
          <artifactId>lifecycle-mapping</artifactId>
          <version>1.0.0</version>
          <configuration>
            <lifecycleMappingMetadata>
              <pluginExecutions>
                <pluginExecution>
                  <pluginExecutionFilter>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-shade-plugin</artifactId>
                    <versionRange>[3.0.0,)</versionRange>
                    <goals>
                      <goal>shade</goal>
                    </goals>
                  </pluginExecutionFilter>
                  <action>
                    <ignore/>
                  </action>
                </pluginExecution>
                <pluginExecution>
                  <pluginExecutionFilter>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <versionRange>[3.1,)</versionRange>
                    <goals>
                      <goal>testCompile</goal>
                      <goal>compile</goal>
                    </goals>
                  </pluginExecutionFilter>
                  <action>
                    <ignore/>
                  </action>
                </pluginExecution>
              </pluginExecutions>
            </lifecycleMappingMetadata>
          </configuration>
        </plugin>
      </plugins>
    </pluginManagement>
  </build>

  <!-- This profile helps to make things run out of the box in IntelliJ -->
  <!-- Its adds Flink's core classes to the runtime class path. -->
  <!-- Otherwise they are missing in IntelliJ, because the dependency is 'provided' -->
  <profiles>
    <profile>
      <id>add-dependencies-for-IDEA</id>

      <activation>
        <property>
          <name>idea.version</name>
        </property>
      </activation>

      <dependencies>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-java</artifactId>
          <version>${flink.version}</version>
          <scope>compile</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
          <version>${flink.version}</version>
          <scope>compile</scope>
        </dependency>
      </dependencies>
    </profile>
  </profiles>

</project>
```
