metrics-server and HPA (Pod autoscaling)

1. metrics-server

metrics-server is the aggregator of core monitoring data in a Kubernetes cluster. It collects resource metrics from the kubelet Summary API on each node.

Getting metrics-server:

Download the YAML file from https://github.com/kubernetes-sigs/metrics-server/releases:

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.5.2/components.yaml

The image referenced in the file is k8s.gcr.io/metrics-server/metrics-server:v0.5.2.

Change it to registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.5.2. Alternatively, pull the image from Aliyun, tag it, push it to a local Harbor registry, and use that registry as the image source:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.5.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.5.2 harbor.myland.com/baseimages/metrics-server:v0.5.2

docker push harbor.myland.com/baseimages/metrics-server:v0.5.2

components.yaml after the image change:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
        rbac.authorization.k8s.io/aggregate-to-admin: "true"
        rbac.authorization.k8s.io/aggregate-to-edit: "true"
        rbac.authorization.k8s.io/aggregate-to-view: "true"
      name: system:aggregated-metrics-reader
    rules:
    - apiGroups:
      - metrics.k8s.io
      resources:
      - pods
      - nodes
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      - configmaps
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server-auth-reader
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server:system:auth-delegator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      ports:
      - name: https
        port: 443
        protocol: TCP
        targetPort: https
      selector:
        k8s-app: metrics-server
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
      strategy:
        rollingUpdate:
          maxUnavailable: 0
      template:
        metadata:
          labels:
            k8s-app: metrics-server
        spec:
          containers:
          - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            - --kubelet-use-node-status-port
            - --metric-resolution=15s
            image: harbor.myland.com/baseimages/metrics-server:v0.5.2
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 3
              httpGet:
                path: /livez
                port: https
                scheme: HTTPS
              periodSeconds: 10
            name: metrics-server
            ports:
            - containerPort: 4443
              name: https
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /readyz
                port: https
                scheme: HTTPS
              initialDelaySeconds: 20
              periodSeconds: 10
            resources:
              requests:
                cpu: 100m
                memory: 200Mi
            securityContext:
              readOnlyRootFilesystem: true
              runAsNonRoot: true
              runAsUser: 1000
            volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
          nodeSelector:
            kubernetes.io/os: linux
          priorityClassName: system-cluster-critical
          serviceAccountName: metrics-server
          volumes:
          - emptyDir: {}
            name: tmp-dir
    ---
    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      labels:
        k8s-app: metrics-server
      name: v1beta1.metrics.k8s.io
    spec:
      group: metrics.k8s.io
      groupPriorityMinimum: 100
      insecureSkipTLSVerify: true
      service:
        name: metrics-server
        namespace: kube-system
      version: v1beta1
      versionPriority: 100

kubectl apply -f components.yaml

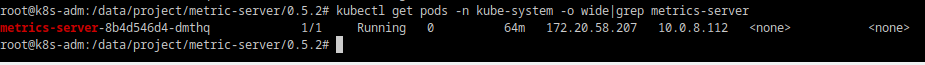

kubectl get pods -n kube-system -o wide|grep metrics-server

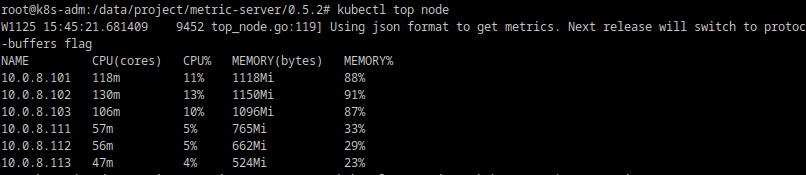
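If the metrics-server Pod does not become Ready and its logs report x509 certificate errors while scraping kubelets, a common cause is that the kubelet serving certificates are not signed by the cluster CA. A frequently used workaround, suitable for test clusters only because it disables verification of the kubelet certificates, is to add the real metrics-server flag --kubelet-insecure-tls to the container args. The fragment below is a sketch of the changed args list, assuming the rest of the Deployment stays as shown above:

```yaml
# Fragment of the metrics-server Deployment: spec.template.spec.containers[0].args
# --kubelet-insecure-tls skips verification of kubelet serving certificates;
# use it only in test environments.
- args:
  - --cert-dir=/tmp
  - --secure-port=4443
  - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
  - --kubelet-use-node-status-port
  - --metric-resolution=15s
  - --kubelet-insecure-tls
```

In production, the cleaner fix is to issue kubelet serving certificates signed by the cluster CA and leave verification enabled.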

kubectl top node shows node resource usage:

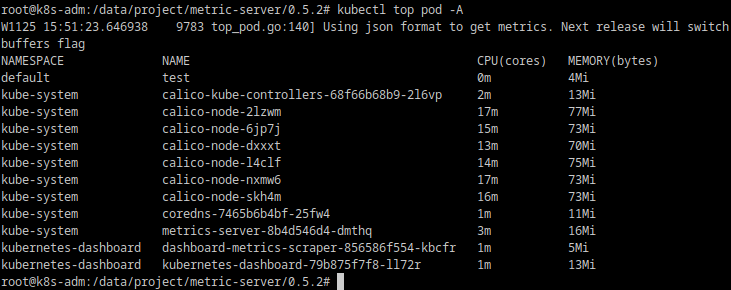

kubectl top pod -A shows Pod resource usage across all namespaces.

2. HPA

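HorizontalPodAutoscaler (HPA) is implemented as both a Kubernetes API resource and a controller. Based on the collected metrics, the controller periodically adjusts the replica count of the target object, such as a ReplicaSet or Deployment, so that the observed metric values match the target specified by the user.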
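The core scaling rule, as described in the Kubernetes HPA documentation, can be written as below. This is a simplified form: it ignores the controller's tolerance (by default no change is made while the ratio stays within 10% of 1.0) and the final clamping to minReplicas and maxReplicas.

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
$$

As a worked example with an assumed (hypothetical) average CPU utilization of 15% against a 60% target and 3 current replicas:

$$
\left\lceil 3 \times \frac{15}{60} \right\rceil = \lceil 0.75 \rceil = 1
$$

This is the kind of 3-to-1 reduction observed in the HPAv1 experiment below.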

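Edit /etc/systemd/system/kube-controller-manager.service and add the following flags to the kube-controller-manager command in ExecStart:

    --horizontal-pod-autoscaler-use-rest-clients=true \
    --horizontal-pod-autoscaler-sync-period=10s \

--horizontal-pod-autoscaler-use-rest-clients=true makes the HPA controller read metrics through REST clients (the aggregated metrics API) instead of the legacy metrics client. --horizontal-pod-autoscaler-sync-period=10s sets the interval at which the HPA controller re-evaluates Pod replica counts (the default is 15s). Do not put comments after the flags in the unit file: systemd only treats whole lines starting with # as comments, and the line-continuation \ must be the last character on the line (drop it after the final flag).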

Reload systemd so it picks up the changed unit file, then restart kube-controller-manager:

systemctl daemon-reload

systemctl restart kube-controller-manager

2.1 HPAv1

HPAv1 objects belong to the autoscaling/v1 API group and only support scaling on CPU utilization, using metric data obtained from metrics-server.

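For example, the following Deployment, which is the HPA's target resource, runs 3 replicas. Its container template (deployment.spec.template.spec.containers[].resources) must declare resource requests: CPU utilization is computed relative to resources.requests.cpu, so without a request the HPA cannot compute utilization and will not take effect. The example also sets resources.limits, which is good practice but is not the value the HPA measures against.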

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: deployment-demo
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: de-demoapp
          release: stable
      template:
        metadata:
          labels:
            app: de-demoapp
            release: stable
        spec:
          containers:
          - name: de-demoapp
            image: harbor.myland.com/baseimages/demoapp:v1.1
            resources:
              limits:
                cpu: 100m
                memory: 100Mi
              requests:
                cpu: 50m
                memory: 50Mi
            ports:
            - containerPort: 80
              name: http
            livenessProbe:
              httpGet:
                path: "/livez"
                port: 80
              initialDelaySeconds: 5
            readinessProbe:
              httpGet:
                path: '/livez'
                port: 80
              initialDelaySeconds: 15
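Because utilization is measured against the request, a CPU target expressed as a percentage maps to an absolute average usage per Pod. With the 50m CPU request in this manifest and the 60% target used with kubectl autoscale below:

$$
\text{utilization} = \frac{\text{average CPU usage per Pod}}{\text{requests.cpu}}, \qquad 60\% \times 50\,\text{m} = 30\,\text{m}
$$

So the HPA aims for roughly 30m (millicores) of average CPU usage per Pod, and with the default 10% tolerance it only scales out once usage clearly exceeds that, around 33m. The 100m limit plays no part in this calculation.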

An HPA can be created with the kubectl autoscale command, for example:

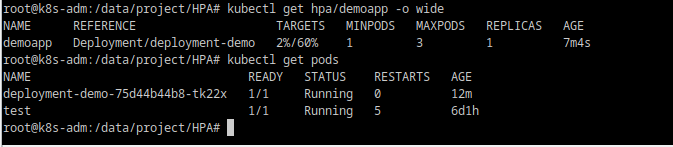

kubectl autoscale deployment deployment-demo --min=1 --max=3 --cpu-percent=60

Alternatively, create the HPA from a manifest file:

hpav1.yaml
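The contents of hpav1.yaml are not reproduced here. The following is a sketch of an autoscaling/v1 manifest equivalent to the kubectl autoscale command above, assuming it targets the deployment-demo Deployment and reusing the HPA name demoapp from the HPAv2 example:

```yaml
# hpav1.yaml (sketch): CPU-only autoscaling through autoscaling/v1
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: demoapp
spec:
  scaleTargetRef:              # the workload whose replica count the HPA adjusts
    apiVersion: apps/v1
    kind: Deployment
    name: deployment-demo
  minReplicas: 1
  maxReplicas: 3
  targetCPUUtilizationPercentage: 60   # average CPU utilization, relative to requests.cpu
```

Apply it with kubectl apply -f hpav1.yaml and check its status with kubectl get hpa.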

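Because metrics fluctuate over time, the HPA controller can make the replica count change back and forth frequently. Starting with Kubernetes 1.6, the delay before replica-count changes can be set through kube-controller-manager options (--horizontal-pod-autoscaler-downscale-delay and --horizontal-pod-autoscaler-upscale-delay). By default the scale-down delay is 5 minutes and the scale-up delay is 3 minutes. In newer releases these options are deprecated in favor of --horizontal-pod-autoscaler-downscale-stabilization, which also defaults to 5 minutes.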
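On Kubernetes 1.18 and later, the autoscaling/v2beta2 and autoscaling/v2 APIs also let each HPA set its own delays through the behavior field, instead of relying only on cluster-wide controller flags. The fragment below is a sketch, assuming it is added to a v2beta2 or v2 HPA such as the one in section 2.2. It keeps a 5-minute scale-down window and scales up without waiting:

```yaml
# Fragment of an autoscaling/v2beta2 (or autoscaling/v2) HPA spec; requires Kubernetes 1.18+.
spec:
  behavior:
    scaleDown:
      # Before scaling down, use the highest replica recommendation
      # computed over the last 300 s, which damps flapping.
      stabilizationWindowSeconds: 300
    scaleUp:
      # 0 s: scale up as soon as the metrics call for it (the default for scale-up).
      stabilizationWindowSeconds: 0
```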

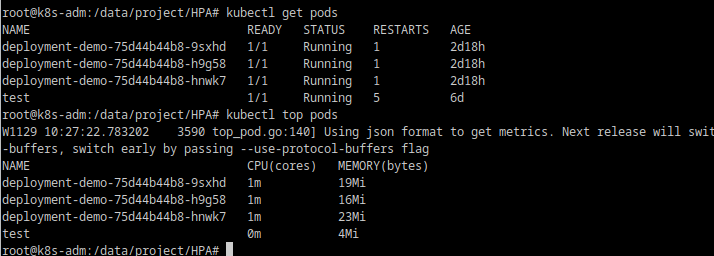

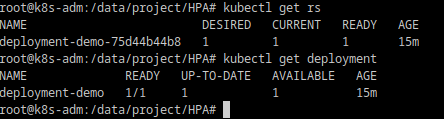

As shown, the HPA reduced the Deployment from the 3 replicas defined in its manifest to 1, based on the metric values.

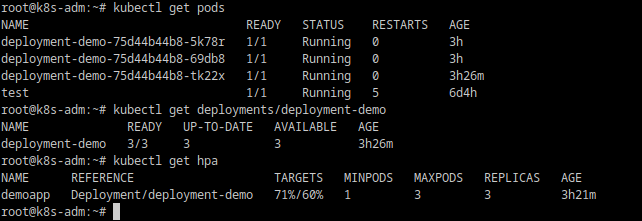

Inside the container, use the stress-ng stress-testing tool to raise the CPU load:

stress-ng -c 1 --cpu-method all

The HPA automatically scales the Pods out to the maximum of 3 replicas:

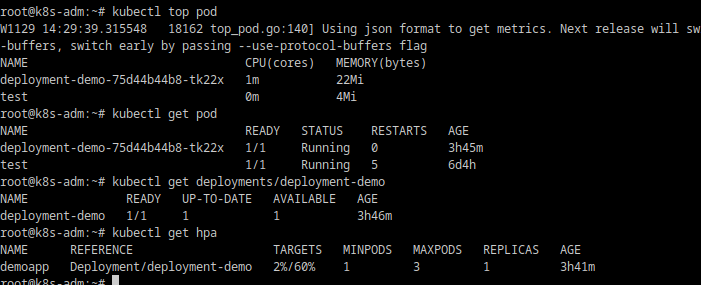

After the stress process is stopped, the replica count automatically drops back to the minimum of 1 after about 5 minutes.

2.2 HPAv2

HPAv1 can only autoscale on CPU, whereas HPAv2 can autoscale on CPU, memory, and arbitrary custom metrics.
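To illustrate the custom-metrics case, the metrics entry below scales on a per-Pod value. It is a sketch only. It assumes a custom metrics adapter (for example Prometheus Adapter) is installed and serves the custom.metrics.k8s.io API, which metrics-server alone does not provide. The metric name http_requests_per_second is hypothetical.

```yaml
# Fragment of an autoscaling/v2beta2 HPA spec.metrics list.
# Requires a custom metrics adapter; http_requests_per_second is a hypothetical metric name.
metrics:
- type: Pods
  pods:
    metric:
      name: http_requests_per_second
    target:
      type: AverageValue
      averageValue: "10"    # target an average of 10 requests/s per Pod
```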

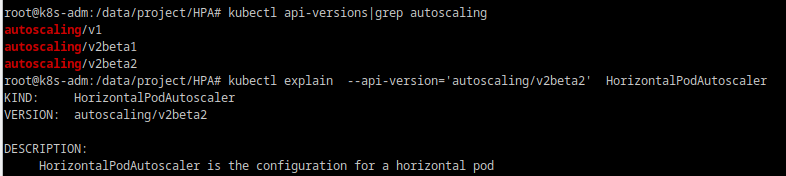

Check the available autoscaling API versions and the field documentation:

kubectl api-versions|grep autoscaling

kubectl explain --api-version='autoscaling/v2beta2' HorizontalPodAutoscaler

    apiVersion: autoscaling/v2beta2
    kind: HorizontalPodAutoscaler
    metadata:
      name: demoapp
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: deployment-demo
      minReplicas: 1
      maxReplicas: 3
      metrics:
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 70
      - type: Resource
        resource:
          name: memory
          target:
            type: AverageValue
            averageValue: 30Mi
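autoscaling/v2 became stable in Kubernetes 1.23, and autoscaling/v2beta2 was removed in 1.26. On newer clusters, the manifest above therefore needs the stable API version. The spec fields used here are the same in both versions, so only the header changes:

```yaml
# Header for Kubernetes 1.23+; the spec from the v2beta2 example stays the same.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: demoapp
```

The kubectl api-versions | grep autoscaling command shown above tells you which versions a given cluster serves.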

About 5 minutes later, the replica count is automatically reduced to 1.