Kubernetes 1.16 Deployment, Part 1: etcd + K8s + Calico

I. Environment

| OS | Hostname | Role | IP | Notes |
| --- | --- | --- | --- | --- |
| CentOS 7.7 x86_64 | k8s-master | master/etcd/registry | 192.168.168.2 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, docker, calico |
| CentOS 7.7 x86_64 | work-node01 | node01/etcd | 192.168.168.3 | kube-proxy, kubelet, etcd, docker, calico |
| CentOS 7.7 x86_64 | work-node02 | node02/etcd | 192.168.168.4 | kube-proxy, kubelet, etcd, docker, calico |

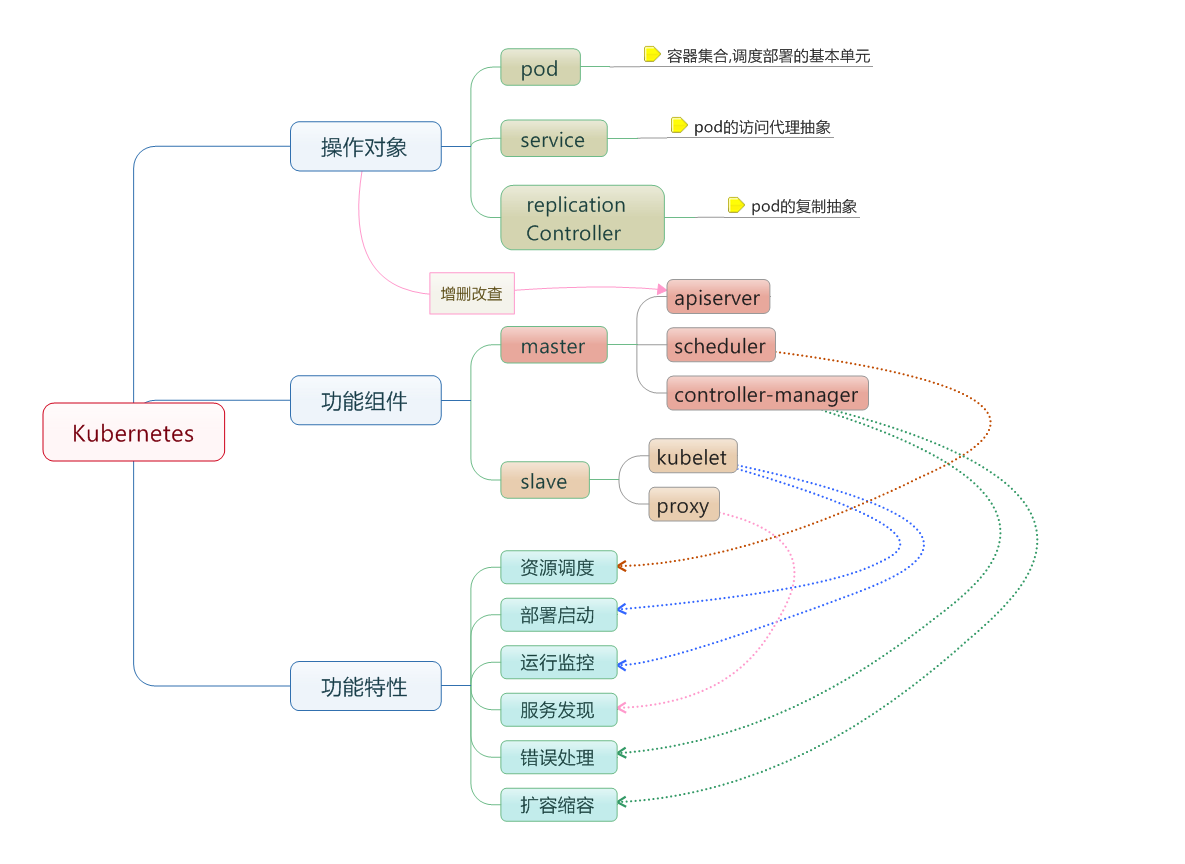

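What each cluster module does:

Master node:

The master node consists mainly of four modules: APIServer, Scheduler, Controller Manager, and etcd.

APIServer: The APIServer exposes the Kubernetes API as a RESTful service and is the single entry point for management commands. Every create, read, update, or delete of a resource goes through the APIServer, which then persists it to etcd. As the figure shows, kubectl (the client tool shipped with Kubernetes, which wraps calls to the Kubernetes API) talks directly to the APIServer.

Scheduler: The Scheduler assigns Pods to suitable Nodes. Treated as a black box, its input is a Pod plus a list of candidate Nodes, and its output is a binding of the Pod to one Node. Kubernetes ships with built-in scheduling algorithms and also keeps an interface open so that users can define their own.

Controller Manager: If the APIServer does the front-office work, the Controller Manager handles the back office. Every resource has a corresponding controller, and the Controller Manager is responsible for running these controllers. For example, when a Pod is created through the APIServer, the APIServer's job is done once the Pod object has been created; from then on the controllers keep the actual state in line with the desired state.

etcd: etcd is a highly available key-value store. Kubernetes uses it to persist the state of every resource, which is what backs the RESTful API.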

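Node:

Each node mainly runs two Kubernetes modules, kubelet and kube-proxy, alongside the container runtime (Docker in this deployment).

kube-proxy: This module implements service discovery and reverse proxying in Kubernetes. It forwards TCP and UDP connections and, by default, uses a round-robin algorithm to distribute client traffic across the group of backend Pods behind a Service. For service discovery, kube-proxy watches the APIServer (whose data is stored in etcd) for changes to Service and Endpoint objects and maintains a Service-to-Endpoint mapping, so that changes in backend Pod IPs do not affect clients. kube-proxy also supports session affinity.

kubelet: The kubelet is the master's agent on each node and the most important module on the node. It maintains and manages all containers on that node, except containers that were not created through Kubernetes, which it ignores. In essence, it keeps the running state of each Pod consistent with the desired state.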

II. Preparation on all three hosts

1) Update packages and the kernel

yum -y update

2) Stop and disable the firewall

systemctl stop firewalld.service && systemctl disable firewalld.service

3) Disable SELinux

Edit /etc/selinux/config and change SELINUX=enforcing to SELINUX=disabled (the change takes effect after a reboot):

vi /etc/selinux/config
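The manual edit above can also be scripted. The following is a minimal sketch, assuming GNU sed as shipped with CentOS 7; the function name `set_selinux_disabled` and its optional file argument are illustrative and not part of the original procedure.

```shell
# Sketch: switch SELINUX=enforcing to SELINUX=disabled in a config file.
# The function name and the optional path argument are hypothetical helpers.
set_selinux_disabled() {
    conf="${1:-/etc/selinux/config}"
    # Only the exact line SELINUX=enforcing is rewritten; other lines are untouched.
    sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' "$conf"
}

# Usage (as root):
#   set_selinux_disabled     # persistent change, applied at the next boot
#   setenforce 0             # switch the running system to permissive mode now
```

Passing a path as the first argument makes it possible to try the substitution on a copy of the file before touching the real one.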

4) Install common tools

yum -y install net-tools ntpdate conntrack-tools

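5) Disable swap

Turn swap off for the running system and comment out the swap entry in /etc/fstab so that it stays off after a reboot:

sudo swapoff -a && sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab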

6) Tune kernel parameters

The net.bridge.* keys only exist once the br_netfilter module is loaded, so load it before applying the settings.

    # cat > /etc/sysctl.d/kubernetes.conf <<EOF
    net.ipv4.ip_forward = 1
    net.ipv4.tcp_tw_recycle=0
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv6.conf.all.disable_ipv6 = 0
    net.ipv6.conf.default.forwarding = 1
    net.ipv6.conf.all.forwarding = 1
    vm.swappiness=0
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    EOF
    # modprobe br_netfilter
    # sysctl -p /etc/sysctl.d/kubernetes.conf

7) Synchronize the clocks of all servers over NTP before starting the configuration

III. Set the hostnames of the three hosts

1) k8s-master

hostnamectl --static set-hostname k8s-master

2) work-node01

hostnamectl --static set-hostname work-node01

3) work-node02

hostnamectl --static set-hostname work-node02

IV. Create the CA certificates

1. Create the directories for generating and storing certificates (on all three hosts)

    mkdir /root/ssl
    mkdir -p /opt/kubernetes/{conf,scripts,bin,ssl,yaml}

2. Set environment variables (on all three hosts)

    # vi /etc/profile.d/kubernetes.sh
    K8S_HOME=/opt/kubernetes
    export PATH=$K8S_HOME/bin:$PATH

    # vi /etc/profile.d/etcd.sh
    export ETCDCTL_API=3

    # source /etc/profile.d/kubernetes.sh
    # source /etc/profile.d/etcd.sh

3. Install CFSSL and copy it to node01 and node02

    cd /root/ssl
    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    chmod +x cfssl*
    mv cfssl-certinfo_linux-amd64 /opt/kubernetes/bin/cfssl-certinfo
    mv cfssljson_linux-amd64 /opt/kubernetes/bin/cfssljson
    mv cfssl_linux-amd64 /opt/kubernetes/bin/cfssl
    scp /opt/kubernetes/bin/cfssl* root@192.168.168.3:/opt/kubernetes/bin
    scp /opt/kubernetes/bin/cfssl* root@192.168.168.4:/opt/kubernetes/bin

4. Create the JSON configuration file used to generate the CA files

    cd /root/ssl
    cat > ca-config.json <<EOF
    {
      "signing": {
        "default": {
          "expiry": "876000h"
        },
        "profiles": {
          "kubernetes": {
            "expiry": "876000h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF

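"server auth" means a client can use this CA to verify the certificate presented by a server.

"client auth" means a server can use this CA to verify the certificate presented by a client.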

5. Create the JSON configuration file for the CA certificate signing request (CSR)

    cat > ca-csr.json <<EOF
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

6. Generate the CA certificate and private key

    # cfssl gencert -initca ca-csr.json | cfssljson -bare ca
    # ls ca*
    ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem

Distribute the certificates to every node with scp:

    # cp ca.pem ca-key.pem /opt/kubernetes/ssl
    # scp ca.pem ca-key.pem root@192.168.168.3:/opt/kubernetes/ssl
    # scp ca.pem ca-key.pem root@192.168.168.4:/opt/kubernetes/ssl

Note: every worker node also needs a copy of ca.pem and ca-key.pem.

7. Create the etcd certificate signing request

    # cat > etcd-csr.json <<EOF
    {
      "CN": "etcd",
      "hosts": [
        "k8s-master",
        "work-node01",
        "work-node02",
        "192.168.168.2",
        "192.168.168.3",
        "192.168.168.4"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

8. Generate the etcd certificate and private key

    # cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
    # ls etcd*
    etcd.csr  etcd-csr.json  etcd-key.pem  etcd.pem

Distribute the certificate files:

    # cp /root/ssl/etcd*.pem /opt/kubernetes/ssl
    # scp /root/ssl/etcd*.pem root@192.168.168.3:/opt/kubernetes/ssl
    # scp /root/ssl/etcd*.pem root@192.168.168.4:/opt/kubernetes/ssl

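V. Install and configure the etcd cluster (the clocks of the three hosts must be synchronized first)

1. Update /etc/hosts (on all three hosts)

Either edit the file with vi /etc/hosts, or append the entries directly:

    # echo '192.168.168.2 k8s-master
    192.168.168.3 work-node01
    192.168.168.4 work-node02' >> /etc/hosts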
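Appending with `>>` adds duplicate lines if the step is run twice. A minimal sketch of an idempotent variant follows; `add_host_entry` is a hypothetical helper name, and the third argument (the target file) exists only so the function can be tried on a scratch file.

```shell
# Sketch: append "IP NAME" to a hosts file only if NAME is not already present.
# add_host_entry is an illustrative helper, not a standard tool.
add_host_entry() {
    ip="$1"
    name="$2"
    file="${3:-/etc/hosts}"
    # -F: treat NAME as a literal string, -w: match it as a whole word
    if ! grep -qwF "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage (as root, on each host):
#   add_host_entry 192.168.168.2 k8s-master
#   add_host_entry 192.168.168.3 work-node01
#   add_host_entry 192.168.168.4 work-node02
```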

2. Download the etcd release package

wget https://github.com/coreos/etcd/releases/download/v3.4.0/etcd-v3.4.0-linux-amd64.tar.gz

3. Unpack and install etcd (same steps on all three hosts)

    mkdir /var/lib/etcd
    tar -zxvf etcd-v3.4.0-linux-amd64.tar.gz && cd $(ls -d etcd-*)
    cp etcd etcdctl /opt/kubernetes/bin

4. Create the etcd systemd unit file

    cat > /usr/lib/systemd/system/etcd.service <<EOF
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd
    EnvironmentFile=/opt/kubernetes/conf/etcd.conf
    # set GOMAXPROCS to number of processors
    ExecStart=/bin/bash -c "GOMAXPROCS=1 /opt/kubernetes/bin/etcd"
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

Distribute etcd.service to the worker nodes:

    scp /usr/lib/systemd/system/etcd.service root@192.168.168.3:/usr/lib/systemd/system/etcd.service
    scp /usr/lib/systemd/system/etcd.service root@192.168.168.4:/usr/lib/systemd/system/etcd.service

5. Edit etcd.conf on k8s-master (192.168.168.2)

    # vi /opt/kubernetes/conf/etcd.conf
    #[Member]
    ETCD_NAME="k8s-master"
    ETCD_DATA_DIR="/var/lib/etcd/k8s.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.168.2:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.168.2:2379,https://127.0.0.1:2379"
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.168.2:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.168.2:2379"
    ETCD_INITIAL_CLUSTER="k8s-master=https://192.168.168.2:2380,work-node01=https://192.168.168.3:2380,work-node02=https://192.168.168.4:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    #[Security]
    ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    ETCD_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_CLIENT_CERT_AUTH="true"
    ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    ETCD_PEER_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_PEER_CLIENT_CERT_AUTH="true"

6. Edit etcd.conf on work-node01 (192.168.168.3)

    # vi /opt/kubernetes/conf/etcd.conf
    #[Member]
    ETCD_NAME="work-node01"
    ETCD_DATA_DIR="/var/lib/etcd/k8s.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.168.3:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.168.3:2379,https://127.0.0.1:2379"
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.168.3:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.168.3:2379"
    ETCD_INITIAL_CLUSTER="k8s-master=https://192.168.168.2:2380,work-node01=https://192.168.168.3:2380,work-node02=https://192.168.168.4:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    #[Security]
    ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    ETCD_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_CLIENT_CERT_AUTH="true"
    ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    ETCD_PEER_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_PEER_CLIENT_CERT_AUTH="true"

7. Edit etcd.conf on work-node02 (192.168.168.4)

    # vi /opt/kubernetes/conf/etcd.conf
    #[Member]
    ETCD_NAME="work-node02"
    ETCD_DATA_DIR="/var/lib/etcd/k8s.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.168.4:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.168.4:2379,https://127.0.0.1:2379"
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.168.4:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.168.4:2379"
    ETCD_INITIAL_CLUSTER="k8s-master=https://192.168.168.2:2380,work-node01=https://192.168.168.3:2380,work-node02=https://192.168.168.4:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    #[Security]
    ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    ETCD_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_CLIENT_CERT_AUTH="true"
    ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    ETCD_PEER_TRUSTED_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_PEER_CLIENT_CERT_AUTH="true"
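The three etcd.conf files differ only in the member name and the host IP, so they could be rendered from one template instead of being typed three times. This is a sketch under that assumption; `render_etcd_conf` is a hypothetical helper, while the variable names and values are the ones used in the files above.

```shell
# Sketch: print an etcd.conf for one member; only the name and IP vary.
# render_etcd_conf is an illustrative helper, not part of etcd.
render_etcd_conf() {
    name="$1"
    ip="$2"
    ssl=/opt/kubernetes/ssl
    cluster="k8s-master=https://192.168.168.2:2380,work-node01=https://192.168.168.3:2380,work-node02=https://192.168.168.4:2380"
    cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/k8s.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,https://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
#[Security]
ETCD_CERT_FILE="${ssl}/etcd.pem"
ETCD_KEY_FILE="${ssl}/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="${ssl}/ca.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_PEER_CERT_FILE="${ssl}/etcd.pem"
ETCD_PEER_KEY_FILE="${ssl}/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="${ssl}/ca.pem"
ETCD_PEER_CLIENT_CERT_AUTH="true"
EOF
}

# Usage, on work-node01 for example:
#   render_etcd_conf work-node01 192.168.168.3 > /opt/kubernetes/conf/etcd.conf
```

Generating the file this way also avoids the easy mistake of saving it under a misspelled name, since the path is written once.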

8. Start etcd on every etcd node and enable it at boot

# systemctl daemon-reload

# systemctl start etcd.service

# systemctl status etcd.service

# systemctl enable etcd

Check the health and status of each etcd endpoint:

    # etcdctl --cacert=/opt/kubernetes/ssl/ca.pem \
        --cert=/opt/kubernetes/ssl/etcd.pem \
        --key=/opt/kubernetes/ssl/etcd-key.pem \
        --endpoints="https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379" endpoint health
    https://192.168.168.2:2379 is healthy: successfully committed proposal: took = 37.391708ms
    https://192.168.168.3:2379 is healthy: successfully committed proposal: took = 37.9063ms
    https://192.168.168.4:2379 is healthy: successfully committed proposal: took = 37.364345ms

    # etcdctl --write-out=table --cacert=/opt/kubernetes/ssl/ca.pem --cert=/opt/kubernetes/ssl/etcd.pem --key=/opt/kubernetes/ssl/etcd-key.pem --endpoints="https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379" endpoint status
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    |          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | https://192.168.168.2:2379 | 8e26ead5138d3770 |   3.4.0 |   20 kB |      true |      false |         3 |         12 |                 12 |        |
    | https://192.168.168.3:2379 | 8dda35f790d1cc37 |   3.4.0 |   16 kB |     false |      false |         3 |         12 |                 12 |        |
    | https://192.168.168.4:2379 |  9c1fbda9f18d4be |   3.4.0 |   20 kB |     false |      false |         3 |         12 |                 12 |        |
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

List the members of the etcd cluster:

    # etcdctl --cacert=/opt/kubernetes/ssl/ca.pem \
        --cert=/opt/kubernetes/ssl/etcd.pem \
        --key=/opt/kubernetes/ssl/etcd-key.pem \
        --endpoints="https://192.168.168.2:2379" member list
    9c1fbda9f18d4be, started, work-node02, https://192.168.168.4:2380, https://192.168.168.4:2379, false
    8dda35f790d1cc37, started, work-node01, https://192.168.168.3:2380, https://192.168.168.3:2379, false
    8e26ead5138d3770, started, k8s-master, https://192.168.168.2:2380, https://192.168.168.2:2379, false
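Every etcdctl call above repeats the same TLS flags and endpoint list. A small wrapper can carry them; the sketch below assumes the certificate paths and endpoints used in this guide, and `etcdctl_k8s` is a hypothetical function name.

```shell
# Sketch: wrap etcdctl with the TLS flags and endpoints used in this guide.
# etcdctl_k8s is an illustrative name; any extra arguments are passed through.
etcdctl_k8s() {
    etcdctl \
        --cacert=/opt/kubernetes/ssl/ca.pem \
        --cert=/opt/kubernetes/ssl/etcd.pem \
        --key=/opt/kubernetes/ssl/etcd-key.pem \
        --endpoints="https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379" \
        "$@"
}

# Usage:
#   etcdctl_k8s endpoint health
#   etcdctl_k8s --write-out=table endpoint status
#   etcdctl_k8s member list
```

Placing the function in a file under /etc/profile.d would make it available in every login shell, alongside the ETCDCTL_API=3 setting from section IV.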

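VI. Install docker-engine on all three hosts

1. For the detailed steps, see "Oracle Linux7安装Docker" (Installing Docker on Oracle Linux 7)

2. Configure Docker to use the systemd cgroup driver

Set up Docker's JSON configuration file:

    # vi /etc/docker/daemon.json
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "registry-mirrors": ["https://wghlmi3i.mirror.aliyuncs.com"]
    }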

VII. Install and configure the Kubernetes cluster

1. Download the Kubernetes release packages (this guide uses k8s-1.16.4)

kubernetes-server-linux-amd64.tar.gz

kubernetes-node-linux-amd64.tar.gz

kubernetes.tar.gz

2. Unpack the Kubernetes archive, which creates a kubernetes directory

tar -zxvf kubernetes-server-linux-amd64.tar.gz

3. Configure k8s-master (192.168.168.2)

1) Copy the k8s executables into /opt/kubernetes/bin

    # cp -r /opt/software/kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} /opt/kubernetes/bin/

2) Set up kubectl command completion

    # yum install -y bash-completion
    # source /usr/share/bash-completion/bash_completion
    # source <(kubectl completion bash)
    # echo "source <(kubectl completion bash)" >> ~/.bashrc

3) Create the JSON configuration file for the Kubernetes CSR:

    # cd /root/ssl
    # cat > kubernetes-csr.json <<EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "127.0.0.1",
        "192.168.168.2",
        "192.168.168.3",
        "192.168.168.4",
        "10.1.0.1",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

Note: 192.168.168.2 is the k8s-master IP; for a multi-master cluster, the cluster VIP can be added to the hosts list next to it. JSON does not allow comments, so keep such remarks out of the file itself. 10.1.0.1 is the first IP of the service-cluster range.
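The "first IP of the service range" rule can be checked with a few lines of shell arithmetic. This is a sketch, assuming the CIDR is given with its network address (host bits all zero); `nth_ip` is a hypothetical helper name.

```shell
# Sketch: print the N-th address of an IPv4 CIDR block.
# Assumes the CIDR is written with its network address, e.g. 10.1.0.0/16.
# The body runs in a subshell, so changing IFS does not leak to the caller.
nth_ip() (
    cidr="$1"
    n="$2"
    base="${cidr%/*}"          # strip the /prefix part
    IFS=.
    set -- $base               # split the dotted quad into $1..$4
    num=$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 + n ))
    printf '%d.%d.%d.%d\n' \
        $(( (num >> 24) & 255 )) $(( (num >> 16) & 255 )) \
        $(( (num >> 8) & 255 ))  $(( num & 255 ))
)

# Usage:
#   nth_ip 10.1.0.0/16 1    # address for the kubernetes service, listed in the CSR hosts
#   nth_ip 10.1.0.0/16 2    # address conventionally given to cluster DNS
```

The function does not validate that the offset stays inside the prefix; for the small offsets used here that is not a concern.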

4) Generate the Kubernetes certificate and private key in /root/ssl and distribute them to every node

    # cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
    # cp kubernetes*.pem /opt/kubernetes/ssl/
    # scp kubernetes*.pem root@192.168.168.3:/opt/kubernetes/ssl/
    # scp kubernetes*.pem root@192.168.168.4:/opt/kubernetes/ssl/

5) Create the JSON configuration file for the admin certificate CSR

    # cat > admin-csr.json <<EOF
    {
      "CN": "admin",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    EOF

6) Generate the admin certificate and private key

    # cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
    # cp admin*.pem /opt/kubernetes/ssl/

7) Create the client token file used by kube-apiserver

Create the token directory on every k8s node, then generate a random token for programmatic login and write it to the CSV file:

    # mkdir /opt/kubernetes/token
    # export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
    # cat > /opt/kubernetes/token/bootstrap-token.csv <<EOF
    ${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
    EOF
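To make the token format explicit: 16 random bytes printed as hexadecimal give a 32-character string, and the CSV line has the columns token, user name, user ID, and group. The sketch below wraps both steps; the function names are hypothetical, and the pipeline is the one used above.

```shell
# Sketch: generate a 32-character hex token from 16 random bytes.
# gen_bootstrap_token and bootstrap_csv_line are illustrative helper names.
gen_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Print one line of the token file: token,user,uid,"group"
bootstrap_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Usage:
#   token=$(gen_bootstrap_token)
#   bootstrap_csv_line "$token" > /opt/kubernetes/token/bootstrap-token.csv
```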

8) Create the basic username/password authentication file

Add the following lines to /opt/kubernetes/token/basic-auth.csv:

    # vi /opt/kubernetes/token/basic-auth.csv
    admin,admin,1
    readonly,readonly,2

    # kubectl config set-credentials admin \
        --client-certificate=/opt/kubernetes/ssl/admin.pem \
        --embed-certs=true \
        --client-key=/opt/kubernetes/ssl/admin-key.pem
    User "admin" set.

9) Create the kube-apiserver systemd unit file

    # vi /usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    After=etcd.service
    Wants=etcd.service

    [Service]
    EnvironmentFile=/opt/kubernetes/conf/apiserver.conf
    ExecStart=/opt/kubernetes/bin/kube-apiserver \
        $KUBE_API_ADDRESS \
        $KUBE_ETCD_SERVERS \
        $KUBE_SERVICE_ADDRESSES \
        $KUBE_ADMISSION_CONTROL \
        $KUBE_API_ARGS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

Create the log directories (the first mkdir is needed on every k8s node):

    # mkdir /var/log/kubernetes
    # mkdir /var/log/kubernetes/apiserver && touch /var/log/kubernetes/apiserver/api-audit.log

10) Create the audit policy file

    cat > /opt/kubernetes/yaml/audit-policy.yaml <<EOF
    # Log requests from the listed users at the Request level.
    apiVersion: audit.k8s.io/v1beta1
    kind: Policy
    omitStages:
      - "RequestReceived"
    rules:
      - level: Request
        users: ["admin"]
        resources:
          - group: ""
            resources: ["*"]
      - level: Request
        users: ["system:anonymous"]
        resources:
          - group: ""
            resources: ["*"]
    EOF

11) Create the kube-apiserver configuration file and start the service

    # vi /opt/kubernetes/conf/apiserver.conf
    ## kubernetes apiserver system config
    #
    ## The address on the local server to listen to.
    KUBE_API_ADDRESS="--advertise-address=192.168.168.2 --bind-address=192.168.168.2"
    #
    ## The port on the local server to listen on.
    #KUBE_API_PORT="--port=8080"
    #
    ## Port minions listen on
    #KUBELET_PORT="--kubelet-port=10250"
    #
    ## Comma separated list of nodes in the etcd cluster
    KUBE_ETCD_SERVERS="--etcd-servers=https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379"
    #
    ## Address range to use for services
    KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.1.0.0/16"
    #
    ## default admission control policies
    KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction,ValidatingAdmissionWebhook,MutatingAdmissionWebhook"
    #
    ## Add your own!
    KUBE_API_ARGS="--secure-port=6443 \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth \
      --token-auth-file=/opt/kubernetes/token/bootstrap-token.csv \
      --service-node-port-range=30000-50000 \
      --tls-cert-file=/opt/kubernetes/ssl/kubernetes.pem \
      --tls-private-key-file=/opt/kubernetes/ssl/kubernetes-key.pem \
      --client-ca-file=/opt/kubernetes/ssl/ca.pem \
      --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
      --etcd-cafile=/opt/kubernetes/ssl/ca.pem \
      --etcd-certfile=/opt/kubernetes/ssl/kubernetes.pem \
      --etcd-keyfile=/opt/kubernetes/ssl/kubernetes-key.pem \
      --allow-privileged=true \
      --logtostderr=true \
      --log-dir=/var/log/kubernetes/apiserver \
      --v=4 \
      --audit-policy-file=/opt/kubernetes/yaml/audit-policy.yaml \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=100 \
      --audit-log-path=/var/log/kubernetes/apiserver/api-audit.log"

    # systemctl daemon-reload
    # systemctl enable kube-apiserver
    # systemctl start kube-apiserver
    # systemctl status kube-apiserver

12) Create the kube-controller-manager systemd unit file

    # vi /usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/conf/controller-manager.conf
    ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

    # mkdir /var/log/kubernetes/controller-manager

13) Create the kube-controller-manager configuration file and start the service

    # vi /opt/kubernetes/conf/controller-manager.conf
    ###
    # The following values are used to configure the kubernetes controller-manager
    #
    # defaults from config and apiserver should be adequate
    #
    # Add your own!
    KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 \
      --master=http://127.0.0.1:8080 \
      --service-cluster-ip-range=10.1.0.0/16 \
      --cluster-cidr=10.2.0.0/16 \
      --allocate-node-cidrs=true \
      --cluster-name=kubernetes \
      --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
      --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
      --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
      --root-ca-file=/opt/kubernetes/ssl/ca.pem \
      --leader-elect=true \
      --logtostderr=true \
      --v=4 \
      --log-dir=/var/log/kubernetes/controller-manager"

Note: --service-cluster-ip-range is the address range for Services; --cluster-cidr is the address range for Pods.

    # systemctl daemon-reload
    # systemctl enable kube-controller-manager
    # systemctl start kube-controller-manager
    # systemctl status kube-controller-manager

14) Create the kube-scheduler systemd unit file

    # vi /usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/conf/scheduler.conf
    ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_ARGS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

    # mkdir /var/log/kubernetes/scheduler

15) Create the kube-scheduler configuration file and start the service

    # vi /opt/kubernetes/conf/scheduler.conf
    ###
    # kubernetes scheduler config
    #
    # default config should be adequate
    #
    # Add your own!
    KUBE_SCHEDULER_ARGS="--master=http://127.0.0.1:8080 \
      --leader-elect=true \
      --logtostderr=true \
      --v=4 \
      --log-dir=/var/log/kubernetes/scheduler"

    # systemctl daemon-reload
    # systemctl enable kube-scheduler
    # systemctl start kube-scheduler
    # systemctl status kube-scheduler

16) Create the JSON configuration file for the kube-proxy certificate CSR

    # cd /root/ssl
    # cat > kube-proxy-csr.json << EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

17) Generate the kube-proxy certificate and private key and distribute them to every node

    # cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
    # scp kube-proxy*.pem root@192.168.168.3:/opt/kubernetes/ssl/
    # scp kube-proxy*.pem root@192.168.168.4:/opt/kubernetes/ssl/

18) Generate the bootstrap.kubeconfig file

    # vi /opt/kubernetes/scripts/environment.sh
    #!/bin/bash
    # Create the kubelet bootstrapping kubeconfig
    BOOTSTRAP_TOKEN=$(cat /opt/kubernetes/token/bootstrap-token.csv | awk -F ',' '{print $1}')
    KUBE_APISERVER="https://192.168.168.2:6443"
    # Set cluster parameters
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=bootstrap.kubeconfig
    # Set client authentication parameters
    kubectl config set-credentials kubelet-bootstrap \
      --token=${BOOTSTRAP_TOKEN} \
      --kubeconfig=bootstrap.kubeconfig
    # Set context parameters
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kubelet-bootstrap \
      --kubeconfig=bootstrap.kubeconfig
    # Set the default context
    kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

    # cd /opt/kubernetes/scripts && sh environment.sh

19) Generate the kubelet.kubeconfig file

    # vi /opt/kubernetes/scripts/envkubelet.kubeconfig.sh
    #!/bin/bash
    # Create the kubelet kubeconfig
    BOOTSTRAP_TOKEN=$(cat /opt/kubernetes/token/bootstrap-token.csv | awk -F ',' '{print $1}')
    KUBE_APISERVER="https://192.168.168.2:6443"
    # Set cluster parameters
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=kubelet.kubeconfig
    # Set client authentication parameters
    kubectl config set-credentials kubelet \
      --token=${BOOTSTRAP_TOKEN} \
      --kubeconfig=kubelet.kubeconfig
    # Set context parameters
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kubelet \
      --kubeconfig=kubelet.kubeconfig
    # Set the default context
    kubectl config use-context default --kubeconfig=kubelet.kubeconfig

    # cd /opt/kubernetes/scripts && sh envkubelet.kubeconfig.sh

20) Generate the kube-proxy kubeconfig file

    # vi /opt/kubernetes/scripts/env_proxy.sh
    #!/bin/bash
    # Create the kube-proxy kubeconfig file
    BOOTSTRAP_TOKEN=$(cat /opt/kubernetes/token/bootstrap-token.csv | awk -F ',' '{print $1}')
    KUBE_APISERVER="https://192.168.168.2:6443"
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config set-credentials kube-proxy \
      --client-certificate=/root/ssl/kube-proxy.pem \
      --client-key=/root/ssl/kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

    # cd /opt/kubernetes/scripts && sh env_proxy.sh

21) Bind the kubelet-bootstrap user to the system cluster role

    # kubectl create clusterrolebinding kubelet-bootstrap \
        --clusterrole=system:node-bootstrapper \
        --user=kubelet-bootstrap
    clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

22) Distribute bootstrap.kubeconfig, kubelet.kubeconfig, and kube-proxy.kubeconfig to every worker node

    # cd /opt/kubernetes/scripts
    # cp *.kubeconfig /opt/kubernetes/conf
    # scp *.kubeconfig root@192.168.168.3:/opt/kubernetes/conf
    # scp *.kubeconfig root@192.168.168.4:/opt/kubernetes/conf

23) Check the health of the cluster components

    # kubectl get cs
    NAME                 STATUS    MESSAGE             ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    k8s-master           Healthy   {"health":"true"}
    work-node01          Healthy   {"health":"true"}
    work-node02          Healthy   {"health":"true"}

Note: because of a bug in Kubernetes v1.16.x, use the following command for now to view component health:

    # kubectl get cs -o=go-template='{{printf "|NAME|STATUS|MESSAGE|\n"}}{{range .items}}{{$name := .metadata.name}}{{range .conditions}}{{printf "|%s|%s|%s|\n" $name .status .message}}{{end}}{{end}}'
    |NAME|STATUS|MESSAGE|
    |controller-manager|True|ok|
    |scheduler|True|ok|
    |etcd-1|True|{"health":"true"}|
    |etcd-0|True|{"health":"true"}|
    |etcd-2|True|{"health":"true"}|

4. Configure work-node01/02

1) Install ipvsadm and related tools (same on every worker node)

yum install -y ipvsadm ipset bridge-utils conntrack

2) Copy the k8s executables into /opt/kubernetes/bin

    # tar -zxvf kubernetes-node-linux-amd64.tar.gz
    # cp /opt/software/kubernetes/node/bin/{kube-proxy,kubelet} /opt/kubernetes/bin/
    # scp /opt/software/kubernetes/node/bin/{kube-proxy,kubelet} root@192.168.168.4:/opt/kubernetes/bin/

3) Create the kubelet working directory (same on every worker node)

mkdir /var/lib/kubelet

4) Create the kubelet configuration file and YAML file

    # vi /opt/kubernetes/conf/kubelet.conf
    ###
    ## kubernetes kubelet (minion) config
    #
    KUBELET_ARGS="--hostname-override=192.168.168.3 \
      --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1 \
      --bootstrap-kubeconfig=/opt/kubernetes/conf/bootstrap.kubeconfig \
      --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig \
      --config=/opt/kubernetes/yaml/kubelet.yaml \
      --cert-dir=/opt/kubernetes/ssl \
      --network-plugin=cni \
      --fail-swap-on=false \
      --v=4 \
      --logtostderr=false \
      --log-dir=/var/log/kubernetes/kubelet"

Note: set --hostname-override to the IP of the node the file is on. --pod-infra-container-image can point to a private registry if one is available, for example --pod-infra-container-image={private registry IP}:80/k8s/pause-amd64:v3.1

    # vi /opt/kubernetes/yaml/kubelet.yaml
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: 0.0.0.0
    port: 10250
    readOnlyPort: 10255
    cgroupDriver: systemd
    clusterDNS: ["10.1.0.2"]
    clusterDomain: cluster.local.
    failSwapOn: false
    authentication:
      anonymous:
        enabled: true
      x509:
        clientCAFile: /opt/kubernetes/ssl/ca.pem
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 5m0s
        cacheUnauthorizedTTL: 30s
    evictionHard:
      imagefs.available: 15%
      memory.available: 100Mi
      nodefs.available: 10%
      nodefs.inodesFree: 5%
    maxOpenFiles: 1000000
    maxPods: 110

Note: address can be set to the node's own IP (0.0.0.0 listens on all interfaces); clusterDNS is the second IP of the service-cluster range.

Create the kubelet log directory (same on every worker node):

    # mkdir -p /var/log/kubernetes/kubelet

Distribute kubelet.conf and kubelet.yaml to the other worker node, then change --hostname-override there to that node's IP:

    scp /opt/kubernetes/conf/kubelet.conf root@192.168.168.4:/opt/kubernetes/conf/
    scp /opt/kubernetes/yaml/kubelet.yaml root@192.168.168.4:/opt/kubernetes/yaml/

5) Create the kubelet systemd unit file and start the service

Note: on Ubuntu the unit file path is /lib/systemd/system/kubelet.service.

    # vi /usr/lib/systemd/system/kubelet.service
    [Unit]
    Description=Kubernetes Kubelet Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=docker.service
    Requires=docker.service

    [Service]
    WorkingDirectory=/var/lib/kubelet
    EnvironmentFile=/opt/kubernetes/conf/kubelet.conf
    ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_ARGS
    Restart=on-failure
    KillMode=process

    [Install]
    WantedBy=multi-user.target

Distribute kubelet.service to the other worker node (on Ubuntu: scp /lib/systemd/system/kubelet.service root@192.168.168.4:/lib/systemd/system/):

    scp /usr/lib/systemd/system/kubelet.service root@192.168.168.4:/usr/lib/systemd/system/

Then, on each worker node:

    systemctl daemon-reload
    systemctl enable kubelet
    systemctl start kubelet
    systemctl status kubelet

6) List the CSRs and approve the kubelet TLS certificate requests (run on k8s-master)

    # kubectl get csr
    NAME                                                   AGE   REQUESTOR           CONDITION
    node-csr-W4Ijt25xbyeEp_fqo6NW6UBSrzyzWmRC46V0JyM4U_o   8s    kubelet-bootstrap   Pending
    node-csr-c0tH_m3KHVtN3StGnlBh87g5B_54EOcR68VKL4bgn_s   85s   kubelet-bootstrap   Pending

    # kubectl get csr|grep 'Pending' | awk 'NR>0{print $1}'| xargs kubectl certificate approve
    certificatesigningrequest.certificates.k8s.io/node-csr-W4Ijt25xbyeEp_fqo6NW6UBSrzyzWmRC46V0JyM4U_o approved
    certificatesigningrequest.certificates.k8s.io/node-csr-c0tH_m3KHVtN3StGnlBh87g5B_54EOcR68VKL4bgn_s approved

After approval, the CONDITION column should read Approved,Issued:

    # kubectl get csr
    NAME                                                   AGE     REQUESTOR           CONDITION
    node-csr-W4Ijt25xbyeEp_fqo6NW6UBSrzyzWmRC46V0JyM4U_o   93s     kubelet-bootstrap   Approved,Issued
    node-csr-c0tH_m3KHVtN3StGnlBh87g5B_54EOcR68VKL4bgn_s   2m50s   kubelet-bootstrap   Approved,Issued
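The grep in the approval pipeline matches "Pending" anywhere on a line. A slightly stricter filter looks only at the last column and skips the header row. The sketch below assumes the four-column layout shown above, with CONDITION last; `pending_csr_names` is a hypothetical helper that reads `kubectl get csr` output on standard input.

```shell
# Sketch: print the names of CSRs whose last column (CONDITION) is exactly "Pending".
# pending_csr_names is an illustrative helper; it reads `kubectl get csr` output on stdin.
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on k8s-master (-r is a GNU xargs option: do nothing when the input is empty):
#   kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```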

Addendum: if work-node02 was created by cloning work-node01 in vCenter, kubelet on it reports "Unable to register node "work-node02" with API server: nodes "work-node02" is forbidden: node "work-node01" is not allowed to modify node "work-node02"". In that case, stop kubelet on work-node02, delete /opt/kubernetes/conf/kubelet.kubeconfig as well as kubelet-client-*.pem, kubelet.crt, and kubelet.key under /opt/kubernetes/ssl, copy kubelet.kubeconfig from k8s-master to work-node02 again, and restart kubelet.

7) Create the kube-proxy working directory and log directory (same on every worker node)

    # mkdir /var/lib/kube-proxy
    # mkdir /var/log/kubernetes/kube-proxy

8) Create the kube-proxy systemd unit file

Note: on Ubuntu the unit file path is /lib/systemd/system/kube-proxy.service.

    # vi /usr/lib/systemd/system/kube-proxy.service
    [Unit]
    Description=Kubernetes Kube-Proxy Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=network.target

    [Service]
    WorkingDirectory=/var/lib/kube-proxy
    EnvironmentFile=/opt/kubernetes/conf/kube-proxy.conf
    ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_ARGS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

Distribute kube-proxy.service to the other worker node (on Ubuntu: scp /lib/systemd/system/kube-proxy.service root@192.168.168.4:/lib/systemd/system/):

    scp /usr/lib/systemd/system/kube-proxy.service root@192.168.168.4:/usr/lib/systemd/system/

9) Create the kube-proxy configuration file and start the service

    # vi /opt/kubernetes/conf/kube-proxy.conf
    ###
    # kubernetes proxy config
    # Add your own!
    KUBE_PROXY_ARGS="--hostname-override=192.168.168.3 \
      --kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig \
      --cluster-cidr=10.2.0.0/16 \
      --masquerade-all \
      --feature-gates=SupportIPVSProxyMode=true \
      --proxy-mode=ipvs \
      --ipvs-min-sync-period=5s \
      --ipvs-sync-period=5s \
      --ipvs-scheduler=rr \
      --logtostderr=true \
      --v=4 \
      --log-dir=/var/log/kubernetes/kube-proxy"

Note: set --hostname-override to the IP of the node the file is on. --cluster-cidr is the Pod address range, the same 10.2.0.0/16 passed to kube-controller-manager, not the Service range.

Distribute kube-proxy.conf to the other worker node:

    scp /opt/kubernetes/conf/kube-proxy.conf root@192.168.168.4:/opt/kubernetes/conf/

    # systemctl daemon-reload
    # systemctl enable kube-proxy
    # systemctl start kube-proxy
    # systemctl status kube-proxy

10) Check the LVS state

    # ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.1.0.1:443 rr persistent 10800
      -> 192.168.168.2:6443           Masq    1      0          0

5. Configure the Calico network

1) Download Calico and the CNI plugins

Run on k8s-master:

    # mkdir -p /etc/calico/{conf,yaml,bin} /opt/cni/bin/ /etc/cni/net.d
    # wget https://github.com/projectcalico/calicoctl/releases/download/v3.10.2/calicoctl -P /etc/calico/bin
    # chmod +x /etc/calico/bin/calicoctl
    # cat > /etc/profile.d/calico.sh << 'EOF'
    CALICO_HOME=/etc/calico
    export PATH=$CALICO_HOME/bin:$PATH
    EOF
    # source /etc/profile.d/calico.sh
    # wget https://github.com/projectcalico/cni-plugin/releases/download/v3.10.2/calico-amd64 -P /opt/cni/bin
    # mv /opt/cni/bin/calico-amd64 /opt/cni/bin/calico
    # wget https://github.com/projectcalico/cni-plugin/releases/download/v3.10.2/calico-ipam-amd64 -P /opt/cni/bin
    # mv /opt/cni/bin/calico-ipam-amd64 /opt/cni/bin/calico-ipam
    # mkdir /opt/software/cni-plugins
    # wget https://github.com/containernetworking/plugins/releases/download/v0.6.0/cni-plugins-amd64-v0.6.0.tgz -P /opt/software/cni-plugins
    # tar -xvf /opt/software/cni-plugins/cni-plugins-amd64-v0.6.0.tgz -C /opt/software/cni-plugins && cp /opt/software/cni-plugins/{bandwidth,loopback,portmap,tuning} /opt/cni/bin/
    # chmod +x /opt/cni/bin/*
    # docker pull calico/node:v3.10.2
    # docker pull calico/cni:v3.10.2
    # docker pull calico/kube-controllers:v3.10.2
    # docker pull calico/pod2daemon-flexvol:v3.10.2

The heredoc delimiter is quoted ('EOF') so that $CALICO_HOME and $PATH are written to the file literally instead of being expanded when the file is created. If the worker nodes have no Internet access, copy the Calico image files from k8s-master to each of them.

Check the Calico images in Docker:

    # docker images
    REPOSITORY                  TAG       IMAGE ID       CREATED      SIZE
    calico/node                 v3.10.2   4a88ba569c29   6 days ago   192MB
    calico/cni                  v3.10.2   4f761b4ba7f5   6 days ago   163MB
    calico/kube-controllers     v3.10.2   8f87d09ab811   6 days ago   50.6MB
    calico/pod2daemon-flexvol   v3.10.2   5b249c03bee8   6 days ago   9.78MB

2) Create the CNI network configuration file on k8s-master

    # cat > /etc/cni/net.d/10-calico.conf <<EOF
    {
      "name": "calico-k8s-network",
      "cniVersion": "0.3.1",
      "type": "calico",
      "etcd_endpoints": "https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379",
      "etcd_key_file": "/opt/kubernetes/ssl/etcd-key.pem",
      "etcd_cert_file": "/opt/kubernetes/ssl/etcd.pem",
      "etcd_ca_cert_file": "/opt/kubernetes/ssl/ca.pem",
      "log_level": "info",
      "mtu": 1500,
      "ipam": {
        "type": "calico-ipam"
      },
      "policy": {
        "type": "k8s"
      },
      "kubernetes": {
        "kubeconfig": "/opt/kubernetes/conf/kubelet.kubeconfig"
      }
    }
    EOF

3) Create the file calicoctl uses to talk to etcd on k8s-master

    # cat > /etc/calico/calicoctl.cfg <<EOF
    apiVersion: projectcalico.org/v3
    kind: CalicoAPIConfig
    metadata:
    spec:
      datastoreType: "etcdv3"
      etcdEndpoints: "https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379"
      etcdKeyFile: "/opt/kubernetes/ssl/etcd-key.pem"
      etcdCertFile: "/opt/kubernetes/ssl/etcd.pem"
      etcdCACertFile: "/opt/kubernetes/ssl/ca.pem"
    EOF

4) Download calico.yaml on k8s-master

# wget https://docs.projectcalico.org/v3.10/getting-started/kubernetes/installation/hosted/calico.yaml -P /etc/calico/yaml/

Note: the manifest version here must match the version of the Calico Docker images.

Edit calico.yaml:

# cd /etc/calico/yaml
### Replace the etcd endpoints
sed -i 's@.*etcd_endpoints:.*@\ \ etcd_endpoints:\ \"https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379\"@gi' calico.yaml
### Replace the etcd certificates
export ETCD_CERT=$(cat /opt/kubernetes/ssl/etcd.pem | base64 | tr -d '\n')
export ETCD_KEY=$(cat /opt/kubernetes/ssl/etcd-key.pem | base64 | tr -d '\n')
export ETCD_CA=$(cat /opt/kubernetes/ssl/ca.pem | base64 | tr -d '\n')
sed -i "s@.*etcd-cert:.*@\ \ etcd-cert:\ ${ETCD_CERT}@gi" calico.yaml
sed -i "s@.*etcd-key:.*@\ \ etcd-key:\ ${ETCD_KEY}@gi" calico.yaml
sed -i "s@.*etcd-ca:.*@\ \ etcd-ca:\ ${ETCD_CA}@gi" calico.yaml
sed -i 's@.*etcd_ca:.*@\ \ etcd_ca:\ "/calico-secrets/etcd-ca"@gi' calico.yaml
sed -i 's@.*etcd_cert:.*@\ \ etcd_cert:\ "/calico-secrets/etcd-cert"@gi' calico.yaml
sed -i 's@.*etcd_key:.*@\ \ etcd_key:\ "/calico-secrets/etcd-key"@gi' calico.yaml
### Replace the IP pool CIDR
sed -i 's/192\.168\.0\.0/10.2.0.0/g' calico.yaml
### Replace the MTU value (adjust to the actual network)
sed -i 's/1440/1500/g' calico.yaml
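To see what the certificate substitution does without touching the real manifest, the following sketch replays one of the sed commands on a scratch file. The file contents and the dummy key are placeholders, not real Calico data, and `sed -i.bak` is used here only because it behaves the same on GNU and BSD sed.

```shell
# Work in a scratch directory so the real manifest and certificates stay untouched.
tmp="$(mktemp -d)"
printf 'dummy-key-material\n' > "$tmp/etcd-key.pem"
cat > "$tmp/calico.yaml" <<'EOF'
data:
  # etcd-key: null
EOF

# Same pipeline as in the step above: base64-encode, then strip the line wraps.
ETCD_KEY="$(base64 < "$tmp/etcd-key.pem" | tr -d '\n')"

# Replace the whole line mentioning "etcd-key:" (including the leading "# ").
sed -i.bak "s@.*etcd-key:.*@  etcd-key: ${ETCD_KEY}@" "$tmp/calico.yaml"
cat "$tmp/calico.yaml"
```

Because the pattern starts with `.*`, the commented-out placeholder line is replaced in full, which is why the result is an active `etcd-key:` entry rather than a comment.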

Create the Calico resources (run on the master):

# kubectl create -f /etc/calico/yaml/calico.yaml -n kube-system

5) Check the deployments and pods in the kube-system namespace

# kubectl get deployment,pod -n kube-system
NAME                                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/calico-kube-controllers   1/1     1            1           100m

NAME                                           READY   STATUS    RESTARTS   AGE
pod/calico-kube-controllers-6dbf77c57f-snft8   1/1     Running   0          100m
pod/calico-node-6nn4x                          1/1     Running   0          100m
pod/calico-node-sns4z                          1/1     Running   0          100m

6) Create the calico-node configuration file on k8s-master

# cat >/etc/calico/conf/calico.conf <<EOF
CALICO_NODENAME="k8s-master"
ETCD_ENDPOINTS=https://192.168.168.2:2379,https://192.168.168.3:2379,https://192.168.168.4:2379
ETCD_CA_CERT_FILE="/opt/kubernetes/ssl/ca.pem"
ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
CALICO_IP="192.168.168.2"
CALICO_IP6=""
CALICO_AS=""
NO_DEFAULT_POOLS=""
CALICO_NETWORKING_BACKEND=bird
FELIX_DEFAULTENDPOINTTOHOSTACTION=ACCEPT
FELIX_LOGSEVERITYSCREEN=info
EOF

Note: set CALICO_NODENAME to the hostname of the k8s-master node and CALICO_IP to its IP address. Within a single IDC, set CALICO_AS to the same AS number on every node.
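Since the systemd unit reads its settings from this file, a quick sanity check before starting the service can catch an empty or missing key. The file uses plain KEY=value lines, so a shell can source it. This is only a sketch: the helper name `check_calico_conf` and the choice of which keys count as required are assumptions.

```shell
# Hypothetical helper: fail if any key the service depends on is unset or empty.
check_calico_conf() {
  conf="$1"   # pass an absolute path; "." looks up bare names in PATH
  (
    # Source in a subshell so the caller's environment is not modified.
    . "$conf" || exit 1
    for key in CALICO_NODENAME CALICO_IP ETCD_ENDPOINTS \
               ETCD_CA_CERT_FILE ETCD_CERT_FILE ETCD_KEY_FILE; do
      # Indirect lookup of the variable named by $key.
      eval "value=\${$key:-}"
      if [ -z "$value" ]; then
        echo "missing or empty: $key" >&2
        exit 1
      fi
    done
  )
}
```

On k8s-master this could be invoked as `check_calico_conf /etc/calico/conf/calico.conf`; a non-zero exit status means a required key still needs a value.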

7) Create the calico-node systemd unit file on k8s-master

# vi /usr/lib/systemd/system/calico.service

Note: on Ubuntu the path is /lib/systemd/system/calico.service.

[Unit]
Description=calico-node
After=docker.service
Requires=docker.service

[Service]
User=root
PermissionsStartOnly=true
EnvironmentFile=/etc/calico/conf/calico.conf
ExecStart=/usr/bin/docker run --net=host --privileged --name=calico-node \
  -e NODENAME=${CALICO_NODENAME} \
  -e ETCD_ENDPOINTS=${ETCD_ENDPOINTS} \
  -e ETCD_CA_CERT_FILE=${ETCD_CA_CERT_FILE} \
  -e ETCD_CERT_FILE=${ETCD_CERT_FILE} \
  -e ETCD_KEY_FILE=${ETCD_KEY_FILE} \
  -e IP=${CALICO_IP} \
  -e IP6=${CALICO_IP6} \
  -e AS=${CALICO_AS} \
  -e NO_DEFAULT_POOLS=${NO_DEFAULT_POOLS} \
  -e CALICO_NETWORKING_BACKEND=${CALICO_NETWORKING_BACKEND} \
  -e FELIX_DEFAULTENDPOINTTOHOSTACTION=${FELIX_DEFAULTENDPOINTTOHOSTACTION} \
  -e FELIX_LOGSEVERITYSCREEN=${FELIX_LOGSEVERITYSCREEN} \
  -v /opt/kubernetes/ssl/ca.pem:/opt/kubernetes/ssl/ca.pem \
  -v /opt/kubernetes/ssl/etcd.pem:/opt/kubernetes/ssl/etcd.pem \
  -v /opt/kubernetes/ssl/etcd-key.pem:/opt/kubernetes/ssl/etcd-key.pem \
  -v /run/docker/plugins:/run/docker/plugins \
  -v /lib/modules:/lib/modules \
  -v /var/run/calico:/var/run/calico \
  -v /var/log/calico:/var/log/calico \
  -v /var/lib/calico:/var/lib/calico \
  calico/node:v3.10.2
ExecStop=/usr/bin/docker rm -f calico-node
Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target

# mkdir -p /var/log/calico /var/lib/calico /var/run/calico
# systemctl daemon-reload
# systemctl enable calico
# systemctl start calico
# systemctl status calico

8) Check the Calico node information

# calicoctl get node -o wide
NAME          ASN       IPV4               IPV6
k8s-master    (64512)   192.168.168.2/32
work-node01   (64512)   192.168.168.3/24
work-node02   (64512)   192.168.168.4/24

9) Check the BGP peer status

# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------+-------------------+-------+----------+-------------+
| PEER ADDRESS  | PEER TYPE         | STATE | SINCE    | INFO        |
+---------------+-------------------+-------+----------+-------------+
| 192.168.168.3 | node-to-node mesh | up    | 07:41:40 | Established |
| 192.168.168.4 | node-to-node mesh | up    | 07:41:43 | Established |
+---------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

10) Create the Calico IPPool

# calicoctl get ippool -o wide
NAME                  CIDR                  NAT     IPIPMODE      VXLANMODE   DISABLED   SELECTOR
default-ipv4-ippool   192.168.0.0/16        true    Always        Never       false      all()
default-ipv6-ippool   fd93:317a:e57d::/48   false   Never         Never       false      all()
# calicoctl delete ippool default-ipv4-ippool
Successfully deleted 1 'IPPool' resource(s)
# calicoctl apply -f - << EOF
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: cluster-ipv4-ippool
spec:
  cidr: 10.2.0.0/16
  ipipMode: CrossSubnet
  natOutgoing: true
EOF
# calicoctl get ippool -o wide
NAME                  CIDR                  NAT     IPIPMODE      VXLANMODE   DISABLED   SELECTOR
cluster-ipv4-ippool   10.2.0.0/16           true    CrossSubnet   Never       false      all()
default-ipv6-ippool   fd08:4d71:39af::/48   false   Never         Never       false      all()

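Note: default-ipv4-ippool (192.168.0.0/16) is Calico's initial IP pool. The ipipMode field accepts the following values:

- Always: always use IPIP encapsulation (the default)

- CrossSubnet: use IPIP encapsulation only for traffic that crosses subnets; suitable when some Kubernetes nodes sit in other subnets, and a reasonable middle ground

- Never: never use IPIP encapsulation; suitable when all Kubernetes nodes are confirmed to be in the same subnet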
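CrossSubnet hinges on whether two nodes share a subnet. The comparison can be sketched in shell as below; the helper names `ip_to_int` and `same_subnet` are hypothetical, and this only illustrates the idea rather than how Calico implements the check internally.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address into one integer.
ip_to_int() {
  local IFS=.
  # Unquoted on purpose: split the address on "." into four positional parameters.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: succeed when both addresses share the same /prefix network.
same_subnet() {
  local a b mask
  a="$(ip_to_int "$1")"
  b="$(ip_to_int "$2")"
  # Build the netmask for the prefix length, limited to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( a & mask )) -eq $(( b & mask )) ]
}

if same_subnet 192.168.168.2 192.168.168.3 24; then
  echo "same subnet: no IPIP needed under CrossSubnet"
else
  echo "different subnets: IPIP would be used under CrossSubnet"
fi
```

With a /24 prefix the three hosts of this cluster fall into one subnet; with a /32 prefix no two distinct addresses ever do.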

Check the routing table:

# route -n
Destination     Gateway         Genmask           Flags   Metric   Ref   Use   Iface
0.0.0.0         192.168.168.1   0.0.0.0           UG      100      0     0     ens34
10.2.88.192     0.0.0.0         255.255.255.192   U       0        0     0     *
10.2.140.64     192.168.168.3   255.255.255.192   UG      0        0     0     tunl0
10.2.196.128    192.168.168.4   255.255.255.192   UG      0        0     0     tunl0
172.17.0.0      0.0.0.0         255.255.0.0       U       0        0     0     docker0
192.168.168.0   0.0.0.0         255.255.255.0     U       100      0     0     ens34

11) Check the node status (run on k8s-master); a status of Ready means everything is working

# kubectl get node
NAME                 STATUS   ROLES    AGE   VERSION
node/192.168.168.3   Ready    <none>   53s   v1.16.4
node/192.168.168.4   Ready    <none>   53s   v1.16.4
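When the cluster grows, scanning the STATUS column by eye gets tedious. A small filter over the same output can list only the nodes that are not Ready; the function name `not_ready_nodes` is hypothetical, and the sketch assumes the default column layout shown above.

```shell
# Hypothetical helper: read `kubectl get node` output on stdin and print the
# NAME of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  # NR > 1 skips the header row; $2 is the STATUS column.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

It is meant to be used as `kubectl get node | not_ready_nodes`; empty output means every node reports Ready. A cordoned node, whose status reads `Ready,SchedulingDisabled`, is also listed.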

6. Deploy Kubernetes DNS (run on the master; this guide uses k8s 1.16.4)

On Ubuntu, work around the CoreDNS failure caused by the 127.0.0.53 stub resolver:

# rm -f /etc/resolv.conf
# ln -s /run/systemd/resolve/resolv.conf /etc/resolv.conf
# echo 'nameserver 1.2.4.8' >> /run/systemd/resolve/resolv.conf
# vi /etc/hosts
127.0.0.1 localhost work-node01

1) Obtain the coredns.yaml file

# cd /opt/software
# tar -zxvf kubernetes.tar.gz
# mv kubernetes/cluster/addons/dns/coredns.yaml.base /opt/kubernetes/yaml/coredns.yaml

2) Edit the coredns.yaml configuration file

# vi /opt/kubernetes/yaml/coredns.yaml

Change the following four places in coredns.yaml to match your own domain and cluster IP address:

1. Change kubernetes __PILLAR__DNS__DOMAIN__ to kubernetes cluster.local.
2. Change clusterIP: __PILLAR__DNS__SERVER__ to clusterIP: 10.1.0.2
3. Change memory: __PILLAR__DNS__MEMORY__LIMIT__ to memory: 170Mi
4. Change image: k8s.gcr.io/coredns:1.6.2 to image: coredns/coredns:1.6.2
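The same four changes can also be applied non-interactively with sed instead of vi. The sketch below uses the values listed above; the function name `patch_coredns` is hypothetical, and `-i.bak` keeps a backup copy next to the edited file.

```shell
# Hypothetical helper: apply the four substitutions to a coredns.yaml file.
patch_coredns() {
  file="$1"
  sed -i.bak \
    -e 's/__PILLAR__DNS__DOMAIN__/cluster.local./g' \
    -e 's/__PILLAR__DNS__SERVER__/10.1.0.2/g' \
    -e 's/__PILLAR__DNS__MEMORY__LIMIT__/170Mi/g' \
    -e 's#k8s\.gcr\.io/coredns:1\.6\.2#coredns/coredns:1.6.2#g' \
    "$file"
}
```

Running `patch_coredns /opt/kubernetes/yaml/coredns.yaml` followed by `grep -n PILLAR /opt/kubernetes/yaml/coredns.yaml` is a quick way to confirm that none of these placeholders remain.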

3) Create CoreDNS

# kubectl create -f /opt/kubernetes/yaml/coredns.yaml
serviceaccount "coredns" created
clusterrole.rbac.authorization.k8s.io "system:coredns" created
clusterrolebinding.rbac.authorization.k8s.io "system:coredns" created
configmap "coredns" created
deployment.extensions "coredns" created
service "coredns" created

4) Check the CoreDNS service status

# kubectl get deployment,pod -n kube-system -o wide
NAME                                      READY   UP-TO-DATE   AVAILABLE   AGE     CONTAINERS                IMAGES                            SELECTOR
deployment.apps/calico-kube-controllers   1/1     1            1           3h43m   calico-kube-controllers   calico/kube-controllers:v3.10.2   k8s-app=calico-kube-controllers
deployment.apps/coredns                   1/1     1            1           90m     coredns                   k8s.gcr.io/coredns:1.6.2          k8s-app=kube-dns

NAME                                           READY   STATUS    RESTARTS   AGE     IP              NODE          NOMINATED NODE   READINESS GATES
pod/calico-kube-controllers-7c4fb94b96-kdx2r   1/1     Running   1          3h43m   192.168.168.4   work-node02   <none>           <none>
pod/calico-node-8kr9t                          1/1     Running   1          3h43m   192.168.168.3   work-node01   <none>           <none>
pod/calico-node-k4vg7                          1/1     Running   1          3h43m   192.168.168.4   work-node02   <none>           <none>
pod/coredns-788bb5bd8d-ctk8f                   1/1     Running   0          15m     10.2.196.132    work-node01   <none>           <none>
# kubectl get svc --all-namespaces
NAMESPACE     NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
default       kubernetes   ClusterIP   10.1.0.1     <none>        443/TCP                  42h
kube-system   kube-dns     ClusterIP   10.1.0.2     <none>        53/UDP,53/TCP,9153/TCP   158m

5) Create a busybox pod to test CoreDNS

# mkdir /opt/pods_yaml
# cat > /opt/pods_yaml/busybox.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - image: busybox:1.28.3
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
    name: busybox
  restartPolicy: Always
EOF
# kubectl create -f /opt/pods_yaml/busybox.yaml
pod/busybox created
# kubectl get pod -o wide
NAME      READY   STATUS    RESTARTS   AGE     IP             NODE            NOMINATED NODE   READINESS GATES
busybox   1/1     Running   0          6m14s   10.2.140.161   192.168.168.4   <none>           <none>

6) Test CoreDNS name resolution

# kubectl exec busybox -- cat /etc/resolv.conf
nameserver 10.1.0.2
search default.svc.cluster.local. svc.cluster.local. cluster.local.
options ndots:5
# kubectl exec -ti busybox -- nslookup www.baidu.com
Server:    10.1.0.2
Address 1: 10.1.0.2 kube-dns.kube-system.svc.cluster.local

Name:      www.baidu.com
Address 1: 2408:80f0:410c:1c:0:ff:b00e:347f
Address 2: 2408:80f0:410c:1d:0:ff:b07a:39af
Address 3: 61.135.169.125
Address 4: 61.135.169.121
# kubectl exec -i --tty busybox -- /bin/sh
/ # nslookup kubernetes
Server:    10.1.0.2
Address 1: 10.1.0.2 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes
Address 1: 10.1.0.1 kubernetes.default.svc.cluster.local

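Note: if kubectl exec busybox fails with the error below, the following command is a temporary workaround. It binds system:anonymous to cluster-admin, which gives unauthenticated requests full control of the cluster, so use it only in a test environment.

# kubectl exec busybox -- cat /etc/resolv.conf
error: unable to upgrade connection: Forbidden (user=system:anonymous, verb=create, resource=nodes, subresource=proxy)
# kubectl create clusterrolebinding system:anonymous --clusterrole=cluster-admin --user=system:anonymous
clusterrolebinding.rbac.authorization.k8s.io/system:anonymous created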