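2. Ceph Installation and Deployment

I. Hardware

monitor: 16 cores, 16 GB RAM, 200 GB disk

mgr: 16 cores, 16 GB RAM, 200 GB disk; double these figures if the RGW object storage service is enabled

osd: 16 cores, 16 GB RAM, SSD, 10 GbE NIC

II. Ceph Storage Deployment

Deployment environment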

| Hostname | OS | Spec | Public network | Cluster network | Roles and devices |
| --- | --- | --- | --- | --- | --- |
| node1 | Ubuntu 22.04.1 | 2 cores, 2 GB | 192.168.42.140 | 172.16.1.1 | mon, mgr, radosgw, osd, /dev/nvme0n1 |
| node2 | Ubuntu 22.04.1 | 2 cores, 2 GB | 192.168.42.141 | 172.16.1.2 | osd, mds, /dev/nvme0n1 |
| node3 | Ubuntu 22.04.1 | 2 cores, 2 GB | 192.168.42.142 | 172.16.1.3 | osd, /dev/nvme0n1, /dev/nvme0n2 |

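1. Environment preparation

Configure the network

Switch the package sources

Allow the root user to log in

Configure hostname resolution

root@node1:~# vim /etc/hosts

Add the following entries:

192.168.42.140 node1
192.168.42.141 node2
192.168.42.142 node3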
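The same three entries are needed on every node, and a plain append duplicates them when it is run twice. A minimal sketch of an idempotent append follows. The `add_host` helper and the scratch file are illustrative names introduced here, not part of the original procedure.

```shell
# add_host FILE IP NAME: append "IP NAME" unless NAME is already listed.
# (add_host is a hypothetical helper written for this example.)
add_host() (
  file="$1"; ip="$2"; name="$3"
  touch "$file"
  # Look for NAME as a whole field on any non-comment line.
  if ! awk -v n="$name" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
)

# Demo against a scratch file; point it at /etc/hosts on a real node.
hosts_file="$(mktemp)"
add_host "$hosts_file" 192.168.42.140 node1
add_host "$hosts_file" 192.168.42.141 node2
add_host "$hosts_file" 192.168.42.142 node3
add_host "$hosts_file" 192.168.42.140 node1   # repeated call adds nothing
cat "$hosts_file"
```

The repeated call for node1 leaves the file at three lines, which is the property that makes the step safe to re-run.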

Configure time synchronization

echo "*/30 * * * * root /usr/sbin/ntpdate time1.aliyun.com" >> /etc/crontab

Configure passwordless SSH login

root@node1:~# ssh-keygen

root@node1:~# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

root@node1:~# vi ~/.ssh/config

Add:

Host *
StrictHostKeyChecking no

# Restrict the file permissions

root@node1:~# chmod 600 ~/.ssh/config

# Copy the keys to the root user's home directory on the other hosts

root@node1:~# scp -r /root/.ssh node2:/root/

root@node1:~# scp -r /root/.ssh node3:/root/

# Test

root@node1:~# ssh node1

root@node1:~# ssh node2

root@node1:~# ssh node3

Kernel parameter tuning

root@node1:~# vi /etc/sysctl.conf

Add:

fs.file-max = 10000000000
fs.nr_open = 1000000000

root@node1:~# vi /etc/security/limits.conf

Add:

* soft nproc 102400
* hard nproc 104800
* soft nofile 102400
* hard nofile 104800
root soft nproc 102400
root hard nproc 104800
root soft nofile 102400
root hard nofile 104800

# Copy the two modified files to the other nodes

root@node1:~# scp /etc/sysctl.conf node2:/etc/

root@node1:~# scp /etc/sysctl.conf node3:/etc/

root@node1:~# scp /etc/security/limits.conf node2:/etc/security/

root@node1:~# scp /etc/security/limits.conf node3:/etc/security/

# Run the following on each of the three VMs to apply the kernel parameters, then reboot

root@node1:~# sysctl -p

root@node1:~# reboot
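Copying files node by node is easy to get wrong when the list of targets is typed by hand. The sketch below loops over the target nodes instead. It assumes the commands are issued from node1, so the targets are node2 and node3. `push_file`, `TARGETS`, and `DRY_RUN` are names invented for this example, and by default the function only prints the `scp` commands it would run.

```shell
# Nodes that should receive the files (every node except the current one).
TARGETS="node2 node3"

# push_file PATH: copy PATH to the same location on every target node.
# With DRY_RUN=1 (the default here) the scp commands are printed, not run.
push_file() (
  file="$1"
  for node in $TARGETS; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "scp $file $node:$file"
    else
      scp "$file" "$node:$file"
    fi
  done
)

push_file /etc/sysctl.conf
push_file /etc/security/limits.conf
```

Setting `DRY_RUN=0` before calling `push_file` performs the copies for real.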

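2. Install the Ceph packages (run on all three hosts; every node must run the same Ceph version)

root@node1:~# wget -q -O- 'https://download.ceph.com/keys/release.asc' | sudo apt-key add -

root@node1:~# echo deb https://download.ceph.com/debian-pacific/ $(lsb_release -sc) main | sudo tee /etc/apt/sources.list.d/ceph.list

# Edit the list file so that it points at the Tsinghua mirror

root@node1:~# vi /etc/apt/sources.list.d/ceph.list

deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main

root@node1:~# cp /etc/apt/trusted.gpg /etc/apt/trusted.gpg.d

root@node1:~# apt update

root@node1:~# apt -y install ceph

root@node1:~# ceph -v

ceph version 17.2.6 (d7ff0d10654d2280e08f1ab989c7cdf3064446a5) quincy (stable)

Note: the repository lines above name pacific, while the installed version reported here is quincy 17.2.6. Check which repository apt actually used, and make sure it is the same on all nodes.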

3. Create and configure the mon

# Generate a unique ID for the cluster

root@node1:~# uuidgen

0f342ec2-6119-4261-b65a-0833732b12cb

Create the configuration file

root@node1:~# vi /etc/ceph/ceph.conf

[global]
fsid = 0f342ec2-6119-4261-b65a-0833732b12cb
mon_initial_members = node1
mon host = 192.168.42.140
public network = 192.168.42.0/24
cluster network = 172.16.1.0/24
auth cluster required = cephx
auth service required = cephx
auth client required = cephx
osd journal size = 1024
osd pool default size = 3
osd pool default min size = 2
osd pool default pg num = 16
osd pool default pgp num = 16
osd crush chooseleaf type = 1
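The `fsid` in this file must be exactly the value printed by `uuidgen`, and the same value is needed again later by `monmaptool`. One way to avoid copy-and-paste drift is to generate the file from variables. This is a minimal sketch: `render_ceph_conf` is a hypothetical helper, and the settings it emits are the ones from the configuration above.

```shell
# render_ceph_conf FSID MON_NAME MON_IP PUBLIC_NET CLUSTER_NET
# Prints a [global] section with the same settings as the file above.
# (render_ceph_conf is a hypothetical helper written for this example.)
render_ceph_conf() (
  fsid="$1"; mon_name="$2"; mon_ip="$3"; public_net="$4"; cluster_net="$5"
  cat <<EOF
[global]
fsid = $fsid
mon_initial_members = $mon_name
mon host = $mon_ip
public network = $public_net
cluster network = $cluster_net
auth cluster required = cephx
auth service required = cephx
auth client required = cephx
osd journal size = 1024
osd pool default size = 3
osd pool default min size = 2
osd pool default pg num = 16
osd pool default pgp num = 16
osd crush chooseleaf type = 1
EOF
)

# Demo with the values used in this walkthrough; output goes to stdout.
render_ceph_conf 0f342ec2-6119-4261-b65a-0833732b12cb node1 \
  192.168.42.140 192.168.42.0/24 172.16.1.0/24
```

On a real node the output would be redirected to /etc/ceph/ceph.conf, with the first argument taken from a shell variable that also feeds the later `monmaptool --fsid` call.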

# Generate a mon keyring for the cluster

root@node1:~# ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'

creating /tmp/ceph.mon.keyring

# Generate the administrator keyring (client.admin) for the cluster

root@node1:~# ceph-authtool --create-keyring /etc/ceph/ceph.client.admin.keyring --gen-key -n client.admin --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *' --cap mgr 'allow *'

# Generate a bootstrap-osd keyring for the cluster

root@node1:~# ceph-authtool --create-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring --gen-key -n client.bootstrap-osd --cap mon 'profile bootstrap-osd' --cap mgr 'allow r'

# Import the generated keyrings into the mon keyring

root@node1:~# ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring

root@node1:~# ceph-authtool /tmp/ceph.mon.keyring --import-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring

# Change the ownership

root@node1:~# sudo chown ceph:ceph /tmp/ceph.mon.keyring

# Generate the monitor map and save it to /tmp/monmap

root@node1:~# monmaptool --create --add node1 192.168.42.140 --fsid 0f342ec2-6119-4261-b65a-0833732b12cb /tmp/monmap

# Create the mon data directory for this node

root@node1:~# sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node1

# Initialize the mon

root@node1:~# sudo -u ceph ceph-mon --mkfs -i node1 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring

# Start the service and check its status

root@node1:~# sudo systemctl start ceph-mon@node1

root@node1:~# sudo systemctl enable ceph-mon@node1

root@node1:~# sudo systemctl status ceph-mon@node1

#查看集群状态

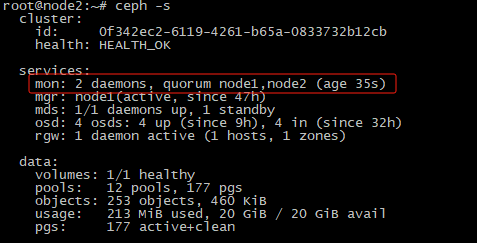

root@node1:~# ceph -s root@node1:~# ceph health detail 消除告警: root@node1:~# ceph config set mon auth_allow_insecure_global_id_reclaim false #禁用不安全模式 root@node1:~# ceph mon enable-msgr2

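4. ceph-mon high availability; the number of mon nodes should be odd (1, 3, 5, 7, ...)

ceph-mon@node2:

# Edit the configuration file to list the new mons. If only node1 is listed, ceph commands stop working once node1 goes down.

root@node1:~# vim /etc/ceph/ceph.conf

mon_initial_members = node1,node2,node3
mon host = 192.168.42.140,192.168.42.141,192.168.42.142

root@node1:~# scp /etc/ceph/ceph.conf node2:/etc/ceph/

# The admin keyring is needed on node2 so that the ceph commands below can authenticate

root@node1:~# scp /etc/ceph/ceph.client.admin.keyring node2:/etc/ceph/

root@node2:~# sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node2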

# Fetch the monitor keyring into a temporary directory

root@node2:~# ceph auth get mon. -o /tmp/ceph.mon.keyring

# Fetch the monitor map

root@node2:~# ceph mon getmap -o /tmp/ceph.mon.map

got monmap epoch 2

root@node2:~# chown ceph:ceph /tmp/ceph.mon.keyring

# Initialize the mon

root@node2:~# sudo -u ceph ceph-mon --mkfs -i node2 --monmap /tmp/ceph.mon.map --keyring /tmp/ceph.mon.keyring

# Start the service; it joins the cluster automatically

root@node2:~# systemctl start ceph-mon@node2

root@node2:~# systemctl enable ceph-mon@node2

root@node2:~# systemctl status ceph-mon@node2

ceph-mon@node3:

root@node1:~# scp /etc/ceph/ceph.conf node3:/etc/ceph/

root@node1:~# scp /etc/ceph/ceph.client.admin.keyring node3:/etc/ceph/

root@node3:~# sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node3

root@node3:~# ceph auth get mon. -o /tmp/ceph.mon.keyring

root@node3:~# ceph mon getmap -o /tmp/ceph.mon.map

got monmap epoch 3

root@node3:~# chown ceph:ceph /tmp/ceph.mon.keyring

root@node3:~# sudo -u ceph ceph-mon --mkfs -i node3 --monmap /tmp/ceph.mon.map --keyring /tmp/ceph.mon.keyring

root@node3:~# systemctl enable ceph-mon@node3

root@node3:~# systemctl start ceph-mon@node3

root@node3:~# systemctl status ceph-mon@node3

Test high availability:

root@node1:~# systemctl stop ceph-mon@node1
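The reason for an odd monitor count is that monitors need a strict majority to form a quorum. The arithmetic can be sketched as below. `quorum_size` and `tolerated_failures` are names made up for this illustration.

```shell
# Monitors needed for quorum: a strict majority of the monitor count.
quorum_size() { echo $(( $1 / 2 + 1 )); }

# Monitors that can be down while the remainder still forms a quorum.
tolerated_failures() { echo $(( ($1 - 1) / 2 )); }

for n in 1 2 3 4 5; do
  echo "mons=$n quorum=$(quorum_size "$n") can_lose=$(tolerated_failures "$n")"
done
```

With three monitors the quorum is two, so stopping ceph-mon on node1 leaves the cluster reachable. Going from three to four monitors raises the quorum to three without allowing a second failure, which is why even counts add no resilience.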

4. Add the mgr

root@node1:~# ceph auth get-or-create mgr.node1 mon 'allow profile mgr' osd 'allow *' mds 'allow *'

[mgr.node1]
key = AQC4TYBlJROvDBAAxPV1uK8dEilotSub/whl5Q==

# Create the mgr data directory

root@node1:~# sudo -u ceph mkdir /var/lib/ceph/mgr/ceph-node1/

# Export the mgr keyring into the mgr data directory

root@node1:~# ceph auth get mgr.node1 -o /var/lib/ceph/mgr/ceph-node1/keyring

# Start the service

root@node1:~# systemctl start ceph-mgr@node1

root@node1:~# systemctl enable ceph-mgr@node1

root@node1:~# systemctl status ceph-mgr@node1

# Check the cluster to verify

root@node1:~# ceph -s

5. Add OSDs

# Copy the bootstrap-osd keyring from the initial mon node to the same directory on the other nodes

root@node1:~# scp /var/lib/ceph/bootstrap-osd/ceph.keyring node2:/var/lib/ceph/bootstrap-osd/

root@node1:~# scp /var/lib/ceph/bootstrap-osd/ceph.keyring node3:/var/lib/ceph/bootstrap-osd/

root@node1:~# scp /etc/ceph/ceph.conf node2:/etc/ceph/

root@node1:~# scp /etc/ceph/ceph.conf node3:/etc/ceph/

# Add an OSD on node1

root@node1:~# ceph-volume lvm create --data /dev/nvme0n1

root@node1:~# systemctl status ceph-osd@0 # no manual start needed

# Add an OSD on node2

root@node2:~# ceph-volume lvm create --data /dev/nvme0n1

root@node2:~# systemctl status ceph-osd@1

# Add an OSD on node3

root@node3:~# ceph-volume lvm create --data /dev/nvme0n1

root@node3:~# systemctl status ceph-osd@2

# Show which OSDs live on which host

root@node1:~# ceph osd tree

# Show the PG distribution of a pool named mypool (this assumes such a pool has already been created)

root@node1:~# ceph pg ls-by-pool mypool

root@node1:~# ceph pg ls-by-pool mypool | awk '{print $1,$2,$15}'

In practice Ceph is mainly used to provide block storage, and at this point the basic installation is ready.

6. Enable the dashboard (optional)

root@node1:~# apt install ceph-mgr-dashboard

root@node1:~# ceph mgr module enable dashboard

root@node1:~# ceph mgr module ls | grep board

# Create and install a self-signed certificate, or disable SSL (ceph config set mgr mgr/dashboard/ssl false)

root@node1:~# ceph dashboard create-self-signed-cert

root@node1:~# openssl req -new -nodes -x509 -subj "/O=IT/CN=ceph-mgr-dashboard" -days 3650 -keyout dashboard.key -out dashboard.crt -extensions v3_ca

root@node1:~# ceph dashboard set-ssl-certificate -i dashboard.crt

root@node1:~# ceph dashboard set-ssl-certificate-key -i dashboard.key

root@node1:~# ceph config set mgr mgr/dashboard/server_addr 192.168.42.140

root@node1:~# ceph config set mgr mgr/dashboard/server_port 80

root@node1:~# ceph config set mgr mgr/dashboard/ssl_server_port 8443

# Set the password and view the configuration

root@node1:~# echo '123456' >> /root/cephpasswd

root@node1:~# ceph dashboard ac-user-create admin -i /root/cephpasswd administrator

root@node1:~# ceph config dump
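The password '123456' is only a placeholder, and appending with `>>` keeps whatever the file held before. A hedged alternative is to write a random password into a file that only its owner can read. `make_password_file` is a hypothetical helper, and the sketch assumes `head -c` and `base64` from coreutils are available.

```shell
# make_password_file PATH: overwrite PATH with a random password and make it
# readable by the owner only.
# (make_password_file is a hypothetical helper written for this example.)
make_password_file() (
  out="$1"
  umask 077
  # 18 random bytes encode to exactly 24 base64 characters.
  head -c 18 /dev/urandom | base64 > "$out"
  # Tighten permissions in case the file already existed with looser ones.
  chmod 600 "$out"
)

# Demo against a scratch file; the walkthrough uses /root/cephpasswd.
pw_file="$(mktemp)"
make_password_file "$pw_file"
ls -l "$pw_file"
```

The resulting file can be passed to `ceph dashboard ac-user-create admin -i <file> administrator` exactly as in the command above.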

Restart the mgr service

root@node1:~# systemctl restart ceph-mgr@node1

# Check the port the ceph-mgr dashboard is listening on

root@node1:/var/log/ceph# ceph mgr services

{ "dashboard": "https://192.168.42.140:8443/" }

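7. Block storage

# Create an RBD pool named myrbd with 16 PGs

root@node1:~# ceph osd pool create myrbd 16

pool 'myrbd' created

root@node1:~# ceph osd lspools

1 .mgr
2 myrbd

# Initialize the pool

root@node1:~# rbd pool init myrbd

Note: an RBD pool cannot be used as a block device directly. An image must first be created in the pool, and the image is then used as the block device. The rbd command creates, lists, and deletes images, and also handles management operations such as cloning images, creating snapshots, rolling an image back to a snapshot, and listing snapshots.

# Create a 1 GB disk named disk01 with only the layering feature. If no feature is specified, all default features are enabled, and those require a newer kernel.

root@node1:~# rbd create disk01 --pool myrbd --size 1G --image-feature layering

root@node1:~# rbd ls myrbd

disk01

root@node1:~# rbd --pool myrbd --image disk01 info

Mounting on a client machine.

root@node2:~# apt -y install ceph-common # the version must match the cluster

# Copy the authentication files from the deployment server. The client then accesses the cluster with admin privileges, which is not recommended in production.

root@node1:~# scp /etc/ceph/ceph.conf node2:/etc/ceph/

root@node1:~# scp /etc/ceph/ceph.client.admin.keyring node2:/etc/ceph/

# Map the image on the client and check the mapping

root@node2:~# rbd --pool myrbd map disk01 # mapping at boot must be configured separately

/dev/rbd0

root@node2:~# rbd showmapped

id pool namespace image snap device
0 myrbd disk01 - /dev/rbd0

# Mount and use

root@node2:~# fdisk -l

root@node2:~# mkfs.xfs /dev/rbd0

root@node2:~# mkdir /data02

root@node2:~# mount /dev/rbd0 /data02 # mounting at boot must be configured separately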
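The comments above say that mapping and mounting must be set up to happen at boot, but no configuration is shown. One common approach is the `rbdmap` service that ships with the Ceph client tools: it reads /etc/ceph/rbdmap, maps each listed image, and mounts matching `noauto` entries from /etc/fstab. The sketch below only prints the two lines that would be needed. `rbd_boot_entries` is a hypothetical helper, and it assumes the keyring follows the /etc/ceph/ceph.client.<id>.keyring naming used earlier.

```shell
# rbd_boot_entries POOL IMAGE ID MOUNTPOINT FSTYPE
# Prints one line for /etc/ceph/rbdmap and one for /etc/fstab.
# (rbd_boot_entries is a hypothetical helper written for this example.)
rbd_boot_entries() (
  pool="$1"; image="$2"; id="$3"; mountpoint="$4"; fstype="$5"
  echo "# append to /etc/ceph/rbdmap"
  echo "$pool/$image id=$id,keyring=/etc/ceph/ceph.client.$id.keyring"
  echo "# append to /etc/fstab"
  echo "/dev/rbd/$pool/$image $mountpoint $fstype noauto 0 0"
)

rbd_boot_entries myrbd disk01 admin /data02 xfs
```

After adding the two lines, `systemctl enable rbdmap.service` would turn the mapping on at boot, assuming that unit is installed on the client. It is worth testing with a reboot before relying on it.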

# Delete the image

root@node2:~# umount /data02

# Unmap the device

root@node2:~# rbd --pool myrbd unmap disk01

root@node2:~# rbd showmapped

# Remove the image

root@node2:~# rbd --pool myrbd remove disk01

root@node2:~# rbd ls myrbd

Create a dedicated credential for RBD use

# Create the user client.rbd01

root@node1:~# ceph auth get-or-create client.rbd01

# Grant capabilities to the user

root@node1:~# ceph auth caps client.rbd01 mon 'allow r' osd 'allow rwx pool=myrbd'

# Fetch the user's key and capabilities and export them to a keyring file

root@node1:~# ceph auth get client.rbd01 -o /etc/ceph/client.rbd01.keyring

On the client:

root@node3:~# apt -y install ceph-common # the version must match the cluster

root@node1:~# scp /etc/ceph/client.rbd01.keyring node3:/etc/ceph/

root@node1:~# scp /etc/ceph/ceph.conf node3:/etc/ceph/

root@node3:~# vi /etc/ceph/ceph.conf

[global]
fsid = 0f342ec2-6119-4261-b65a-0833732b12cb
mon_initial_members = node1
mon host = 192.168.42.140
public network = 192.168.42.0/24
cluster network = 172.16.1.0/24
auth cluster required = cephx
auth service required = cephx
auth client required = cephx
osd journal size = 1024
osd pool default size = 3
osd pool default min size = 2
osd pool default pg num = 16
osd pool default pgp num = 16
osd crush chooseleaf type = 1
[client.rbd01]
keyring=/etc/ceph/client.rbd01.keyring

Verify:

root@node3:~# ceph -s --id rbd01

root@node3:~# ceph osd lspools --id rbd01

1 .mgr
2 myrbd

root@node3:~# rbd create disk01 --pool myrbd --size 1G --image-feature layering --id rbd01

root@node3:~# rbd ls --pool myrbd --id rbd01

disk01

root@node3:~# rbd --pool myrbd map disk01 --id rbd01

root@node3:~# ll /dev/rbd0

brw-rw---- 1 root disk 252, 0 Dec 19 11:27 /dev/rbd0

Next, create a file system on the device and mount it as before.

8. Object storage

Add the object storage service (radosgw) to the cluster:

root@node1:~# apt install radosgw -y

root@node1:~# vi /etc/ceph/ceph.conf

# Add the following configuration; the default port is 7480

[client.rgw.node1]
host = node1
rgw frontends = "beast port=7480"
rgw dns name = node1

# Export the keyring to the rgw.node1 data directory

root@node1:~# sudo -u ceph mkdir -p /var/lib/ceph/radosgw/ceph-rgw.node1

root@node1:~# ceph auth get-or-create client.rgw.node1 osd 'allow rwx' mon 'allow rw' -o /var/lib/ceph/radosgw/ceph-rgw.node1/keyring

# Start rgw. It should automatically create the default pools .rgw.root, default.rgw.log, default.rgw.control, default.rgw.meta, and default.rgw.buckets.index, all tagged with the rgw application.

root@node1:~# systemctl enable --now ceph-radosgw@rgw.node1

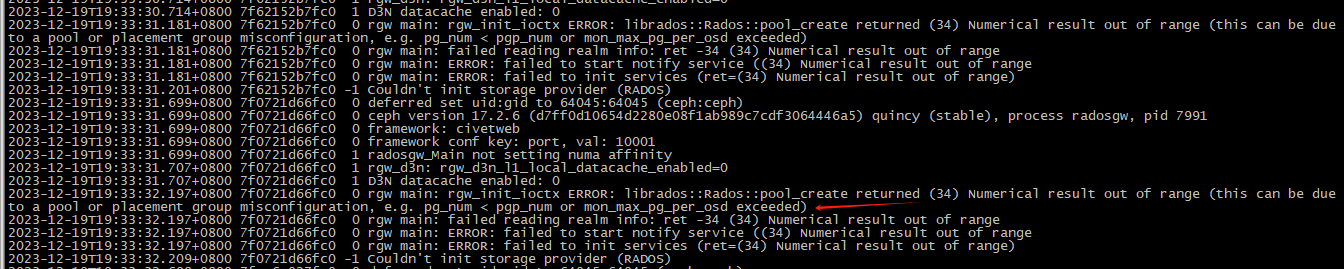

Error:

Attempted fix:

root@node1:~# vi /etc/ceph/ceph.conf

Add or modify:

[global]
osd pool default pg num = 16
osd pool default pgp num = 16
mon_max_pg_per_osd = 1000

root@node1:~# systemctl restart ceph-mon@node1

root@node1:~# systemctl status ceph-radosgw@rgw.node1

This did not resolve the problem.

The fix that actually worked:

# Manually create the pools that rgw needs

# ceph osd pool create .rgw.root 16 16

# ceph osd pool create default.rgw.control 16 16

# ceph osd pool create default.rgw.meta 16 16

# ceph osd pool create default.rgw.log 16 16

# ceph osd pool create default.rgw.buckets.index 16 16

# ceph osd pool create default.rgw.buckets.data 16 16

# ceph osd pool create default.rgw.buckets.non-ec 16 16

Tag the pools with the rgw application

# ceph osd pool application enable .rgw.root rgw

# ceph osd pool application enable default.rgw.control rgw

# ceph osd pool application enable default.rgw.meta rgw

# ceph osd pool application enable default.rgw.log rgw

# ceph osd pool application enable default.rgw.buckets.index rgw

# ceph osd pool application enable default.rgw.buckets.data rgw

# ceph osd pool application enable default.rgw.buckets.non-ec rgw
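The text does not reproduce the actual error, but the attempted fix touches `mon_max_pg_per_osd`, which suggests a per-OSD PG limit may have been involved. Under that assumption, a rough estimate of the load these pools add can be worked out: each pool contributes pg_num times the replica count, spread across the OSDs. `pgs_per_osd` is a hypothetical helper, and the estimate ignores uneven placement and any autoscaler adjustments.

```shell
# pgs_per_osd OSD_COUNT REPLICA_SIZE PG_NUM...
# Average number of PG replicas each OSD carries for the listed pools.
# (pgs_per_osd is a hypothetical helper written for this example.)
pgs_per_osd() (
  osds="$1"; size="$2"; shift 2
  total=0
  for pg_num in "$@"; do
    total=$(( total + pg_num * size ))
  done
  echo $(( total / osds ))
)

# Seven rgw pools of 16 PGs each, 3 replicas, 3 OSDs.
pgs_per_osd 3 3 16 16 16 16 16 16 16   # prints 112
```

The result can be compared against the configured limit, which `ceph config get mon mon_max_pg_per_osd` reports. Pools created elsewhere in this walkthrough (.mgr, myrbd, and the CephFS pools) add to the same total.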

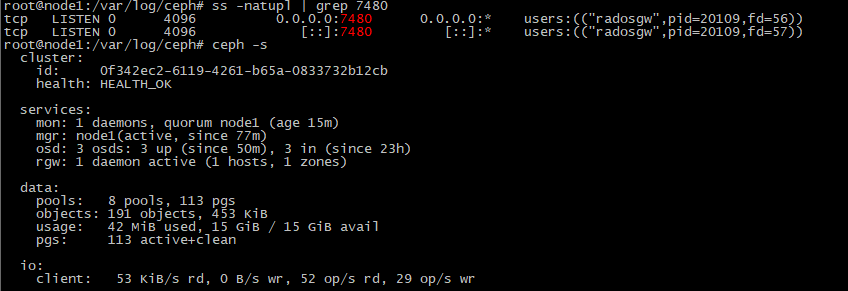

# Restart rgw

root@node1:~# systemctl restart ceph-radosgw@rgw.node1

root@node1:~# systemctl enable ceph-radosgw@rgw.node1

root@node1:~# systemctl status ceph-radosgw@rgw.node1

# On the RGW node, add an administrator account for the object storage gateway

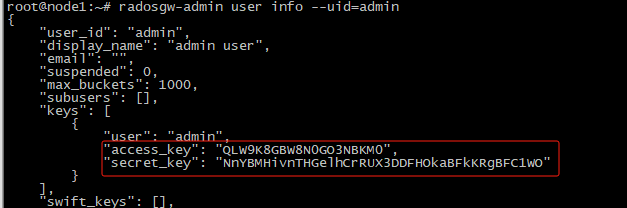

root@node1:~# radosgw-admin user create --uid="admin" --display-name="admin user" --system

# Query user information

root@node1:~# radosgw-admin user list

root@node1:~# radosgw-admin user info --uid=admin

# Copy the access_key and secret_key from the admin account information above into two files named rgw_access_key and rgw_secret_key, respectively
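Copying the two keys by hand is error-prone, so the step can be scripted. The sketch below pulls a string field out of pretty-printed JSON with `sed`. `json_string_field` is a hypothetical helper; it assumes one `"name": "value"` pair per line, as `radosgw-admin` prints, and it undoes the `\/` escaping that JSON output may apply to slashes in a secret key. The sample document and its key values are invented for the demo.

```shell
# json_string_field NAME: read JSON on stdin and print the first string
# value stored under "NAME". Assumes one "name": "value" pair per line.
# (json_string_field is a hypothetical helper written for this example.)
json_string_field() {
  sed -n 's/.*"'"$1"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' |
    head -n 1 |
    sed 's#\\/#/#g'   # turn JSON-escaped \/ back into /
}

# Demo on an invented sample shaped like the "keys" part of the user info.
sample='{
    "keys": [
        {
            "user": "admin",
            "access_key": "EXAMPLEACCESSKEY",
            "secret_key": "example\/secret"
        }
    ]
}'
printf '%s\n' "$sample" | json_string_field access_key
printf '%s\n' "$sample" | json_string_field secret_key
```

On the RGW node the same function would be fed from `radosgw-admin user info --uid=admin`, with the output redirected to rgw_access_key and rgw_secret_key.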

# Integrate radosgw with the dashboard

root@node1:~/ceph# ceph dashboard set-rgw-api-ssl-verify false

root@node1:~/ceph# ceph dashboard set-rgw-api-access-key -i rgw_access_key

root@node1:~/ceph# ceph dashboard set-rgw-api-secret-key -i rgw_secret_key

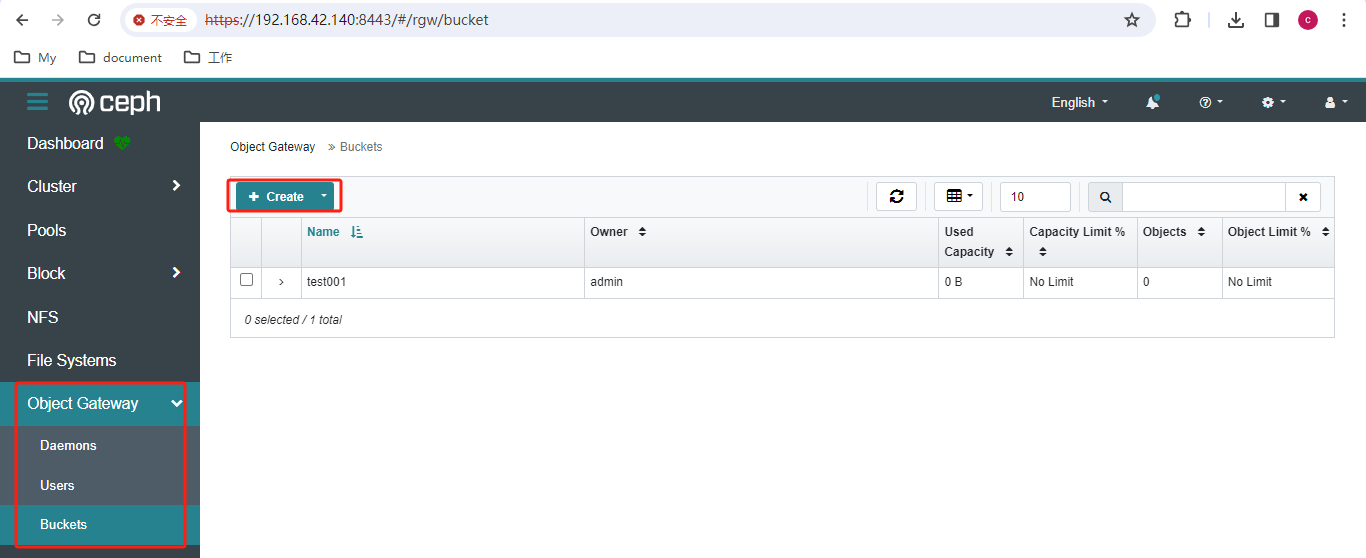

Open the dashboard at https://192.168.42.140:8443/ , where users, buckets, and so on can be created.

9. File storage

# Add the Ceph MDS service to the cluster

root@node2:~# apt install -y ceph-mds

# Create the data directory and the key, then export the mds key

root@node2:~# sudo -u ceph mkdir -p /var/lib/ceph/mds/ceph-node2

root@node2:~# ceph auth get-or-create mds.node2 osd "allow rwx" mds "allow" mon "allow profile mds"

root@node2:~# ceph auth get mds.node2 -o /var/lib/ceph/mds/ceph-node2/keyring

# Start the service

root@node2:~# systemctl start ceph-mds@node2

root@node2:~# systemctl enable ceph-mds@node2

root@node2:~# systemctl status ceph-mds@node2

root@node1:~# ceph mds stat

1 up:standby

# Create the metadata and data pools that CephFS needs

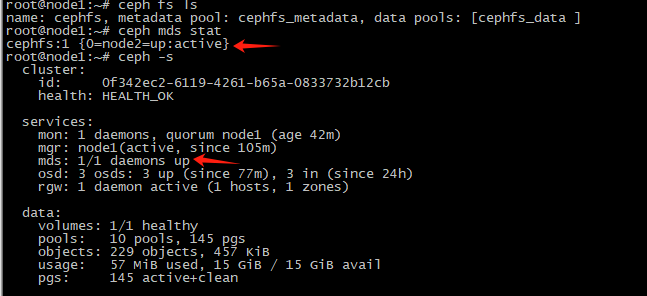

root@node1:~# ceph osd pool create cephfs_data 16

root@node1:~# ceph osd pool create cephfs_metadata 16

root@node1:~# ceph fs new cephfs cephfs_metadata cephfs_data # cephfs is the file system name

root@node1:~# ceph fs ls

name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

root@node1:~# ceph mds stat

root@node1:~# ceph fs status cephfs # show the status of the file system named cephfs

Clients mount CephFS by pointing at port 6789 on a mon node.

root@node3:~# apt install ceph-common -y

Copy ceph.conf and the admin key from the mon node to the client (in practice, a dedicated user and key should be created instead)

root@node3:~# scp 192.168.42.140:/etc/ceph/ceph.client.admin.keyring /etc/ceph/keyring

root@node3:~# mount -t ceph 192.168.42.140:6789:/ /mnt -o name=admin

Mounting with a dedicated user and key:

root@node1:~# ceph fs authorize cephfs client.cephfs01 / rw

[client.cephfs01]
key = AQDLq4FlgiIVCxAAdb0JifnQNqRH5e5R4ZeT+g==

root@node1:~# ceph auth get-or-create client.cephfs01 -o /etc/ceph/client.cephfs.keyring

Copy client.cephfs.keyring to the client, renaming it to /etc/ceph/keyring

root@node1:~# scp /etc/ceph/client.cephfs.keyring node3:/etc/ceph/keyring

root@node3:~# mount -t ceph 192.168.42.140:6789:/ /mnt -o name=cephfs01
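The mount commands above name a single monitor, so the mount cannot be established while node1 is down. The kernel client accepts a comma-separated monitor list in the source argument, and with three mons deployed it makes sense to list them all. A small sketch that builds that string follows; `cephfs_source` is a name invented for this example.

```shell
# cephfs_source PATH MON_IP...
# Build the "ip:6789,ip:6789,...:PATH" source string for mount -t ceph.
# (cephfs_source is a hypothetical helper written for this example.)
cephfs_source() (
  path="$1"; shift
  src=""
  for mon in "$@"; do
    # Prefix a comma only when src already holds an earlier monitor.
    src="${src:+$src,}$mon:6789"
  done
  echo "$src:$path"
)

cephfs_source / 192.168.42.140 192.168.42.141 192.168.42.142
# prints 192.168.42.140:6789,192.168.42.141:6789,192.168.42.142:6789:/
```

The printed string replaces `192.168.42.140:6789:/` in the mount command, for example `mount -t ceph "$(cephfs_source / 192.168.42.140 192.168.42.141 192.168.42.142)" /mnt -o name=cephfs01`.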
