Kafka + ELK (Elasticsearch, Logstash, Kibana)

https://www.jianshu.com/p/0edcc3addf3f

https://blog.csdn.net/u010622525/article/details/100066743

kafka->logstash->elasticsearch->kibana

1. Pull the images

docker pull wurstmeister/zookeeper

docker pull wurstmeister/kafka

2. Define docker-compose.yml

    version: '3'
    services:
      zookeeper:
        image: wurstmeister/zookeeper
        ports:
          - "2181:2181"
      kafka:
        image: wurstmeister/kafka
        depends_on: [ zookeeper ]
        ports:
          - "9092:9092"
        environment:
          KAFKA_ADVERTISED_HOST_NAME: 192.168.220.150
          KAFKA_CREATE_TOPICS: "test:1:1"
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
        volumes:
          - /data/product/zj_bigdata/data/kafka/docker.sock:/var/run/docker.sock
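KAFKA_ADVERTISED_HOST_NAME is the address the broker hands back to clients. It therefore has to be an address that producers and consumers can actually reach, such as the host IP 192.168.220.150 used here, and not localhost. One way to avoid hard-coding it is to write `KAFKA_ADVERTISED_HOST_NAME: ${HOST_IP}` in the compose file, because docker-compose substitutes variables from the shell environment. The sketch below makes two assumptions: that you make that change, and that the host is Linux with `hostname -I` available. The function name `detect_host_ip` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the address to advertise to Kafka clients.
# An explicit HOST_IP in the environment wins over auto-detection, which
# matters on hosts with several interfaces (docker0, VPNs, ...).
detect_host_ip() {
  if [ -n "${HOST_IP:-}" ]; then
    printf '%s\n' "$HOST_IP"
    return 0
  fi
  # `hostname -I` prints all host addresses separated by spaces; keep the first.
  hostname -I | awk '{print $1}'
}
```

Usage: `HOST_IP=$(detect_host_ip) docker-compose up -d`. If the first address reported is not the one clients use, set HOST_IP explicitly instead.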

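In the directory that contains docker-compose.yml, build the services. Both services use prebuilt images, so Compose has nothing to build and skips them: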

[root@VM_0_16_centos kafka]# docker-compose build

zookeeper uses an image, skipping

kafka uses an image, skipping

3. Start the services

[root@VM_0_16_centos kafka]# docker-compose up -d

Starting kafka_kafka_1 ... done

Starting kafka_zookeeper_1 ... done
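`docker-compose up -d` returns as soon as the containers have started, which is not the same as ZooKeeper and Kafka being ready. Scripted tests run right afterwards can therefore fail. The sketch below does both start-up checks from the host. It compares expected names against `docker ps --format '{{.Names}}'` and polls a TCP port through bash's `/dev/tcp` redirection, so it needs bash rather than sh. Both function names are hypothetical. An open port only shows that the broker is listening, not that it has finished registering with ZooKeeper.

```shell
#!/usr/bin/env bash
# Hypothetical start-up checks (bash-only: uses /dev/tcp and here-strings).

# require_containers NAME...: succeed only if every named container is running.
require_containers() {
  local running name missing=0
  running=$(docker ps --format '{{.Names}}') || return 1
  for name in "$@"; do
    # -F literal, -x whole line: "kafka" must not match "kafka_kafka_1".
    if ! grep -qxF -- "$name" <<<"$running"; then
      echo "not running: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# wait_for_port HOST PORT [TIMEOUT_SECONDS]: poll until a TCP connection succeeds.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-60}
  local deadline=$((SECONDS + timeout))
  while (( SECONDS < deadline )); do
    # The redirection succeeds only if something accepts the connection;
    # the subshell closes fd 3 again immediately.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  echo "timed out waiting for $host:$port" >&2
  return 1
}
```

Usage: `require_containers kafka_kafka_1 kafka_zookeeper_1 && wait_for_port 192.168.220.150 9092 60 && echo "Kafka is listening"`.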

4. Test the setup

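Note the container names: Kafka is kafka_kafka_1 and ZooKeeper is kafka_zookeeper_1. docker-compose names containers as project_service_index, and the project name defaults to the name of the directory, which is kafka here.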

If docker-compose started correctly, docker ps now lists both containers. Enter the Kafka container:

docker exec -it kafka_kafka_1 bash

Create a topic (here named topic, with 4 partitions):

$KAFKA_HOME/bin/kafka-topics.sh --create --topic topic --partitions 4 --zookeeper kafka_zookeeper_1:2181 --replication-factor 1

Note that the argument after --zookeeper is the ZooKeeper container name, which resolves inside the Compose network.

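Describe a topic. The command below inspects test, which was created at startup by KAFKA_CREATE_TOPICS: "test:1:1" (1 partition, replication factor 1). Use --topic topic instead to inspect the topic created above: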

$KAFKA_HOME/bin/kafka-topics.sh --zookeeper kafka_zookeeper_1:2181 --describe --topic test
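The same topic commands can also be run from the host, without an interactive shell, by passing them through `docker exec`. The sketch below assumes the container names above, and `kafka_topics` and `create_topic` are hypothetical helpers. `$KAFKA_HOME` is deliberately single-quoted so that it expands inside the container, where the image defines it, rather than on the host. Also note that Kafka 3.0 removed `--zookeeper` from `kafka-topics.sh`; on newer brokers, pass `--bootstrap-server kafka_kafka_1:9092` instead.

```shell
#!/usr/bin/env bash
KAFKA_CONTAINER=${KAFKA_CONTAINER:-kafka_kafka_1}
ZK_CONNECT=${ZK_CONNECT:-kafka_zookeeper_1:2181}

# Run kafka-topics.sh inside the Kafka container with the ZooKeeper address filled in.
# bash -c '<script>' _ args...: "_" becomes $0 and the remaining args become "$@".
kafka_topics() {
  docker exec "$KAFKA_CONTAINER" \
    bash -c '"$KAFKA_HOME"/bin/kafka-topics.sh "$@"' _ \
    --zookeeper "$ZK_CONNECT" "$@"
}

# create_topic NAME [PARTITIONS]: replication factor 1, matching the single broker.
create_topic() {
  local topic=$1 partitions=${2:-1}
  kafka_topics --create --topic "$topic" --partitions "$partitions" --replication-factor 1
}
```

Usage: `create_topic topic 4`, `kafka_topics --describe --topic test`, or `kafka_topics --list`.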

Publish messages. Each line typed at the > prompt is sent as one message:

bash-4.4# $KAFKA_HOME/bin/kafka-console-producer.sh --topic=test --broker-list kafka_kafka_1:9092

>ni

>haha

Again, note the argument after --broker-list: it is the Kafka container name plus the broker port.

Consume the messages:

bash-4.4# $KAFKA_HOME/bin/kafka-console-consumer.sh --bootstrap-server kafka_kafka_1:9092 --from-beginning --topic test

ni

haha
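For scripted smoke tests, the interactive producer and the open-ended consumer are awkward to use. The console producer sends each line of stdin as one message, so messages can be piped in with `docker exec -i`. The consumer's `--max-messages` and `--timeout-ms` options make it exit on its own. The sketch below relies on both behaviours; the helper names are hypothetical, and the container names are assumed to be the defaults above.

```shell
#!/usr/bin/env bash
KAFKA_CONTAINER=${KAFKA_CONTAINER:-kafka_kafka_1}
BROKER=${BROKER:-kafka_kafka_1:9092}

# produce_messages TOPIC MSG...: send each argument as one message.
produce_messages() {
  local topic=$1; shift
  printf '%s\n' "$@" |
    docker exec -i "$KAFKA_CONTAINER" \
      bash -c '"$KAFKA_HOME"/bin/kafka-console-producer.sh "$@"' _ \
      --broker-list "$BROKER" --topic "$topic"
}

# consume_messages TOPIC COUNT: read up to COUNT messages from the beginning,
# stopping early if no message arrives for 10 seconds.
consume_messages() {
  local topic=$1 count=${2:-1}
  docker exec "$KAFKA_CONTAINER" \
    bash -c '"$KAFKA_HOME"/bin/kafka-console-consumer.sh "$@"' _ \
    --bootstrap-server "$BROKER" --topic "$topic" --from-beginning \
    --max-messages "$count" --timeout-ms 10000
}
```

Usage: `produce_messages test ni haha && consume_messages test 2`.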

5. Kafka cluster management UI

docker run -itd --name=kafka-manager -p 9000:9000 -e ZK_HOSTS="192.168.220.150:2181" sheepkiller/kafka-manager

Then open it in a browser:

http://192.168.220.150:9000/

The same steps in a later session, where the containers were named sde_kafka_1 and sde_zookeeper_1:

bash-4.4# $KAFKA_HOME/bin/kafka-topics.sh --create --topic tttt --partitions 4 --zookeeper sde_zookeeper_1:2181 --replication-factor 1

Created topic tttt.

bash-4.4# $KAFKA_HOME/bin/kafka-console-producer.sh --topic=tttt --broker-list sde_kafka_1:9092

>^C

bash-4.4# $KAFKA_HOME/bin/kafka-console-consumer.sh --bootstrap-server sde_kafka_1:9092 --from-beginning --topic=tttt

Configuration files for Elasticsearch and Kibana (in /home/sde/config):

    sde@sde:~$ pwd
    /home/sde
    sde@sde:~$ cat config/elasticsearch.yml
    cluster.name: "docker-cluster"
    network.host: 0.0.0.0
    xpack:
      ml.enabled: false
      monitoring.enabled: false
      security.enabled: false
      watcher.enabled: false
    sde@sde:~$ cat config/kibana.yml
    server.name: kibana
    server.host: "0"
    elasticsearch.url: "http://10.18.1.2:9200"
    xpack.monitoring.ui.container.elasticsearch.enabled: true
    i18n.locale: "zh-CN"

docker run -itd --name=kafka-manager -p 9000:9000 -e ZK_HOSTS="10.18.1.2:2181" sheepkiller/kafka-manager

sudo sysctl -w vm.max_map_count=262144
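vm.max_map_count is raised first because Elasticsearch enforces a minimum of 262144 when it binds to a non-loopback address such as network.host: 0.0.0.0. Below that value it refuses to start. `sysctl -w` does not survive a reboot, and the trailing comment shows one common way to persist the setting. The check itself is a sketch with a hypothetical function name. The `MAX_MAP_COUNT_FILE` override is there only so the check can be tested without root.

```shell
#!/usr/bin/env bash
# Hypothetical check: is vm.max_map_count at least what Elasticsearch requires?
MAX_MAP_COUNT_FILE=${MAX_MAP_COUNT_FILE:-/proc/sys/vm/max_map_count}
REQUIRED_MAX_MAP_COUNT=262144

max_map_count_ok() {
  local current
  current=$(cat "$MAX_MAP_COUNT_FILE") || return 1
  if [ "$current" -ge "$REQUIRED_MAX_MAP_COUNT" ]; then
    return 0
  fi
  echo "vm.max_map_count is $current, need >= $REQUIRED_MAX_MAP_COUNT" >&2
  return 1
}

# Persist the setting across reboots (run once, needs root):
#   echo 'vm.max_map_count=262144' | sudo tee /etc/sysctl.d/99-elasticsearch.conf
#   sudo sysctl --system
```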

docker run --rm -d -it -p 9200:9200 -p 9300:9300 -v "$PWD"/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml --name elasticsearch docker.elastic.co/elasticsearch/elasticsearch:6.8.2

docker run --rm -d -it -p 5601:5601 -v "$PWD"/config/kibana.yml:/usr/share/kibana/config/kibana.yml --name kibana docker.elastic.co/kibana/kibana:6.5.4

docker run --rm -d -it -v ~/logstash/pipeline:/usr/share/logstash/pipeline -v ~/logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml -v /home/sde/logstash/plugin:/plugin -p 5044:5044 -p 9600:9600 --privileged=true --name logstash docker.elastic.co/logstash/logstash:7.10.0 -f /usr/share/logstash/pipeline/logstash.conf

rlogger -> kafka -> ELK

http://10.18.1.2:9000/clusters/cluster1/topics

http://10.18.1.2:9200/

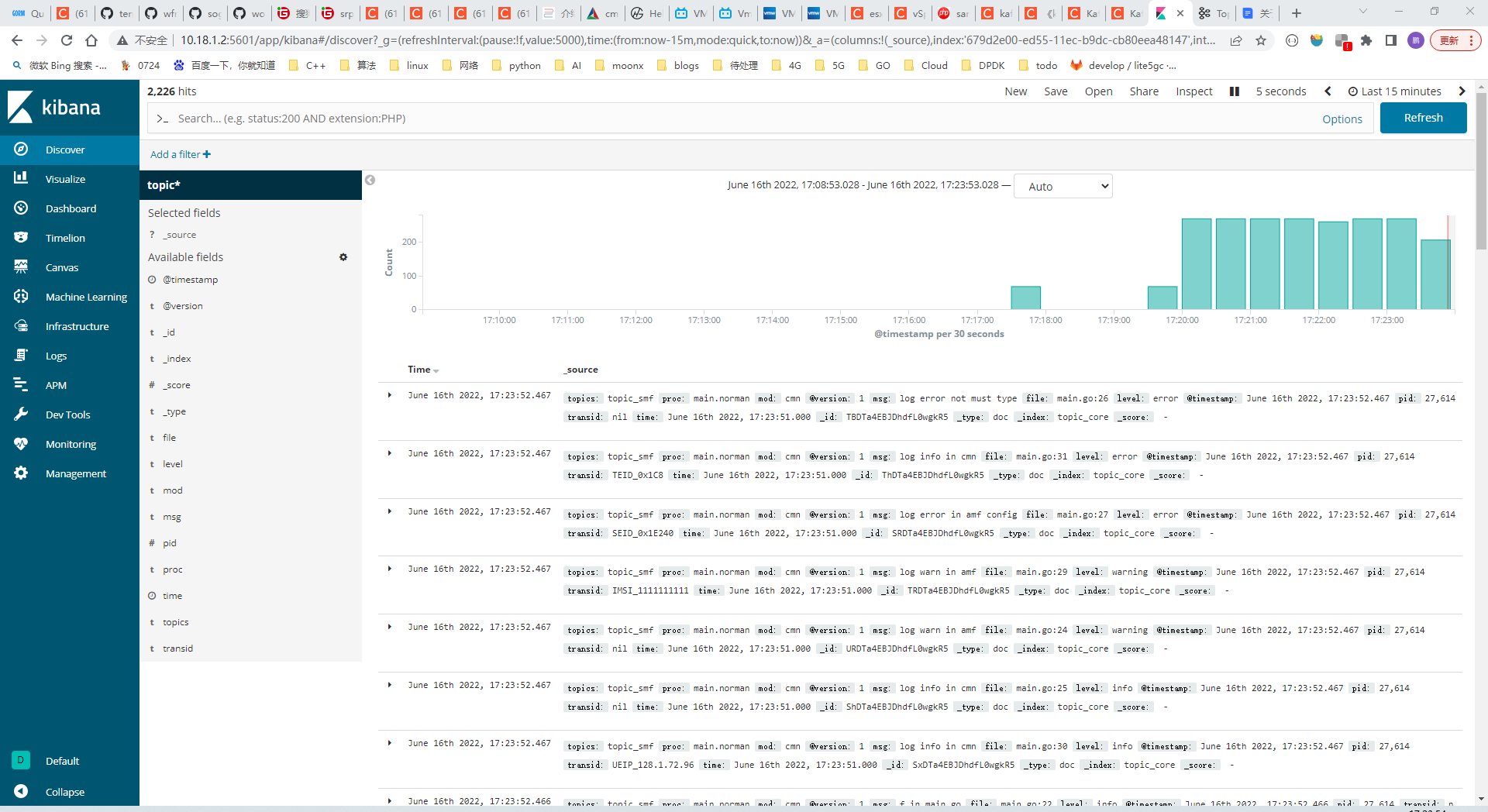

http://10.18.1.2:5601/
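The three web endpoints above can be probed in one go. The sketch below uses curl's `-w '%{http_code}'` to print only the status code; curl reports 000 when it cannot connect at all. It treats any 2xx or 3xx response as "up", since Kibana's root URL may answer with a redirect. The host 10.18.1.2 comes from this setup; the `ELK_HOST` variable and the function names are hypothetical.

```shell
#!/usr/bin/env bash
ELK_HOST=${ELK_HOST:-10.18.1.2}

# Print the HTTP status code for a URL ("000" if the connection failed).
http_status() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# Kafka Manager (9000), Elasticsearch (9200), Kibana (5601):
# print one line per endpoint and return non-zero if any is not 2xx/3xx.
check_endpoints() {
  local url code rc=0
  for url in "http://$ELK_HOST:9000/" "http://$ELK_HOST:9200/" "http://$ELK_HOST:5601/"; do
    code=$(http_status "$url") || true   # keep going when curl fails to connect
    case "$code" in
      2??|3??) echo "up    $code $url" ;;
      *)       echo "DOWN  $code $url"; rc=1 ;;
    esac
  done
  return "$rc"
}
```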

    sde@sde:~/kafka$ tree
    .
    ├── docker-compose.yml
    ├── elasticsearch
    │   └── elasticsearch.yml
    ├── kibana
    │   └── kibana.yml
    └── logstash
        ├── conf
        │   └── logstash.yml
        ├── pipeline
        │   ├── logstash.conf
        │   └── logstash.conf.bak
        └── plugin

    6 directories, 6 files

    sde@sde:~/kafka$ cat docker-compose.yml
    version: '3'
    services:
      zookeeper:
        image: wurstmeister/zookeeper
        ports:
          - "2181:2181"
        restart: always
      kafka:
        image: wurstmeister/kafka
        depends_on: [ zookeeper ]
        ports:
          - "9092:9092"
        restart: always
        environment:
          KAFKA_ADVERTISED_HOST_NAME: 10.18.1.2
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      kafka_manager:
        image: sheepkiller/kafka-manager
        depends_on: [ zookeeper ]
        ports:
          - "9000:9000"
        restart: always
        environment:
          ZK_HOSTS: zookeeper:2181
      logstash:
        image: docker.elastic.co/logstash/logstash:7.10.0
        depends_on:
          - elasticsearch
          - kafka
        ports:
          - "5044:5044"
          - "9600:9600"
        volumes:
          - ./logstash/pipeline:/usr/share/logstash/pipeline
          - ./logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml
          - ./logstash/plugin:/plugin
        privileged: true
        restart: always
        # command: logstash -f /usr/share/logstash/pipeline/logstash.conf
      elasticsearch:
        image: docker.elastic.co/elasticsearch/elasticsearch:6.8.2
        ports:
          - "9200:9200"
          - "9300:9300"
        restart: always
        volumes:
          # the official image reads its config from /usr/share/elasticsearch/config
          - ./elasticsearch/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      kibana:
        image: docker.elastic.co/kibana/kibana:6.5.4
        ports:
          - "5601:5601"
        restart: always
        volumes:
          - ./kibana/kibana.yml:/usr/share/kibana/config/kibana.yml

    sde@sde:~/kafka$ ls
    docker-compose.yml  elasticsearch  kibana  logstash
    sde@sde:~/kafka$ cat elasticsearch/elasticsearch.yml
    cluster.name: "docker-cluster"
    network.host: 0.0.0.0
    xpack:
      ml.enabled: false
      monitoring.enabled: false
      security.enabled: false
      watcher.enabled: false
    sde@sde:~/kafka$ cat kibana/kibana.yml
    server.name: kibana
    server.host: "0"
    elasticsearch.url: "http://10.18.1.2:9200"
    xpack.monitoring.ui.container.elasticsearch.enabled: true
    i18n.locale: "zh-CN"
    sde@sde:~/kafka$ cat logstash/conf/logstash.yml
    http.host: "0.0.0.0"
    #path.config: /home/sde/logstash/pipeline
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.hosts: http://10.18.1.2:9200
    sde@sde:~/kafka$ cat logstash/pipeline/logstash.conf
    input {
      kafka {
        bootstrap_servers => '10.18.1.2:9092'
        #topics => ["topic_amf", "topic_smf"]
        topics_pattern => "topic_.*"
        codec => json {}
      }
    }
    output {
      elasticsearch {
        hosts => ["http://10.18.1.2:9200"]
        index => "topic_2"
        sniffing => false
      }
      stdout { codec => rubydebug }
    }
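With this pipeline, Logstash subscribes to every topic whose name matches `topic_.*`, for example topic_amf and topic_smf from the commented-out `topics` line. It parses each message as JSON, so a message that is not valid JSON gets the `_jsonparsefailure` tag, and it writes everything into the single index topic_2. The sketch below is an end-to-end check that sends one JSON message and then looks at the index. The helper names, the sample JSON and the container name are assumptions. Compose would name the container kafka_kafka_1 from the ~/kafka directory, but the earlier session used sde_kafka_1, so adjust KAFKA_CONTAINER to match.

```shell
#!/usr/bin/env bash
KAFKA_CONTAINER=${KAFKA_CONTAINER:-kafka_kafka_1}
BROKER=${BROKER:-10.18.1.2:9092}
ES_URL=${ES_URL:-http://10.18.1.2:9200}

# Mirrors topics_pattern => "topic_.*"; the pattern has to match the whole name.
topic_is_consumed() {
  local re='^topic_.*$'
  [[ $1 =~ $re ]]
}

# send_json TOPIC JSON: publish one single-line JSON document to TOPIC.
send_json() {
  local topic=$1 json=$2
  if ! topic_is_consumed "$topic"; then
    echo "warning: $topic does not match topic_.*, Logstash will ignore it" >&2
  fi
  printf '%s\n' "$json" |
    docker exec -i "$KAFKA_CONTAINER" \
      bash -c '"$KAFKA_HOME"/bin/kafka-console-producer.sh "$@"' _ \
      --broker-list "$BROKER" --topic "$topic"
}

# Show a few documents from the index the pipeline writes to.
show_index() {
  curl -s "$ES_URL/topic_2/_search?pretty&size=5"
}
```

Usage: `send_json topic_amf '{"level":"info","msg":"hello"}'`, then run `show_index` after a few seconds. The rubydebug stdout output in the Logstash container logs shows the same event as Logstash parsed it.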

posted on 2020-11-17 21:57 csuyangpeng