k8s Resource Management

1. kubectl Command Line

1.1 Three Ways to Manage Core k8s Resources

- Imperative management: relies on the CLI tool.

- Declarative management: relies on resource manifests.

- GUI management: relies mainly on a graphical interface (web pages).

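1.2 Imperative Management

Resources are managed with kubectl commands.

This approach is convenient for creating, deleting, and querying resources.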

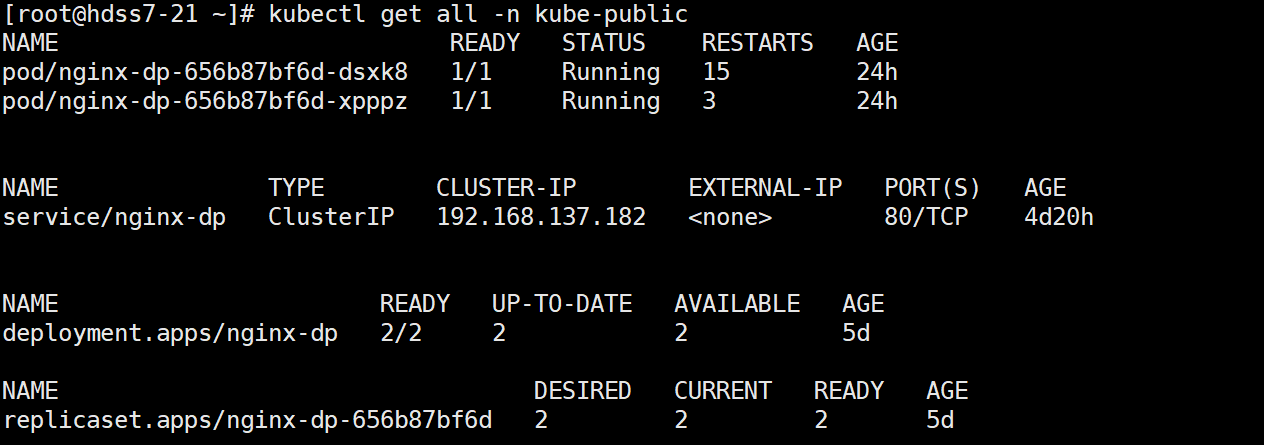

1.2.1 View All Resources

kubectl get all -n kube-public

1.2.2 Manage Namespaces

- List all resources in a namespace

kubectl get all -n default

- Create the namespace test

kubectl create namespace test

- Delete the namespace test

kubectl delete namespace test

1.2.3 Manage Deployments

- Create a Deployment

kubectl create deploy nginx-dp --image harbor.od.com/public/nginx:1.7.9 -n kube-public

- Inspect the Deployment

kubectl get deploy -n kube-public

kubectl describe deploy nginx-dp -n kube-public

1.2.4 Manage Pods

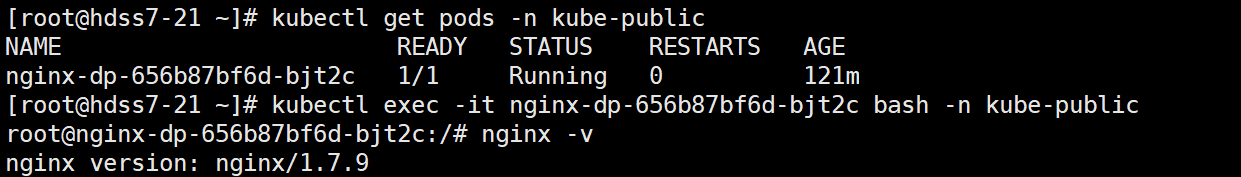

- List Pods

kubectl get pods -n kube-public

- Open a shell in a Pod

kubectl exec -it nginx-dp-656b87bf6d-bjt2c -n kube-public -- bash

1.2.5 Manage Services

A Service is associated with Pods through its namespace and a label selector.

- Create a Deployment

kubectl create deploy nginx-dp --image harbor.od.com/public/nginx:1.7.9 -n kube-public

- Create a Service

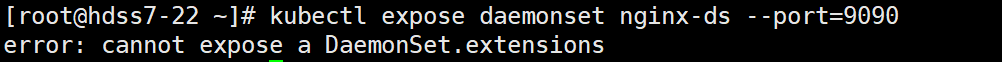

kubectl expose deployment nginx-dp --port=80 -n kube-public

kubectl expose cannot create a Service for a DaemonSet.

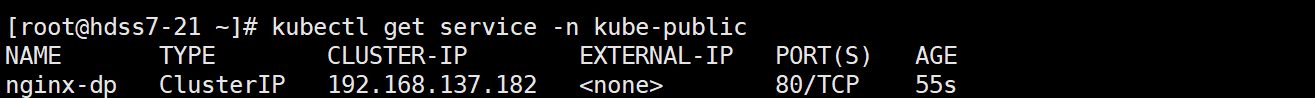

- List Services

kubectl get service -n kube-public

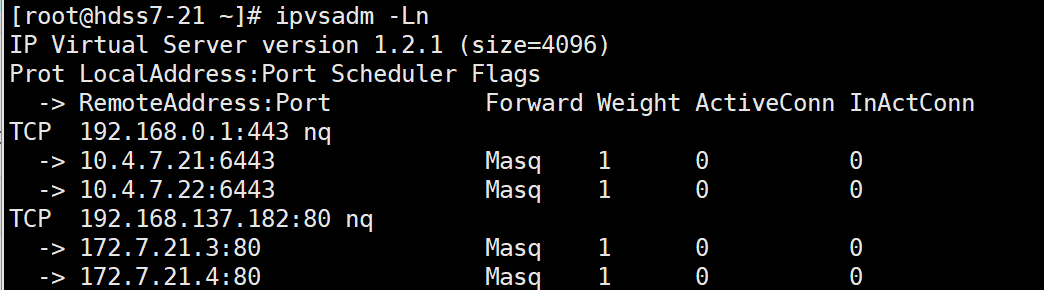

- Scale out the Deployment

kubectl scale deployment nginx-dp --replicas=2 -n kube-public

- View the Pod addresses that the Service proxies to (IPVS table on the node)

ipvsadm -Ln

- View the Pod IP addresses

kubectl get pod -n kube-public -owide

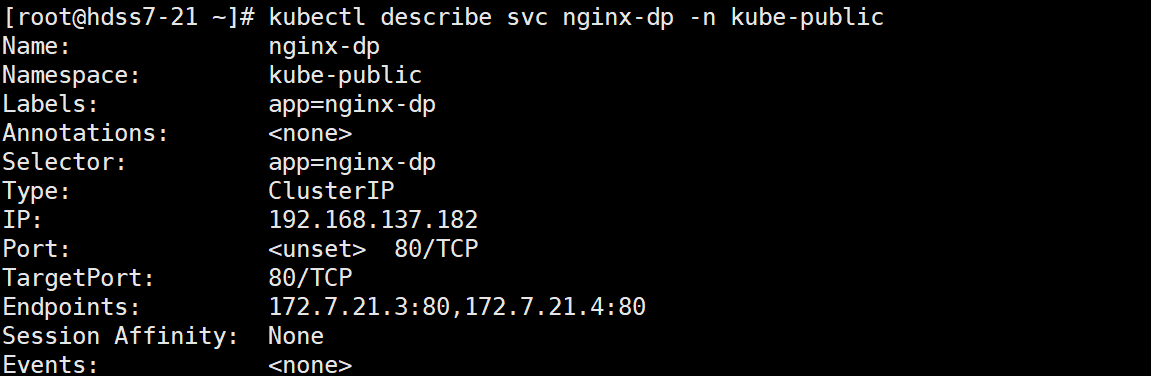

- View the Service details

kubectl describe svc nginx-dp -n kube-public

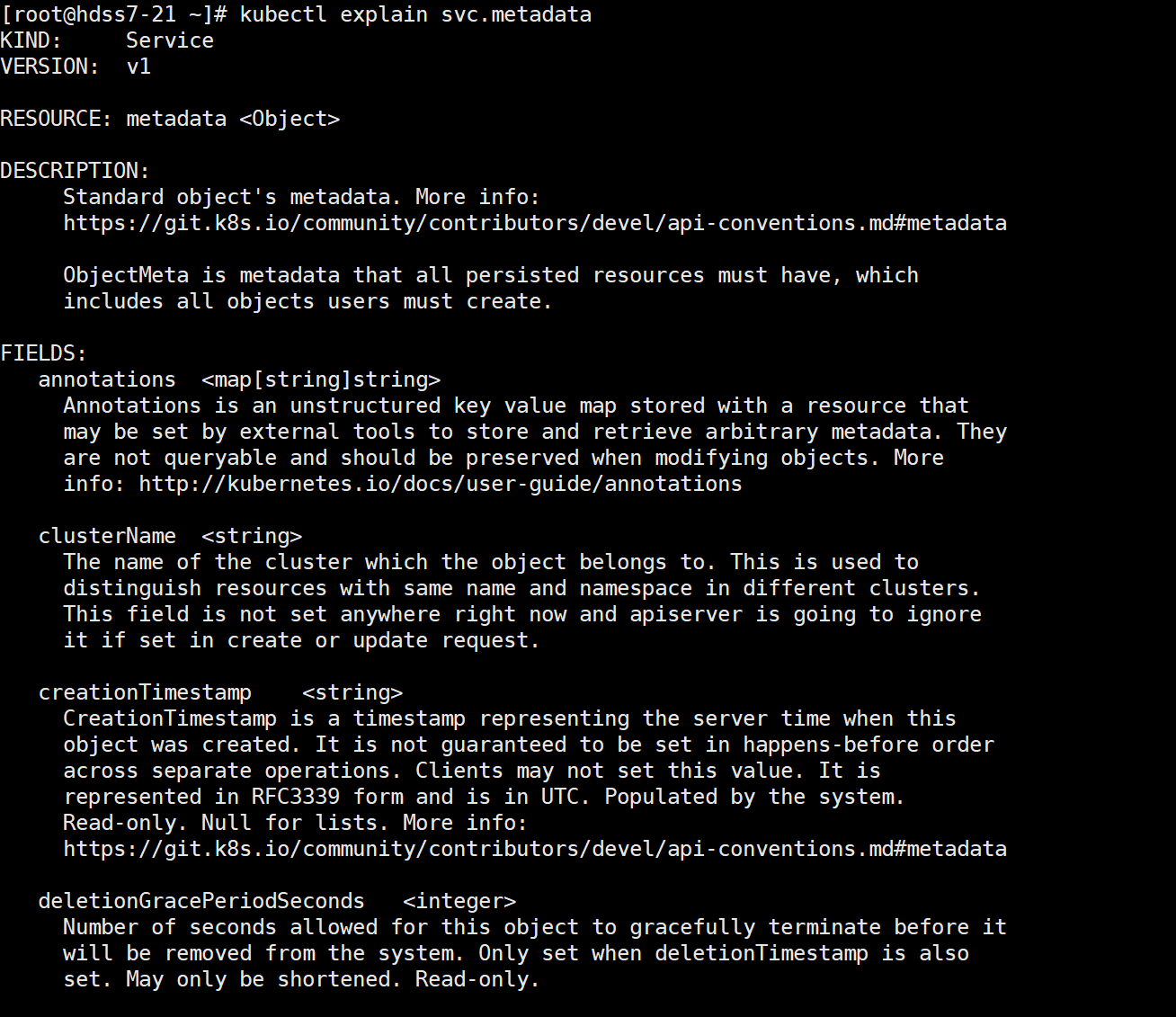
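kubectl expose derives the Service selector from the Deployment's Pod labels. As a rough sketch, the expose command used in this section corresponds to a manifest like the one below, written through a heredoc so it can be kept and applied later. It assumes the `app: nginx-dp` label that `kubectl create deploy nginx-dp` sets by default; the file name `nginx-dp-svc.yaml` is only illustrative.

```shell
#!/bin/sh
# Write a Service manifest roughly equivalent to:
#   kubectl expose deployment nginx-dp --port=80 -n kube-public
# Assumption: the Deployment's Pods carry the label app=nginx-dp.
cat > nginx-dp-svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-dp
  namespace: kube-public
spec:
  type: ClusterIP
  selector:
    app: nginx-dp
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
EOF

# Show the generated file.
cat nginx-dp-svc.yaml
```

The file could then be applied with `kubectl apply -f nginx-dp-svc.yaml` instead of running kubectl expose.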

1.2.6 View Help Information

kubectl explain svc.metadata

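1.3 Declarative Management

Resources are managed through resource manifests in YAML or JSON format. This approach is convenient for modifying resources.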

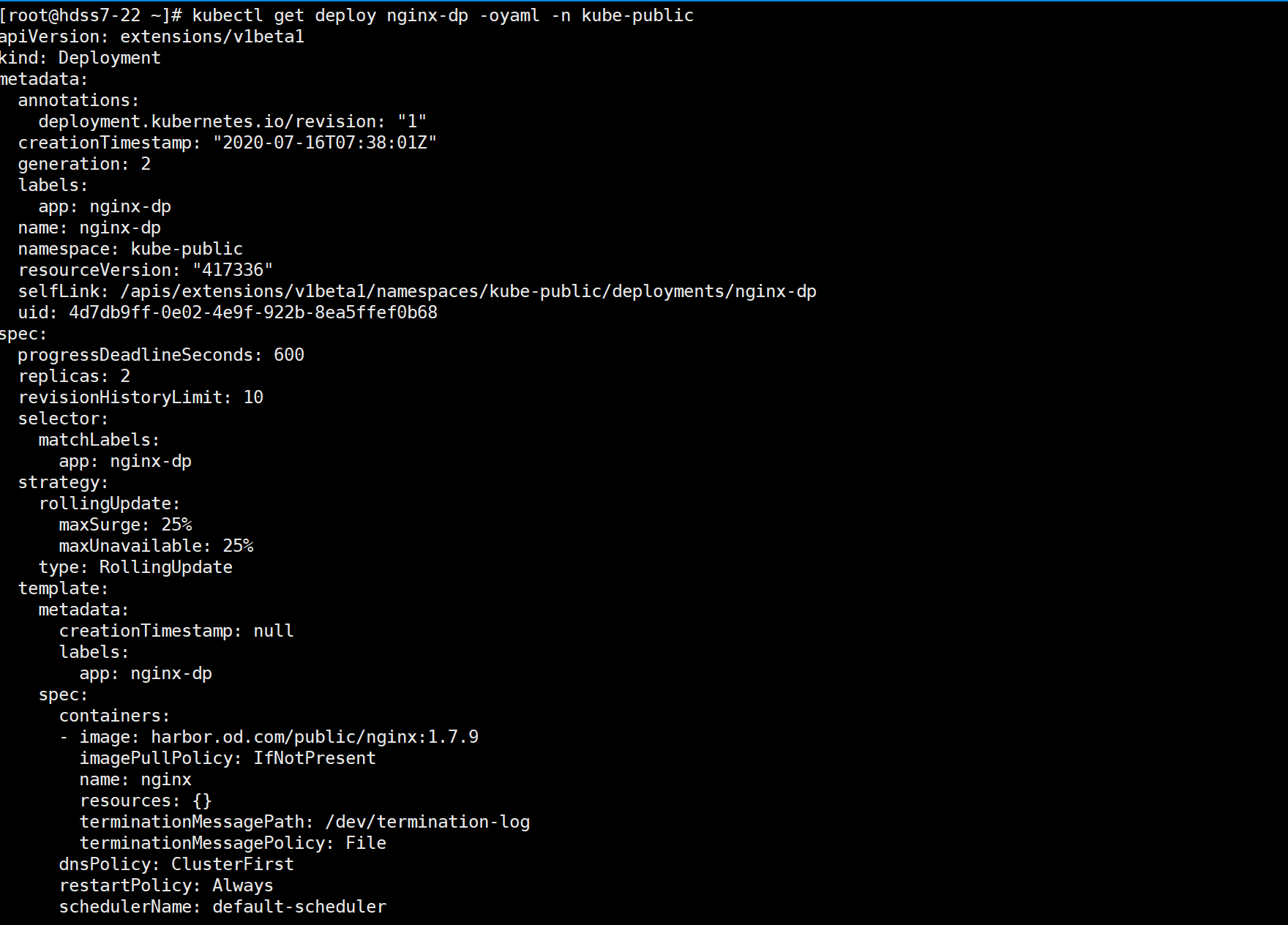

1.3.1 View the Manifest of a Deployment

kubectl get deploy nginx-dp -oyaml -n kube-public

1.3.2 View the Manifest of a Service

kubectl get svc nginx-dp -oyaml -n kube-public

1.3.3 Create a Resource Manifest

vim nginx-ds-svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-ds
  name: nginx-ds
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-ds
  sessionAffinity: None
  type: ClusterIP

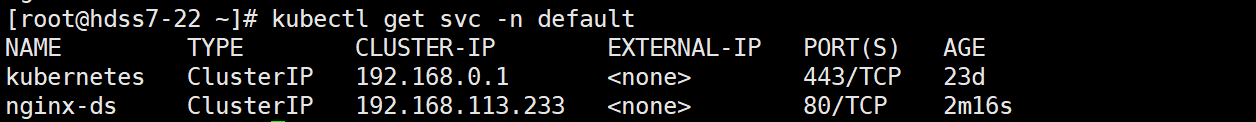

kubectl create -f nginx-ds-svc.yaml

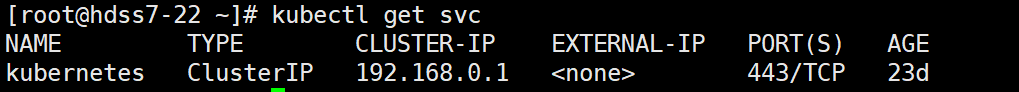

kubectl get svc -n default

1.3.4 Edit a Manifest Online

kubectl edit svc nginx-ds

kubectl get svc

1.3.5 Edit a Manifest Offline

vim nginx-ds-svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-ds
  name: nginx-ds
  namespace: default
spec:
  ports:
  - port: 8088
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-ds
  sessionAffinity: None
  type: ClusterIP

kubectl apply -f nginx-ds-svc.yaml

kubectl get svc

1.3.6 Delete Resources by Manifest

kubectl delete -f nginx-ds-svc.yaml

kubectl get svc

2. Kubernetes CNI Network Plugin: flannel

Its main job is to provide network communication across hosts.

Common CNI network plugins:

- Flannel

- Calico

- Canal

- Contiv

- OpenContrail

- NSX-T

- Kube-router

| Hostname | Role | IP address |
|---|---|---|
| hdss7-21.host.com | flannel | 10.4.7.21 |
| hdss7-22.host.com | flannel | 10.4.7.22 |
| hdss7-23.host.com | flannel | 10.4.7.23 |
| hdss7-24.host.com | flannel | 10.4.7.24 |

2.1 Download, Extract, and Symlink

https://github.com/coreos/flannel/releases

cd /opt/src/

wget https://github.com/coreos/flannel/releases/download/v0.12.0/flannel-v0.12.0-linux-amd64.tar.gz

mkdir /opt/flannel-v0.12.0

tar xf flannel-v0.12.0-linux-amd64.tar.gz -C /opt/flannel-v0.12.0/

ln -s /opt/flannel-v0.12.0/ /opt/flannel

2.2 Create the Certificate Directory and Copy the Certificates

mkdir /opt/flannel/cert

cd /opt/flannel/cert/

scp hdss7-200:/opt/certs/ca.pem .

scp hdss7-200:/opt/certs/client.pem .

scp hdss7-200:/opt/certs/client-key.pem .

2.3 Create the Configuration

cd /opt/flannel

vi subnet.env

- 10.4.7.21

FLANNEL_NETWORK=172.7.0.0/16

FLANNEL_SUBNET=172.7.21.1/24

FLANNEL_MTU=1500

FLANNEL_IPMASQ=false

- 10.4.7.22

FLANNEL_NETWORK=172.7.0.0/16

FLANNEL_SUBNET=172.7.22.1/24

FLANNEL_MTU=1500

FLANNEL_IPMASQ=false
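Only two of the four nodes are written out above. As a minimal sketch, the file for any node can be generated from its IP, assuming the pattern seen in the two examples continues (node 10.4.7.N owns the subnet 172.7.N.1/24). The function name `subnet_env` is hypothetical.

```shell
#!/bin/sh
# Print the subnet.env content for one node.
# Assumption: node IP 10.4.7.N maps to the Pod subnet 172.7.N.1/24,
# as in the two files shown for 10.4.7.21 and 10.4.7.22.
subnet_env() (
  node_ip="$1"
  last_octet="${node_ip##*.}"   # e.g. 10.4.7.23 -> 23
  printf 'FLANNEL_NETWORK=172.7.0.0/16\n'
  printf 'FLANNEL_SUBNET=172.7.%s.1/24\n' "$last_octet"
  printf 'FLANNEL_MTU=1500\n'
  printf 'FLANNEL_IPMASQ=false\n'
)

subnet_env 10.4.7.23
```

On a node this could be redirected into place, for example `subnet_env 10.4.7.23 > /opt/flannel/subnet.env`.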

2.4 Create the Startup Script

vi flanneld.sh

- 10.4.7.21

#!/bin/sh

./flanneld \

--public-ip=10.4.7.21 \

--etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--etcd-keyfile=./cert/client-key.pem \

--etcd-certfile=./cert/client.pem \

--etcd-cafile=./cert/ca.pem \

--iface=eth0 \

--subnet-file=./subnet.env \

--healthz-port=2401

- 10.4.7.22

#!/bin/sh

./flanneld \

--public-ip=10.4.7.22 \

--etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--etcd-keyfile=./cert/client-key.pem \

--etcd-certfile=./cert/client.pem \

--etcd-cafile=./cert/ca.pem \

--iface=eth0 \

--subnet-file=./subnet.env \

--healthz-port=2401

- 10.4.7.23

#!/bin/sh

./flanneld \

--public-ip=10.4.7.23 \

--etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--etcd-keyfile=./cert/client-key.pem \

--etcd-certfile=./cert/client.pem \

--etcd-cafile=./cert/ca.pem \

--iface=eth0 \

--subnet-file=./subnet.env \

--healthz-port=2401

- 10.4.7.24

#!/bin/sh

./flanneld \

--public-ip=10.4.7.24 \

--etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--etcd-keyfile=./cert/client-key.pem \

--etcd-certfile=./cert/client.pem \

--etcd-cafile=./cert/ca.pem \

--iface=eth0 \

--subnet-file=./subnet.env \

--healthz-port=2401
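The four scripts above differ only in --public-ip. A small generator, sketched here with the hypothetical function name `gen_flanneld_sh`, avoids copy-and-paste mistakes; every flag value is taken from the scripts above.

```shell
#!/bin/sh
# Print a flanneld.sh start script for the node whose IP is passed as $1.
# Only --public-ip changes between nodes; the other flags are copied as-is.
gen_flanneld_sh() (
  node_ip="$1"
  # Unquoted heredoc: ${node_ip} is expanded and "\\" yields one literal backslash.
  cat <<EOF
#!/bin/sh
./flanneld \\
  --public-ip=${node_ip} \\
  --etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \\
  --etcd-keyfile=./cert/client-key.pem \\
  --etcd-certfile=./cert/client.pem \\
  --etcd-cafile=./cert/ca.pem \\
  --iface=eth0 \\
  --subnet-file=./subnet.env \\
  --healthz-port=2401
EOF
)

gen_flanneld_sh 10.4.7.23
```

The output could be written with `gen_flanneld_sh 10.4.7.23 > /opt/flannel/flanneld.sh` and then made executable.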

2.5 Make the Script Executable and Create the Log Directory

chmod +x flanneld.sh

mkdir -p /data/logs/flanneld

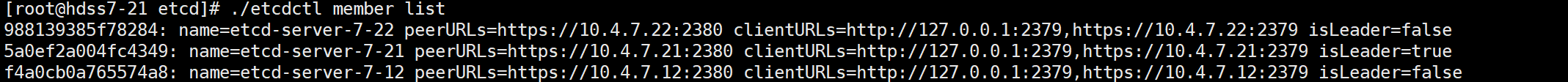

2.6 Configure etcd with the host-gw Backend

On 10.4.7.21:

cd /opt/etcd

./etcdctl member list

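10.4.7.21 is the etcd leader in this cluster, so the commands are run on 10.4.7.21 (a write sent to any healthy member would also be forwarded to the leader).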

./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'

./etcdctl get /coreos.com/network/config

2.7 Create the supervisor Configuration

vi /etc/supervisord.d/flannel.ini

- 10.4.7.21

[program:flanneld-7-21]

command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/flannel ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

- 10.4.7.22

[program:flanneld-7-22]

command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/flannel ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

- 10.4.7.23

[program:flanneld-7-23]

command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/flannel ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

- 10.4.7.24

[program:flanneld-7-24]

command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/flannel ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

2.9 Reload the supervisor Configuration

supervisorctl update

supervisorctl status

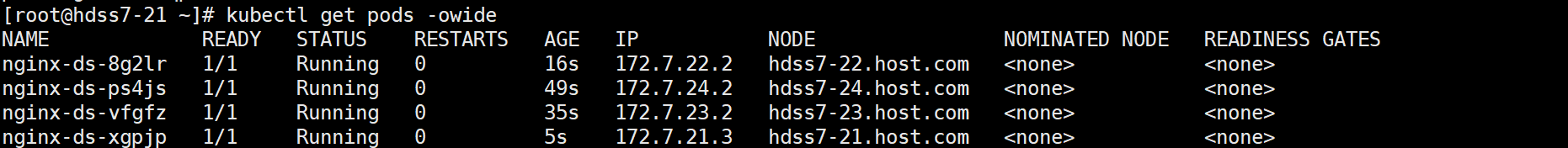

2.10 Verify the Cluster

kubectl get pods -owide

ping 172.7.22.2

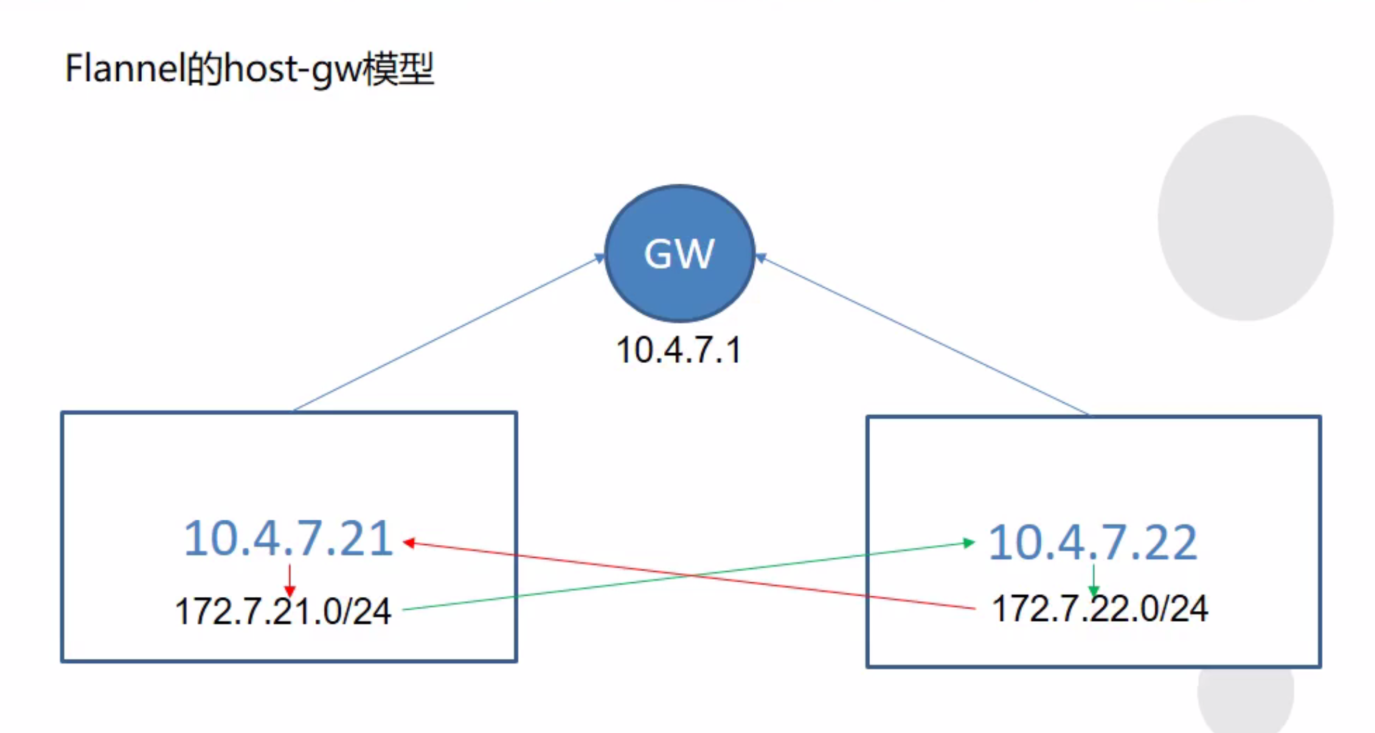

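2.11 How flannel Works

2.11.1 host-gw Model

Prerequisite: the hosts can reach each other directly (layer-2 connectivity). Each host adds a route to every other host's container subnet, with that host as the gateway, and this enables container traffic across hosts.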
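In host-gw mode flanneld installs these routes itself. To make the mechanism visible, the sketch below only prints the routes one node would end up with. It assumes that node 10.4.7.N owns the container subnet 172.7.N.0/24 and that the interface is eth0, as in the flanneld flags; the function name `hostgw_routes` is hypothetical.

```shell
#!/bin/sh
# Print the static routes that the host-gw model needs on one node.
# Usage: hostgw_routes <this-node-ip> <all-node-ips...>
# Assumption: node 10.4.7.N owns the container subnet 172.7.N.0/24.
hostgw_routes() (
  self_ip="$1"; shift
  for peer_ip in "$@"; do
    # The local subnet is reached through docker0, so no route is needed.
    [ "$peer_ip" = "$self_ip" ] && continue
    printf 'ip route add 172.7.%s.0/24 via %s dev eth0\n' "${peer_ip##*.}" "$peer_ip"
  done
)

hostgw_routes 10.4.7.21 10.4.7.21 10.4.7.22
```

On a running node, the equivalent entries can be checked with `ip route` or `route -n`.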

2.11.2 VxLAN Model

Each host adds a virtual network device, flannel.1, and cross-host container traffic is tunneled between these devices.
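The backend is selected by the "Type" field of the /coreos.com/network/config key written with etcdctl earlier. A minimal sketch that builds that JSON for either backend follows; `vxlan` is assumed to be the backend name that flannel expects, and the function name `flannel_net_config` is hypothetical.

```shell
#!/bin/sh
# Print the flannel network config JSON for a given backend type.
# $1 is the backend name, e.g. "host-gw" or "vxlan".
flannel_net_config() (
  backend="$1"
  printf '{"Network": "172.7.0.0/16", "Backend": {"Type": "%s"}}\n' "$backend"
)

flannel_net_config host-gw
flannel_net_config vxlan
```

It could be combined with the earlier command as `./etcdctl set /coreos.com/network/config "$(flannel_net_config vxlan)"`; flanneld would likely need a restart to pick up a changed backend.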

2.12 Optimizing the SNAT Rules

2.12.1 Build an nginx Image

mkdir -p /opt/dockerfile/nginx

vim /opt/dockerfile/nginx/dockerfile

FROM centos:7

RUN useradd www -u 1200 -M -s /sbin/nologin

RUN mkdir -p /var/log/nginx

RUN yum install -y cmake pcre pcre-devel openssl openssl-devel gd-devel \

zlib-devel gcc gcc-c++ net-tools iproute telnet wget curl &&\

yum clean all && \

rm -rf /var/cache/yum/*

RUN wget https://www.chenleilei.net/soft/nginx-1.16.1.tar.gz

RUN tar xf nginx-1.16.1.tar.gz

WORKDIR nginx-1.16.1

RUN ./configure --prefix=/usr/local/nginx --with-http_image_filter_module --user=www --group=www \

--with-http_ssl_module --with-http_v2_module --with-http_stub_status_module \

--error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log \

--pid-path=/var/run/nginx/nginx.pid

RUN make -j 4 && make install && \

rm -rf /usr/local/nginx/html/* && \

echo "leilei hello" >/usr/local/nginx/html/index.html && \

rm -rf nginx* && \

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

ln -sf /dev/stdout /var/log/nginx/access.log && \

ln -sf /dev/stderr /var/log/nginx/error.log

RUN chown -R www.www /var/log/nginx

ENV LOG_DIR /var/log/nginx

ENV PATH $PATH:/usr/local/nginx/sbin

#COPY nginx.conf /usr/local/nginx/conf/nginx.conf

EXPOSE 80

WORKDIR /usr/local/nginx

CMD ["nginx","-g","daemon off;"]

docker image build -t nginx:curl /opt/dockerfile/nginx

docker tag nginx:curl harbor.od.com/public/nginx:curl

docker push harbor.od.com/public/nginx:curl

2.12.3 Change the DaemonSet Image

/root/nginx-ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:curl
        ports:
        - containerPort: 80
      tolerations:
      - key: hdss7-21
        operator: Exists
        effect: NoSchedule
      - key: hdss7-22
        operator: Exists
        effect: NoSchedule

kubectl apply -f nginx-ds.yaml

Restart all of the Pods.

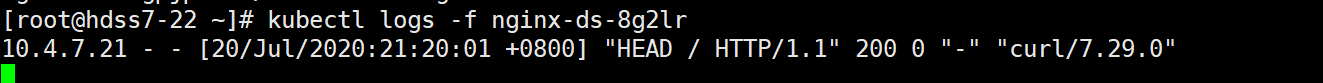

2.12.4 curl from Inside a Container: the Log Shows the Node IP Address

- On 10.4.7.21

kubectl get pods -owide

kubectl exec -ti nginx-ds-xgpjp -- bash

curl -I 172.7.22.2

- On 10.4.7.22

kubectl logs -f nginx-ds-8g2lr

2.12.5 Optimize the iptables Rules

iptables-save |grep -i postrouting

- Install iptables-services

yum -y install iptables-services

systemctl start iptables.service

systemctl enable iptables.service

- Delete the original rule

iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

- Add a rule that excludes traffic destined for the Pod network (172.7.0.0/16) from SNAT

iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

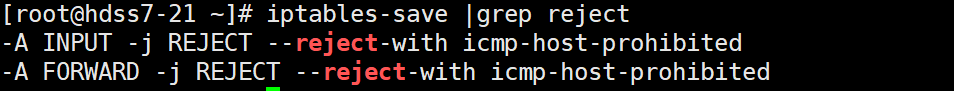

- Remove the iptables REJECT rules

iptables-save |grep reject

iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited

iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited

- Save the rules

iptables-save > /etc/sysconfig/iptables

- On 10.4.7.21

kubectl exec -ti nginx-ds-xgpjp -- bash

curl -I 172.7.22.2

- On 10.4.7.22

kubectl logs -f nginx-ds-8g2lr
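The rule change above is written for 10.4.7.21 only. The added `! -d 172.7.0.0/16` match means that traffic bound for another Pod subnet is no longer masqueraded, so the receiving nginx logs the source Pod IP instead of the node IP. As a sketch, the same pair of commands can be printed for any node, assuming node 10.4.7.N uses the docker0 subnet 172.7.N.0/24; the function name `snat_rules` is hypothetical.

```shell
#!/bin/sh
# Print the two iptables commands that replace Docker's default
# MASQUERADE rule on one node (nothing is executed here).
# Assumption: node 10.4.7.N uses the docker0 subnet 172.7.N.0/24.
snat_rules() (
  n="${1##*.}"   # last octet of the node IP
  printf 'iptables -t nat -D POSTROUTING -s 172.7.%s.0/24 ! -o docker0 -j MASQUERADE\n' "$n"
  printf 'iptables -t nat -I POSTROUTING -s 172.7.%s.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE\n' "$n"
)

snat_rules 10.4.7.22
```

Printing instead of executing keeps the sketch safe to run anywhere; on a node the output can be reviewed and then piped to `sh` as root.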

2.12.6 A Caveat

https://docs.docker.com/engine/reference/commandline/dockerd/#daemon-configuration-file

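There is a problem here: if the machine reboots or Docker is restarted, Docker resets the iptables rules. Two workarounds are to stop Docker from managing iptables, or to re-add the rule from .bashrc.

Option 1: disable iptables management in the Docker daemon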

/etc/docker/daemon.json

{

"graph": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com", "quay.io", "harbor.od.com"],

"registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"],

"bip": "172.7.21.1/24",

"iptables": false,

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}

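The drawback of this option is that after a host reboot all of Docker's own iptables rules are gone.

Option 2: re-add the iptables rules at startup. Note that ~/.bashrc is read when the user starts an interactive shell, not at boot.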

~/.bashrc

iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

iptables-save > /etc/sysconfig/iptables

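3. Kubernetes Service Discovery Plugin: CoreDNS

Service discovery is the process by which services (applications) locate one another.

It matters mainly in these situations:

- Services (applications) are highly dynamic

- Services (applications) are updated and released frequently

- Services (applications) support autoscaling

CoreDNS is the default DNS plugin in k8s. It is only responsible for maintaining the mapping between a Service name and its cluster IP.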

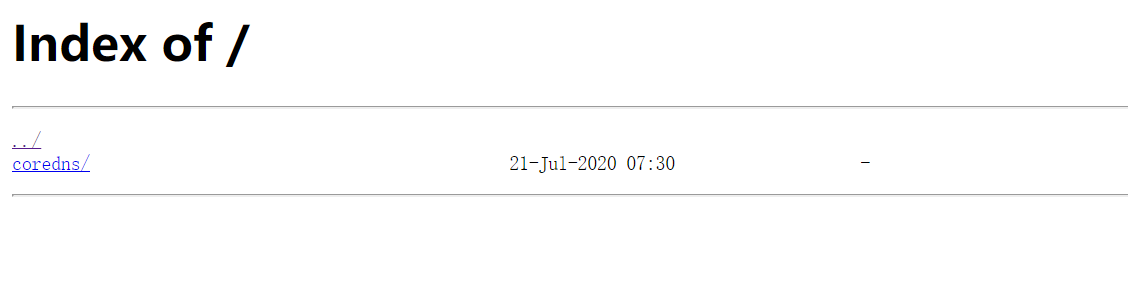

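3.1 Deploy an Internal Site for the Kubernetes Resource Manifests

On hdss7-200.host.com (10.4.7.200), configure an NGINX virtual host that serves as the single access point for the Kubernetes resource manifests.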

vi /etc/nginx/conf.d/k8s-yaml.od.com.conf

server {
    listen       80;
    server_name  k8s-yaml.od.com;

    location / {
        autoindex on;
        default_type text/plain;
        root /data/k8s-yaml;
    }
}

nginx -s reload

mkdir -p /data/k8s-yaml/coredns

- On 10.4.7.11, register the k8s-yaml.od.com domain name

/var/named/od.com.zone

$ORIGIN od.com.
$TTL 600 ; 10 minutes
@ IN SOA dns.od.com. dnsadmin.od.com. (
        2020062204 ; serial
        10800      ; refresh (3 hours)
        900        ; retry (15 minutes)
        604800     ; expire (1 week)
        86400      ; minimum (1 day)
        )
        NS dns.od.com.
$TTL 60 ; 1 minute
dns      A 10.4.7.11
harbor   A 10.4.7.200
k8s-yaml A 10.4.7.200

systemctl restart named

- Check DNS resolution

dig -t A k8s-yaml.od.com +short

- Open in a browser

k8s-yaml.od.com

3.2 Deploy CoreDNS

3.2.1 Prepare the CoreDNS Image

docker pull coredns/coredns:1.7.0

docker tag coredns/coredns:1.7.0 harbor.od.com/public/coredns:1.7.0

docker push harbor.od.com/public/coredns:1.7.0

3.2.2 Prepare the Resource Manifests

/data/k8s-yaml/coredns/rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system

/data/k8s-yaml/coredns/configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        ready
        kubernetes cluster.local 192.168.0.0/16
        forward . 10.4.7.11
        cache 30
        loop
        reload
        loadbalance
    }

/data/k8s-yaml/coredns/deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.od.com/public/coredns:1.7.0
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile

/data/k8s-yaml/coredns/service.yaml

apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 192.168.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
  - name: metrics
    port: 9153
    protocol: TCP

3.2.3 Apply the Manifests

kubectl apply -f http://k8s-yaml.od.com/coredns/rbac.yaml

kubectl apply -f http://k8s-yaml.od.com/coredns/configmap.yaml

kubectl apply -f http://k8s-yaml.od.com/coredns/deployment.yaml

kubectl apply -f http://k8s-yaml.od.com/coredns/service.yaml

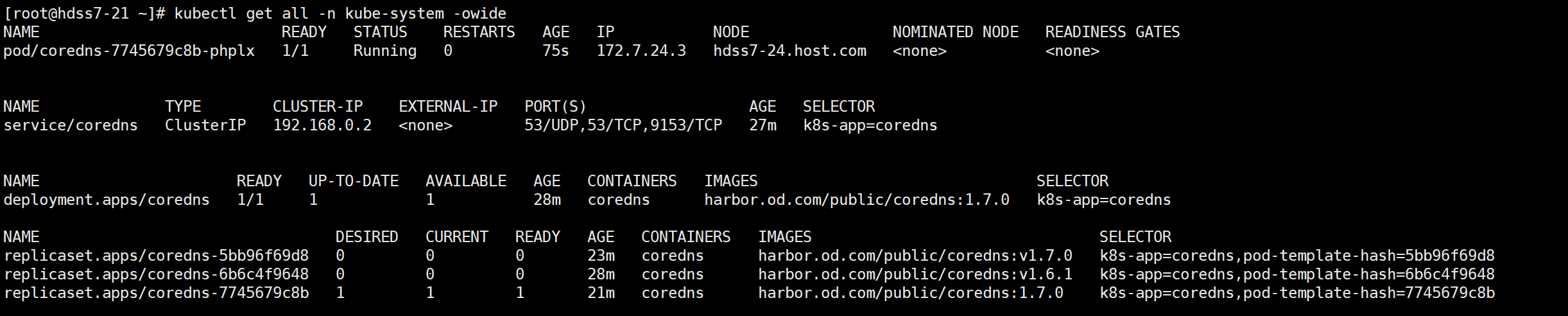

3.2.4 Check the Created Resources

kubectl get all -n kube-system -owide

3.2.5 Verify CoreDNS

dig -t A hdss7-21.host.com @192.168.0.2 +short

dig -t A hdss7-22.host.com @192.168.0.2 +short

dig -t A hdss7-23.host.com @192.168.0.2 +short

dig -t A hdss7-24.host.com @192.168.0.2 +short

dig -t A hdss7-11.host.com @192.168.0.2 +short

dig -t A hdss7-12.host.com @192.168.0.2 +short

dig -t A www.baidu.com @192.168.0.2 +short

3.2.6 Verify That the nginx Service Created Earlier Resolves

kubectl get all -n kube-public

dig -t A nginx-dp.kube-public.svc.cluster.local @192.168.0.2 +short

- Inside a container, the short name can be used

curl nginx-dp.kube-public
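The short name works because a Pod's /etc/resolv.conf normally carries a search list, so the resolver appends cluster suffixes until one name resolves. The sketch below lists the candidates in order. It assumes the cluster domain cluster.local used above and a Pod running in the namespace passed as the second argument; the function name `search_candidates` is hypothetical, and a real Pod may append further search domains inherited from the host.

```shell
#!/bin/sh
# List the fully qualified names a Pod's resolver would try for a short name.
# Usage: search_candidates <name> <pod-namespace>
# Assumption: the search list is <ns>.svc.cluster.local, svc.cluster.local,
# cluster.local, in that order.
search_candidates() (
  name="$1"; pod_ns="$2"
  for suffix in "$pod_ns.svc.cluster.local" svc.cluster.local cluster.local; do
    printf '%s.%s\n' "$name" "$suffix"
  done
)

search_candidates nginx-dp.kube-public default
```

The second candidate, nginx-dp.kube-public.svc.cluster.local, is the name that was queried with dig above.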