# Ops Scripts


## Nginx log rotation

### Install nginx on Alibaba Cloud

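    # Install the build dependencies
    yum install -y gcc gcc-c++ pcre pcre-devel zlib zlib-devel openssl openssl-devel

    # Download and unpack the nginx 1.16.0 source
    wget https://nginx.org/download/nginx-1.16.0.tar.gz
    tar -xf nginx-1.16.0.tar.gz
    cd nginx-1.16.0

    # Build with the stub_status and SSL modules, installing under /usr/local/nginx
    ./configure --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module
    make && make install

    # Start nginx
    /usr/local/nginx/sbin/nginx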

### Install Python 3.6.8

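    #!/bin/bash
    # Build and install Python 3.6.8 from source under /usr/local/python3.

    yum install -y wget gcc gcc-c++ pcre pcre-devel zlib zlib-devel openssl openssl-devel

    # Download the source tarball only if it is not already present
    if [ ! -f 'Python-3.6.8.tgz' ]; then
        wget https://www.python.org/ftp/python/3.6.8/Python-3.6.8.tgz
    fi

    tar xf Python-3.6.8.tgz
    cd Python-3.6.8/ || exit 1

    export LANG=zh_CN.UTF-8
    export LANGUAGE=zh_CN.UTF-8

    ./configure --prefix=/usr/local/python3
    make && make install

    # Single quotes keep $PATH literal so it is expanded at login, not when this script runs
    echo 'PATH=$PATH:/usr/local/python3/bin' >> /etc/profile
    echo 'export PATH' >> /etc/profile
    source /etc/profile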

### Option 1: Log rotation script

    import datetime
    import os
    import re
    import shutil
    import time

    program_start = time.time()
    date = datetime.datetime.now().strftime('%Y%m%d')

    access_path = "/usr/local/nginx/logs/access.log"
    error_path = "/usr/local/nginx/logs/error.log"
    cut_dir = "/usr/local/nginx/logs/history"

    # Get the size of each log in bytes
    access_size = os.path.getsize(access_path)
    error_size = os.path.getsize(error_path)


    def banner(msg):
        """Print a status message framed by arrows."""
        print('>' * 26 + ' ' + msg + ' ' + '<' * 26)


    def to_mb(size):
        """Convert a size in bytes to MB, rounded to one decimal place."""
        return round(size / 1024 / 1024, 1)


    access_size_withmb = to_mb(access_size)
    error_size_withmb = to_mb(error_size)


    def get_nginx_pid():
        """Return the pid file path set by the `pid` directive in nginx.conf."""
        nginx_conf_path = "/usr/local/nginx/conf/nginx.conf"
        with open(nginx_conf_path, 'r', encoding='utf-8') as f:
            nginx_conf = f.read()
        # Assumes nginx.conf sets an absolute path such as `pid /var/nginx/nginx.pid;`
        nginx_pid = re.search(r"/\S*nginx\.pid", nginx_conf).group(0)
        return nginx_pid


    def compress_log():
        """Archive the rotated logs in cut_dir that `find -mtime 3` selects."""
        banner('start to compress logs')
        res = os.system("find %s -mtime 3 -type f |xargs tar czPvf %s/log.tar-%s 2>/dev/null" % (cut_dir, cut_dir, date))
        if res == 0:
            banner('compress logs finished')
        else:
            banner('no logs need to be compressed')


    def main_process(size_withmb, path):
        """Rotate the log at `path` if it is larger than 500 MB."""
        log_name = os.path.basename(path)
        if size_withmb > 500:
            new_path = '%s/%s-%s' % (cut_dir, log_name, date)
            nginx_pid = get_nginx_pid()
            banner('the log %s size is %sMB, start to cut' % (log_name, size_withmb))
            os.makedirs(cut_dir, exist_ok=True)
            shutil.move(path, new_path)
            banner('cut log finished')
            banner('log is reloading')
            # USR1 tells the nginx master process to reopen its log files
            os.system('kill -USR1 `cat {}`'.format(nginx_pid))
            banner('log reload finished')
        else:
            banner('the log %s size is less than 500MB, no need to cut' % log_name)


    if __name__ == '__main__':
        main_process(access_size_withmb, access_path)
        main_process(error_size_withmb, error_path)
        execute_date = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')
        compress_log()
        program_end = time.time()
        execute_time = '%.1f' % (program_end - program_start)
        banner('the program executed %s seconds, today is %s' % (execute_time, execute_date))
        print('\n')
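The size check and rename can be tried safely away from a live server. The sketch below is a minimal, hypothetical helper: `rotate_if_large`, `threshold_bytes`, and the temporary paths are illustrative names, not part of the script above. It moves a file into a history directory only once the file exceeds a size threshold. It deliberately leaves out the `kill -USR1` step, which a real rotation still needs so that nginx reopens its log.

```python
import datetime
import os
import shutil
import tempfile


def rotate_if_large(path, cut_dir, threshold_bytes):
    """Move `path` into `cut_dir` with a date suffix if it exceeds the threshold.

    Returns the new path when the file was rotated, otherwise None.
    Hypothetical helper for illustration; it does not signal nginx.
    """
    if os.path.getsize(path) <= threshold_bytes:
        return None
    date = datetime.datetime.now().strftime('%Y%m%d')
    os.makedirs(cut_dir, exist_ok=True)
    new_path = os.path.join(cut_dir, '%s-%s' % (os.path.basename(path), date))
    shutil.move(path, new_path)
    return new_path


# Demonstrate on throwaway files instead of real nginx logs
workdir = tempfile.mkdtemp()
small_log = os.path.join(workdir, 'error.log')
large_log = os.path.join(workdir, 'access.log')
with open(small_log, 'w') as f:
    f.write('x' * 10)
with open(large_log, 'w') as f:
    f.write('x' * 2000)

history = os.path.join(workdir, 'history')
small_result = rotate_if_large(small_log, history, threshold_bytes=1000)
large_result = rotate_if_large(large_log, history, threshold_bytes=1000)
print(small_result)
print(large_result)
```

Returning the new path (or `None`) keeps the decision separate from the reload, so the caller can signal nginx once after all logs have been handled.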

### Option 2: Generate a new log file every day through the nginx configuration

Add the following directives:

```nginx
if ($time_iso8601 ~ "^(\d{4})-(\d{2})-(\d{2})T(\d{2}):(\d{2}):(\d{2})") {
    set $year $1;
    set $month $2;
    set $day $3;
    set $hour $4;
    set $minute $5;
    set $seconds $6;
}
access_log logs/$year-$month-$day-access.log;
```
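To check what the `if` block captures, the same pattern can be run against a sample timestamp in Python. This is only an illustration: the sample value and the `log_name_for` helper are assumptions, and nginx evaluates the expression with PCRE rather than Python's `re`, although for an input like this one the two should agree.

```python
import re

# Same pattern as in the nginx `if` block
TIME_ISO8601_RE = re.compile(r"^(\d{4})-(\d{2})-(\d{2})T(\d{2}):(\d{2}):(\d{2})")


def log_name_for(time_iso8601):
    """Build the access log name the way the access_log directive does."""
    match = TIME_ISO8601_RE.match(time_iso8601)
    if match is None:
        raise ValueError("not an ISO 8601 timestamp: %r" % time_iso8601)
    year, month, day = match.group(1), match.group(2), match.group(3)
    return "logs/%s-%s-%s-access.log" % (year, month, day)


# Sample value in the format of $time_iso8601 (assumed for illustration)
sample = "2019-06-01T12:34:56+08:00"
print(log_name_for(sample))  # logs/2019-06-01-access.log
```

Because only `$year`, `$month`, and `$day` appear in the file name, every request from the same day lands in the same file.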

Full configuration file. Edit it with `vim /usr/local/nginx/conf/nginx.conf`:

```nginx
user root;
worker_processes 1;
pid /var/nginx/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;

    server {
        listen 80;
        server_name localhost;

        if ($time_iso8601 ~ "^(\d{4})-(\d{2})-(\d{2})T(\d{2}):(\d{2}):(\d{2})") {
            set $year $1;
            set $month $2;
            set $day $3;
            set $hour $4;
            set $minute $5;
            set $seconds $6;
        }
        access_log logs/$year-$month-$day-access.log;

        location / {
            root html;
            index index.html index.htm;
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}
```

Reload nginx to apply the change:

```bash
/usr/local/nginx/sbin/nginx -s reload
```


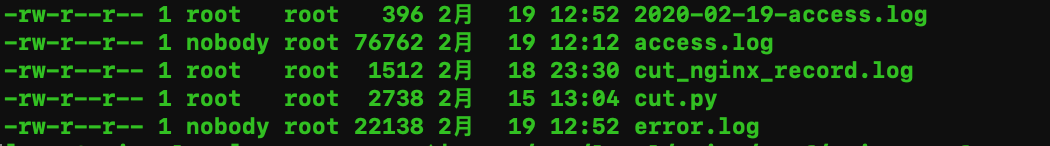

After sending a request to nginx, you can see that the logs are written to log files named by date.


Compress and archive logs older than 30 days:

```bash
#!/bin/bash
# Archive access logs older than 30 days, then delete the originals.

log_dir="/usr/local/nginx/logs"
compress_dir="$log_dir/history"
compress_date=$(date '+%Y-%m-%d')

# -mtime +30 selects files last modified more than 30 days ago
res=$(find "$log_dir" -maxdepth 1 -name '*-access.log' -type f -mtime +30)

if [[ -z "$res" ]]; then
    echo '>>>>>>>>>>>>>>>>>> No files need to be compressed <<<<<<<<<<<<<<<<<<'
else
    echo '>>>>>>>>>>>>>>>>>> Files found, compressing <<<<<<<<<<<<<<<<<<'
    mkdir -p "$compress_dir"
    find "$log_dir" -maxdepth 1 -name '*-access.log' -type f -mtime +30 -print0 \
        | xargs -0 tar czPvf "$compress_dir/${compress_date}_compress.tar"
    if [[ $? -eq 0 ]]; then
        echo 'Compression finished, deleting the original files'
        find "$log_dir" -maxdepth 1 -name '*-access.log' -type f -mtime +30 -print0 \
            | xargs -0 rm -f
        echo 'Deletion finished'
    else
        echo 'Compression failed'
    fi
fi
```
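The age test that `find -mtime` applies can also be approximated in Python, which is handy for checking which files a given threshold would select before anything is deleted. The sketch below rests on stated assumptions: `files_older_than` is a hypothetical helper, and it compares raw modification times against a cutoff rather than reproducing the whole-day rounding that `find` performs.

```python
import os
import tempfile
import time


def files_older_than(directory, suffix, days, now=None):
    """Return names in `directory` ending with `suffix` that were modified more than `days` days ago."""
    now = time.time() if now is None else now
    cutoff = now - days * 24 * 60 * 60
    selected = []
    for name in sorted(os.listdir(directory)):
        full_path = os.path.join(directory, name)
        if not os.path.isfile(full_path) or not name.endswith(suffix):
            continue
        if os.path.getmtime(full_path) < cutoff:
            selected.append(name)
    return selected


# Build a throwaway directory with one old and one fresh log
log_dir = tempfile.mkdtemp()
old_log = os.path.join(log_dir, '2019-01-01-access.log')
new_log = os.path.join(log_dir, '2019-03-01-access.log')
for path in (old_log, new_log):
    with open(path, 'w') as f:
        f.write('sample line\n')

# Backdate the first file by 40 days
forty_days_ago = time.time() - 40 * 24 * 60 * 60
os.utime(old_log, (forty_days_ago, forty_days_ago))

old_files = files_older_than(log_dir, '-access.log', days=30)
print(old_files)
```

Only the backdated file is selected, since the other one was created moments ago.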

## Sync the production Docker registry to the disaster-recovery registry

```python
import json
import os

PROD_REGISTRY = "172.16.240.200:5000"
DISASTER_REGISTRY = "172.16.240.110:8999"


def get_catalog_list(addr):
    """Return the repository names listed by the registry at addr."""
    catalog_raw = os.popen("curl -s -XGET %s/v2/_catalog" % addr).read()
    catalog_dic = json.loads(catalog_raw)
    return catalog_dic.get('repositories')


def get_data(catalog_list, addr):
    """Map each repository name to its list of tags."""
    data = dict()
    for catalog in catalog_list:
        res = os.popen("curl -s -XGET %s/v2/%s/tags/list" % (addr, catalog)).read()
        res_dic = json.loads(res)
        # A repository without tags may come back with "tags": null
        data[res_dic.get('name')] = res_dic.get('tags') or []
    return data


def parse():
    """Collect the repository-to-tags mapping of both registries."""
    prod_data = get_data(get_catalog_list(PROD_REGISTRY), PROD_REGISTRY)
    disaster_data = get_data(get_catalog_list(DISASTER_REGISTRY), DISASTER_REGISTRY)
    return prod_data, disaster_data


def main(push_tag, image_name):
    """Pull each missing tag from production, retag it, and push it to disaster recovery."""
    for tag in push_tag:
        src = "%s/%s:%s" % (PROD_REGISTRY, image_name, tag)
        dst = "%s/%s:%s" % (DISASTER_REGISTRY, image_name, tag)
        # Pull only if the image is not already in the local cache
        image_cache = os.popen("docker images -q %s" % src).read()
        if not image_cache:
            os.system("docker pull %s" % src)
        # Tag and push whether the image was cached or freshly pulled
        os.system("docker tag %s %s" % (src, dst))
        os.system("docker push %s" % dst)


def get_tag(prod, disaster):
    """Sync every tag that production has and disaster recovery lacks."""
    for image_name, image_tag in prod.items():
        if image_name in disaster:
            # The image exists in both registries, but prod may have more tags
            dis_tag = disaster.get(image_name)
            push_tag = [tag for tag in image_tag if tag not in dis_tag]
        else:
            # The image is missing from disaster entirely, so push every prod tag
            push_tag = image_tag
        main(push_tag, image_name)


if __name__ == '__main__':
    prod, disaster = parse()
    get_tag(prod, disaster)
```
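The tag comparison is the one part of this script that can be exercised without a registry or a Docker daemon. The following sketch pulls it out into a pure function. `missing_tags` and the sample dictionaries are hypothetical and stand in for the data returned by `parse()`.

```python
def missing_tags(prod, disaster):
    """Return {image_name: [tags]} for tags that prod has and disaster lacks."""
    to_push = {}
    for image_name, prod_tags in prod.items():
        # Treat a missing repository or a null tag list as "no tags"
        dis_tags = disaster.get(image_name) or []
        tags = [tag for tag in (prod_tags or []) if tag not in dis_tags]
        if tags:
            to_push[image_name] = tags
    return to_push


# Sample data in the shape produced by get_data() (values are made up)
prod = {"web": ["1.0", "1.1", "1.2"], "api": ["2.0"], "job": ["0.1"]}
disaster = {"web": ["1.0"], "job": ["0.1"]}

result = missing_tags(prod, disaster)
print(result)  # {'web': ['1.1', '1.2'], 'api': ['2.0']}
```

Computing the full plan first also makes a dry run possible: print the result and review it before any `docker push` is issued.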