IntelliJ IDEA开发Spark的Maven项目Scala语言

1、Maven管理项目在JavaEE普遍使用,开发Spark项目也不例外,而Scala语言开发Spark项目的首选。因此需要构建Maven-Scala项目来开发Spark项目,本文采用的工具是IntelliJ IDEA 2016,IDEA工具越来越被大家认可,开发java, python ,scala 支持都非常好,安装直接下一步即可。

2、安装scala插件,File->Settings->Editor->Plugins,搜索scala即可安装。

3、创建Maven工程,File->New Project->Maven

选择相应的JDK版本,直接下一步,设定Maven项目的GroupId及ArifactId,创建项目的工程名称,点击完成即可。

创建Maven工程完毕,默认是Java的,没关系后面我们再添加scala与spark的依赖

4、修改Maven项目的pom.xml文件,增加scala与spark的依赖

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.new.test</groupId>

<artifactId>mySecondPorject</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<spark.version>1.6.0</spark.version>

<scala.version>2.10</scala.version>

<hadoop.version>2.6.0</hadoop.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_${scala.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<!--<groupId>org.apache.spark</groupId>-->

<!--<artifactId>spark-sql_${scala.version}</artifactId>-->

<!--<version>${scala.version}</version>-->

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.10</artifactId>

<version>1.5.1</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-common</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka_2.10</artifactId>

<version>0.8.2.0</version>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>0.8.2.0</version>

</dependency>

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>3.4.10</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.1</version>

</dependency>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>2.10.4</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.specs</groupId>

<artifactId>specs</artifactId>

<version>1.2.5</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

<version>1.1.1</version>

<type>jar</type>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.1</version>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.12</version>

</dependency>

</dependencies>

<build>

<sourceDirectory>src/main/scala</sourceDirectory>

<testSourceDirectory>src/test/scala</testSourceDirectory>

<plugins>

<plugin>

<!-- MAVEN 编译使用的JDK版本 -->

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.3</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

</configuration>

</plugin>

</plugins>

</build>

</project>

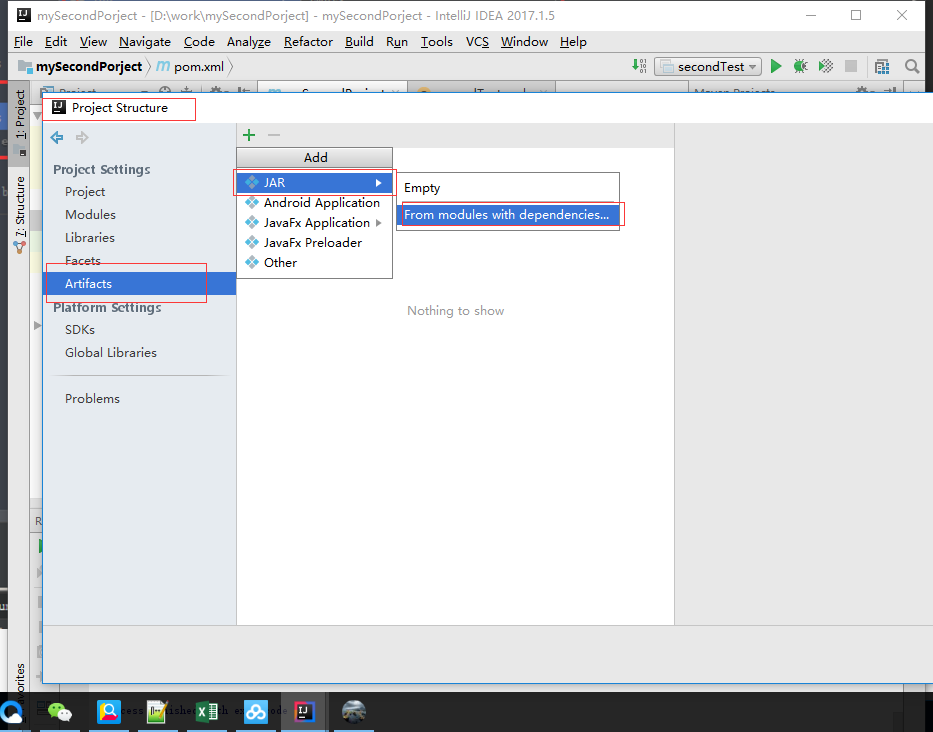

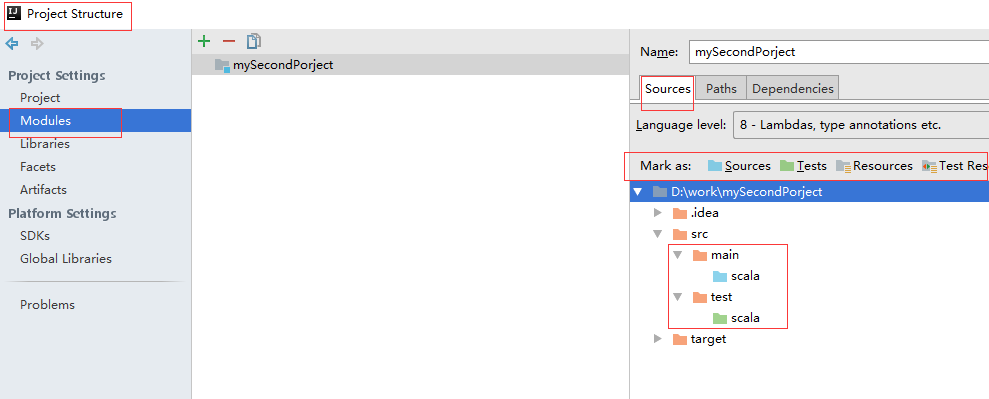

5、删除项目的java目录,新建scala并设置源文件夹

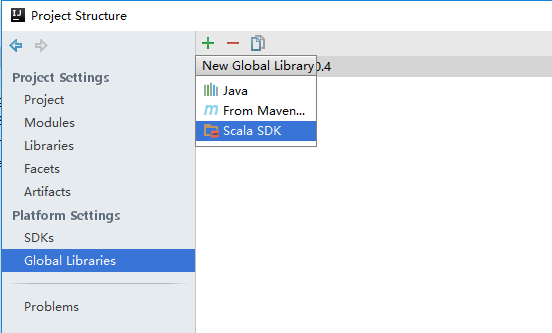

添加scala的SDK(在全局里加SDK)

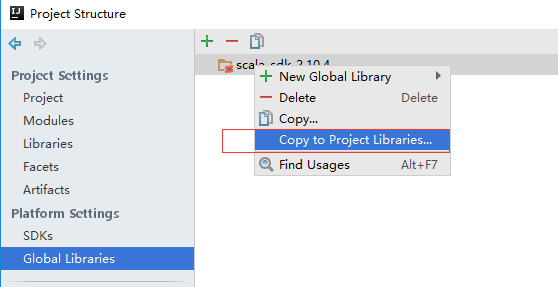

并且右击,选copy to Project Libraries..

6、开发Spark实例

7、打包编译,线上发布