Exploring Service Mesh Technology---VMWare + k8s Cluster + Istio Series: k8s Cluster Node Installation

1. Environment Preparation

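Repeat the steps used when installing the master: uninstall podman, turn off swap, disable SELinux, stop the firewall, configure the base system package repositories, add the Kubernetes repository, install Docker, and configure the Alibaba Cloud (Aliyun) registry mirror to speed up Docker image pulls.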
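Of these steps, disabling swap is the one kubelet enforces most strictly: with default settings it refuses to start while swap is on. The sketch below verifies it by reading /proc/swaps, where every line after the header is an active swap device. swap_is_off is a hypothetical helper, not part of the original steps, and its optional argument exists only so the check can be pointed at a test file.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): verify that swap is fully disabled before installing kubelet.
# /proc/swaps always starts with one header line; any further line is an active swap device.
# The optional argument lets you point the check at a different file (e.g. for testing).
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  local active
  # grep -c exits 1 when it counts 0 lines, so "|| true" keeps the count usable under set -e
  active=$(tail -n +2 "$swaps_file" | grep -c . || true)
  if [ "$active" -eq 0 ]; then
    echo "swap is off"
    return 0
  fi
  echo "swap is still on ($active device(s)); run 'swapoff -a' and comment out swap in /etc/fstab" >&2
  return 1
}
```

On a node, running swap_is_off with no argument checks the live /proc/swaps.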

For the detailed steps, see the earlier article Exploring Service Mesh Technology---VMWare + k8s Cluster + Istio Series: k8s Cluster Master Installation.

2. Installing k8s and Joining the Cluster

Once the preparation above is done, install kubectl, kubelet, and kubeadm, then start kubelet.

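One important step must be done before installing. Change the cgroup driver that Docker is started with in /usr/lib/systemd/system/docker.service to systemd, so that it matches the driver kubelet uses. Otherwise, running kubeadm join later fails with an error like: error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition. I ran into this when installing the master and fixed it there. When installing the nodes, however, I forgot this step, so joining the cluster kept failing. I walk through how I tracked the problem down later in this article.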
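A quick check before running kubeadm join can catch this mismatch early. The sketch below is a hypothetical helper (docker_cgroup_driver_ok, not part of the original setup). It assumes kubelet runs with the systemd driver, which is what the kubelet log later in this article shows and what kubeadm 1.22 writes into the kubelet configuration by default. docker info --format '{{.CgroupDriver}}' prints only the driver name.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): check that Docker uses the systemd cgroup driver
# before running kubeadm join. Assumes kubelet uses cgroupDriver=systemd (kubeadm 1.22 default).
# An optional argument overrides the detected driver (useful for testing without Docker).
docker_cgroup_driver_ok() {
  local driver="${1:-$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)}"
  if [ "$driver" = "systemd" ]; then
    echo "OK: docker cgroup driver is systemd"
    return 0
  fi
  echo "MISMATCH: docker cgroup driver is '${driver:-unknown}', kubelet expects systemd" >&2
  return 1
}
```

For example, docker_cgroup_driver_ok && kubeadm join ... would refuse to join while Docker still reports cgroupfs.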

Install kubectl, kubelet, and kubeadm, exactly as on the master.

[root@k8s-node1 ~]# yum install -y kubectl kubelet kubeadm
Repository extras is listed more than once in the configuration
Last metadata expiration check: 0:04:20 ago on Fri 17 Sep 2021 08:03:00 PM CST.
Dependencies resolved.
================================================================================
 Package                  Architecture  Version          Repository     Size
================================================================================
Installing:
 kubeadm                  x86_64        1.22.2-0         kubernetes    9.3 M
 kubectl                  x86_64        1.22.2-0         kubernetes    9.6 M
 kubelet                  x86_64        1.22.2-0         kubernetes     23 M
Installing dependencies:
 conntrack-tools          x86_64        1.4.4-10.el8     base          204 k
 cri-tools                x86_64        1.13.0-0         kubernetes    5.1 M
 kubernetes-cni           x86_64        0.8.7-0          kubernetes     19 M
 libnetfilter_cthelper    x86_64        1.0.0-15.el8     base           24 k
 libnetfilter_cttimeout   x86_64        1.0.0-11.el8     base           24 k
 libnetfilter_queue       x86_64        1.0.4-3.el8      base           31 k
 socat                    x86_64        1.7.3.3-2.el8    AppStream     302 k

Transaction Summary
================================================================================
Install  10 Packages

Total download size: 67 M
Installed size: 313 M
Downloading Packages:
(1/10): libnetfilter_cttimeout-1.0.0-11.el8.x86_64.rpm 119 kB/s | 24 kB 00:00
(2/10): libnetfilter_cthelper-1.0.0-15.el8.x86_64.rpm 92 kB/s | 24 kB 00:00
(3/10): libnetfilter_queue-1.0.4-3.el8.x86_64.rpm 223 kB/s | 31 kB 00:00
(4/10): conntrack-tools-1.4.4-10.el8.x86_64.rpm 426 kB/s | 204 kB 00:00
(5/10): socat-1.7.3.3-2.el8.x86_64.rpm 434 kB/s | 302 kB 00:00
(6/10): 14bfe6e75a9efc8eca3f638eb22c7e2ce759c67f95b43b16fae4ebabde1549f3-cri-tools-1.13.0-0.x86_64.rpm 354 kB/s | 5.1 MB 00:14
(7/10): 994be6998becbaa99f3c42cd8f2299364fb6f5c597b5ba1eb5db860d63928d6e-kubectl-1.22.2-0.x86_64.rpm 471 kB/s | 9.6 MB 00:20
(8/10): 601174c7fbdf37f053d43088913525758704610e8036f0afd422d6e6a726f6b9-kubeadm-1.22.2-0.x86_64.rpm 376 kB/s | 9.3 MB 00:25
(9/10): db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kubernetes-cni-0.8.7-0.x86_64.rpm 655 kB/s | 19 MB 00:29
(10/10): 80864433372b7120669c95335d54aedd2cb7e2002b41e5686e71d560563e3e8c-kubelet-1.22.2-0.x86_64.rpm 530 kB/s | 23 MB 00:45
--------------------------------------------------------------------------------
Total 1.1 MB/s | 67 MB 01:00
warning: /var/cache/dnf/kubernetes-d03a9fe438e18cac/packages/14bfe6e75a9efc8eca3f638eb22c7e2ce759c67f95b43b16fae4ebabde1549f3-cri-tools-1.13.0-0.x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID 3e1ba8d5: NOKEY
Kubernetes 30 kB/s | 3.4 kB 00:00
Importing GPG key 0x307EA071:
 Userid     : "Rapture Automatic Signing Key (cloud-rapture-signing-key-2021-03-01-08_01_09.pub)"
 Fingerprint: 7F92 E05B 3109 3BEF 5A3C 2D38 FEEA 9169 307E A071
 From       : https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
Key imported successfully
Importing GPG key 0x836F4BEB:
 Userid     : "gLinux Rapture Automatic Signing Key (//depot/google3/production/borg/cloud-rapture/keys/cloud-rapture-pubkeys/cloud-rapture-signing-key-2020-12-03-16_08_05.pub) <glinux-team@google.com>"
 Fingerprint: 59FE 0256 8272 69DC 8157 8F92 8B57 C5C2 836F 4BEB
 From       : https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
Key imported successfully
Kubernetes 7.7 kB/s | 975 B 00:00
Importing GPG key 0x3E1BA8D5:
 Userid     : "Google Cloud Packages RPM Signing Key <gc-team@google.com>"
 Fingerprint: 3749 E1BA 95A8 6CE0 5454 6ED2 F09C 394C 3E1B A8D5
 From       : https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
Key imported successfully
Running transaction check
Transaction check succeeded.
Running transaction test
Transaction test succeeded.
Running transaction
  Preparing        : 1/1
  Installing       : kubectl-1.22.2-0.x86_64 1/10
  Installing       : cri-tools-1.13.0-0.x86_64 2/10
  Installing       : socat-1.7.3.3-2.el8.x86_64 3/10
  Installing       : libnetfilter_queue-1.0.4-3.el8.x86_64 4/10
  Running scriptlet: libnetfilter_queue-1.0.4-3.el8.x86_64 4/10
  Installing       : libnetfilter_cttimeout-1.0.0-11.el8.x86_64 5/10
  Running scriptlet: libnetfilter_cttimeout-1.0.0-11.el8.x86_64 5/10
  Installing       : libnetfilter_cthelper-1.0.0-15.el8.x86_64 6/10
  Running scriptlet: libnetfilter_cthelper-1.0.0-15.el8.x86_64 6/10
  Installing       : conntrack-tools-1.4.4-10.el8.x86_64 7/10
  Running scriptlet: conntrack-tools-1.4.4-10.el8.x86_64 7/10
  Installing       : kubernetes-cni-0.8.7-0.x86_64 8/10
  Installing       : kubelet-1.22.2-0.x86_64 9/10
  Installing       : kubeadm-1.22.2-0.x86_64 10/10
  Running scriptlet: kubeadm-1.22.2-0.x86_64 10/10
  Verifying        : conntrack-tools-1.4.4-10.el8.x86_64 1/10
  Verifying        : libnetfilter_cthelper-1.0.0-15.el8.x86_64 2/10
  Verifying        : libnetfilter_cttimeout-1.0.0-11.el8.x86_64 3/10
  Verifying        : libnetfilter_queue-1.0.4-3.el8.x86_64 4/10
  Verifying        : socat-1.7.3.3-2.el8.x86_64 5/10
  Verifying        : cri-tools-1.13.0-0.x86_64 6/10
  Verifying        : kubeadm-1.22.2-0.x86_64 7/10
  Verifying        : kubectl-1.22.2-0.x86_64 8/10
  Verifying        : kubelet-1.22.2-0.x86_64 9/10
  Verifying        : kubernetes-cni-0.8.7-0.x86_64 10/10

Installed:
  conntrack-tools-1.4.4-10.el8.x86_64
  cri-tools-1.13.0-0.x86_64
  kubeadm-1.22.2-0.x86_64
  kubectl-1.22.2-0.x86_64
  kubelet-1.22.2-0.x86_64
  kubernetes-cni-0.8.7-0.x86_64
  libnetfilter_cthelper-1.0.0-15.el8.x86_64
  libnetfilter_cttimeout-1.0.0-11.el8.x86_64
  libnetfilter_queue-1.0.4-3.el8.x86_64
  socat-1.7.3.3-2.el8.x86_64

Complete!

Enable kubelet at boot and start it:

[root@k8s-node1 ~]# systemctl enable kubelet
Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.
[root@k8s-node1 ~]# systemctl start kubelet

Run kubeadm join to join the cluster, using the command the master printed to the console after kubeadm init succeeded:
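kubeadm init prints the worker join command across two lines joined by a trailing backslash. If that output was saved to a file, the hypothetical helper below pulls the command back out. The file name, e.g. kubeadm-init.log, is an assumption. The helper merges the continuation lines (GNU sed syntax) and skips any --control-plane variant. Bootstrap tokens expire after 24 hours by default; if the token has expired, running kubeadm token create --print-join-command on the master prints a fresh join command instead.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): print the worker "kubeadm join" command
# from saved kubeadm init output. kubeadm wraps the command with a trailing "\",
# so continuation lines are merged first (GNU sed loop), then control-plane joins are skipped.
extract_join_cmd() {
  local init_log="$1"
  sed -e ':a' -e '/\\$/N' -e 's/\\\n[[:space:]]*//' -e 'ta' "$init_log" \
    | grep -o 'kubeadm join.*' \
    | grep -v -- '--control-plane' \
    | head -n 1
}
```

Usage: extract_join_cmd kubeadm-init.log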

[root@k8s-node1 ~]# kubeadm join 192.168.186.132:6443 --token fxvabb.7kmdvdstq1csjgbx --discovery-token-ca-cert-hash sha256:c4055de4f7fe4bef818e7a8dbede04a84ff75e6126d30d94deea28deee4abd82
[preflight] Running pre-flight checks
	[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'
	[WARNING FileExisting-tc]: tc not found in system path
[preflight] The system verification failed. Printing the output from the verification:
KERNEL_VERSION: 4.18.0-305.19.1.el8_4.x86_64
CONFIG_NAMESPACES: enabled
CONFIG_NET_NS: enabled
CONFIG_PID_NS: enabled
CONFIG_IPC_NS: enabled
CONFIG_UTS_NS: enabled
CONFIG_CGROUPS: enabled
CONFIG_CGROUP_CPUACCT: enabled
CONFIG_CGROUP_DEVICE: enabled
CONFIG_CGROUP_FREEZER: enabled
CONFIG_CGROUP_PIDS: enabled
CONFIG_CGROUP_SCHED: enabled
CONFIG_CPUSETS: enabled
CONFIG_MEMCG: enabled
CONFIG_INET: enabled
CONFIG_EXT4_FS: enabled (as module)
CONFIG_PROC_FS: enabled
CONFIG_NETFILTER_XT_TARGET_REDIRECT: enabled (as module)
CONFIG_NETFILTER_XT_MATCH_COMMENT: enabled (as module)
CONFIG_FAIR_GROUP_SCHED: enabled
CONFIG_OVERLAY_FS: enabled (as module)
CONFIG_AUFS_FS: not set - Required for aufs.
CONFIG_BLK_DEV_DM: enabled (as module)
CONFIG_CFS_BANDWIDTH: enabled
CONFIG_CGROUP_HUGETLB: enabled
CONFIG_SECCOMP: enabled
CONFIG_SECCOMP_FILTER: enabled
OS: Linux
CGROUPS_CPU: enabled
CGROUPS_CPUACCT: enabled
CGROUPS_CPUSET: enabled
CGROUPS_DEVICES: enabled
CGROUPS_FREEZER: enabled
CGROUPS_MEMORY: enabled
CGROUPS_PIDS: enabled
CGROUPS_HUGETLB: enabled
error execution phase preflight: [preflight] Some fatal errors occurred:
	[ERROR CRI]: container runtime is not running: output: Client:
 Context:    default
 Debug Mode: false
 Plugins:
  app: Docker App (Docker Inc., v0.9.1-beta3)
  buildx: Build with BuildKit (Docker Inc., v0.6.1-docker)
  scan: Docker Scan (Docker Inc., v0.8.0)

Server:
ERROR: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
errors pretty printing info
, error: exit status 1
	[ERROR Service-Docker]: docker service is not active, please run 'systemctl start docker.service'
	[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist
	[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1
	[ERROR SystemVerification]: error verifying Docker info: "Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?"
[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
To see the stack trace of this error execute with --v=5 or higher

First join attempt fails:

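The console log shows that the join failed. The errors indicate that the Docker service was not running, so, as the message suggests, I started Docker and then tried to join again. The preflight warning also recommends systemctl enable docker.service, so that Docker starts automatically after a reboot.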
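The first attempt also failed two kernel checks: bridge-nf-call-iptables did not exist, and ip_forward was not 1. Neither error reappears in the next attempt once Docker is running, but it is safer not to depend on that. The fix usually given in the Kubernetes documentation is to load the br_netfilter module (modprobe br_netfilter), put net.bridge.bridge-nf-call-iptables = 1 and net.ipv4.ip_forward = 1 in a file under /etc/sysctl.d/, and run sysctl --system. The hypothetical helper below only re-checks the two files kubeadm complained about. Its optional root-prefix argument is an assumption added purely so it can be tested against a fake directory tree.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): re-check the kernel settings kubeadm's preflight reported.
# Each file must exist and contain "1". The optional argument is a root prefix so the check
# can run against a fake tree in tests; on a real node, call it with no argument.
k8s_sysctl_ok() {
  local root="${1:-}"
  local rc=0 f
  for f in /proc/sys/net/bridge/bridge-nf-call-iptables /proc/sys/net/ipv4/ip_forward; do
    if [ ! -r "$root$f" ]; then
      echo "missing: $f" >&2
      rc=1
    elif [ "$(cat "$root$f")" != "1" ]; then
      echo "not set to 1: $f" >&2
      rc=1
    fi
  done
  return "$rc"
}
```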

[root@k8s-node1 ~]# systemctl start docker.service

Run kubeadm join again:

[root@k8s-node1 ~]# kubeadm join 192.168.186.132:6443 --token fxvabb.7kmdvdstq1csjgbx --discovery-token-ca-cert-hash sha256:c4055de4f7fe4bef818e7a8dbede04a84ff75e6126d30d94deea28deee4abd82
[preflight] Running pre-flight checks
	[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'
	[WARNING FileExisting-tc]: tc not found in system path
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
[kubelet-check] Initial timeout of 40s passed.
[kubelet-check] It seems like the kubelet isn't running or healthy.
[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.
[kubelet-check] It seems like the kubelet isn't running or healthy.
[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.
[kubelet-check] It seems like the kubelet isn't running or healthy.
[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.
[kubelet-check] It seems like the kubelet isn't running or healthy.
[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.
[kubelet-check] It seems like the kubelet isn't running or healthy.
[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.
error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition
To see the stack trace of this error execute with --v=5 or higher

Second join attempt fails:

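I checked the kubelet log with journalctl -xeu kubelet and found this message at the end: "failed to run Kubelet: misconfiguration: kubelet cgroup driver: \"systemd\" is different from docker cgroup driver: \>" (the line is cut off at that point). Sure enough~~~ it was the driver problem again... This is exactly the step I said I had skipped during environment preparation. Running docker info | grep Cgroup confirmed that Docker was using cgroupfs. With the cause found, the fix was easy, so I went straight to editing the configuration file.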
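The kubelet message above ends in a \> marker. This is most likely because journalctl -xe pipes its output through a pager that chops long lines. Adding --no-pager (for example journalctl -u kubelet --no-pager) prints complete lines, which would have shown Docker's driver name directly. The hypothetical helper below extracts the reason from "Failed to run kubelet" lines; it assumes the log format shown in the kubelet output in this article.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): print why kubelet exited.
# Reads kubelet journal output on stdin and prints the err="..." part of each
# "Failed to run kubelet" line. Feed it full-width lines (journalctl --no-pager),
# because the interactive pager truncates long lines.
kubelet_fail_reason() {
  sed -n 's/.*"Failed to run kubelet" err="\(.*\)"$/\1/p'
}
```

Usage: journalctl -u kubelet --no-pager | kubelet_fail_reason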

[root@k8s-node1 ~]# journalctl -xeu kubelet
Sep 17 19:48:32 k8s-node1 systemd[1]: Started kubelet: The Kubernetes Node Agent.
-- Subject: Unit kubelet.service has finished start-up
-- Defined-By: systemd
-- Support: https://access.redhat.com/support
--
-- Unit kubelet.service has finished starting up.
--
-- The start-up result is done.
Sep 17 19:48:32 k8s-node1 kubelet[7440]: Flag --network-plugin has been deprecated, will be removed along with dockershim.
Sep 17 19:48:32 k8s-node1 kubelet[7440]: Flag --network-plugin has been deprecated, will be removed along with dockershim.
Sep 17 19:48:32 k8s-node1 kubelet[7440]: I0917 19:48:32.978630 7440 server.go:440] "Kubelet version" kubeletVersion="v1.22.2"
Sep 17 19:48:32 k8s-node1 kubelet[7440]: I0917 19:48:32.978848 7440 server.go:868] "Client rotation is on, will bootstrap in background"
Sep 17 19:48:32 k8s-node1 kubelet[7440]: I0917 19:48:32.987218 7440 certificate_store.go:130] Loading cert/key pair from "/var/lib/kubelet/pki/kubelet-client-current.pem".
Sep 17 19:48:32 k8s-node1 kubelet[7440]: I0917 19:48:32.988180 7440 dynamic_cafile_content.go:155] "Starting controller" name="client-ca-bundle::/etc/kubernetes/pki/ca.crt"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043013 7440 server.go:687] "--cgroups-per-qos enabled, but --cgroup-root was not specified.  defaulting to /"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043220 7440 container_manager_linux.go:280] "Container manager verified user specified cgroup-root exists" cgroupRoot=[]
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043273 7440 container_manager_linux.go:285] "Creating Container Manager object based on Node Config" nodeConfig={RuntimeCgroupsName: SystemCgroupsName: KubeletCgroupsName: C>
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043290 7440 topology_manager.go:133] "Creating topology manager with policy per scope" topologyPolicyName="none" topologyScopeName="container"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043297 7440 container_manager_linux.go:320] "Creating device plugin manager" devicePluginEnabled=true
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043315 7440 state_mem.go:36] "Initialized new in-memory state store"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043348 7440 kubelet.go:314] "Using dockershim is deprecated, please consider using a full-fledged CRI implementation"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043365 7440 client.go:78] "Connecting to docker on the dockerEndpoint" endpoint="unix:///var/run/docker.sock"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.043373 7440 client.go:97] "Start docker client with request timeout" timeout="2m0s"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.050457 7440 docker_service.go:566] "Hairpin mode is set but kubenet is not enabled, falling back to HairpinVeth" hairpinMode=promiscuous-bridge
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.050489 7440 docker_service.go:242] "Hairpin mode is set" hairpinMode=hairpin-veth
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.050582 7440 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.052836 7440 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.052890 7440 docker_service.go:257] "Docker cri networking managed by the network plugin" networkPluginName="cni"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.052940 7440 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Sep 17 19:48:33 k8s-node1 kubelet[7440]: I0917 19:48:33.059135 7440 docker_service.go:264] "Docker Info" dockerInfo=&{ID:X6ZD:TABJ:FZEZ:7QCI:WQXG:OIRT:GQTY:ZDWA:KHAG:RMVD:XNLG:4LOJ Containers:0 ContainersRunning:0 ContainersPause>
Sep 17 19:48:33 k8s-node1 kubelet[7440]: E0917 19:48:33.059183 7440 server.go:294] "Failed to run kubelet" err="failed to run Kubelet: misconfiguration: kubelet cgroup driver: \"systemd\" is different from docker cgroup driver: \>
Sep 17 19:48:33 k8s-node1 systemd[1]: kubelet.service: Main process exited, code=exited, status=1/FAILURE
Sep 17 19:48:33 k8s-node1 systemd[1]: kubelet.service: Failed with result 'exit-code'.
-- Subject: Unit failed
-- Defined-By: systemd
-- Support: https://access.redhat.com/support
--
-- The unit kubelet.service has entered the 'failed' state with result 'exit-code'.

[root@k8s-node1 ~]# docker info | grep Cgroup
 Cgroup Driver: cgroupfs
 Cgroup Version: 1

Edit /usr/lib/systemd/system/docker.service, find the ExecStart line, and append --exec-opt native.cgroupdriver=systemd to it.
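The same edit can be scripted. This is a sketch only. The helper name add_systemd_cgroup_driver is hypothetical, and the helper assumes ExecStart= is a single line, as in the docker-ce unit file. Keep in mind that a Docker package upgrade may overwrite files under /usr/lib/systemd/system. A commonly used alternative is "exec-opts": ["native.cgroupdriver=systemd"] in /etc/docker/daemon.json, but use one method or the other, not both: dockerd refuses to start when the same option is given both as a flag and in daemon.json.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): append --exec-opt native.cgroupdriver=systemd
# to the ExecStart= line of a Docker unit file, unless the option is already present.
# Assumes a single-line ExecStart= (as in the docker-ce unit file). Requires GNU sed (-i).
# Afterwards: systemctl daemon-reload && systemctl restart docker
add_systemd_cgroup_driver() {
  local unit="${1:-/usr/lib/systemd/system/docker.service}"
  if grep -q 'native.cgroupdriver=systemd' "$unit"; then
    echo "already set in $unit"
    return 0
  fi
  cp "$unit" "$unit.bak"   # keep a backup next to the original
  sed -i '/^ExecStart=/ s/$/ --exec-opt native.cgroupdriver=systemd/' "$unit"
}
```

Running it twice is safe: the second call sees the option and leaves the file alone.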

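After the change, reload systemd and restart Docker, then reset kubeadm. The reset output notes that iptables rules are not cleaned up, so I flushed them as well. Finally, run docker info | grep Cgroup to confirm that the driver is now systemd.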

[root@k8s-node1 ~]# vim /usr/lib/systemd/system/docker.service
[root@k8s-node1 ~]# systemctl daemon-reload && systemctl restart docker
[root@k8s-node1 ~]# kubeadm reset -f
[preflight] Running pre-flight checks
W0917 19:51:54.229348 9629 removeetcdmember.go:80] [reset] No kubeadm config, using etcd pod spec to get data directory
[reset] No etcd config found. Assuming external etcd
[reset] Please, manually reset etcd to prevent further issues
[reset] Stopping the kubelet service
[reset] Unmounting mounted directories in "/var/lib/kubelet"
[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]
[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]
[reset] Deleting contents of stateful directories: [/var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.
If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)
to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.
Please, check the contents of the $HOME/.kube/config file.
[root@k8s-node1 ~]# iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
[root@k8s-node1 ~]# docker info | grep Cgroup
 Cgroup Driver: systemd
 Cgroup Version: 1

Run kubeadm join again to join the cluster:

[root@k8s-node1 ~]# kubeadm join 192.168.186.132:6443 --token fxvabb.7kmdvdstq1csjgbx --discovery-token-ca-cert-hash sha256:c4055de4f7fe4bef818e7a8dbede04a84ff75e6126d30d94deea28deee4abd82
[preflight] Running pre-flight checks
	[WARNING FileExisting-tc]: tc not found in system path
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

~~~Wow, it finally worked!!!

Running kubectl get node on the master shows every node as Ready.
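To check this without reading the table by eye (for example, in a script that waits for newly joined nodes), the output can be filtered. The sketch below is a hypothetical helper that reads kubectl get nodes --no-headers output on stdin. It treats any STATUS other than exactly Ready as a failure, which means cordoned nodes ("Ready,SchedulingDisabled") are reported too.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): succeed only if every node's STATUS column is "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin and prints each node that is not Ready.
# Empty input is treated as a failure, since "no nodes" is not a healthy cluster.
all_nodes_ready() {
  awk '$2 != "Ready" { print "not ready: " $1; bad = 1 }
       END { if (NR == 0) { print "no nodes in input"; exit 1 } exit (bad ? 1 : 0) }'
}
```

Usage on the master: kubectl get nodes --no-headers | all_nodes_ready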

[root@k8s-master ~]# kubectl get node
NAME         STATUS   ROLES                  AGE   VERSION
k8s-master   Ready    control-plane,master   24h   v1.22.1
k8s-node1    Ready    <none>                 15h   v1.22.2
k8s-node2    Ready    <none>                 14h   v1.22.2

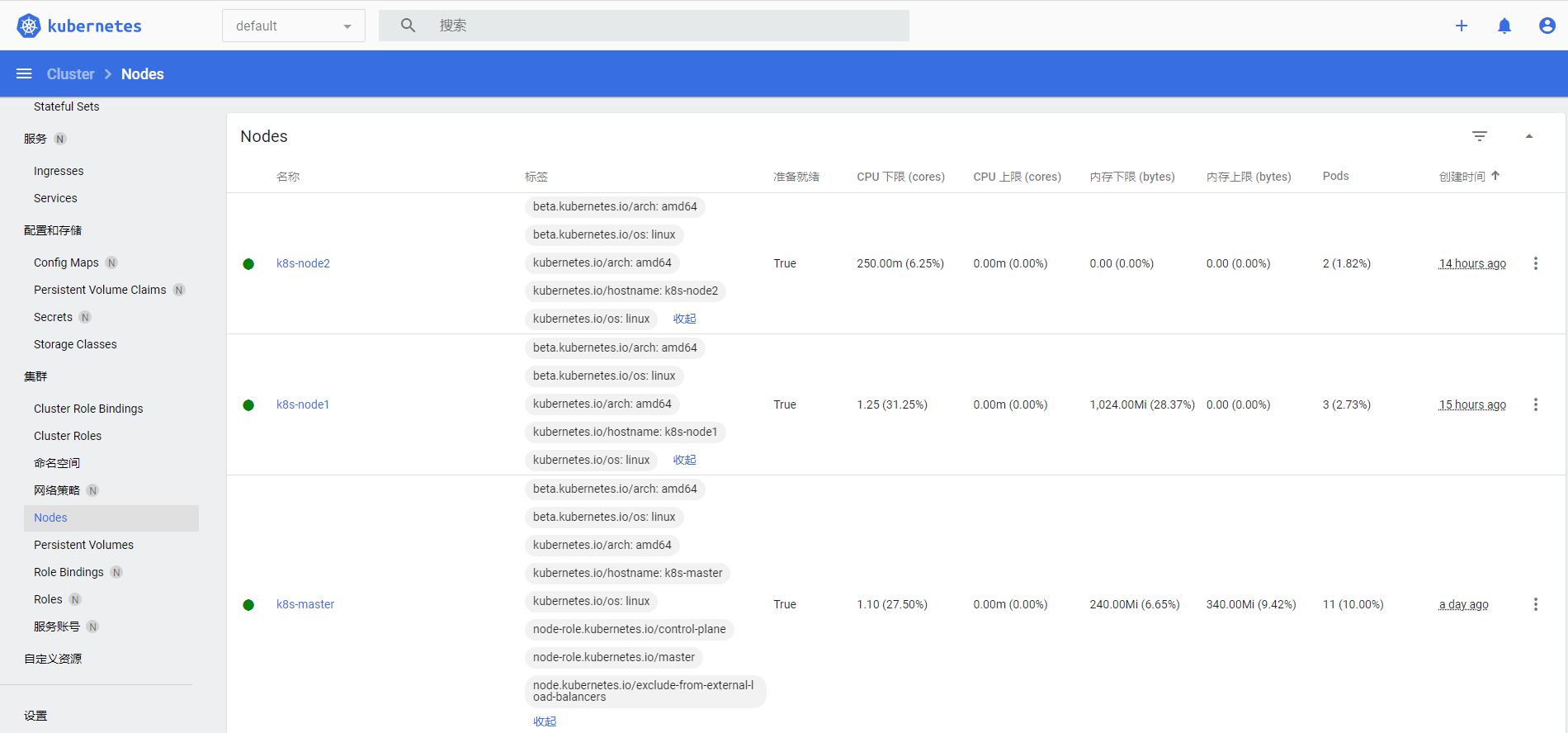

The dashboard also shows everything as Running.

The k8s cluster installation is complete. Next up: installing Istio.

~~~To be continued