13 - Spam Email Classification 2

1. Reading the data

2. Data preprocessing

3. Data splitting: training set and test set

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=0, stratify=target)
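A minimal sketch of the split above on hypothetical toy data (ten made-up messages, three of them spam, not the SMS dataset). It shows that `stratify` must receive the full label list (`target`), because `y_train` does not exist until the split has run, and that stratification keeps both classes present in each part.

```python
from sklearn.model_selection import train_test_split

# Hypothetical toy data: 10 messages, 3 of them spam (not the real SMS dataset)
data = [f"message {i}" for i in range(10)]
target = ["spam"] * 3 + ["ham"] * 7

# stratify=target keeps the spam/ham ratio roughly the same in both splits
x_train, x_test, y_train, y_test = train_test_split(
    data, target, test_size=0.2, random_state=0, stratify=target
)

print(len(x_train), len(x_test))  # 8 2
print(y_train.count("spam"), y_test.count("spam"))
```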

4. Text feature extraction

sklearn.feature_extraction.text.CountVectorizer

sklearn.feature_extraction.text.TfidfVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf2 = TfidfVectorizer()

Observing the relationship between emails and vectors

Converting vectors back to emails

4. Model selection

from sklearn.naive_bayes import GaussianNB

from sklearn.naive_bayes import MultinomialNB

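Why choose this model?

Most of the sample features are multi-valued discrete values (word occurrence counts, or TF-IDF weights derived from them), so MultinomialNB is a better fit than GaussianNB, which assumes continuous, normally distributed features.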
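A small side-by-side sketch of the two imported classifiers on hypothetical toy data. It shows the practical difference: MultinomialNB accepts the sparse word-count matrix directly, while GaussianNB needs a dense array and models each count as a Gaussian. The tiny data set is illustrative only and says nothing about accuracy on the real corpus.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import GaussianNB, MultinomialNB

# Hypothetical toy training data (not from the SMS dataset)
texts = ["win free prize now", "free cash prize", "see you at lunch", "call me after lunch"]
labels = ["spam", "spam", "ham", "ham"]

vec = CountVectorizer()
X = vec.fit_transform(texts)  # sparse word-count matrix: non-negative discrete features

mnb = MultinomialNB().fit(X, labels)          # works on sparse counts directly
gnb = GaussianNB().fit(X.toarray(), labels)   # needs dense input, assumes Gaussian features

new = vec.transform(["free prize for you"])  # "for" is unseen and simply ignored
print(mnb.predict(new), gnb.predict(new.toarray()))
```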

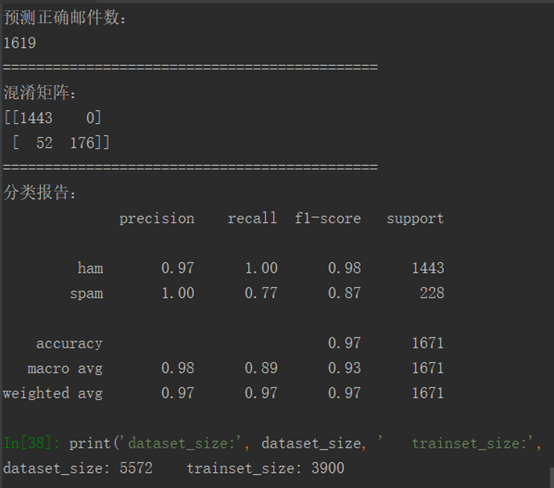

5. Model evaluation: confusion matrix and classification report

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_pred)

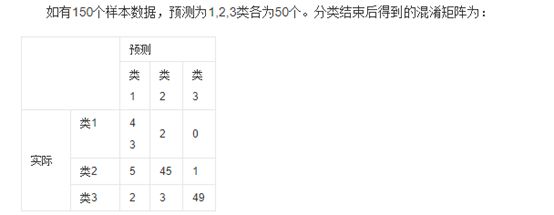

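Meaning of the confusion matrix: each row corresponds to a true class and each column to a predicted class, so the diagonal holds the correctly classified samples and the off-diagonal cells hold the errors. scikit-learn sorts the labels by default, so for ham/spam with spam as the positive class the layout is [[TN, FP], [FN, TP]].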
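A sketch with hypothetical labels that makes the cell layout explicit. Passing `labels=["ham", "spam"]` fixes the row and column order, and `ravel()` unpacks the 2x2 matrix into TN, FP, FN, TP in that order.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical labels; "spam" is treated as the positive class
y_test = ["ham", "ham", "spam", "spam", "ham", "spam"]
y_pred = ["ham", "spam", "spam", "ham", "ham", "spam"]

# Rows = true class, columns = predicted class, both in the order given by labels=
cm = confusion_matrix(y_test, y_pred, labels=["ham", "spam"])
tn, fp, fn, tp = cm.ravel()
print(cm)
print("TN", tn, "FP", fp, "FN", fn, "TP", tp)
```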

from sklearn.metrics import classification_report

What accuracy, precision, recall and F-score each mean

Classification report:

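TP (true positive): number of positive samples correctly predicted as positive

FP (false positive): false alarm; number of negative samples incorrectly predicted as positive

FN (false negative): miss; number of positive samples incorrectly predicted as negative

TN (true negative): number of negative samples correctly predicted as negative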

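Accuracy = correctly predicted samples / all samples = (TP + TN) / (TP + TN + FP + FN)

Precision = positives predicted as positive / all samples predicted as positive = TP / (TP + FP)

Recall = positives predicted as positive / all actual positives = TP / (TP + FN)

F-score = 2 × Precision × Recall / (Precision + Recall) (the F-score, here F1, is the harmonic mean of precision and recall)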
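A worked example of the four formulas on hypothetical labels, with spam as the positive class. The counts are taken straight from the definitions and then cross-checked against scikit-learn's metric functions, which use `pos_label` to choose the positive class; `classification_report` prints the same per-class figures.

```python
from sklearn.metrics import (accuracy_score, classification_report, f1_score,
                             precision_score, recall_score)

# Hypothetical labels for a worked example; "spam" is the positive class
y_test = ["ham", "ham", "spam", "spam", "ham", "spam", "spam", "ham"]
y_pred = ["ham", "spam", "spam", "ham", "spam", "spam", "spam", "ham"]

# Count the four outcomes directly from their definitions
pairs = list(zip(y_test, y_pred))
tp = sum(t == "spam" and p == "spam" for t, p in pairs)
fp = sum(t == "ham" and p == "spam" for t, p in pairs)
fn = sum(t == "spam" and p == "ham" for t, p in pairs)
tn = sum(t == "ham" and p == "ham" for t, p in pairs)

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)
print(tp, fp, fn, tn)  # 3 2 1 2
print(accuracy, precision, recall, f1)

# scikit-learn should give the same values
print(accuracy_score(y_test, y_pred),
      precision_score(y_test, y_pred, pos_label="spam"),
      recall_score(y_test, y_pred, pos_label="spam"),
      f1_score(y_test, y_pred, pos_label="spam"))
print(classification_report(y_test, y_pred))
```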

6. Comparison and summary

How does CountVectorizer compare with TfidfVectorizer for generating text features?

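CountVectorizer: considers only how often each term occurs in a text.

TfidfVectorizer: besides how often a term occurs in a text, also considers how many texts in the corpus contain that term (inverse document frequency).

This reduces the influence of frequent but uninformative terms and brings out more meaningful features.

Therefore, the more texts there are, the more noticeable TF-IDF's advantage tends to be.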
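A minimal sketch of the difference on a hypothetical corpus in which "call" appears in every document. In the first message, raw counts weight "call" and "free" equally, while TF-IDF (with scikit-learn's default smoothed IDF) gives the ubiquitous "call" a lower weight than the rarer "free".

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Hypothetical corpus: "call" occurs in every document, "free" in only one
corpus = ["call free prize", "call me later", "call mom tonight"]

count = CountVectorizer()
tfidf = TfidfVectorizer()
C = count.fit_transform(corpus).toarray()
T = tfidf.fit_transform(corpus).toarray()

col_call = count.vocabulary_["call"]
col_free = count.vocabulary_["free"]

# Raw counts: both terms occur once in document 0, so they get the same value
print(C[0, col_call], C[0, col_free])
# TF-IDF: the term found in every document is down-weighted
print(T[0, tfidf.vocabulary_["call"]], T[0, tfidf.vocabulary_["free"]])
```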

Source code:

import csv

import nltk
import numpy as np
from nltk.corpus import stopwords
from nltk.stem import WordNetLemmatizer
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.naive_bayes import MultinomialNB

# The NLTK tokenizer, stopword and WordNet data must be downloaded first via nltk.download()

# Preprocessing: tokenize, lowercase, drop stopwords and short tokens, lemmatize
def preprocessing(text):
    tokens = [word for sent in nltk.sent_tokenize(text) for word in nltk.word_tokenize(sent)]
    tokens = [token.lower() for token in tokens]  # lowercase first so capitalized stopwords are removed too
    stops = set(stopwords.words('english'))
    tokens = [token for token in tokens if token not in stops and len(token) >= 3]
    lmtzr = WordNetLemmatizer()
    tokens = [lmtzr.lemmatize(token) for token in tokens]
    return ' '.join(tokens)

# Read the dataset: each line is "label<TAB>message"
file_path = r'F:\SMSSpamCollection'  # raw string so the backslash is not treated as an escape
sms_data = []
sms_label = []
with open(file_path, 'r', encoding='utf-8') as sms:
    csv_reader = csv.reader(sms, delimiter='\t')
    for line in csv_reader:
        sms_label.append(line[0])
        sms_data.append(preprocessing(line[1]))
print(len(sms_data))

# Split into training and test sets at a 0.7:0.3 ratio (in file order), then vectorize
dataset_size = len(sms_data)
trainset_size = int(round(dataset_size * 0.7))
print('dataset_size:', dataset_size, ' trainset_size:', trainset_size)
x_train = np.array(sms_data[:trainset_size])
y_train = np.array(sms_label[:trainset_size])
x_test = np.array(sms_data[trainset_size:])  # start at trainset_size so no sample is skipped
y_test = np.array(sms_label[trainset_size:])

vectorizer = TfidfVectorizer(min_df=2, ngram_range=(1, 2), stop_words='english',
                             strip_accents='unicode', norm='l2')
# Vectorize the split sets: fit on the training set only, then transform the test set
X_train = vectorizer.fit_transform(x_train)
X_test = vectorizer.transform(x_test)

# Naive Bayes classifier
MNB = MultinomialNB()
MNB.fit(X_train, y_train)
y_pred = MNB.predict(X_test)
print("Number of correctly predicted messages:")
print((y_pred == y_test).sum())

# Model evaluation
print("=============================================")
print('Confusion matrix:')
cm = confusion_matrix(y_test, y_pred)
print(cm)
print("=============================================")
print('Classification report:')
cr = classification_report(y_test, y_pred)
print(cr)

Experimental results: