AI Large Model ChatGLM2-6B, Part 3 - Deploying ChatGLM2-6B

Clone the project

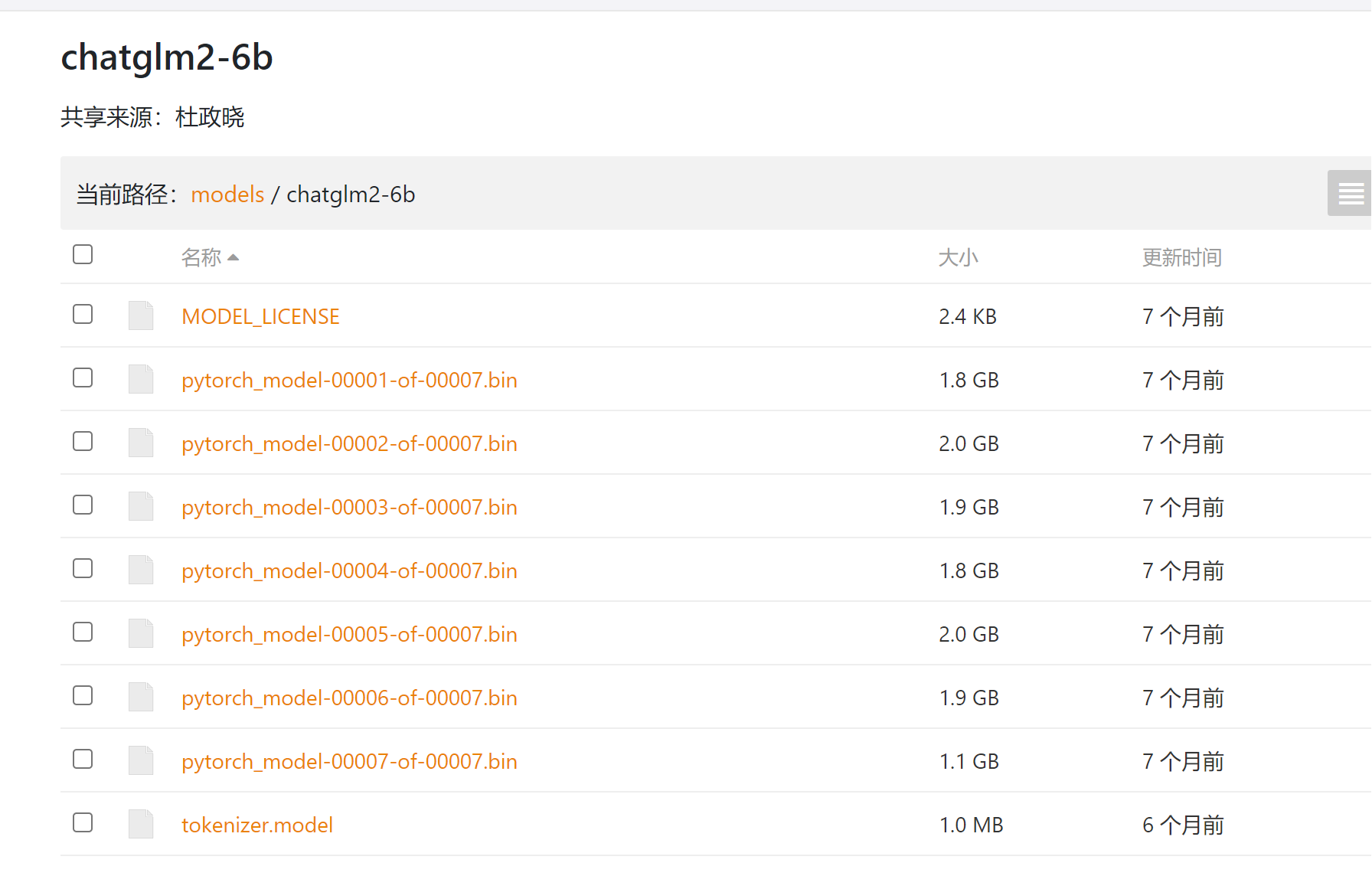

Download the model

https://cloud.tsinghua.edu.cn/d/674208019e314311ab5c/?p=%2Fchatglm2-6b&mode=list

Switch to the py39 conda environment

cd /home/chq/ChatGLM2-6B

conda activate py39

Run pip install

(py39) root@chq:/home/chq/ChatGLM2-6B# pip install -r requirements.txt

Collecting protobuf (from -r requirements.txt (line 1))

Downloading protobuf-4.25.2-cp37-abi3-manylinux2014_x86_64.whl.metadata (541 bytes)

Collecting transformers==4.30.2 (from -r requirements.txt (line 2))

Downloading transformers-4.30.2-py3-none-any.whl.metadata (113 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 113.6/113.6 kB 530.9 kB/s eta 0:00:00

Collecting cpm_kernels (from -r requirements.txt (line 3))

Downloading cpm_kernels-1.0.11-py3-none-any.whl (416 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 416.6/416.6 kB 890.6 kB/s eta 0:00:00

Collecting torch>=2.0 (from -r requirements.txt (line 4))

Downloading torch-2.1.2-cp39-cp39-manylinux1_x86_64.whl.metadata (25 kB)

Collecting gradio (from -r requirements.txt (line 5))

Downloading gradio-4.14.0-py3-none-any.whl.metadata (15 kB)

Collecting mdtex2html (from -r requirements.txt (line 6))

Downloading mdtex2html-1.2.0-py3-none-any.whl (13 kB)

Collecting sentencepiece (from -r requirements.txt (line 7))

Downloading sentencepiece-0.1.99-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.3 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.3/1.3 MB 193.1 kB/s eta 0:00:00

Collecting accelerate (from -r requirements.txt (line 8))

Downloading accelerate-0.26.1-py3-none-any.whl.metadata (18 kB)

Collecting sse-starlette (from -r requirements.txt (line 9))

Downloading sse_starlette-1.8.2-py3-none-any.whl.metadata (5.4 kB)

Collecting streamlit>=1.24.0 (from -r requirements.txt (line 10))

Downloading streamlit-1.30.0-py2.py3-none-any.whl.metadata (8.2 kB)

Collecting filelock (from transformers==4.30.2->-r requirements.txt (line 2))

Downloading filelock-3.13.1-py3-none-any.whl.metadata (2.8 kB)

Collecting huggingface-hub<1.0,>=0.14.1 (from transformers==4.30.2->-r requirements.txt (line 2))

Downloading huggingface_hub-0.20.2-py3-none-any.whl.metadata (12 kB)

Collecting numpy>=1.17 (from transformers==4.30.2->-r requirements.txt (line 2))

Downloading numpy-1.26.3-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (61 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 61.2/61.2 kB 106.6 kB/s eta 0:00:00

Collecting packaging>=20.0 (from transformers==4.30.2->-r requirements.txt (line 2))

Downloading packaging-23.2-py3-none-any.whl.metadata (3.2 kB)

Collecting pyyaml>=5.1 (from transformers==4.30.2->-r requirements.txt (line 2))

Downloading PyYAML-6.0.1-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (2.1 kB)

Collecting regex!=2019.12.17 (from transformers==4.30.2->-r requirements.txt (line 2))

Downloading regex-2023.12.25-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (40 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 40.9/40.9 kB 177.9 kB/s eta 0:00:00

Collecting requests (from transformers==4.30.2->-r requirements.txt (line 2))
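The launch code later in this article calls `.cuda()` on the model, so once the requirements are installed it can help to confirm that PyTorch actually sees a GPU. The following is a minimal sketch; the helper name `cuda_status` is hypothetical and not part of the ChatGLM2-6B repository.

```python
def cuda_status():
    """Describe whether PyTorch is importable and can see a CUDA device."""
    try:
        import torch  # installed by requirements.txt (torch>=2.0)
    except ImportError:
        return "torch is not installed in this environment"
    if not torch.cuda.is_available():
        return "torch is installed but no CUDA device is visible"
    return f"{torch.cuda.device_count()} CUDA device(s) visible"


if __name__ == "__main__":
    print(cuda_status())
```

If the message reports that no CUDA device is visible, the `.cuda()` call in web_demo.py is likely to fail, so the driver and PyTorch build are worth checking before launching.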

Launch

Before launching, the main change is to point the model path at the locally downloaded model, for example in web_demo.py:

tokenizer = AutoTokenizer.from_pretrained("/home/chq/ChatGLM2-6B/module", trust_remote_code=True)

model = AutoModel.from_pretrained("/home/chq/ChatGLM2-6B/module", trust_remote_code=True).cuda()

# Multi-GPU support: replace the line above with the two lines below, and set num_gpus to the number of GPUs you actually have

# from utils import load_model_on_gpus

# model = load_model_on_gpus("THUDM/chatglm2-6b", num_gpus=2)

model = model.eval()

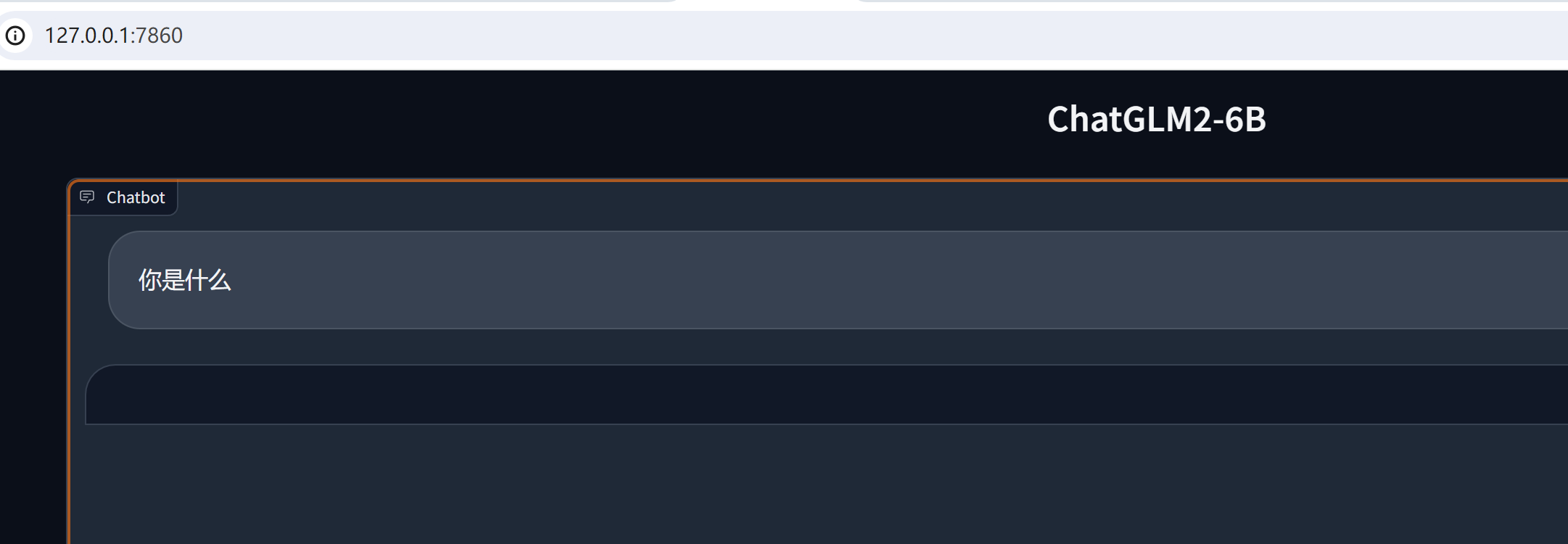
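Since the demo now loads from a local directory, a quick check that the download is complete can save a failed launch. The sketch below is an illustration only: the helper `missing_model_files` is hypothetical, and the file names in `EXPECTED_FILES` are an assumption about what a Hugging Face-style checkpoint directory usually contains, so adjust them to match the files you actually downloaded.

```python
from pathlib import Path

# Assumption: files a Hugging Face-style checkpoint directory usually contains.
EXPECTED_FILES = ("config.json", "tokenizer_config.json")


def missing_model_files(model_dir, expected=EXPECTED_FILES):
    """Return the expected file names that are absent from model_dir."""
    root = Path(model_dir)
    if not root.is_dir():
        # A missing directory means every expected file is missing.
        return list(expected)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    model_dir = "/home/chq/ChatGLM2-6B/module"  # path used in this article
    missing = missing_model_files(model_dir)
    if missing:
        print(f"{model_dir} is missing: {', '.join(missing)}")
    else:
        print(f"{model_dir} contains the expected files")
```

An empty result only means the listed files exist; it does not verify that the weight files themselves downloaded completely.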

Launch option 1 (Gradio-based web demo):

python web_demo.py

Option 2 (Streamlit-based web demo):

streamlit run web_demo2.py

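Error

The model does answer when you ask a question, but the answer does not appear in the web page. This is caused by the version of the gradio component; see https://github.com/THUDM/ChatGLM2-6B/issues/570. Reinstalling an older gradio release resolves it: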

pip uninstall gradio

pip install gradio==3.39.0
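To see whether an environment is affected before launching, the installed gradio version can be inspected from Python. This is a rough sketch: the helper names are hypothetical, and the cutoff at major version 4 is an assumption based only on this article (gradio-4.14.0 showed the problem, 3.39.0 did not), not a documented boundary.

```python
from importlib.metadata import PackageNotFoundError, version


def major_version(version_string):
    """Return the leading integer of a version string such as '4.14.0'."""
    return int(version_string.split(".")[0])


def needs_downgrade(version_string, first_bad_major=4):
    # Assumption: the missing-answer problem appears from gradio 4.x onward.
    return major_version(version_string) >= first_bad_major


if __name__ == "__main__":
    try:
        installed = version("gradio")
    except PackageNotFoundError:
        print("gradio is not installed in this environment")
    else:
        if needs_downgrade(installed):
            print(f"gradio {installed} found; consider pip install gradio==3.39.0")
        else:
            print(f"gradio {installed} found; no downgrade suggested")
```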