Hadoop Optimization: NameNode Memory Tuning

NameNode Memory Configuration

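How do you configure NameNode memory in Hadoop 2.x?

The NameNode heap defaults to 2000 MB. If your server has 4 GB of RAM, you can typically give the NameNode 3 GB and leave the remaining 1 GB for everything else the server needs to keep running (the operating system, the DataNode process, and so on).

Set it in hadoop-env.sh: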

HADOOP_NAMENODE_OPTS=-Xmx3072m
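The "leave 1 GB for the rest of the server" rule above can be sketched as a small shell helper. The function name `nn_heap_mb` and the 1024 MB default reserve are illustrative assumptions, not part of Hadoop; treat this as a starting point rather than a sizing formula.

```shell
# Hypothetical helper: suggest a NameNode -Xmx value (in MB) by
# subtracting a reserve for the OS and other daemons from total RAM.
nn_heap_mb() {
  local total_mb=$1
  local reserve_mb=${2:-1024}   # assumed reserve; tune per node
  if [ "$total_mb" -le "$reserve_mb" ]; then
    echo "total memory must exceed the reserve" >&2
    return 1
  fi
  echo $(( total_mb - reserve_mb ))
}

# Example: a 4 GB server with 1 GB reserved.
echo "HADOOP_NAMENODE_OPTS=-Xmx$(nn_heap_mb 4096)m"
```

For the 4 GB server in the example this reproduces the 3072 MB setting shown above.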

How do you configure NameNode memory in Hadoop 3.x?

By default the heap is sized automatically by the JVM. hadoop-env.sh describes this:

# The maximum amount of heap to use (Java -Xmx). If no unit
# is provided, it will be converted to MB. Daemons will
# prefer any Xmx setting in their respective _OPT variable.
# There is no default; the JVM will autoscale based upon machine
# memory size.
# export HADOOP_HEAPSIZE_MAX=

# The minimum amount of heap to use (Java -Xms). If no unit
# is provided, it will be converted to MB. Daemons will
# prefer any Xms setting in their respective _OPT variable.
# There is no default; the JVM will autoscale based upon machine
# memory size.
# export HADOOP_HEAPSIZE_MIN=

HADOOP_NAMENODE_OPTS=-Xmx102400m

How do you check how much memory the NameNode is using?

[atguigu@hadoop102 ~]$ jps

3088 NodeManager

2611 NameNode

3271 JobHistoryServer

2744 DataNode

3579 Jps

[atguigu@hadoop102 ~]$ jmap -heap 2611

Heap Configuration:

MaxHeapSize = 1031798784 (984.0MB)
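On JDK 9 and later `jmap -heap` is no longer available (`jhsdb jmap --heap --pid <pid>` replaces it). Another way to read the same value is `jinfo -flag MaxHeapSize <pid>`, which prints the limit in bytes. The sketch below converts such a line to megabytes; the helper name `max_heap_mb` is hypothetical, and the sample string simply reuses the byte value from the output above.

```shell
# Hypothetical helper: convert a "-XX:MaxHeapSize=<bytes>" line, as printed
# by `jinfo -flag MaxHeapSize <pid>`, into whole megabytes.
max_heap_mb() {
  local line=$1
  local bytes=${line##*=}        # keep everything after the last '='
  echo $(( bytes / 1024 / 1024 ))
}

# Sample input using the byte count from the jmap output above.
max_heap_mb "-XX:MaxHeapSize=1031798784"   # prints 984
```

Against a live process this would be called as `max_heap_mb "$(jinfo -flag MaxHeapSize 2611)"`, where 2611 is the NameNode PID reported by `jps`.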

How do you check how much memory the DataNode is using?

[atguigu@hadoop102 ~]$ jmap -heap 2744

Heap Configuration:

MaxHeapSize = 1031798784 (984.0MB)

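By default, the DataNode and NameNode heaps are both sized automatically, and they come out equal. That is not ideal: if the two together exceed the node's total memory, the processes may crash.

Rule-of-thumb guidance: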
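The concern above can be turned into a quick sanity check before starting the daemons. This is a minimal sketch: the function name `heaps_fit` and the 1024 MB OS reserve are assumptions for illustration, and it only compares configured heap limits, not actual process footprints (which also include off-heap memory).

```shell
# Hypothetical check: do the NameNode and DataNode heaps, plus a reserve
# for the OS, fit into the node's physical memory? All values are in MB.
heaps_fit() {
  local total_mb=$1
  local nn_mb=$2
  local dn_mb=$3
  local reserve_mb=${4:-1024}   # assumed OS reserve
  [ $(( nn_mb + dn_mb + reserve_mb )) -le "$total_mb" ]
}

# Example: a 4 GB node with the two 984 MB heaps shown above.
if heaps_fit 4096 984 984; then
  echo "heaps fit"
else
  echo "heaps exceed node memory"
fi
```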

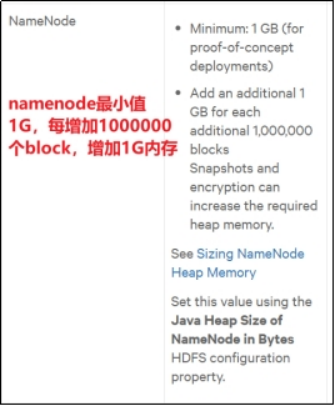

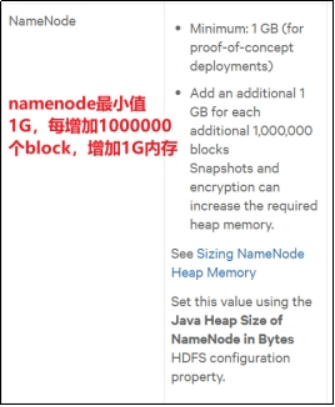

https://docs.cloudera.com/documentation/enterprise/6/release-notes/topics/rg_hardware_requirements.html#concept_fzz_dq4_gbb

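NameNode: add 1 GB of memory for every additional 1 million blocks.

DataNode: add 1 GB of memory for every additional 1 million replicas.

Both daemons are essentially managing metadata, so you can think of it this way: each time the number of units a daemon manages grows by another million, add 1 GB of memory.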
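As a rough illustration of this rule of thumb, the sketch below rounds the managed unit count up to the next million and returns that many gigabytes. The function name `heap_gb_for_units` and the 1 GB floor are assumptions made for the example; check the linked Cloudera page for the exact baseline before relying on the numbers.

```shell
# Hypothetical estimator: roughly 1 GB of heap per million managed units
# (blocks for the NameNode, replicas for a DataNode).
heap_gb_for_units() {
  local units=$1
  local per_gb=1000000
  local gb=$(( (units + per_gb - 1) / per_gb ))   # round up to whole GB
  if [ "$gb" -lt 1 ]; then
    gb=1                                          # assumed 1 GB floor
  fi
  echo "$gb"
}

# Example: 2.5 million blocks.
heap_gb_for_units 2500000   # prints 3
```

The result can then be written as an `-Xmx` value in hadoop-env.sh, for example `-Xmx3g` for the case above.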

The actual change, in hadoop-env.sh:

export HDFS_NAMENODE_OPTS="-Dhadoop.security.logger=INFO,RFAS -Xmx1024m"

export HDFS_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS -Xmx1024m"
