Standalone Cluster Connection Failure Caused by Incompatible Spark Versions

I. Symptoms of the Spark version mismatch

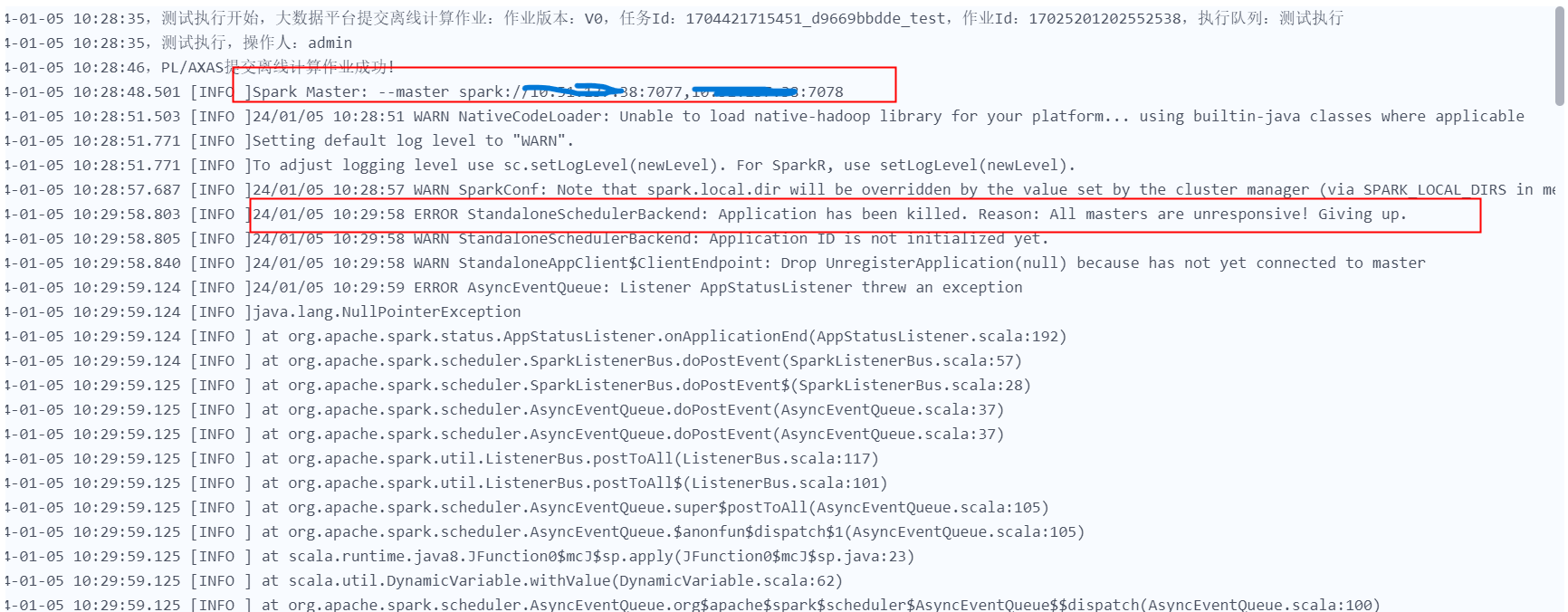

When connecting to a Spark standalone cluster in client mode, the client reports that none of the Spark master nodes respond.

II. Troubleshooting approach

The client-side logs contain nothing useful, so the next step is to look at the Spark master's log. Running docker logs spark-master prints the log of the spark-master Docker container:

24/01/09 09:27:40 INFO Master: Registering app SparkSQL::172.25.222.2

24/01/09 09:27:40 INFO Master: Registered app SparkSQL::172.25.222.2 with ID app-20240109092740-0000

24/01/09 09:27:40 INFO Master: Start scheduling for app app-20240109092740-0000 with rpId: 0

24/01/09 09:27:40 INFO Master: Launching executor app-20240109092740-0000/0 on worker worker-20240109084853-172.25.222.4-36116

24/01/09 09:27:40 INFO Master: Start scheduling for app app-20240109092740-0000 with rpId: 0

24/01/09 09:28:46 ERROR TransportRequestHandler: Error while invoking RpcHandler#receive() for one-way message.

java.io.InvalidClassException: org.apache.spark.deploy.ApplicationDescription; local class incompatible: stream classdesc serialVersionUID = 1574364215946805297, local class serialVersionUID = -6826680068825109317

at java.io.ObjectStreamClass.initNonProxy(ObjectStreamClass.java:616)

at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1630)

at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1521)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1781)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2018)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1942)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1808)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:373)

at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:87)

at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:123)

at org.apache.spark.rpc.netty.NettyRpcEnv.$anonfun$deserialize$2(NettyRpcEnv.scala:299)

at scala.util.DynamicVariable.withValue(DynamicVariable.scala:62)

at org.apache.spark.rpc.netty.NettyRpcEnv.deserialize(NettyRpcEnv.scala:352)

at org.apache.spark.rpc.netty.NettyRpcEnv.$anonfun$deserialize$1(NettyRpcEnv.scala:298)

at scala.util.DynamicVariable.withValue(DynamicVariable.scala:62)

at org.apache.spark.rpc.netty.NettyRpcEnv.deserialize(NettyRpcEnv.scala:298)

at org.apache.spark.rpc.netty.RequestMessage$.apply(NettyRpcEnv.scala:646)

at org.apache.spark.rpc.netty.NettyRpcHandler.internalReceive(NettyRpcEnv.scala:697)

at org.apache.spark.rpc.netty.NettyRpcHandler.receive(NettyRpcEnv.scala:689)

at org.apache.spark.network.server.TransportRequestHandler.processOneWayMessage(TransportRequestHandler.java:274)

at org.apache.spark.network.server.TransportRequestHandler.handle(TransportRequestHandler.java:111)

at org.apache.spark.network.server.TransportChannelHandler.channelRead0(TransportChannelHandler.java:140)

at org.apache.spark.network.server.TransportChannelHandler.channelRead0(TransportChannelHandler.java:53)

at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:99)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:444)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:412)

at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:442)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:412)

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:444)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:412)

at org.apache.spark.network.util.TransportFrameDecoder.channelRead(TransportFrameDecoder.java:102)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:444)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:412)

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:440)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:788)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:724)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:650)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:562)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:997)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.lang.Thread.run(Thread.java:745)

24/01/09 09:29:06 ERROR TransportRequestHandler: Error while invoking RpcHandler#receive() for one-way message.

java.io.InvalidClassException: org.apache.spark.deploy.ApplicationDescription; local class incompatible: stream classdesc serialVersionUID = 1574364215946805297, local class serialVersionUID = -6826680068825109317

(stack trace identical to the first occurrence above, omitted)

24/01/09 09:29:26 ERROR TransportRequestHandler: Error while invoking RpcHandler#receive() for one-way message.

java.io.InvalidClassException: org.apache.spark.deploy.ApplicationDescription; local class incompatible: stream classdesc serialVersionUID = 1574364215946805297, local class serialVersionUID = -6826680068825109317

(stack trace identical to the first occurrence above, omitted)

24/01/09 09:29:46 INFO Master: 172.25.222.3:39020 got disassociated, removing it.

24/01/09 09:29:46 INFO Master: 97f5a5d12509:38871 got disassociated, removing it.
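A log this long is easier to read once the stack frames are filtered out. The following is a minimal sketch, not part of the original investigation: the helper name summarize_exceptions is made up for illustration, and it only assumes that exception headers start a line in the usual Java form (fully qualified class name followed by a colon).

```shell
#!/usr/bin/env bash
# Summarize a Spark log read from stdin: print each distinct Java exception
# header line together with how many times it occurs, most frequent first.
# (summarize_exceptions is a hypothetical helper name.)
summarize_exceptions() {
  grep -E '^[[:space:]]*[A-Za-z_][A-Za-z0-9_.$]*(Exception|Error):' \
    | sed -E 's/^[[:space:]]+//' \
    | sort | uniq -c | sort -rn
}

# Usage, assuming the container name used in this post:
#   docker logs spark-master 2>&1 | summarize_exceptions
```

For the log above this should collapse the three traces into a single InvalidClassException line with its count, which makes the repeated failure easier to spot.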

III. Error analysis

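The master's error log above shows that the client (172.25.222.2) did reach the master's two nodes (172.25.222.3 and, judging by the last log line, 97f5a5d12509); the master later found the client disassociated and removed it. So the connection from the client to the master works, and the problem is more likely in the master connecting back to the client.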

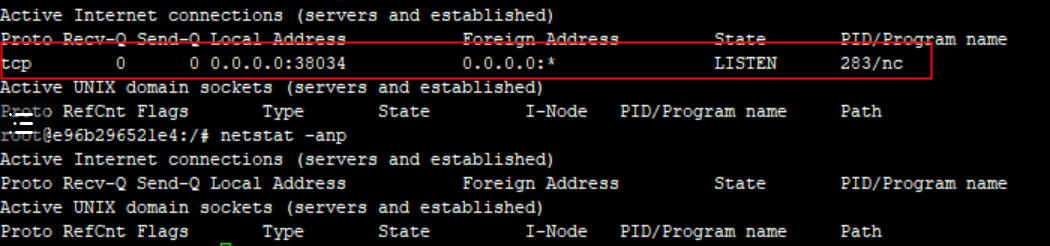

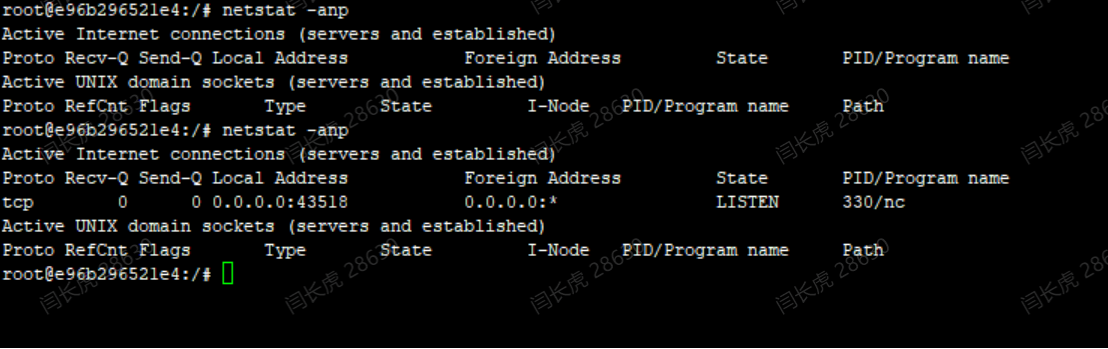

3.1 Using nc to simulate a listening port and connecting back from the master to the client

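Because the client disconnects shortly after connecting to the master, the application never holds a port for long. nc is therefore used to stand in for an application listening on an arbitrary port, and telnet <ip> <port> is run from the master's container to talk to the client.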

1. Enter the container

docker exec -it spark-master bash

2. Listen on an arbitrary port with nc

nc -lk 30000

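The port nc actually listens on differs from the one specified. Has the port shifted? Could that be why the port reported when the master connects to the client differs from the port actually in use?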
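One way to confirm which port a listener really holds is to inspect the listening sockets. The sketch below is an assumption-laden illustration rather than the method used in this post: it assumes the iproute2 ss tool is available in the container (it may need to be installed), and is_listening_on is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `ss -ltn` output on stdin and succeed only if some socket is
# listening on the given TCP port (the local address is the 4th column).
# (is_listening_on is a hypothetical helper name.)
is_listening_on() {
  local port=$1
  awk -v p="$port" '$1 == "LISTEN" && $4 ~ (":" p "$") { found = 1 } END { exit !found }'
}

# Usage inside the container (assumes `ss` is installed there):
#   ss -ltn | is_listening_on 30000 && echo "bound to 30000" || echo "not listening on 30000"
```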

3. Find out why the ports differ

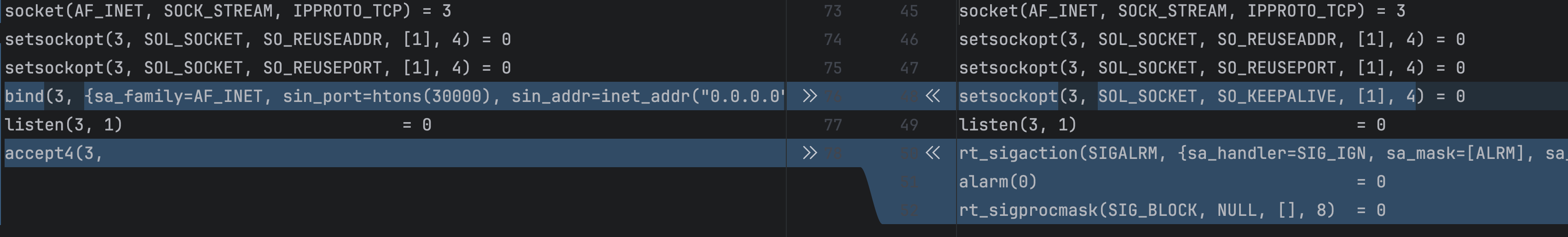

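Investigation showed that the behavior depends on the Docker image: lidaxi2019/webecho:0.1 behaves correctly, while ubuntu:18.04 shifts the port. The two images ship different nc builds, with different libraries and versions. With the nc in the image I use, binding a specific port requires the following command: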

nc -lk -p 30000

Otherwise the two behave differently: the bind system call is missing. With -p the port binds correctly, which rules out the port shift as the cause.
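Once the listener is bound to the intended port, the reverse connection can be probed without telnet. This is a minimal sketch under stated assumptions: it relies on bash's /dev/tcp redirection and the coreutils timeout command being present in the container, and can_connect is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Succeed if a TCP connection to host:port can be opened within 3 seconds.
# Uses bash's built-in /dev/tcp redirection, so telnet need not be installed.
# (can_connect is a hypothetical helper name.)
can_connect() {
  local host=$1 port=$2
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Usage from the side that initiates the connection, e.g. the master container,
# with the client IP from the log and the port given to nc:
#   can_connect 172.25.222.2 30000 && echo reachable || echo unreachable
```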

4. Back to the serialization error

24/01/09 09:29:26 ERROR TransportRequestHandler: Error while invoking RpcHandler#receive() for one-way message.

java.io.InvalidClassException: org.apache.spark.deploy.ApplicationDescription; local class incompatible: stream classdesc serialVersionUID = 1574364215946805297, local class serialVersionUID = -6826680068825109317

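The blog post below was a great help. It points out that the client and the master run different Spark versions, so the serialVersionUID of the class compiled into the client does not match that of the class on the master.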

https://blog.csdn.net/u013124704/article/details/51437468

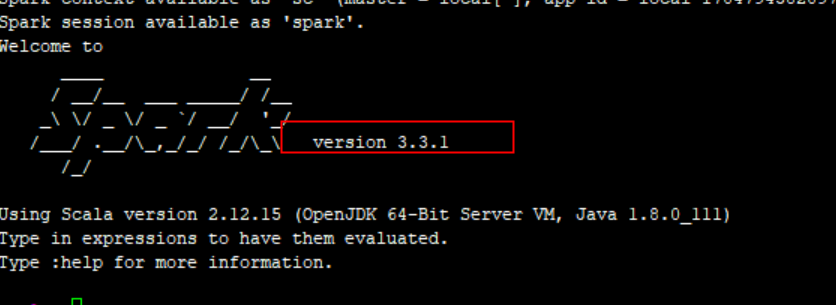

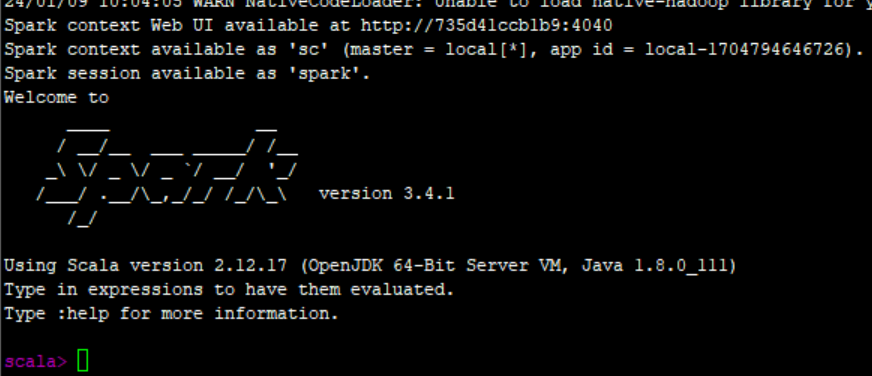
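The two numbers in the exception message are the whole story: "stream classdesc serialVersionUID" is the ID written by the sender, and "local class serialVersionUID" is the ID of the class loaded by the receiver (here, the master). A small sketch for pulling them out of a log follows; extract_uid_mismatch is a hypothetical helper name, and the pattern assumes the message format shown in the log above.

```shell
#!/usr/bin/env bash
# Read log text on stdin; for every InvalidClassException line print
#   <class> stream=<uid> local=<uid>
# "stream" is the UID written by the sender, "local" is the UID of the
# class loaded by the receiver. Duplicate lines are collapsed.
# (extract_uid_mismatch is a hypothetical helper name.)
extract_uid_mismatch() {
  sed -nE 's/^.*InvalidClassException: ([^;]+); local class incompatible: stream classdesc serialVersionUID = (-?[0-9]+), local class serialVersionUID = (-?[0-9]+).*$/\1 stream=\2 local=\3/p' \
    | sort -u
}

# Usage, assuming the container name used in this post:
#   docker logs spark-master 2>&1 | extract_uid_mismatch
```

If the two values differ for a Spark class such as org.apache.spark.deploy.ApplicationDescription, the two sides are most likely running different Spark builds.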

5. Check the version difference

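Entering the Spark bin directory in both the client and master containers and running sh spark-shell showed that the two versions did indeed differ. After changing the client to 3.4.1 as well, the problem was solved. That even minor Spark releases differ this much suggests Spark is becoming less and less friendly to developers.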
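The version check can also be scripted instead of read off the spark-shell banner by eye. The sketch below rests on several assumptions: spark-submit --version prints a banner containing "version X.Y.Z", spark-submit is on PATH in both containers, and spark-client is a made-up container name standing in for the real client container. Both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Extract the Spark version from the banner text printed by
# `spark-submit --version` or `spark-shell` (read from stdin).
# The "Using Scala version ..." line is skipped so it is not mistaken for it.
spark_version_from_banner() {
  grep -v 'Scala version' \
    | grep -oE 'version [0-9]+(\.[0-9]+)+' \
    | head -n 1 \
    | cut -d ' ' -f 2
}

# Succeed only when both version strings are non-empty and identical.
same_spark_version() {
  [ -n "$1" ] && [ "$1" = "$2" ]
}

# Usage ("spark-client" is a hypothetical container name):
#   master=$(docker exec spark-master spark-submit --version 2>&1 | spark_version_from_banner)
#   client=$(docker exec spark-client spark-submit --version 2>&1 | spark_version_from_banner)
#   same_spark_version "$master" "$client" || echo "mismatch: $master vs $client"
```

Running a check like this before submitting jobs would surface the mismatch without having to read the master's stack traces.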