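Building a FastDFS Distributed File System Cluster

FastDFS is a distributed file storage system built for Internet applications and is well suited to storing user images, videos, documents, and similar files. For that kind of workload it has clear advantages over other distributed file systems. For simplicity, FastDFS does not split files into blocks, so it is a poor fit for distributed computing scenarios.

Production environments usually hold large volumes of data, so most teams turn to distributed storage, mainly to address the following:

- Massive data storage

- High data availability (redundant copies)

- Good read/write performance and load balancing

- Multi-platform and multi-language support

- High concurrency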

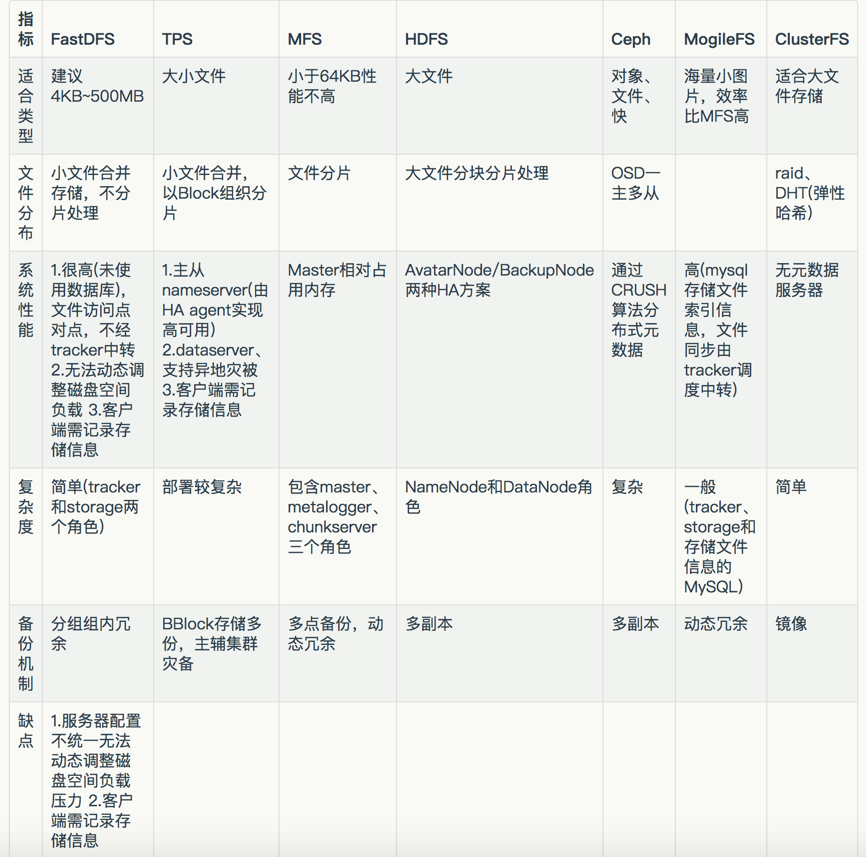

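Comparison of common distributed storage systems

FastDFS components and principles

Introduction to FastDFS

FastDFS is an open-source, lightweight distributed file system written in C. It runs on Unix-like systems such as Linux, FreeBSD, and AIX, and addresses large-scale storage and read/write load balancing. It is suited to small and medium files between 4 KB and 500 MB, for example on image sites, short-video sites, document stores, and app download sites. UC, JD.com, Alipay, Xunlei, and Kugou all use it; UC builds its network drive, advertising, and app download services on FastDFS. Like MogileFS, HDFS, and TFS, FastDFS is not a system-level distributed file system but an application-level distributed file storage service.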

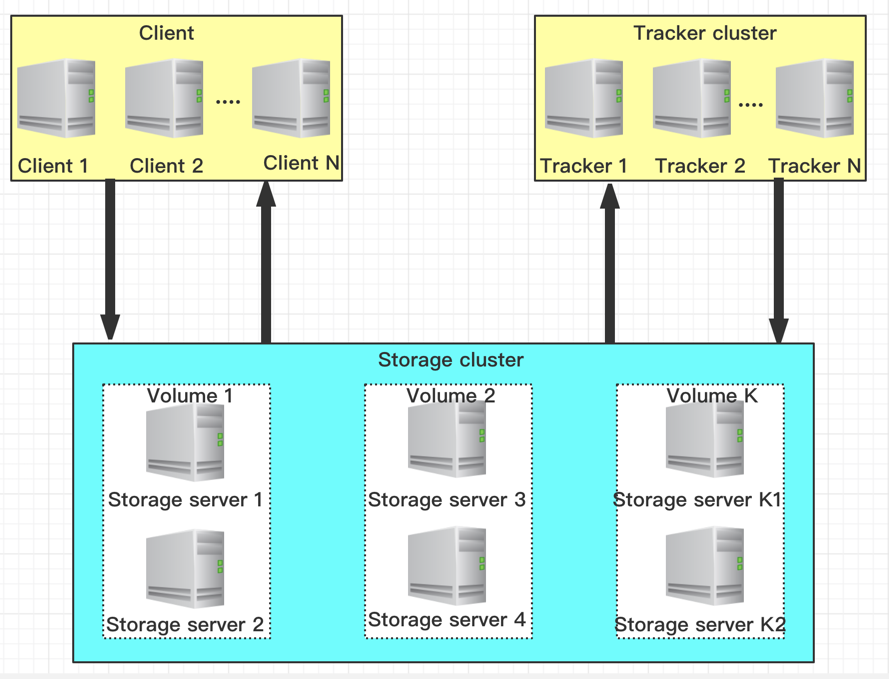

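FastDFS architecture

A FastDFS deployment has three roles: the tracker server, the storage server, and the client.

tracker server: the tracker handles scheduling and load balancing. It manages all storage servers and groups. After starting, each storage connects to the trackers, reports which group it belongs to, and keeps sending periodic heartbeats; from these heartbeats the tracker builds a group --> [storage server list] mapping. The tracker holds very little metadata and keeps all of it in memory. Because that metadata is generated entirely from what the storages report, the tracker does not need to persist any data itself. Trackers are peers, so scaling out is easy: just add more trackers. Every tracker receives storage heartbeats and builds the metadata it needs to serve reads and writes. Compared with master-slave architectures, there is no single point of failure and the tracker does not become a bottleneck, because the file data itself is transferred directly between the client and an available storage server.

storage server: the storage server provides capacity and replication. Storage is organized in groups. Each group can contain several storage servers that hold copies of the same data, and the usable capacity of a group is that of its smallest storage server, so the servers in a group should be configured identically. Organizing storage by group makes application isolation, load balancing, and per-group replica counts straightforward.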
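The capacity rule above can be sketched in a few lines of Python. This is only an illustration: the function names and the sizes are made up, and the assumption that groups simply add up (because they hold disjoint data) is mine rather than something stated in this article.

```python
from typing import Dict, List


def group_capacity_mb(storage_capacities_mb: List[int]) -> int:
    """Usable capacity of one group.

    Every member of a group holds a full copy of the group's data,
    so the smallest member bounds the whole group.
    """
    if not storage_capacities_mb:
        raise ValueError("a group needs at least one storage server")
    return min(storage_capacities_mb)


def cluster_capacity_mb(groups: Dict[str, List[int]]) -> int:
    """Usable capacity of the cluster, assuming groups hold disjoint data."""
    return sum(group_capacity_mb(members) for members in groups.values())


# Hypothetical disk sizes in MB, chosen only for illustration.
example_groups = {
    "group1": [500_000, 400_000],  # bounded by the 400,000 MB server
    "group2": [800_000, 800_000],
}
print(cluster_capacity_mb(example_groups))
```

The mismatched servers in group1 show why identical hardware within a group is recommended: the extra 100,000 MB on the larger machine is never used.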

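Drawbacks: a group's capacity is limited by the storage capacity of a single machine, and when a machine in the group fails, its data can only be restored by resyncing from the other machines in the group (replace the disk, remount it, and restart fdfs_storaged).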

Group store policies

- round robin (rotate across groups)

- load balance (upload to the group with the most free space)

- specify group (upload to a named group)

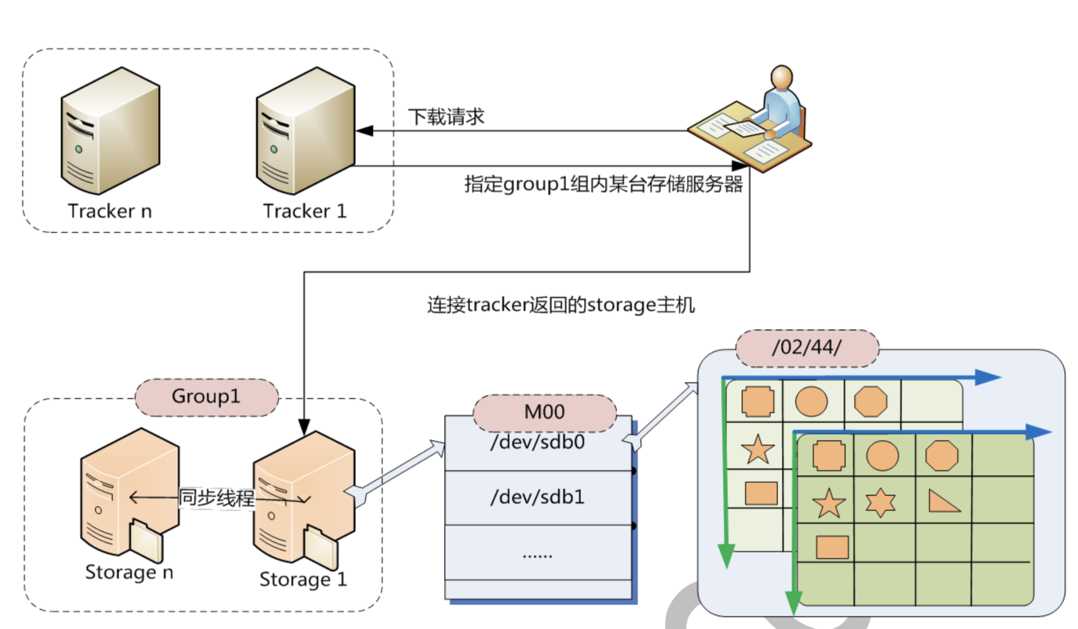

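Within a group, each storage relies on the local file system. A storage can be configured with several data directories: leave the disks un-RAIDed, mount each one at its own directory, and list those directories as the storage's data directories.

When a storage receives a write request, it picks one of its data directories according to the configured rule. To keep any single directory from holding too many files, the storage creates two levels of subdirectories in each data directory on first start, 256 per level, 65,536 in total. A new file is routed by hash to one of these subdirectories and written there directly as an ordinary local file.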
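As a rough illustration of the two-level layout, the sketch below computes the number of leaf directories and routes a file name to one of them. CRC32 is used purely as a stand-in hash; FastDFS uses its own hashing, so the directories chosen here will not match a real storage server. The function names are hypothetical.

```python
import zlib

# Matches subdir_count_per_path = 256 in storage.conf.
SUBDIRS_PER_LEVEL = 256


def total_leaf_dirs(per_level: int = SUBDIRS_PER_LEVEL) -> int:
    """Number of second-level directories under one data directory."""
    return per_level * per_level


def route_to_subdir(file_name: str, per_level: int = SUBDIRS_PER_LEVEL) -> str:
    """Map a file name to a two-level directory such as '3F/A2'.

    Assumption: CRC32 stands in for FastDFS's real hash function.
    """
    h = zlib.crc32(file_name.encode("utf-8"))
    first = (h >> 16) % per_level   # first-level directory index
    second = h % per_level          # second-level directory index
    return f"{first:02X}/{second:02X}"


print(total_leaf_dirs())  # 65536
print(route_to_subdir("example.png"))
```

Because the mapping is deterministic, the same name always lands in the same directory, and with a reasonable hash the files spread evenly over all 65,536 directories.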

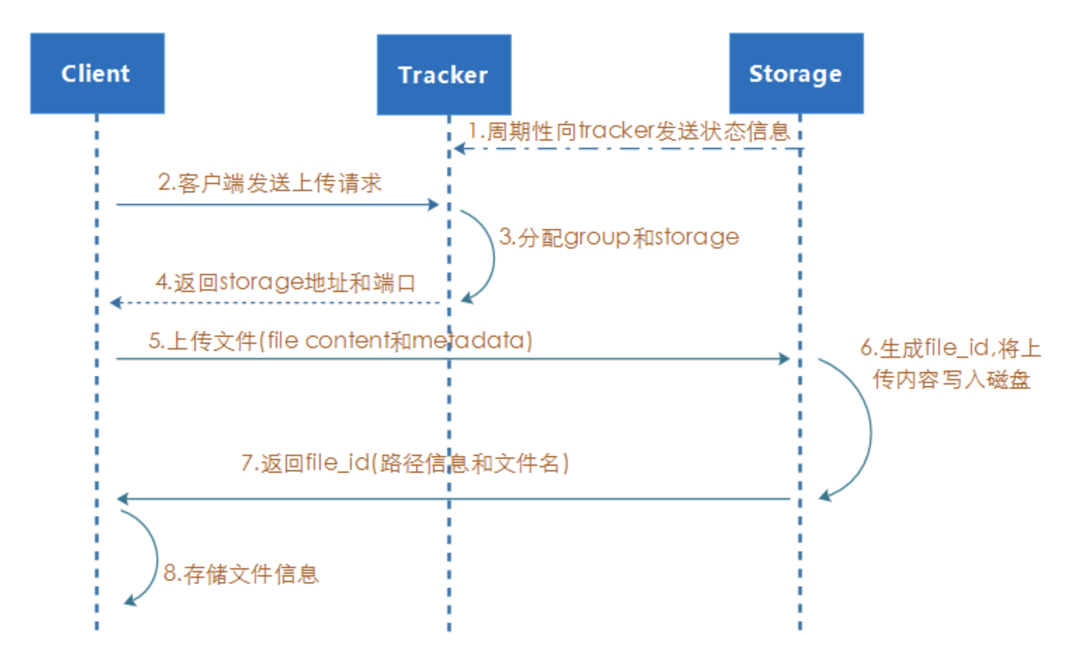

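FastDFS workflow

Upload

FastDFS exposes basic file operations such as upload, download, append, and delete.

Choosing a tracker server

Trackers in a cluster are peers, so a client can pick any tracker when uploading a file.

Choosing a storage group

When a tracker receives an upload request, it assigns the file a group that can store it. The supported rules for choosing a group are:

1. Round robin (rotate across all groups)

2. Specified group (always use one named group)

3. Load balance (prefer the group with the most free space)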
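The three group selection rules above can be sketched as follows. This is a simplified model, not tracker code: the class and method names are hypothetical, and the free-space figures are borrowed from the fdfs_monitor output later in this article. To my understanding the rules correspond to store_lookup = 0, 1, and 2 in tracker.conf, but check the comments in tracker.conf.sample for your version.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Group:
    name: str
    free_mb: int


class GroupSelector:
    """Toy model of how a tracker might pick a group for an upload."""

    def __init__(self, groups: List[Group]):
        if not groups:
            raise ValueError("at least one group is required")
        self.groups = groups
        self._next = 0  # position of the next group for round robin

    def round_robin(self) -> Group:
        """Rotate through all groups in order."""
        group = self.groups[self._next % len(self.groups)]
        self._next += 1
        return group

    def specified(self, name: str) -> Group:
        """Always use the named group."""
        for group in self.groups:
            if group.name == name:
                return group
        raise KeyError(name)

    def load_balance(self) -> Group:
        """Prefer the group with the most free space."""
        return max(self.groups, key=lambda g: g.free_mb)


selector = GroupSelector([Group("group1", 13758), Group("group2", 15538)])
print([selector.round_robin().name for _ in range(3)])
print(selector.load_balance().name)
```

Round robin spreads uploads evenly regardless of free space, while load balance keeps filling the emptiest group, which matters once groups of different sizes are added over time.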

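Choosing a storage server

Once a group is chosen, the tracker picks one storage server in that group and returns it to the client. The supported rules for choosing a server are:

1. Round robin (rotate across all servers); this is the default

2. Sort by IP address and pick the first server (the lowest IP address)

3. Sort by priority (the upload priority is set on the storage server through the upload_priority parameter)

Choosing a storage path (disk or mount point)

After a storage server is assigned, the client sends it the write request and the storage assigns the file to one of its data directories. The supported rules for choosing a storage path are:

1. Round robin; this is the default

2. Load balance (use the storage path with the most free space)

Choosing a download server

The supported rules are:

1. Round robin across every storage server that holds the file

2. Download from the source storage server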

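Generating the file_id

After choosing a data directory, the storage generates a file_id. It is Base64-encoded and includes the storage server IP, the file creation time, the file size, the file's CRC32 checksum, and a random number. Each data directory contains two levels of 256 x 256 subdirectories; the storage hashes the file_id twice to route the file to one of them and stores the file there using the file_id as its name. The resulting file path consists of the group name, the virtual disk path, the two-level data directory, and the file_id:

group1/M00/02/44/wkgDRe348wAAAAGKYJK42378.sh

Group name: the storage group that holds the file after upload. The storage server returns it when the upload succeeds, and the client is responsible for saving it.

Virtual disk path: the virtual path configured on the storage server, corresponding to the store_path* parameters.

Two-level data directory: the two levels of directories the storage server creates under each virtual disk path to hold data files.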
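To make the path layout concrete, here is a small Python sketch that splits a returned file path into the parts described above and maps it to a local file. The names FileId, parse_file_id, store_path_index, and local_path are hypothetical. Two assumptions are mine: that the digits after "M" are a two-digit hexadecimal store path index (M00 for store_path0), and that files sit in a data/ directory under the store path.

```python
import posixpath
from typing import Dict, NamedTuple


class FileId(NamedTuple):
    group: str        # e.g. "group1"
    store_path: str   # virtual disk path, e.g. "M00"
    dir1: str         # first-level data directory
    dir2: str         # second-level data directory
    file_name: str    # generated file name plus extension


def parse_file_id(file_id: str) -> FileId:
    """Split 'group/Mxx/d1/d2/name' into its five components."""
    parts = file_id.strip("/").split("/")
    if len(parts) != 5:
        raise ValueError(f"unexpected file id layout: {file_id!r}")
    return FileId(*parts)


def store_path_index(store_path: str) -> int:
    """'M00' -> 0. Assumes the suffix is a two-digit hex index."""
    if len(store_path) != 3 or store_path[0] != "M":
        raise ValueError(f"unexpected store path: {store_path!r}")
    return int(store_path[1:], 16)


def local_path(fid: FileId, store_paths: Dict[str, str]) -> str:
    """Where the file would live on disk, assuming a data/ subdirectory."""
    base = store_paths[fid.store_path]
    return posixpath.join(base, "data", fid.dir1, fid.dir2, fid.file_name)


fid = parse_file_id("group1/M00/02/44/wkgDRe348wAAAAGKYJK42378.sh")
print(fid)
print(local_path(fid, {"M00": "/data/fastdfs_data"}))
```

The client only ever needs the full string; splitting it like this is mostly useful when debugging, for example to find the physical file on a storage node.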

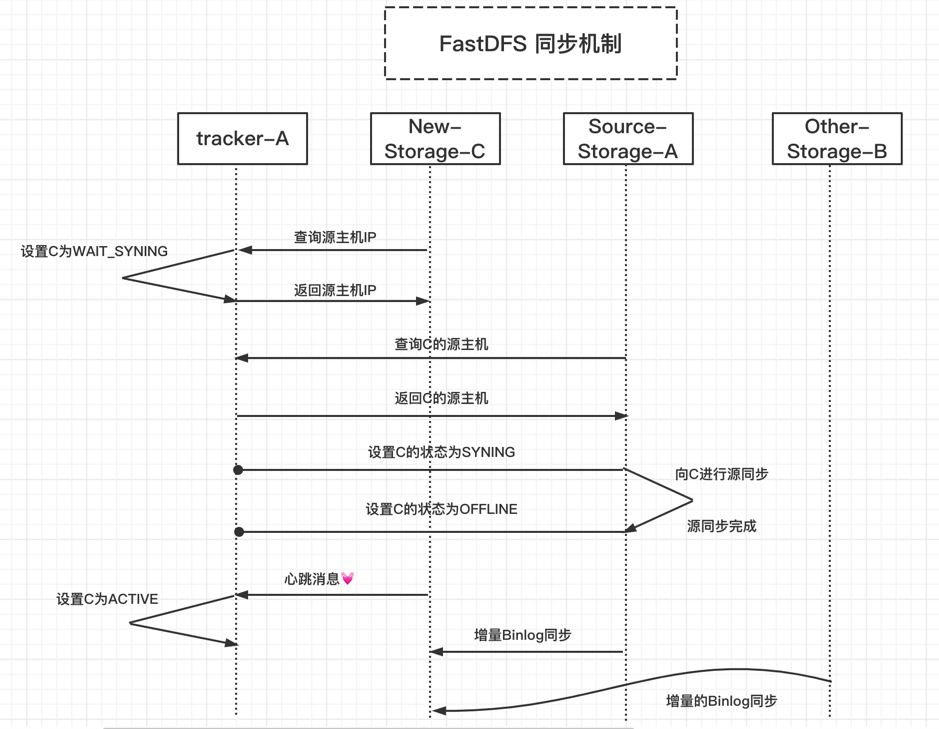

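Synchronization

1. Syncing data to a newly added tracker

Every storage server is configured with all tracker servers, and communication between them is always initiated by the storage server, which starts one thread per tracker. If, during this exchange, a tracker returns a list of storage servers for the group that is shorter than the one the storage has on record, the storage sends the missing entries to that tracker. This is what keeps the trackers equal peers with consistent data.

2. Syncing the server list when a storage joins a group

Whenever a storage server is added or changes state, the tracker sends the updated storage server list to every storage server in the group. Taking a new server as the example: it connects to the tracker on its own initiative; the tracker notices the new member, returns the full list of storage servers in the group to it, and sends the refreshed list to the other storage servers in the group.

3. Syncing file data within a group

Storage servers in a group are peers, so uploads, deletions, and other operations can be performed on any of them. File sync happens only between storage servers of the same group and uses push: the source server pushes to the target servers.

A. Sync happens only between storage servers in the same group

B. Only source data is synced; replicated copies are not synced again

C. Exception: when a storage server is added, one existing server syncs all of its data (both source data and replicated copies) to the new server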

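The 7 storage server states

Run fdfs_monitor /etc/fdfs/client.conf and check the ip_addr field to see the current state of each storage server.

- INIT: initializing; no source server for syncing existing data has been assigned yet

- WAIT_SYNC: waiting to sync; a source server for syncing existing data has been assigned

- SYNCING: syncing

- DELETE: deleted; the server has been removed from its group

- OFFLINE: offline

- ONLINE: online, but not yet able to serve requests

- ACTIVE: online and able to serve requests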

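State changes when storage server A is added to a group:

1. Storage server A connects to the tracker server, which sets A's state to INIT.

2. Storage server A asks the tracker for a source server for the catch-up sync and a cutoff time (the current time). If A is the only storage server in the group, or no files have been uploaded to the group, the tracker tells A that no sync is needed and sets its state to ONLINE. If the group has no machine in the ACTIVE state, the tracker returns an error and A retries. Otherwise the tracker sets A's state to WAIT_SYNC.

3. Suppose storage server B is assigned as the sync source together with a cutoff time. B syncs all data written before the cutoff to A and asks the tracker to set A's state to SYNCING. Once the cutoff is reached, B's sync to A switches from catch-up sync to normal incremental binlog sync.

4. When B has synced all data to A and no more binlog entries are available for the moment, B asks the tracker server to set A's state to ONLINE.

5. When storage server A next sends a heartbeat to the tracker server, the tracker changes its state to ACTIVE. From then on only incremental (binlog) sync takes place.

Note: during the source sync, the source machine starts a single sync thread that pushes data to the new machine. Throughput is capped at the I/O of one disk and the sync cannot run in parallel. Because the source sync ends only when no more binlog entries can be fetched, a busy system with constant new writes may never finish it.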
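The join procedure above amounts to a small state machine. The sketch below encodes only the transitions described in those five steps; it is not FastDFS code, the names are hypothetical, and real servers can move between other states (for example to OFFLINE) that are not modelled here.

```python
from enum import Enum
from typing import Dict, List, Set


class StorageState(Enum):
    INIT = "INIT"
    WAIT_SYNC = "WAIT_SYNC"
    SYNCING = "SYNCING"
    DELETE = "DELETE"
    OFFLINE = "OFFLINE"
    ONLINE = "ONLINE"
    ACTIVE = "ACTIVE"


S = StorageState

# Transitions taken while a new storage server joins a group.
JOIN_TRANSITIONS: Dict[StorageState, Set[StorageState]] = {
    S.INIT: {S.WAIT_SYNC, S.ONLINE},  # ONLINE directly when nothing to sync
    S.WAIT_SYNC: {S.SYNCING},         # source server starts pushing data
    S.SYNCING: {S.ONLINE},            # source server ran out of binlog
    S.ONLINE: {S.ACTIVE},             # next heartbeat reaches the tracker
}


def can_transition(src: StorageState, dst: StorageState) -> bool:
    return dst in JOIN_TRANSITIONS.get(src, set())


def join_path(needs_sync: bool) -> List[StorageState]:
    """States a new server passes through, with or without existing data."""
    if needs_sync:
        return [S.INIT, S.WAIT_SYNC, S.SYNCING, S.ONLINE, S.ACTIVE]
    return [S.INIT, S.ONLINE, S.ACTIVE]


print([state.value for state in join_path(needs_sync=True)])
```

Only ACTIVE servers take client traffic, which is why a freshly added node may sit in WAIT_SYNC or SYNCING for a long time on a busy group before it starts serving.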

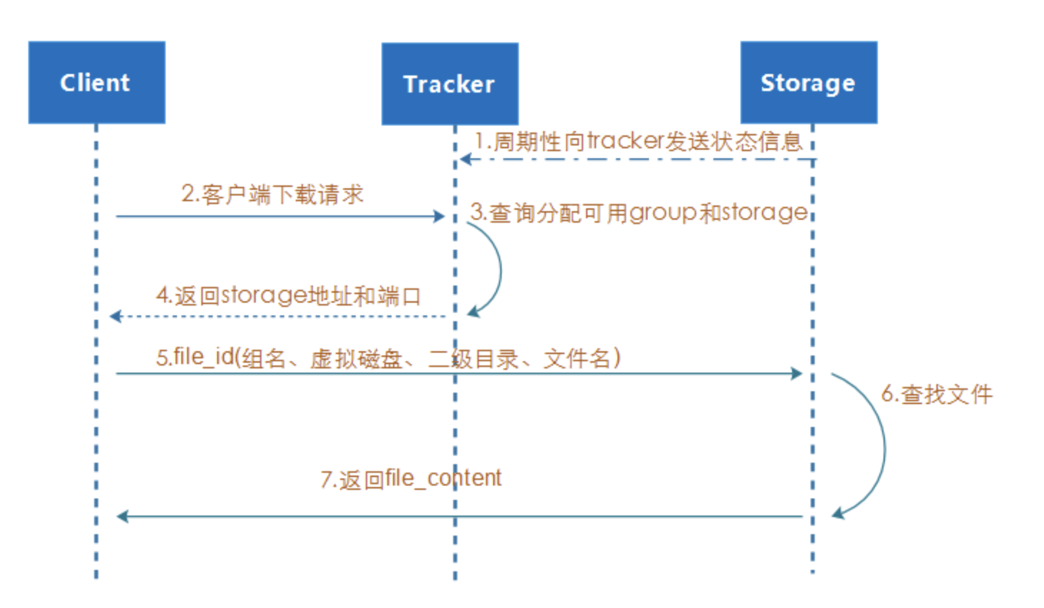

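Download

The client sends a download request to any tracker and must include the file name. The tracker parses the group, size, creation time, and other details from the file name and chooses a storage to serve the read. Because file sync within a group is asynchronous, a file may not yet exist on the other storage servers, or may arrive late; the fastdfs-nginx-module handles this case.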

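About file deduplication

FastDFS itself cannot deduplicate repeated uploads, but FastDHT can. FastDHT is a high-performance distributed hash system built on key-value storage. It uses Berkeley DB as its storage backend and also depends on libfastcommon.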
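The general idea behind hash-based deduplication can be shown with an in-memory sketch: keep a key-value map from content digest to file path and upload only when the digest is new. This is not the FastDHT API and not how FastDFS wires it in; the DedupIndex class, the SHA-256 choice, and the fake uploader are all assumptions made for illustration.

```python
import hashlib
from typing import Callable, Dict


class DedupIndex:
    """Maps a content digest to the file id of the first upload."""

    def __init__(self, upload: Callable[[bytes], str]):
        self._upload = upload              # performs the real upload
        self._by_digest: Dict[str, str] = {}

    def store(self, data: bytes) -> str:
        """Upload data unless identical content was stored before."""
        digest = hashlib.sha256(data).hexdigest()
        if digest not in self._by_digest:
            self._by_digest[digest] = self._upload(data)
        return self._by_digest[digest]


# A fake uploader that just counts calls and invents file ids.
upload_calls = []


def fake_upload(data: bytes) -> str:
    upload_calls.append(data)
    return f"group1/M00/00/00/file{len(upload_calls)}"


index = DedupIndex(fake_upload)
first = index.store(b"test")
second = index.store(b"test")   # same content, no second upload
print(first == second, len(upload_calls))
```

A real deployment needs the map to be shared and persistent, which is the role a distributed key-value store such as FastDHT plays.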

Our workload does not currently need file deduplication. If yours does, the FastDHT project is worth a quick look.

安装FastDFS集群

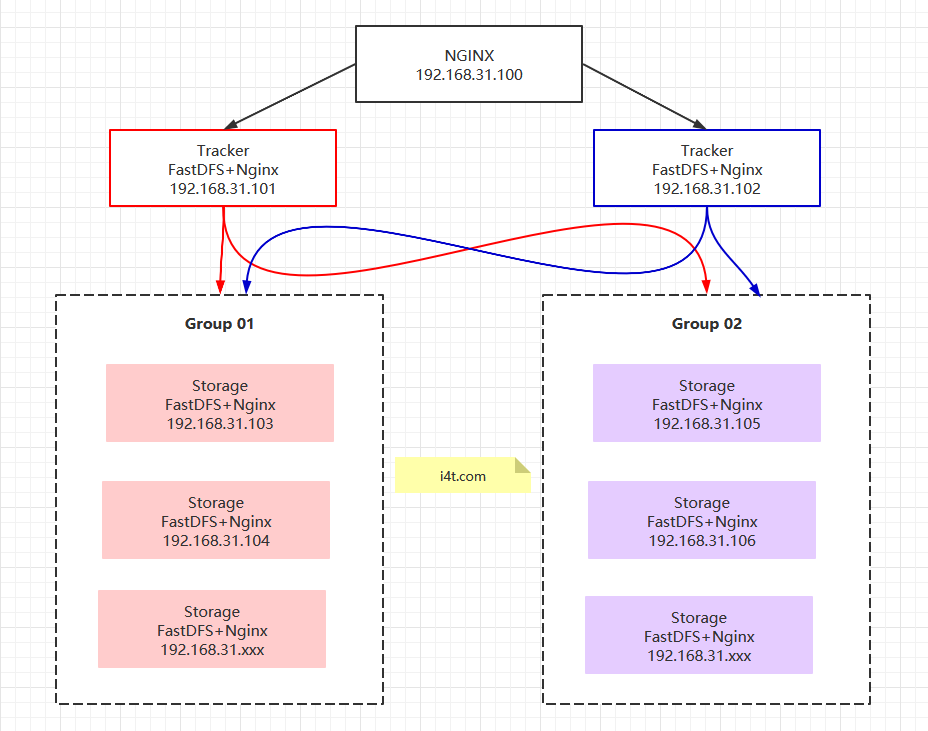

本次环境架构

针对tracker.conf && storage.conf && mod_fastdfs.conf有一篇单独的文章介绍相关参数。有兴趣的可以看一下,也可以直接看默认的配置文件,对每个参数都有介绍

FastDFS 配置文件详解

网上大部分安装FastDFS并没有说清楚相关的配置文件和参数,导致集群无法启动或者性能降低。 这篇文章主要整理一下以FastDFS 6.6版本为基础的配置文件参数,需要修改的地方已经提前说明清楚

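Environment

#Two nginx proxies with keepalived would provide high availability; machines are limited here, so a single nginx does the proxying
nginx proxy node
nginx: 192.168.31.100 nginx
tracker nodes (trackers do not store files)
tracker 01: 192.168.31.101 FastDFS, libfastcommon, nginx, ngx_cache_purge
tracker 02: 192.168.31.102 FastDFS, libfastcommon, nginx, ngx_cache_purge
storage nodes
[group1]
storage 01: 192.168.31.103 FastDFS, libfastcommon, nginx, fastdfs-nginx-module
storage 02: 192.168.31.104 FastDFS, libfastcommon, nginx, fastdfs-nginx-module
[group2]
storage 03: 192.168.31.105 FastDFS, libfastcommon, nginx, fastdfs-nginx-module
storage 04: 192.168.31.106 FastDFS, libfastcommon, nginx, fastdfs-nginx-module

1. Every server needs nginx. nginx is used only for accessing (downloading) files and plays no part in uploads.

2. nginx on the trackers provides HTTP reverse proxying, load balancing, and caching.

3. Every storage server runs nginx with the FastDFS extension module, which serves the stored files over HTTP. Only when the current storage node cannot find a file does the module redirect or proxy the request to the source storage host.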

Steps for all nodes

Disable the firewall and SELinux

systemctl stop firewalld
systemctl disable firewalld
iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat
iptables -P FORWARD ACCEPT
setenforce 0
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

Configure the yum repositories

yum install -y wget
wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
yum clean all
yum makecache

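Note: every node except the nginx proxy node (192.168.31.100) needs FastDFS and nginx installed.

Install dependency packages (this covers the vast majority of dependency problems)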

yum -y install gcc gcc-c++ make autoconf libtool-ltdl-devel gd-devel freetype-devel libxml2-devel libjpeg-devel libpng-devel openssh-clients openssl-devel curl-devel bison patch libmcrypt-devel libmhash-devel ncurses-devel binutils compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel glibc glibc-common glibc-devel libgcj libtiff pam-devel libicu libicu-devel gettext-devel libaio-devel libaio libgcc libstdc++ libstdc++-devel unixODBC unixODBC-devel numactl-devel glibc-headers sudo bzip2 mlocate flex lrzsz sysstat lsof setuptool system-config-network-tui system-config-firewall-tui ntsysv ntp pv lz4 dos2unix unix2dos rsync dstat iotop innotop mytop telnet iftop expect cmake nc gnuplot screen xorg-x11-utils xorg-x11-xinit rdate bc expat-devel compat-expat1 tcpdump sysstat man nmap curl lrzsz elinks finger bind-utils traceroute mtr ntpdate zip unzip vim wget net-tools

Download the source packages (on every node except the nginx proxy node)

mkdir -p /root/tools/
cd /root/tools
wget http://nginx.org/download/nginx-1.18.0.tar.gz
wget https://github.com/happyfish100/libfastcommon/archive/V1.0.43.tar.gz
wget https://github.com/happyfish100/fastdfs/archive/V6.06.tar.gz
wget https://github.com/happyfish100/fastdfs-nginx-module/archive/V1.22.tar.gz

#To keep this article usable, the packages are also mirrored; use these addresses if the ones above are unavailable
mkdir -p /root/tools/
cd /root/tools
wget http://down.i4t.com/fdfs/v6.6/nginx-1.18.0.tar.gz
wget http://down.i4t.com/fdfs/v6.6/V1.0.43.tar.gz
wget http://down.i4t.com/fdfs/v6.6/V6.06.tar.gz
wget http://down.i4t.com/fdfs/v6.6/V1.22.tar.gz

#Extract
cd /root/tools
tar xf nginx-1.18.0.tar.gz
tar xf V1.0.43.tar.gz
tar xf V1.22.tar.gz
tar xf V6.06.tar.gz

Install libfastcommon (on every node except the nginx proxy node)

cd /root/tools/libfastcommon-1.0.43
./make.sh
./make.sh install

Install FastDFS (on every node except the nginx proxy node)

cd /root/tools/fastdfs-6.06/
./make.sh
./make.sh install

Copy the configuration files (tracker01 and tracker02)

[root@tracker01 fastdfs-6.06]# cp /etc/fdfs/tracker.conf.sample /etc/fdfs/tracker.conf #tracker configuration
[root@tracker01 fastdfs-6.06]# cp /etc/fdfs/client.conf.sample /etc/fdfs/client.conf #client configuration (for testing)
[root@tracker01 fastdfs-6.06]# cp /root/tools/fastdfs-6.06/conf/http.conf /etc/fdfs/ #used by nginx
[root@tracker01 fastdfs-6.06]# cp /root/tools/fastdfs-6.06/conf/mime.types /etc/fdfs/ #used by nginx

Configure tracker01

Configure one node first, and start the other node only after confirming that this one works.

Create the tracker data and log directory (run on the tracker nodes)

mkdir /data/tracker/ -p

Write the configuration file (run on tracker01)

cat >/etc/fdfs/tracker.conf <<EOF
disabled = false
bind_addr =
port = 22122
connect_timeout = 5
network_timeout = 60
base_path = /data/tracker
max_connections = 1024
accept_threads = 1
work_threads = 4
min_buff_size = 8KB
max_buff_size = 128KB
store_lookup = 0
store_server = 0
store_path = 0
download_server = 0
reserved_storage_space = 20%
log_level = info
run_by_group=
run_by_user =
allow_hosts = *
sync_log_buff_interval = 1
check_active_interval = 120
thread_stack_size = 256KB
storage_ip_changed_auto_adjust = true
storage_sync_file_max_delay = 86400
storage_sync_file_max_time = 300
use_trunk_file = false
slot_min_size = 256
slot_max_size = 1MB
trunk_alloc_alignment_size = 256
trunk_free_space_merge = true
delete_unused_trunk_files = false
trunk_file_size = 64MB
trunk_create_file_advance = false
trunk_create_file_time_base = 02:00
trunk_create_file_interval = 86400
trunk_create_file_space_threshold = 20G
trunk_init_check_occupying = false
trunk_init_reload_from_binlog = false
trunk_compress_binlog_min_interval = 86400
trunk_compress_binlog_interval = 86400
trunk_compress_binlog_time_base = 03:00
trunk_binlog_max_backups = 7
use_storage_id = false
storage_ids_filename = storage_ids.conf
id_type_in_filename = id
store_slave_file_use_link = false
rotate_error_log = false
error_log_rotate_time = 00:00
compress_old_error_log = false
compress_error_log_days_before = 7
rotate_error_log_size = 0
log_file_keep_days = 0
use_connection_pool = true
connection_pool_max_idle_time = 3600
http.server_port = 8080
http.check_alive_interval = 30
http.check_alive_type = tcp
http.check_alive_uri = /status.html
EOF

Start the tracker

[root@tracker01 fastdfs-6.06]# /usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf start

Configure tracker02

Copy the configuration file

scp -r /etc/fdfs/tracker.conf root@192.168.31.102:/etc/fdfs/
ssh root@192.168.31.102 mkdir /data/tracker/ -p

Start the tracker on tracker02

[root@tracker02 fastdfs-6.06]# /usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf start

Check that it is running

netstat -lntup|grep 22122
tcp 0 0 0.0.0.0:22122 0.0.0.0:* LISTEN 108126/fdfs_tracker

If startup fails, check the tracker log for errors

tail -f /data/tracker/logs/trackerd.log

Next, create a systemd unit. systemd has no ExecRestart= directive (systemctl restart runs ExecStop and then ExecStart), so the unit defines only ExecStart and ExecStop.

cat > /usr/lib/systemd/system/tracker.service <<EOF
[Unit]
Description=The FastDFS File server
After=network.target remote-fs.target nss-lookup.target
[Service]
Type=forking
ExecStart=/usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf start
ExecStop=/usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf stop
[Install]
WantedBy=multi-user.target
EOF

#The tracker started by hand above must be stopped (killed) before systemd starts it
systemctl daemon-reload
systemctl start tracker
systemctl enable tracker
systemctl status tracker

Configure storage01 and storage02

storage01 and storage02 share one configuration; storage03 and storage04 share another.

storage01 and storage02 belong to group1.

Create the storage data directory

mkdir /data/fastdfs_data -p

Write the configuration file

cat >/etc/fdfs/storage.conf<<EOF
disabled = false
group_name = group1
bind_addr =
client_bind = true
port = 23000
connect_timeout = 5
network_timeout = 60
heart_beat_interval = 30
stat_report_interval = 60
base_path = /data/fastdfs_data
max_connections = 1024
buff_size = 256KB
accept_threads = 1
work_threads = 4
disk_rw_separated = true
disk_reader_threads = 1
disk_writer_threads = 1
sync_wait_msec = 50
sync_interval = 0
sync_start_time = 00:00
sync_end_time = 23:59
write_mark_file_freq = 500
disk_recovery_threads = 3
store_path_count = 1
store_path0 = /data/fastdfs_data
subdir_count_per_path = 256
tracker_server = 192.168.31.101:22122
tracker_server = 192.168.31.102:22122
log_level = info
run_by_group =
run_by_user =
allow_hosts = *
file_distribute_path_mode = 0
file_distribute_rotate_count = 100
fsync_after_written_bytes = 0
sync_log_buff_interval = 1
sync_binlog_buff_interval = 1
sync_stat_file_interval = 300
thread_stack_size = 512KB
upload_priority = 10
if_alias_prefix =
check_file_duplicate = 0
file_signature_method = hash
key_namespace = FastDFS
keep_alive = 0
use_access_log = false
rotate_access_log = false
access_log_rotate_time = 00:00
compress_old_access_log = false
compress_access_log_days_before = 7
rotate_error_log = false
error_log_rotate_time = 00:00
compress_old_error_log = false
compress_error_log_days_before = 7
rotate_access_log_size = 0
rotate_error_log_size = 0
log_file_keep_days = 0
file_sync_skip_invalid_record = false
use_connection_pool = true
connection_pool_max_idle_time = 3600
compress_binlog = true
compress_binlog_time = 01:30
check_store_path_mark = true
http.domain_name =
http.server_port = 80
EOF

#Note: set tracker_server to your own tracker addresses, one line per tracker. Using localhost is not recommended, even with a single node

Create the systemd unit. systemd has no ExecRestart= directive, so only ExecStart and ExecStop are defined.

cat >/usr/lib/systemd/system/storage.service <<EOF
[Unit]
Description=The FastDFS File server
After=network.target remote-fs.target nss-lookup.target
[Service]
Type=forking
ExecStart=/usr/bin/fdfs_storaged /etc/fdfs/storage.conf start
ExecStop=/usr/bin/fdfs_storaged /etc/fdfs/storage.conf stop
[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start storage
systemctl status storage
systemctl enable storage

Check that it is running

netstat -lntup|grep 23000

If startup fails, check the log under the configured base path

tail -f /data/fastdfs_data/logs/storaged.log

Configure storage03 and storage04

storage03 and storage04 belong to group2.

The procedure is the same as for group1; the only difference in storage.conf is group_name = group2.

Run the following on both storage03 and storage04

#Create the storage data directory
mkdir /data/fastdfs_data -p

#Write the configuration file
cat >/etc/fdfs/storage.conf<<EOF
disabled = false
group_name = group2
bind_addr =
client_bind = true
port = 23000
connect_timeout = 5
network_timeout = 60
heart_beat_interval = 30
stat_report_interval = 60
base_path = /data/fastdfs_data
max_connections = 1024
buff_size = 256KB
accept_threads = 1
work_threads = 4
disk_rw_separated = true
disk_reader_threads = 1
disk_writer_threads = 1
sync_wait_msec = 50
sync_interval = 0
sync_start_time = 00:00
sync_end_time = 23:59
write_mark_file_freq = 500
disk_recovery_threads = 3
store_path_count = 1
store_path0 = /data/fastdfs_data
subdir_count_per_path = 256
tracker_server = 192.168.31.101:22122
tracker_server = 192.168.31.102:22122
log_level = info
run_by_group =
run_by_user =
allow_hosts = *
file_distribute_path_mode = 0
file_distribute_rotate_count = 100
fsync_after_written_bytes = 0
sync_log_buff_interval = 1
sync_binlog_buff_interval = 1
sync_stat_file_interval = 300
thread_stack_size = 512KB
upload_priority = 10
if_alias_prefix =
check_file_duplicate = 0
file_signature_method = hash
key_namespace = FastDFS
keep_alive = 0
use_access_log = false
rotate_access_log = false
access_log_rotate_time = 00:00
compress_old_access_log = false
compress_access_log_days_before = 7
rotate_error_log = false
error_log_rotate_time = 00:00
compress_old_error_log = false
compress_error_log_days_before = 7
rotate_access_log_size = 0
rotate_error_log_size = 0
log_file_keep_days = 0
file_sync_skip_invalid_record = false
use_connection_pool = true
connection_pool_max_idle_time = 3600
compress_binlog = true
compress_binlog_time = 01:30
check_store_path_mark = true
http.domain_name =
http.server_port = 80
EOF

#Create the systemd unit (systemd has no ExecRestart= directive, so only ExecStart and ExecStop are defined)
cat >/usr/lib/systemd/system/storage.service <<EOF
[Unit]
Description=The FastDFS File server
After=network.target remote-fs.target nss-lookup.target
[Service]
Type=forking
ExecStart=/usr/bin/fdfs_storaged /etc/fdfs/storage.conf start
ExecStop=/usr/bin/fdfs_storaged /etc/fdfs/storage.conf stop
[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start storage
systemctl status storage
systemctl enable storage

#Check that it is running
netstat -lntup|grep 23000

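If starting through systemctl fails, start the daemon directly with the commands below and check the log. Startup takes roughly 10 seconds.

#Start, stop, and restart the storage daemon
/usr/bin/fdfs_storaged /etc/fdfs/storage.conf start
/usr/bin/fdfs_storaged /etc/fdfs/storage.conf stop
/usr/bin/fdfs_storaged /etc/fdfs/storage.conf restart

Once storage has started on every node, check that the cluster information can be retrieved. A newly created cluster is slow to settle, so wait until every storage reports ACTIVE.

#The command can be run on any storage node; the output should look like this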

[root@storage01 fdfs]# fdfs_monitor /etc/fdfs/storage.conf list

[2020-07-03 01:15:25] DEBUG - base_path=/data/fastdfs_data, connect_timeout=5, network_timeout=60, tracker_server_count=2, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=1, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=2, server_index=1

tracker server is 192.168.31.102:22122 #the tracker that handled this command

group count: 2 #number of groups

Group 1: #group1 details

group name = group1

disk total space = 17,394 MB

disk free space = 13,758 MB

trunk free space = 0 MB

storage server count = 2

active server count = 2

storage server port = 23000

storage HTTP port = 80

store path count = 1

subdir count per path = 256

current write server index = 0

current trunk file id = 0

Storage 1: #storage1 details

id = 192.168.31.103

ip_addr = 192.168.31.103 ACTIVE #storage node state

http domain =

version = 6.06 #FastDFS version

join time = 2020-07-03 01:08:29 #time the node joined the cluster

up time = 2020-07-03 01:08:29

total storage = 17,394 MB

free storage = 14,098 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 80

current_write_path = 0

source storage id = 192.168.31.104

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

............... (output omitted) ...............

last_heart_beat_time = 2020-07-03 01:15:18

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Storage 2: #storage2 details

id = 192.168.31.104 #storage node IP

ip_addr = 192.168.31.104 ACTIVE #storage node state

http domain =

version = 6.06 #storage node version

join time = 2020-07-03 01:08:26 #join time

up time = 2020-07-03 01:08:26

total storage = 17,394 MB

free storage = 13,758 MB

upload priority = 10

store_path_count = 1

............... (output omitted) ...............

last_heart_beat_time = 2020-07-03 01:15:17

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Group 2: #group2 details

group name = group2

disk total space = 17,394 MB

disk free space = 15,538 MB

trunk free space = 0 MB

storage server count = 2

active server count = 2

storage server port = 23000

storage HTTP port = 80

store path count = 1

subdir count per path = 256

current write server index = 0

current trunk file id = 0

Storage 1: #storage1 details

id = 192.168.31.105

ip_addr = 192.168.31.105 ACTIVE

http domain =

version = 6.06

join time = 2020-07-03 01:13:42

up time = 2020-07-03 01:13:42

total storage = 17,394 MB

free storage = 15,538 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000 #storage port

storage_http_port = 80

current_write_path = 0

source storage id =

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

............... (output omitted) ...............

last_heart_beat_time = 2020-07-03 01:15:22

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Storage 2:

id = 192.168.31.106

ip_addr = 192.168.31.106 ACTIVE

http domain =

version = 6.06

join time = 2020-07-03 01:14:05

up time = 2020-07-03 01:14:05

total storage = 17,394 MB

free storage = 15,538 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 80

current_write_path = 0

source storage id = 192.168.31.105

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

............... (output omitted) ...............

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2020-07-03 01:15:10

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00
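Checking by eye that every storage is ACTIVE gets tedious, so the output can be scanned with a short script. The sketch below assumes the ip_addr lines look exactly like the ones shown above ("ip_addr = <address> <STATE>"); other FastDFS versions may print extra fields, in which case the pattern needs adjusting. The function names are hypothetical.

```python
import re
from typing import Dict

# Matches lines such as: "ip_addr = 192.168.31.103 ACTIVE"
_STATUS_LINE = re.compile(r"^\s*ip_addr\s*=\s*(\S+)\s+([A-Z_]+)")


def storage_states(monitor_output: str) -> Dict[str, str]:
    """Return {address: state} for every storage in the monitor output."""
    states: Dict[str, str] = {}
    for line in monitor_output.splitlines():
        match = _STATUS_LINE.match(line)
        if match:
            states[match.group(1)] = match.group(2)
    return states


def all_active(monitor_output: str) -> bool:
    """True only if at least one storage is listed and all are ACTIVE."""
    states = storage_states(monitor_output)
    return bool(states) and all(s == "ACTIVE" for s in states.values())


sample = """\
Storage 1:
id = 192.168.31.103
ip_addr = 192.168.31.103 ACTIVE
Storage 2:
id = 192.168.31.104
ip_addr = 192.168.31.104 WAIT_SYNC
"""
print(storage_states(sample))
print(all_active(sample))
```

In practice the text would come from running fdfs_monitor /etc/fdfs/storage.conf and capturing its standard output, for example with subprocess.run.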

Configure the client

The client is configured on tracker01 here (other nodes do not need it; client.conf is optional).

mkdir -p /data/fdfs_client/logs #log directory
cat >/etc/fdfs/client.conf <<EOF
connect_timeout = 5
network_timeout = 60
base_path = /data/fdfs_client/logs
tracker_server = 192.168.31.101:22122
tracker_server = 192.168.31.102:22122
log_level = info
use_connection_pool = false
connection_pool_max_idle_time = 3600
load_fdfs_parameters_from_tracker = false
use_storage_id = false
storage_ids_filename = storage_ids.conf
http.tracker_server_port = 80
EOF
#Set tracker_server to your own tracker addresses

Test an upload, using a file named init.yaml

[root@tracker01 ~]# echo "test" >init.yaml
[root@tracker01 ~]# fdfs_upload_file /etc/fdfs/client.conf init.yaml
group2/M00/00/00/wKgfaV7-GG2AQcpMAAAABTu5NcY98.yaml

Installing nginx on the storage nodes

Use the following mod_fastdfs.conf on every storage node

cat >/etc/fdfs/mod_fastdfs.conf <<EOF
connect_timeout=2
network_timeout=30
base_path=/tmp
load_fdfs_parameters_from_tracker=true
storage_sync_file_max_delay = 86400
use_storage_id = false
storage_ids_filename = storage_ids.conf
tracker_server=192.168.31.101:22122
tracker_server=192.168.31.102:22122
storage_server_port=23000
url_have_group_name = true
store_path_count=1
log_level=info
log_filename=
response_mode=proxy
if_alias_prefix=
flv_support = true
flv_extension = flv
group_count = 2
#include http.conf
[group1]
group_name=group1
storage_server_port=23000
store_path_count=1
store_path0=/data/fastdfs_data
[group2]
group_name=group2
storage_server_port=23000
store_path_count=1
store_path0=/data/fastdfs_data
EOF

Copy the supporting files (on every storage node)

cp /root/tools/fastdfs-6.06/conf/http.conf /etc/fdfs/
cp /root/tools/fastdfs-6.06/conf/mime.types /etc/fdfs/

Install the nginx build dependencies

yum install -y gcc gcc-c++ glibc pcre pcre-devel openssl openssl-devel zlib zlib-devel lua-devel libxml2 libxml2-devel libxslt-devel perl-ExtUtils-Embed GeoIP GeoIP-devel GeoIP-data gd-devel

Create the nginx user

useradd -s /sbin/nologin nginx -M

Build nginx

cd /root/tools/nginx-1.18.0
./configure --prefix=/usr/local/nginx-1.18 --with-http_ssl_module --user=nginx --group=nginx --with-http_sub_module --add-module=/root/tools/fastdfs-nginx-module-1.22/src
make && make install
ln -s /usr/local/nginx-1.18 /usr/local/nginx

Write the nginx configuration file

cat > /usr/local/nginx/conf/nginx.conf <<EOF

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

server {

listen 8888;

server_name localhost;

location ~/group[0-9]/M00 {

root /data/fastdfs_data;

ngx_fastdfs_module;

}

}

}

EOF

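Start nginx

/usr/local/nginx/sbin/nginx -t
/usr/local/nginx/sbin/nginx

On the storage nodes, requests to port 8888 whose path contains a group name are handed to the ngx_fastdfs_module plugin.

Next, upload two images by hand to test.

[root@tracker01 ~]# fdfs_upload_file /etc/fdfs/client.conf abcdocker.png
group1/M00/00/00/wKgfZ17-JkKAYX-SAABc0HR4eEs313.png
[root@tracker01 ~]# fdfs_upload_file /etc/fdfs/client.conf i4t.jpg
group2/M00/00/00/wKgfal7-JySABmgLAABdMoE-LPo504.jpg

The tracker is currently set to round robin (store_lookup = 0 in tracker.conf), so uploads alternate between group1 and group2. See the configuration file article mentioned earlier for the related parameters.

The files can now be opened in a browser. Different groups can serve different projects. A FastDFS cluster can have many groups, but each machine can run only one storage.

http://<storage1-node>:8888/group1/M00/00/00/wKgfZ17-JkKAYX-SAABc0HR4eEs313.png
http://<storage1-node>:8888/group2/M00/00/00/wKgfal7-JySABmgLAABdMoE-LPo504.jpg

In my tests, a file could be fetched successfully through a storage node of the other group even though the file was nowhere in that node's storage directory. The nginx log and the explanation follow.

#Nginx log
192.168.31.174 - - [03/Jul/2020:03:03:31 +0800] "GET /group2/M00/00/00/wKgfaF7-JyKAYde2AABdMoE-LPo633.jpg HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:77.0) Gecko/20100101 Firefox/77.0"

Other storage nodes can serve the file because the fastdfs-nginx-module in nginx redirects or proxies the request to the source server, which holds the file.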

Appendix: common FastDFS commands

#Show cluster status
fdfs_monitor /etc/fdfs/storage.conf
#Upload
fdfs_upload_file /etc/fdfs/client.conf abcdocker.png
#Download
fdfs_download_file /etc/fdfs/client.conf group1/M00/00/00/wKgfZ17-JkKAYX-SAABc0HR4eEs313.png
#Show file information
fdfs_file_info /etc/fdfs/client.conf group1/M00/00/00/wKgfZ17-JkKAYX-SAABc0HR4eEs313.png
#Delete a file
fdfs_delete_file /etc/fdfs/client.conf group1/M00/00/00/wKgfZ17-JkKAYX-SAABc0HR4eEs313.png
#Remove a storage from a group
fdfs_monitor /etc/fdfs/storage.conf delete group2 192.168.31.105

High availability for the trackers

nginx on the trackers provides reverse proxying, load balancing, and caching for HTTP access.

Install it on tracker01 and tracker02 at the same time.

#Download the nginx sources and the cache purge module
mkdir /root/tools -p
cd /root/tools
wget http://down.i4t.com/ngx_cache_purge-2.3.tar.gz
wget http://down.i4t.com/fdfs/v6.6/nginx-1.18.0.tar.gz
tar xf ngx_cache_purge-2.3.tar.gz
tar xf nginx-1.18.0.tar.gz
#Create the nginx user
useradd -s /sbin/nologin -M nginx
#Build nginx
cd /root/tools/nginx-1.18.0
./configure --prefix=/usr/local/nginx-1.18 --with-http_ssl_module --user=nginx --group=nginx --with-http_sub_module --add-module=/root/tools/ngx_cache_purge-2.3
make && make install
ln -s /usr/local/nginx-1.18 /usr/local/nginx

With nginx installed, configure nginx.conf next.

nginx on the trackers does not have to listen on port 80; port 80 is used here as an example.

mkdir /data/nginx_cache -p

$ vim /usr/local/nginx/conf/nginx.conf

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

server_names_hash_bucket_size 128;

client_header_buffer_size 32k;

large_client_header_buffers 4 32k;

client_max_body_size 300m;

proxy_redirect off;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 90;

proxy_send_timeout 90;

proxy_read_timeout 90;

proxy_buffer_size 16k;

proxy_buffers 4 64k;

proxy_busy_buffers_size 128k;

proxy_temp_file_write_size 128k;

proxy_cache_path /data/nginx_cache keys_zone=http-cache:100m;

upstream fdfs_group1 {

server 192.168.31.103:8888 weight=1 max_fails=2 fail_timeout=30s;

server 192.168.31.104:8888 weight=1 max_fails=2 fail_timeout=30s;

}

upstream fdfs_group2 {

server 192.168.31.105:8888 weight=1 max_fails=2 fail_timeout=30s;

server 192.168.31.106:8888 weight=1 max_fails=2 fail_timeout=30s;

}

server {

listen 80;

server_name localhost;

location /group1/M00 {

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_cache http-cache;

proxy_cache_valid 200 304 12h;

proxy_cache_key $uri$is_args$args;

proxy_pass http://fdfs_group1;

expires 30d;

}

location /group2/M00 {

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_cache http-cache;

proxy_cache_valid 200 304 12h;

proxy_cache_key $uri$is_args$args;

proxy_pass http://fdfs_group2;

expires 30d;

}

}

}

/usr/local/nginx/sbin/nginx -t

/usr/local/nginx/sbin/nginx

At this point, access through both tracker01 and tracker02 should work:

http://192.168.31.101/group1/M00/00/00/wKgfaF7-JyKAYde2AABdMoE-LPo633.jpg
http://192.168.31.101/group2/M00/00/00/wKgfaF7-JyKAYde2AABdMoE-LPo633.jpg
http://192.168.31.102/group1/M00/00/00/wKgfaF7-JyKAYde2AABdMoE-LPo633.jpg
http://192.168.31.102/group2/M00/00/00/wKgfaF7-JyKAYde2AABdMoE-LPo633.jpg


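Installing the nginx proxy

The steps above already allow access through both the storage nodes and the tracker nodes. To get a single entry point and tracker high availability, one more nginx is placed in front of the trackers.

#nginx is installed the same way as above, so only nginx.conf changes here. The proxy does not need the cache module; a plain build is enough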

$ vim /usr/local/nginx/conf/nginx.conf

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

upstream fastdfs_tracker {

server 192.168.31.101:80 weight=1 max_fails=2 fail_timeout=30s;

server 192.168.31.102:80 weight=1 max_fails=2 fail_timeout=30s;

}

server {

listen 80;

server_name localhost;

location / {

proxy_pass http://fastdfs_tracker/;

}

}

}

Finally, on tracker01, test that files can be fetched through the nginx proxy.

[root@tracker01 ~]# fdfs_upload_file /etc/fdfs/client.conf i4t.jpg
group1/M00/00/00/wKgfZ17-Oa2AMuRqAABdMoE-LPo686.jpg
[root@tracker01 ~]# fdfs_upload_file /etc/fdfs/client.conf i4t.jpg
group2/M00/00/00/wKgfaV7-Oa6ANXGLAABdMoE-LPo066.jpg

Check access through the proxy

[root@tracker01 ~]# curl 192.168.31.100/group1/M00/00/00/wKgfZ17-Oa2AMuRqAABdMoE-LPo686.jpg -I
HTTP/1.1 200 OK
Server: nginx/1.18.0
Date: Thu, 02 Jul 2020 19:49:49 GMT
Content-Type: image/jpeg
Content-Length: 23858
Connection: keep-alive
Last-Modified: Thu, 02 Jul 2020 19:46:53 GMT
Expires: Sat, 01 Aug 2020 19:49:49 GMT
Cache-Control: max-age=2592000
Accept-Ranges: bytes

[root@tracker01 ~]# curl 192.168.31.100/group2/M00/00/00/wKgfaV7-Oa6ANXGLAABdMoE-LPo066.jpg -I
HTTP/1.1 200 OK
Server: nginx/1.18.0
Date: Thu, 02 Jul 2020 19:50:17 GMT
Content-Type: image/jpeg
Content-Length: 23858
Connection: keep-alive
Last-Modified: Thu, 02 Jul 2020 19:46:54 GMT
Expires: Sat, 01 Aug 2020 19:50:17 GMT
Cache-Control: max-age=2592000
Accept-Ranges: bytes