Scraping Sohu recommended news with Fiddler and the Nox (夜神) Android emulator

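Hi everyone. Scraping data from mobile apps is becoming more and more common, so today I'll use the recommended feed of the Sohu News mobile app as an example of how to capture mobile traffic and scrape the data behind it.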

1. First, download the Fiddler packet capture tool:

Link: https://pan.baidu.com/s/1_3l6POqbRFoQjJT02YQ8DQ Extraction code: d4n2

2. Download the Nox emulator:

Link: https://pan.baidu.com/s/1PTitEggSY26KsTSHi8Q9-w Extraction code: dtzu

3. Once both tools are downloaded, configure them as described here: https://www.cnblogs.com/chenyibai/p/10691703.html

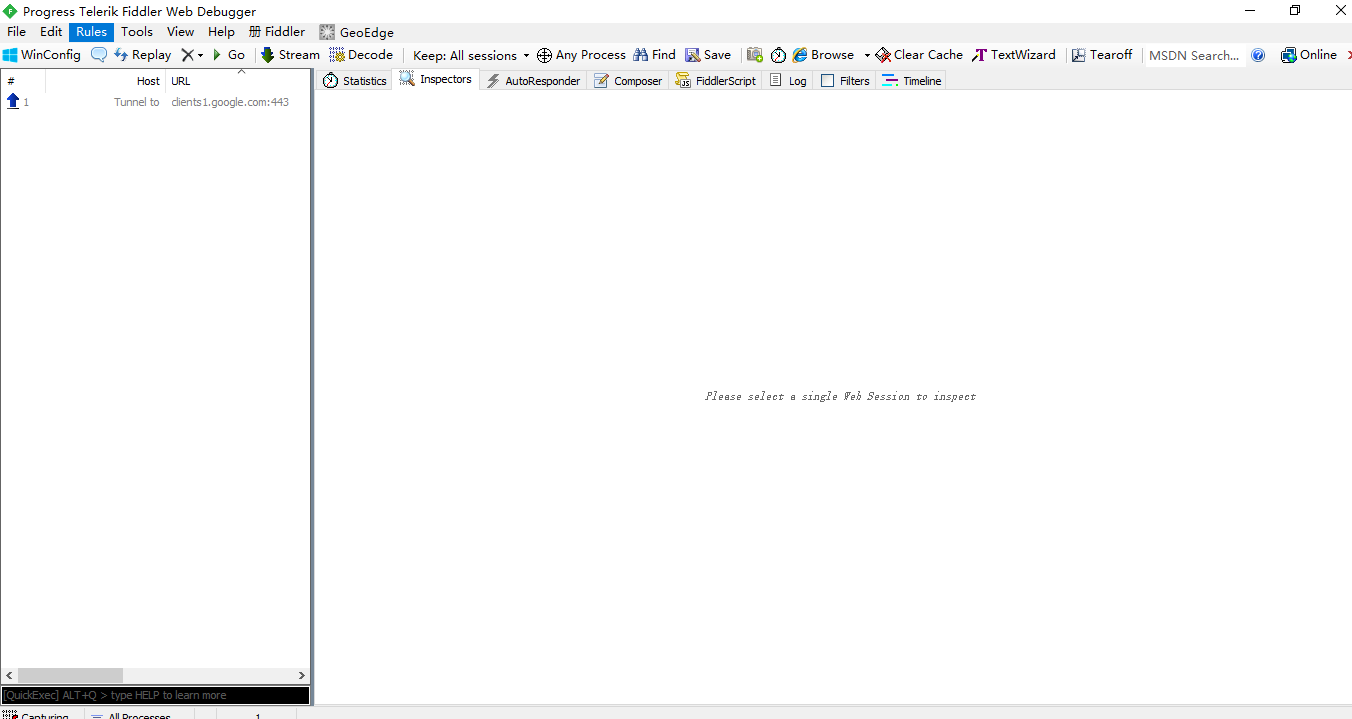

4. Open Fiddler.

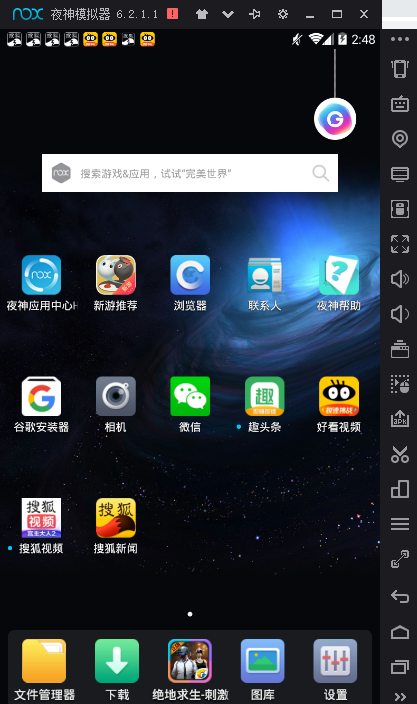

5. Open the Nox emulator.

6. Click the Sohu News installation package (APK) to install the app in the emulator.

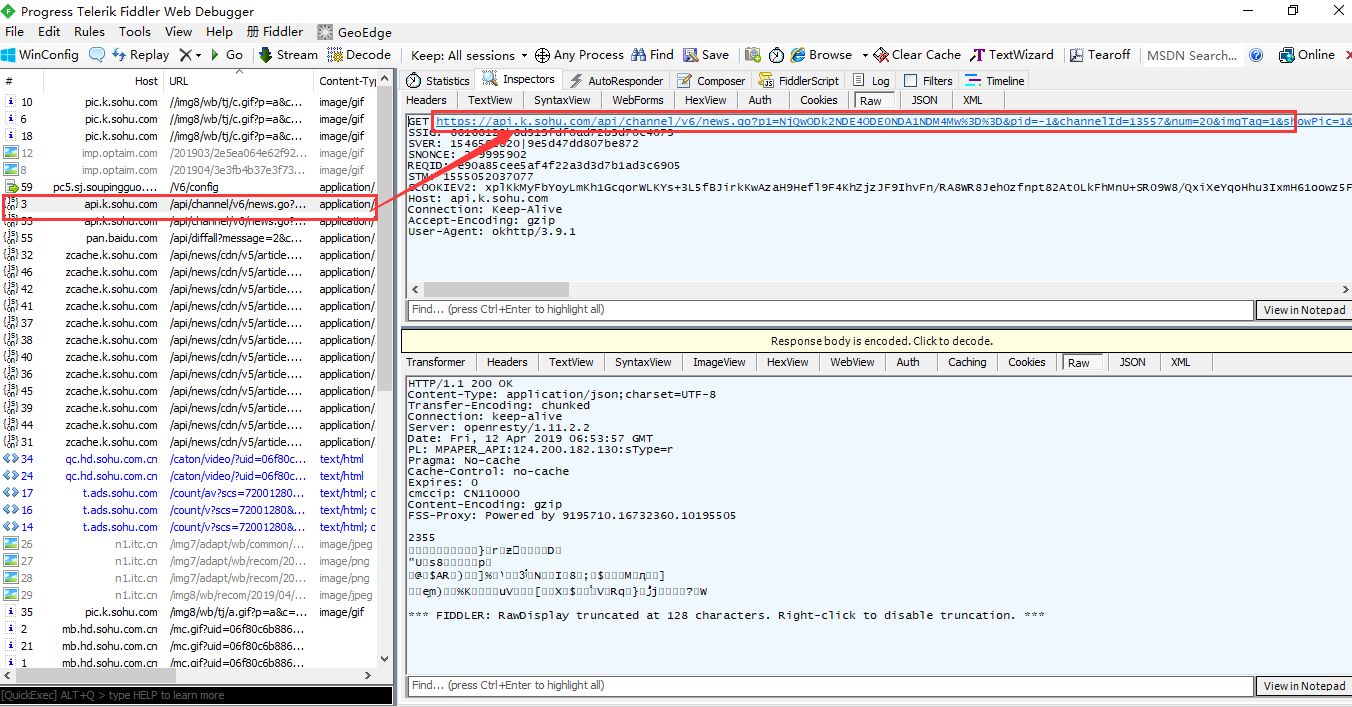

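7. Tap the 推荐 (Recommended) tab. Before tapping, clear every session Fiddler has captured so far (Remove All), so that only the new requests are listed.

8. The request that returns the dynamically loaded data now shows up in Fiddler: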

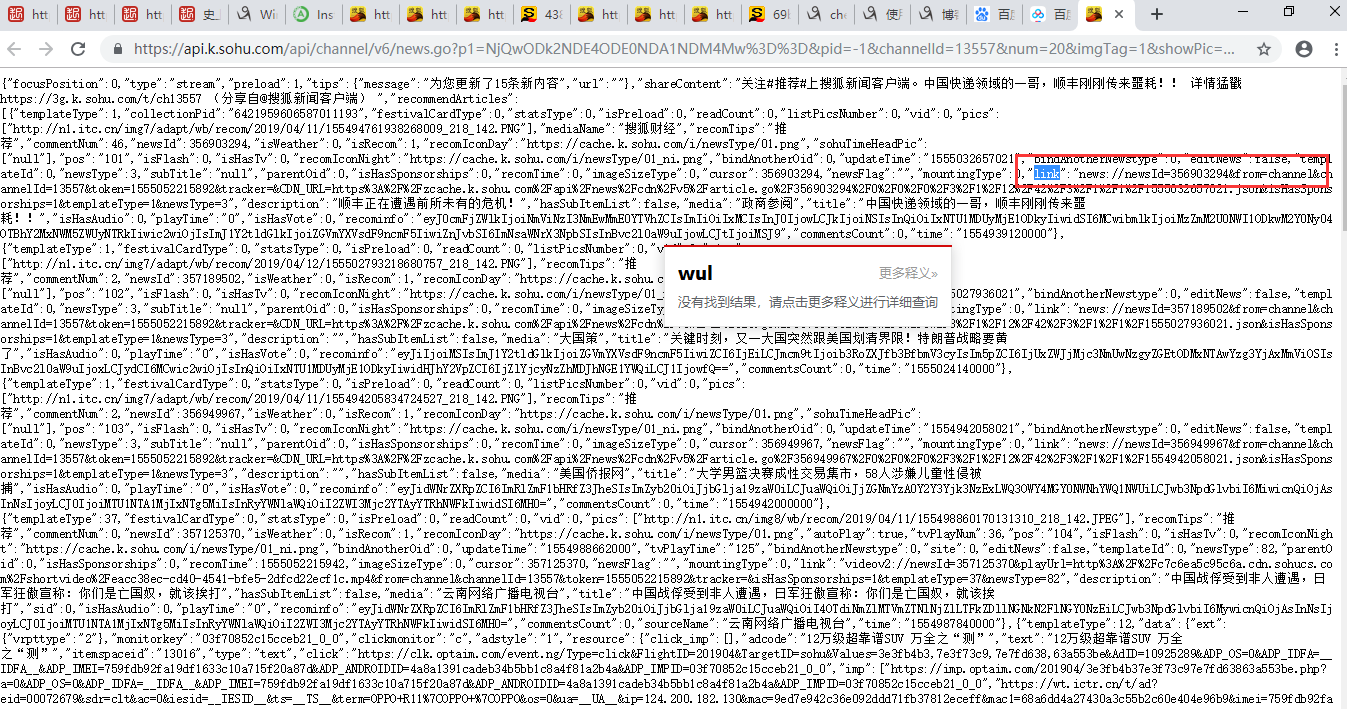
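To make step 8 a bit more concrete, here is a minimal sketch of reading the captured JSON. The field names (`recommendArticles`, `title`, `updateTime`, `pics`, `media`, `link`) are the ones my script further down relies on; the sample payload and the helper name `parse_feed` are made up for illustration, and the real response contains many more fields.

```python
import json


def parse_feed(text):
    """Extract the fields the scraper needs from the feed JSON text."""
    items = json.loads(text).get('recommendArticles', [])
    rows = []
    for item in items:
        pics = item.get('pics') or []
        rows.append({
            'title': item['title'],
            # updateTime is in milliseconds; drop the last three digits.
            'create_time': int(str(item['updateTime'])[:-3]),
            'thumb': pics[0] if pics else None,
            'source': item.get('media', ''),
            'link': item['link'],
        })
    return rows


# Hypothetical payload, shaped like the captured response.
sample = json.dumps({
    'recommendArticles': [{
        'title': 'Example headline',
        'updateTime': '1555043743000',
        'pics': ['http://example.com/a.jpg'],
        'media': 'Example Media',
        'link': 'news://newsId=1&from=channel',
    }]
})

for row in parse_feed(sample):
    print(row['title'], row['create_time'], row['link'])
```

Items without pictures get `thumb=None` here, whereas the full script simply skips them.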

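9. Build the content URL of each news item. The `link` field of every item in the list carries the query string after `://`, which is appended to the article API:

for i in _list:
    link = 'https://api.k.sohu.com/api/news/v5/article.go?' + i['link'].split('://')[1]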
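The URL construction in step 9 can be wrapped in a small helper. This is only a sketch: the function name `build_article_url` and the sample link value are hypothetical, and I am assuming the `link` field always has the form `scheme://query-string`, which is what I saw in the captured data.

```python
ARTICLE_API = 'https://api.k.sohu.com/api/news/v5/article.go?'


def build_article_url(link):
    """Turn an in-app link into the article API URL."""
    # Everything after the first '://' is treated as the query string.
    _scheme, sep, query = link.partition('://')
    if not sep or not query:
        raise ValueError('unexpected link format: {0!r}'.format(link))
    return ARTICLE_API + query


# Hypothetical link value for illustration.
print(build_article_url('news://newsId=123&from=channel'))
```

Raising on an unexpected format makes a malformed item fail loudly instead of producing a request to a meaningless URL.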

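10. When collecting images, pay special attention to the thumbnails of video news.

11. Find the content URL of the video news item.

12. Request that URL to obtain the real image URL:

'https://api.k.sohu.com/api/news/v5/article.go?' + i['link'].split('://')[1]

13. During the crawl, the thumbnail data returned for a video news item looks like this: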

data-thumbnail="//media-platform.bjcnc.img-internal.sohucs.com/images/20190411/a42212a334e443d18f7ed70fa009fcff.jpeg"

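This host is an internal one, so it has to be swapped with a replace: in the script, media-platform.bjcnc.img-internal.sohucs.com is replaced by 5b0988e595225.cdn.sohucs.com.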
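A minimal sketch of that replacement, using the two host names from my script. The helper name `fix_thumbnail` is hypothetical, and prefixing `https:` to the protocol-relative URL is my own assumption; the script itself only swaps the host inside the HTML.

```python
INTERNAL_HOST = 'media-platform.bjcnc.img-internal.sohucs.com'
CDN_HOST = '5b0988e595225.cdn.sohucs.com'


def fix_thumbnail(url, scheme='https'):
    """Swap the internal image host for the CDN host."""
    url = url.replace(INTERNAL_HOST, CDN_HOST)
    # Assumption: give protocol-relative URLs ('//host/...') an explicit scheme.
    if url.startswith('//'):
        url = scheme + ':' + url
    return url


raw = '//media-platform.bjcnc.img-internal.sohucs.com/images/20190411/a42212a334e443d18f7ed70fa009fcff.jpeg'
print(fix_thumbnail(raw))
```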

Those are the problems I ran into during the crawl. The full code follows.

config/proxies.py:

    import random


    def proxies():
        ips = ["103.216.82.200:6667", "103.216.82.20:6667",
               "103.216.82.198:6667", "103.216.82.196:6667"]
        # Pick one proxy at random so the caller can rotate IPs on retry.
        ip = random.choice(ips)
        return {
            "http": 'http://' + ip,
            "https": 'https://' + ip,
        }

config/mysql_db.py:

    import pymysql

    db = pymysql.connect(
        host="127.0.0.1",
        user="root",
        password="root",
        database="test2",
        port=3306,
        charset="utf8"
    )

The scraper itself:

    # -*- coding: utf-8 -*-
    import sys
    sys.path.append('..')
    import re
    import json
    import random
    import requests
    from config.ua import ua
    from config.log import log
    from fuzzywuzzy import fuzz
    from config.mysql_db import db
    from config.proxies import proxies


    class Sh:
        def __init__(self):
            # Logger
            self.logger = log('souhu')
            self.db = db
            # Database cursor
            self.cursor = self.db.cursor()
            # Proxy IP
            self.proxies = proxies()
            # self.url = 'https://api.k.sohu.com/api/channel/v6/news.go?p1=NjQzNzIyNTk5MjkxNzc5MDgwMw%3D%3D&pid=-1&channelId=4&num=20&imgTag=1&showPic=1&picScale=11&rt=json&net=wifi&cdma_lat=39.084532&cdma_lng=117.196715&from=channel&mac=34%3A23%3A87%3AF9%3A16%3ACD&AndroidID=342387f916cd6291&carrier=CMCC&imei=355757010342380&imsi=460073423872491&density=2.0&apiVersion=42&isMixStream=0&skd=4d246a30bbe5efe53c4000371feb8736fdb37f079e985aca195c06930c76d4f5256f06f4a8d0f5db273507c9b4eb7e157fb1b888be0b0a2f19c8312188e8b41957ebbe202d524b93fc562d58f25f01ef5e7b134eb4656646c85b6e70112c552f28f975ea5807b68948bd78a87794dcb7&v=1535472000&t=1535511104&forceRefresh=0&times=2&page=1&action=1&mode=3&cursor=0&mainFocalId=0&focusPosition=0&viceFocalId=0&lastUpdateTime=0&version=6.1.2&contentToken=no_data&gbcode=120000&apiVersion=42&u=1&source=0&actiontype=3&isSupportRedPacket=0&t=1535511104&sy=-251&rr=3'
            # Feed URL copied from Fiddler (without the trailing " HTTP/1.1" of the request line)
            self.url = "https://api.k.sohu.com/api/channel/v6/news.go?p1=NjQwODk2NDE4ODE0NDA1NDM4Mw%3D%3D&pid=-1&channelId=13557&num=20&imgTag=1&showPic=1&picScale=11&rt=json&net=wifi&from=channel&mac=1C%3AB7%3A2C%3A8D%3A1C%3A73&AndroidID=1cb72c8d1c736648&carrier=CMCC&imei=866174010281836&imsi=460072818344141&density=1.5&apiVersion=42&isMixStream=0&skd=81e696dea279595143925185fcf52593d2cc6a37e205aef3cb5de06c206c25d6f68a9a582cd989effaabf861e6839a316005d66fdc7dc82e1863e9c1ec0c3746eeea91f73bb07b96e302cad95ca3b279f53b4de1348a91667eb23817782cb65522e56568e9b8aee484597ff0b48fe8bc&v=1554998400&t=1555043743&times=1&page=1&action=0&mode=0&cursor=0&mainFocalId=0&focusPosition=1&viceFocalId=0&lastUpdateTime=0&version=6.2.1&platformId=3&gbcode=110000&apiVersion=42&u=1&actiontype=2&isSupportRedPacket=0&t=1555043743&rr=1"
            self.main()
            self.db.close()

        # Request helper: up to 5 attempts through a proxy, then one direct request
        def request(self, url):
            for num in range(5):
                try:
                    head = {
                        'User-Agent': random.choice(ua),
                    }
                    response = requests.get(url, headers=head, proxies=self.proxies, timeout=10)
                    if response.status_code == 200:
                        return response
                    elif num == 4:
                        response = requests.get(url, headers=head)
                        return response
                except Exception:
                    # Switch to another proxy and try again
                    self.proxies = proxies()

        # Look up the detail id of a news item in the database
        def pd_title(self, title, create_time):
            # Query the detail id and creation time by news title
            sql = 'SELECT detail_id,create_time FROM `rs_content` WHERE title=%s'
            self.cursor.execute(sql, [title])
            zhi = self.cursor.fetchone()
            if zhi is not None:
                # The title exists: if the stored time differs, the item was
                # updated, so return its detail id (otherwise return None)
                if zhi[1] != int(create_time):
                    return zhi[0]
            # The title is not in the database yet
            else:
                return 'no'

        # Compare the article body against recently stored articles
        def pd_content(self, detail, create_time):
            # Split the body on '。' and take the middle sentence as the probe
            detail_list = detail.split('。')
            print(len(detail_list))
            detail = detail_list[len(detail_list) // 2]
            print(detail)
            similarity_values = 0
            min_time = create_time - (1 * 60 * 60)
            max_time = create_time + (1 * 60 * 60)
            # Fetch the bodies of articles created within one hour of this one
            detail_sql = 'select detail,create_time from rs_content_detail c join rs_content b on c.id = b.detail_id where b.create_time >= %s and b.create_time <= %s and b.cate_id = %s'
            self.cursor.execute(detail_sql, [min_time, max_time, 1])
            contents = self.cursor.fetchall()
            for content in contents:
                # Fuzzy substring match between the probe sentence and a stored body
                similarity_values = fuzz.partial_ratio(detail, content[0])
                # A score of 80 or more counts as a duplicate: stop searching
                if similarity_values >= 80:
                    break
            return similarity_values

        # Insert a new article
        def insert_table(self, *all_list):
            all_list = list(all_list)
            detail = all_list[-1]
            all_list.pop(-1)
            sql = 'INSERT INTO rs_content(title, thumb, cate_id, create_time, update_time, source) VALUES(%s,%s,%s,%s,%s,%s)'
            self.cursor.execute(sql, all_list)
            rs_content_id = self.cursor.lastrowid
            self.db.commit()
            sql1 = 'INSERT INTO rs_content_detail(detail) VALUES(%s)'
            self.cursor.execute(sql1, [detail])
            detail_id = self.cursor.lastrowid
            self.db.commit()
            sql2 = 'update rs_content set detail_id = %s where id = %s'
            self.cursor.execute(sql2, [detail_id, rs_content_id])
            self.db.commit()

        # Update an existing article
        def update_table(self, *all_list):
            all_list = list(all_list)
            detail = all_list[-2]
            detail_id = all_list[-1]
            all_list.pop(-2)
            sql = 'UPDATE rs_content SET title=%s,thumb=%s,cate_id=%s,create_time=%s,update_time=%s,source=%s WHERE detail_id=%s'
            self.cursor.execute(sql, all_list)
            self.db.commit()
            sql1 = 'UPDATE rs_content_detail SET detail=%s WHERE id=%s'
            self.cursor.execute(sql1, [detail, detail_id])
            self.db.commit()

        # Extract the fields of every item in the feed
        def get_content(self, _list):
            for i in _list:
                try:
                    # News title
                    title = i['title']
                    # Creation time (milliseconds to seconds)
                    create_time = int(i['updateTime'][:-3])
                    # Detail id from the database, 'no' if new, None if unchanged
                    pd_title = self.pd_title(title, create_time)
                    if pd_title is not None:
                        try:
                            # First picture of the news item
                            src = i['pics'][0]
                            # Picture URL and picture size
                            thumb = json.dumps([{
                                "thumb_img": src,
                                "thumb_size": "0kb"
                            }])
                            cate_id = 1
                            update_time = create_time
                            # Source / author
                            source = i['media']
                            # Content URL of the news item
                            link = 'https://api.k.sohu.com/api/news/v5/article.go?' + i['link'].split('://')[1]
                            try:
                                # Fetch the article data
                                data = json.loads(self.request(link).text)
                                # Replace every </image_N> with </img>
                                detail = re.sub('</image_.*?>', '</img>', data['content'])
                                # data['photos'] is a list of dicts holding the picture URLs, e.g.
                                # 0 {'pic': 'http://n1.itc.cn/img7/adapt/wb/sohulife/2019/04/12/155504546945370738_620_1000.JPEG', 'smallPic': 'http://n1.itc.cn/img7/adapt/wb/sohulife/2019/04/12/155504546945370738_124_1000.JPEG', 'description': '', 'width': 1080, 'height': 608}
                                # 1 {'pic': 'http://n1.itc.cn/img7/adapt/wb/sohulife/2019/04/12/155504546955416542_620_1000.JPEG', 'smallPic': 'http://n1.itc.cn/img7/adapt/wb/sohulife/2019/04/12/155504546955416542_124_1000.JPEG', 'description': '', 'width': 1039, 'height': 420}
                                for num, src_da in enumerate(data['photos']):
                                    # Replace placeholders such as <image_0> with
                                    # <img src="http://n1.itc.cn/img7/adapt/wb/sohulife/2019/04/12/155504652909253384_620_1000.JPEG">
                                    detail = re.sub('<image_{0}>'.format(num), '<img src="{0}">'.format(src_da['pic']), detail)
                                # Strip the href attributes of <a> tags and swap the internal
                                # host of video thumbnails for the CDN host
                                detail = re.sub('href=".*?"', '', detail).replace(
                                    'media-platform.bjcnc.img-internal.sohucs.com', '5b0988e595225.cdn.sohucs.com')
                                # Only keep non-empty articles that contain no video
                                if 'video' not in detail and detail != '':
                                    # New title: insert unless the body duplicates a stored one
                                    if pd_title == 'no':
                                        pd_content = self.pd_content(detail, create_time)
                                        # Strictly below the duplicate threshold of 80
                                        if pd_content < 80:
                                            self.insert_table(title, thumb, cate_id, create_time, update_time, source, detail)
                                    # Known title with a newer time: update the stored row
                                    else:
                                        self.update_table(title, thumb, cate_id, create_time, update_time, source, detail, pd_title)
                            except Exception as e:
                                # 1062 is MySQL's duplicate-entry error; ignore it
                                if '1062' not in str(e):
                                    self.logger.error("{0}: {1}".format(link, e))
                        except Exception:
                            pass
                except Exception:
                    pass

        def main(self):
            for i in range(3):
                try:
                    text = self.request(self.url).text
                    # List of recommended articles in the response
                    _list = json.loads(text)['recommendArticles']
                    # Process every article
                    self.get_content(_list)
                except Exception as e:
                    self.logger.error(e)


    if __name__ == '__main__':
        Sh()
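The trickiest part of the script is turning the `<image_N>` placeholders in the article body into real `<img>` tags, so here it is in isolation. This is a sketch under my own assumptions: the helper name `render_images` and the sample content are made up, and I use a plain string replace for the opening placeholder instead of `re.sub`, which gives the same result for these literal tags.

```python
import re


def render_images(content, photos):
    """Replace <image_N> placeholders with <img> tags built from photos[N]['pic']."""
    # Closing placeholders such as </image_0> become </img>, as in the script.
    detail = re.sub(r'</image_\d+>', '</img>', content)
    for num, photo in enumerate(photos):
        placeholder = '<image_{0}>'.format(num)
        detail = detail.replace(placeholder, '<img src="{0}">'.format(photo['pic']))
    return detail


# Hypothetical article body and photo list for illustration.
content = '<p>a</p><image_0></image_0><p>b</p><image_1></image_1>'
photos = [{'pic': 'http://example.com/0.jpg'}, {'pic': 'http://example.com/1.jpg'}]
print(render_images(content, photos))
```

Because each placeholder ends with `>`, `<image_1>` never matches inside `<image_10>`, so the order of replacement does not matter.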

config/ua.py:

    ua = [
        'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.36',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2227.1 Safari/537.36',
    ]

config/log.py:

    import logging.handlers


    def log(name):
        handler = logging.handlers.RotatingFileHandler('logs/{0}.log'.format(name), encoding='utf-8')
        fmt = '%(asctime)s %(filename)s[line:%(lineno)d] %(levelname)s %(message)s'
        formatter = logging.Formatter(fmt)
        handler.setFormatter(formatter)
        logger = logging.getLogger('{0}'.format(name))
        logger.addHandler(handler)
        logger.setLevel(logging.DEBUG)
        return logger
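Two things about the logger are easy to trip over: the `logs/` directory has to exist before the handler is created, and without `maxBytes` a `RotatingFileHandler` never rolls over. Below is a possible variant that handles both. It is a sketch, not what my script uses: the function name `make_logger`, the `log_dir` parameter, and the size and backup values are my own assumptions.

```python
import logging
import logging.handlers
import os


def make_logger(name, log_dir='logs', max_bytes=1000000, backups=3):
    """Create (or reuse) a file logger that rotates once the file grows too large."""
    # Create the target directory so the handler can open its file.
    os.makedirs(log_dir, exist_ok=True)
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    # Attach the handler only once, so repeated calls do not duplicate lines.
    if not logger.handlers:
        handler = logging.handlers.RotatingFileHandler(
            os.path.join(log_dir, '{0}.log'.format(name)),
            maxBytes=max_bytes, backupCount=backups, encoding='utf-8')
        handler.setFormatter(logging.Formatter(
            '%(asctime)s %(filename)s[line:%(lineno)d] %(levelname)s %(message)s'))
        logger.addHandler(handler)
    return logger
```

Calling `make_logger('souhu').error('something failed')` would then write to `logs/souhu.log` and start a new file once the size limit is reached.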