Linux System Administration: LVM Logical Volume and Disk Quota Exercise

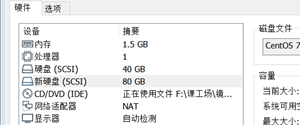

1. Add an 80G SCSI hard disk to the host

2. Create three primary partitions of 20G each

[root@localhost chen]# fdisk /dev/sdb

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p):

Using default response p //defaults to a primary partition

Partition number (1-4, default 1):

First sector (2048-167772159, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-167772159, default 167772159): +20G

Partition 1 of type Linux and of size 20 GiB is set

…

[root@localhost chen]# partx /dev/sdb

NR START END SECTORS SIZE NAME UUID

1 2048 41945087 41943040 20G

2 41945088 83888127 41943040 20G

3 83888128 125831167 41943040 20G
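The SECTORS column in the partx output can be checked by hand. The following is a minimal sketch of that arithmetic, assuming 512-byte logical sectors (the transcript does not state the sector size); the helper name `gib_to_sectors` is made up for illustration and is not part of fdisk or partx.

```shell
#!/bin/sh
# Convert a size in GiB to a count of 512-byte sectors.
# Assumption: 512-byte logical sectors; the function name is illustrative.
gib_to_sectors() {
    gib=$1
    echo $(( gib * 1024 * 1024 * 1024 / 512 ))
}

# Sector count of one +20G partition.
gib_to_sectors 20
# Sector count of the whole 80G disk.
gib_to_sectors 80
```

Under that assumption the first value matches the 41943040 sectors listed for each partition, and the second matches the disk's last sector (167772159) plus one.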

3. Convert the three primary partitions into physical volumes (pvcreate), then scan the system for physical volumes

[root@localhost chen]# pvscan //scan for physical volumes on the system

PV /dev/sda2 VG centos lvm2 [<39.00 GiB / 4.00 MiB free]

Total: 1 [<39.00 GiB] / in use: 1 [<39.00 GiB] / in no VG: 0 [0 ]

[root@localhost chen]# pvcreate /dev/sdb[123] //initialize partitions 1, 2, and 3 of /dev/sdb as physical volumes

Physical volume "/dev/sdb1" successfully created.

Physical volume "/dev/sdb2" successfully created.

Physical volume "/dev/sdb3" successfully created.

[root@localhost chen]# pvscan

PV /dev/sda2 VG centos lvm2 [<39.00 GiB / 4.00 MiB free]

PV /dev/sdb1 lvm2 [20.00 GiB]

PV /dev/sdb2 lvm2 [20.00 GiB]

PV /dev/sdb3 lvm2 [20.00 GiB]

Total: 4 [<99.00 GiB] / in use: 1 [<39.00 GiB] / in no VG: 3 [60.00 GiB]

4. Create a volume group named myvg from two of the physical volumes and check its size

[root@localhost chen]# vgscan //scan for volume groups on the system

Reading volume groups from cache.

Found volume group "centos" using metadata type lvm2

[root@localhost chen]# vgcreate myvg /dev/sdb[12] //create volume group myvg from physical volumes /dev/sdb1 and /dev/sdb2

Volume group "myvg" successfully created

[root@localhost chen]# vgscan

Reading volume groups from cache.

Found volume group "centos" using metadata type lvm2

Found volume group "myvg" using metadata type lvm2

[root@localhost chen]# vgdisplay myvg //display information about volume group myvg

--- Volume group ---

VG Name myvg

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 2

Act PV 2

VG Size 39.99 GiB

PE Size 4.00 MiB

Total PE 10238

Alloc PE / Size 0 / 0

Free PE / Size 10238 / 39.99 GiB

VG UUID iIisQE-rwE8-dR41-YRFn-cNmv-eGtt-gbiZtw
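The VG Size line follows from Total PE and PE Size. Here is a small sketch of that calculation; `pe_to_mib` is a hypothetical helper for illustration, not an LVM command.

```shell
#!/bin/sh
# Total capacity in MiB of a volume group, given its number of
# physical extents and the extent size in MiB.
# The function name is illustrative only.
pe_to_mib() {
    total_pe=$1
    pe_size_mib=$2
    echo $(( total_pe * pe_size_mib ))
}

# Values taken from the vgdisplay output: 10238 extents of 4 MiB.
pe_to_mib 10238 4
```

The result, 40952 MiB, is slightly under 40 GiB (40960 MiB), which is why vgdisplay shows 39.99 GiB. The shortfall is presumably the small area each physical volume sets aside for LVM metadata.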

5. Create a logical volume named mylv with a size of 30G

[root@localhost chen]# lvscan //scan for logical volumes on the system

ACTIVE '/dev/centos/swap' [3.00 GiB] inherit

ACTIVE '/dev/centos/root' [35.99 GiB] inherit

[root@localhost chen]# lvcreate -L 30G -n mylv myvg //create logical volume mylv in volume group myvg with a size of 30G

Logical volume "mylv" created.

[root@localhost chen]# lvscan

ACTIVE '/dev/centos/swap' [3.00 GiB] inherit

ACTIVE '/dev/centos/root' [35.99 GiB] inherit

ACTIVE '/dev/myvg/mylv' [30.00 GiB] inherit

6. Format the logical volume with the xfs file system, mount it on /data, and create a test file

[root@localhost chen]# mkdir /data

[root@localhost chen]# mkfs.xfs /dev/myvg/mylv //format the logical volume with the xfs file system

meta-data=/dev/myvg/mylv isize=512 agcount=4, agsize=1966080 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=7864320, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=3840, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@localhost chen]# mount /dev/myvg/mylv /data //mount logical volume mylv on /data

[root@localhost chen]# touch /data/cs

[root@localhost chen]# vi /data/cs

[root@localhost chen]# cat /data/cs

fuhcvgfggffffffffffas

asfasfv

tqw

g'

erhfns

nengf

nngf

7. Extend the logical volume to 35G

[root@localhost chen]# lvextend -L 35G /dev/myvg/mylv //extend the logical volume to 35G (a logical volume can grow only as far as its volume group has free space)

Size of logical volume myvg/mylv changed from 30.00 GiB (7680 extents) to 35.00 GiB (8960 extents).

Logical volume myvg/mylv successfully resized.

[root@localhost chen]# lvdisplay /dev/myvg/mylv //display information about logical volume mylv

--- Logical volume ---

LV Path /dev/myvg/mylv

LV Name mylv

VG Name myvg

LV UUID 3QF5Vv-n2Aq-l89C-Avif-04SP-n8C5-qiSkyf

LV Write Access read/write

LV Creation host, time localhost.localdomain, 2019-08-01 17:22:12 +0800

LV Status available

# open 1

LV Size 35.00 GiB

Current LE 8960

Segments 2

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2
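The extent counts printed by lvextend (7680 and 8960) come from dividing the requested size by the 4 MiB extent size. Here is a short sketch of that calculation; `gib_to_extents` is an illustrative name, not an LVM tool.

```shell
#!/bin/sh
# Number of extents needed for a logical volume of the given size.
# Arguments: size in GiB, extent size in MiB.
# The function name is illustrative only.
gib_to_extents() {
    size_gib=$1
    pe_size_mib=$2
    echo $(( size_gib * 1024 / pe_size_mib ))
}

gib_to_extents 30 4   # extents before the resize: 7680
gib_to_extents 35 4   # extents after the resize: 8960
```

With 10238 extents in myvg, 8960 still fit, so the resize succeeds. The transcript stops at lvextend. An XFS file system does not grow on its own when the logical volume does; `xfs_growfs /data` is the usual follow-up on a mounted XFS file system, although the original exercise does not show that step.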

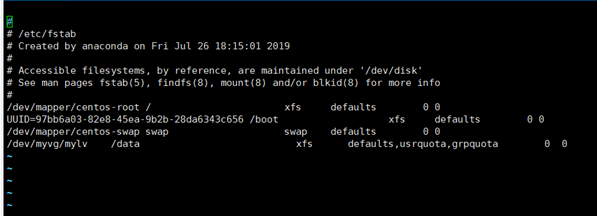

8. Edit /etc/fstab to mount the logical volume with the disk quota options enabled

[root@localhost chen]# vi /etc/fstab

// mount -o defaults,usrquota,grpquota /data (alternatively, pass the quota options on the command line with mount -o)

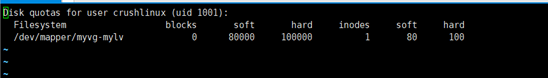
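The edited fstab entry is only visible in the screenshot, so the sample line below is an assumption about what it plausibly contains, not a copy of the author's file. The hypothetical helper `fstab_has_opt` checks whether a mount option appears in the fourth field of an fstab-style line, which is a quick way to confirm that usrquota and grpquota are present.

```shell
#!/bin/sh
# Print "yes" if the mount option list (field 4) of an fstab-style
# line contains the given option, otherwise "no".
# The function name is illustrative only.
fstab_has_opt() {
    line=$1
    opt=$2
    # Field 4 of an fstab entry is the comma-separated option list.
    opts=$(printf '%s\n' "$line" | awk '{print $4}')
    case ",$opts," in
        *",$opt,"*) echo yes ;;
        *)          echo no ;;
    esac
}

# Assumed example entry; not copied from the author's file.
entry='/dev/myvg/mylv /data xfs defaults,usrquota,grpquota 0 0'
fstab_has_opt "$entry" usrquota
fstab_has_opt "$entry" grpquota
```

On XFS the quota options are applied when the file system is mounted, so after editing fstab it is typically necessary to unmount /data and mount it again rather than remount it in place.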

9. Set up disk quotas: for user crushlinux under /data, the soft limit on file size is 80M and the hard limit is 100M,

and the soft limit on the number of files is 80 and the hard limit is 100.

[root@localhost chen]# mount -o defaults,usrquota,grpquota /data

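[root@localhost chen]# quotacheck -uv /data //scan the file system on /data for user quotas (-u) and build the quota record files, with verbose output (-v); XFS tracks quota usage internally, so quotacheck skips it, as the output shows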

quotacheck: Skipping /dev/mapper/myvg-mylv [/data]

quotacheck: Cannot find filesystem to check or filesystem not mounted with quota option.

[root@localhost chen]# quotaon /data //turn on quota enforcement

quotaon: Enforcing group quota already on /dev/mapper/myvg-mylv

quotaon: Enforcing user quota already on /dev/mapper/myvg-mylv

[root@localhost chen]# edquota -u crushlinux //edit the quota limits for the user

[root@localhost chen]# quota -uvs crushlinux //show the quota report for user crushlinux

Disk quotas for user crushlinux (uid 1001):

Filesystem space quota limit grace files quota limit grace

/dev/mapper/myvg-mylv

0K 80000K 100000K 1 80 100
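Because edquota opens an editor, the transcript does not show the limits being typed in. A non-interactive alternative is setquota, which takes the block and inode limits as arguments. The sketch below assumes block limits are expressed in 1 KiB units; `quota_cmd` is a hypothetical helper that only prints the command so it can be reviewed before being run as root.

```shell
#!/bin/sh
# Print a setquota command for a user, converting block limits
# from MiB to 1 KiB blocks. Nothing is executed.
# Arguments: user, soft MiB, hard MiB, inode soft, inode hard, file system.
# The function name is illustrative only.
quota_cmd() {
    user=$1; soft_mib=$2; hard_mib=$3; isoft=$4; ihard=$5; fs=$6
    echo "setquota -u $user $(( soft_mib * 1024 )) $(( hard_mib * 1024 )) $isoft $ihard $fs"
}

quota_cmd crushlinux 80 100 80 100 /data
```

The report above shows 80000K and 100000K, so the author evidently entered round decimal values in edquota; 81920 and 102400 would correspond to exactly 80 MiB and 100 MiB.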

[root@localhost chen]# repquota -uvs /data //show the quota report for the file system on /data

*** Report for user quotas on device /dev/mapper/myvg-mylv

Block grace time: 7days; Inode grace time: 7days

Space limits File limits

User used soft hard grace used soft hard grace

----------------------------------------------------------------------

root -- 16K 0K 0K 4 0 0

crushlinux -- 0K 80000K 100000K 1 80 100

#777 -- 0K 0K 0K 1 0 0

*** Status for user quotas on device /dev/mapper/myvg-mylv

Accounting: ON; Enforcement: ON

Inode: #67 (3 blocks, 3 extents)

10. Test under /data with the touch and dd commands

[crushlinux@localhost chen]$ touch /data/a{1..111} //create files to test the file-count quota

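touch: cannot touch '/data/a101': Disk quota exceeded

touch: cannot touch '/data/a102': Disk quota exceeded

touch: cannot touch '/data/a103': Disk quota exceeded

touch: cannot touch '/data/a104': Disk quota exceeded

touch: cannot touch '/data/a105': Disk quota exceeded

touch: cannot touch '/data/a106': Disk quota exceeded

touch: cannot touch '/data/a107': Disk quota exceeded

touch: cannot touch '/data/a108': Disk quota exceeded

touch: cannot touch '/data/a109': Disk quota exceeded

touch: cannot touch '/data/a110': Disk quota exceeded

touch: cannot touch '/data/a111': Disk quota exceeded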

[crushlinux@localhost chen]$ dd if=/dev/zero of=/data/qqq bs=1M count=101 //test the capacity quota

dd: error writing '/data/qqq': Disk quota exceeded

98+0 records in

97+0 records out

101711872 bytes (102 MB) copied, 0.368447 s, 276 MB/s
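dd stopped after 97 complete blocks, which is consistent with the 100000K hard limit in the quota report. Here is a sketch of the arithmetic, assuming K in the report means KiB; `max_full_blocks` is an illustrative name.

```shell
#!/bin/sh
# How many whole blocks of a given size fit under a quota limit.
# Arguments: limit in KiB, block size in KiB.
# The function name is illustrative only.
max_full_blocks() {
    limit_kib=$1
    block_kib=$2
    echo $(( limit_kib / block_kib ))
}

# Hard limit of 100000 KiB, dd block size of 1 MiB (1024 KiB).
max_full_blocks 100000 1024
# Bytes in that many 1 MiB blocks.
echo $(( $(max_full_blocks 100000 1024) * 1024 * 1024 ))
```

The second figure, 101711872 bytes, is the amount dd reports as copied; the 98th block would have pushed usage past the hard limit.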

11. Check quota usage: from the user's perspective

[crushlinux@localhost chen]$ quota -uvs crushlinux

Disk quotas for user crushlinux (uid 1001):

Filesystem space quota limit grace files quota limit grace

/dev/mapper/myvg-mylv

0K 80000K 100000K 1 80 100

12. Check quota usage: from the file system's perspective

[root@localhost chen]# repquota -uvs /data

*** Report for user quotas on device /dev/mapper/myvg-mylv

Block grace time: 7days; Inode grace time: 7days

Space limits File limits

User used soft hard grace used soft hard grace

----------------------------------------------------------------------

root -- 16K 0K 0K 4 0 0

crushlinux -- 0K 80000K 100000K 1 80 100

#777 -- 0K 0K 0K 1 0 0

*** Status for user quotas on device /dev/mapper/myvg-mylv

Accounting: ON; Enforcement: ON

Inode: #67 (3 blocks, 3 extents)