Two dependencies define the same bean, causing startup failure

Scenario

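OpenFeign and FastDFS (fastdfs-client) both register a bean named connectionManager, so Spring hits a bean-name conflict while loading bean definitions and the application fails to start.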
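
To make the clash concrete, here is a toy sketch in plain JDK Java (not Spring's actual code) of how it arises. It assumes the usual conventions: a class annotated with @Component gets a default bean name derived from its simple class name with the first letter lower-cased (the JavaBeans rule that java.beans.Introspector.decapitalize implements), so FastDFS's ConnectionManager becomes connectionManager, while a @Bean method is named after the method, which on the OpenFeign side is also connectionManager. Since Spring Boot 2.1, bean-definition overriding is disabled by default (spring.main.allow-bean-definition-overriding=false), so the second registration fails instead of silently replacing the first. The registry and the two stand-in classes are hypothetical.

```java
import java.beans.Introspector;
import java.util.LinkedHashMap;
import java.util.Map;

// Toy model of a bean registry; NOT Spring's implementation.
public class BeanNameClashDemo {

    // Default-name rule for annotated classes: "ConnectionManager" -> "connectionManager".
    static String defaultBeanName(Class<?> type) {
        return Introspector.decapitalize(type.getSimpleName());
    }

    static final class Registry {
        private final Map<String, Object> beans = new LinkedHashMap<>();
        private final boolean allowOverriding;

        Registry(boolean allowOverriding) {
            this.allowOverriding = allowOverriding;
        }

        void register(String name, Object bean) {
            if (beans.containsKey(name) && !allowOverriding) {
                throw new IllegalStateException("Bean name '" + name + "' is already registered by "
                        + beans.get(name).getClass().getSimpleName());
            }
            beans.put(name, bean); // with overriding allowed, the later definition wins
        }
    }

    // Hypothetical stand-ins for the two libraries' classes.
    static final class ConnectionManager {}          // FastDFS side: plain @Component
    static final class FeignHttpConnectionManager {} // OpenFeign side: @Bean method "connectionManager"

    public static void main(String[] args) {
        Registry registry = new Registry(false);
        registry.register("connectionManager", new FeignHttpConnectionManager());
        try {
            registry.register(defaultBeanName(ConnectionManager.class), new ConnectionManager());
        } catch (IllegalStateException e) {
            // prints: Bean name 'connectionManager' is already registered by FeignHttpConnectionManager
            System.out.println(e.getMessage());
        }
    }
}
```

Passing true to the Registry constructor mimics turning overriding back on: startup would proceed, but only one of the two definitions survives, so one library would end up without the bean it expects.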

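Solution

There are many proposed fixes online. I tried them one by one, but none of them solved the problem.

Final solution: override the library classes by redefining them in the project under the same fully-qualified class names.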

Background:

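Why the JVM loads the project's copy of a class rather than the one inside a dependency JAR: parent delegation alone does not decide this, because the project's classes and the dependency JARs are normally loaded by the same class loader (the application class loader, or Spring Boot's launcher class loader when running a packaged jar). That loader searches its classpath entries in order and uses the first match, and the project's compiled classes (target/classes, or BOOT-INF/classes inside a Spring Boot jar) normally come before the dependency JARs. Parent delegation, described below, matters in a different way: it means core JDK classes cannot be replaced by this trick.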

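The parent delegation model is the working principle of Java class loaders. When a class loader receives a request to load a class, it does not first try to load the class itself; it delegates the request to its parent loader. Every level of the hierarchy does the same, so every load request eventually reaches the top-level bootstrap class loader. Only when the parent cannot satisfy the request (the class is not found in its search scope) does the child loader try to load the class itself.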
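
The first-match rule can be observed with a small JDK-only experiment. The sketch below (an illustration, not how Maven or Spring Boot actually build their classpath) writes the same resource path into two temporary directories, one standing in for the project's compiled classes and one for the dependency JAR, and asks a URLClassLoader for it; URLClassLoader searches its URLs in the same order for classes and resources, so the entry listed first wins. It also shows parent delegation: java.lang.String is always answered by the bootstrap loader. A text resource is used instead of a .class file only so the demo needs no compilation step; the marker.txt path and directory roles are made up.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.URL;
import java.net.URLClassLoader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public class ClasspathOrderDemo {

    // Hypothetical resource path, placed in both "classpath entries".
    static final String RESOURCE = "com/github/tobato/fastdfs/conn/marker.txt";

    // Creates a fresh temp directory containing RESOURCE with the given content
    // (temp directories are left behind for simplicity).
    static Path makeRoot(String content) throws IOException {
        Path root = Files.createTempDirectory("cp");
        Path file = root.resolve(RESOURCE);
        Files.createDirectories(file.getParent());
        Files.writeString(file, content);
        return root;
    }

    // Returns the content of whichever copy the loader finds first.
    static String firstMatch() {
        try {
            Path project = makeRoot("project");    // plays the role of target/classes
            Path library = makeRoot("dependency"); // plays the role of the fastdfs-client jar
            URL[] classpath = { project.toUri().toURL(), library.toUri().toURL() };
            // A null parent means the bootstrap loader is asked first (parent delegation).
            try (URLClassLoader loader = new URLClassLoader(classpath, null)) {
                URL hit = loader.getResource(RESOURCE);
                if (hit == null) {
                    throw new IllegalStateException("resource not found");
                }
                try (InputStream in = hit.openStream()) {
                    return new String(in.readAllBytes(), StandardCharsets.UTF_8);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Parent delegation: a core JDK class is resolved by the bootstrap loader (reported as null).
    static boolean coreClassFromBootstrap() {
        try (URLClassLoader loader = new URLClassLoader(new URL[0], null)) {
            return loader.loadClass("java.lang.String").getClassLoader() == null;
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(firstMatch());             // project
        System.out.println(coreClassFromBootstrap()); // true
    }
}
```

Swapping the two entries in the classpath array makes firstMatch() return "dependency", which is the situation where a shadow copy would not take effect.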

Overall approach

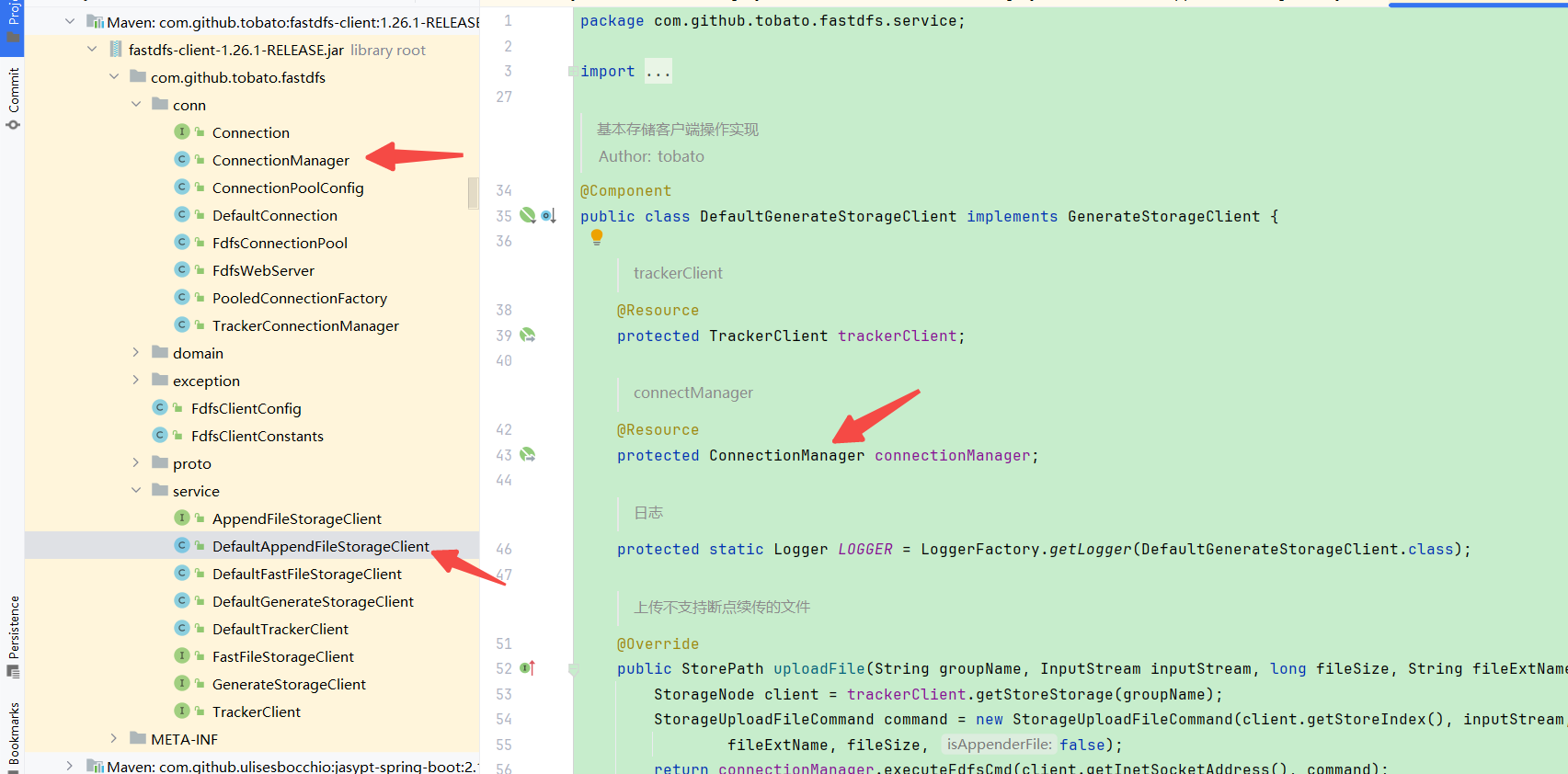

FastDFS source structure

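Because the approach is to rename FastDFS's connectionManager bean so that the names no longer collide, every class that injects that connectionManager bean must be overridden as well.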
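
Why the consumers have to change too: @Resource injects by name, using the name attribute when given and otherwise (roughly) the field name. DefaultGenerateStorageClient's field is called connectionManager, so once FastDFS's bean is renamed, an unchanged @Resource would be matched to OpenFeign's bean of that name and fail the type check. The toy lookup below reproduces that behavior; the classes are hypothetical and this is not Spring's CommonAnnotationBeanPostProcessor.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of name-based injection with a required-type check.
public class ResourceByNameDemo {

    // Hypothetical stand-ins for the two beans.
    static final class FeignConnectionManager {}
    static final class FdfsConnectionManager {}

    static final Map<String, Object> BEANS = new HashMap<>();

    // Resolve by name first, then verify the bean fits the injection point's type.
    static <T> T getBean(String name, Class<T> requiredType) {
        Object bean = BEANS.get(name);
        if (bean == null) {
            throw new IllegalStateException("No bean named '" + name + "'");
        }
        if (!requiredType.isInstance(bean)) {
            throw new IllegalStateException("Bean '" + name + "' is a "
                    + bean.getClass().getSimpleName() + ", not a " + requiredType.getSimpleName());
        }
        return requiredType.cast(bean);
    }

    public static void main(String[] args) {
        // After the rename, each library's manager lives under its own name.
        BEANS.put("connectionManager", new FeignConnectionManager());
        BEANS.put("FastDFSConnectionManager", new FdfsConnectionManager());

        // Updated injection point, like @Resource(name = "FastDFSConnectionManager").
        FdfsConnectionManager ok = getBean("FastDFSConnectionManager", FdfsConnectionManager.class);
        System.out.println("resolved " + ok.getClass().getSimpleName());

        // Un-updated injection point still resolving "connectionManager" gets OpenFeign's bean.
        try {
            getBean("connectionManager", FdfsConnectionManager.class);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```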

Code

Apart from the marked parts, everything is kept exactly the same as the library source.

ConnectionManager

Key points:

- Create the package com.github.tobato.fastdfs.conn in your own project;

- Change the bean name of ConnectionManager, e.g. @Component("FastDFSConnectionManager").

    package com.github.tobato.fastdfs.conn; // [changed] same package name as in fastdfs-client

    import java.net.InetSocketAddress;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Component;

    import com.github.tobato.fastdfs.exception.FdfsException;
    import com.github.tobato.fastdfs.proto.FdfsCommand;

    /**
     * Connection pool manager
     *
     * <pre>
     * Borrows a connection, runs the business logic on it, then returns the connection
     * </pre>
     *
     * @author tobato
     *
     */
    @Component("FastDFSConnectionManager") // [changed] explicit bean name instead of the default "connectionManager"
    public class ConnectionManager {

        /** Connection pool */
        @Autowired
        private FdfsConnectionPool pool;

        /** Logger */
        protected static final Logger LOGGER = LoggerFactory.getLogger(ConnectionManager.class);

        /**
         * Constructor
         */
        public ConnectionManager() {
            super();
        }

        /**
         * Constructor
         *
         * @param pool
         */
        public ConnectionManager(FdfsConnectionPool pool) {
            super();
            this.pool = pool;
        }

        /**
         * Get a connection and execute the command
         *
         * @param address
         * @param command
         * @return
         */
        public <T> T executeFdfsCmd(InetSocketAddress address, FdfsCommand<T> command) {
            // Get a connection
            Connection conn = getConnection(address);
            // Execute the command
            return execute(address, conn, command);
        }

        /**
         * Execute the command
         *
         * @param conn
         * @param command
         * @return
         */
        protected <T> T execute(InetSocketAddress address, Connection conn, FdfsCommand<T> command) {
            try {
                // Execute the command
                LOGGER.debug("Sending request to address {}: {}", address, command.getClass().getSimpleName());
                return command.execute(conn);
            } catch (FdfsException e) {
                throw e;
            } catch (Exception e) {
                LOGGER.error("execute fdfs command error", e);
                throw new RuntimeException("execute fdfs command error", e);
            } finally {
                try {
                    if (null != conn) {
                        pool.returnObject(address, conn);
                    }
                } catch (Exception e) {
                    LOGGER.error("return pooled connection error", e);
                }
            }
        }

        /**
         * Get a connection
         *
         * @param address
         * @return
         */
        protected Connection getConnection(InetSocketAddress address) {
            Connection conn = null;
            try {
                // Get a connection
                conn = pool.borrowObject(address);
            } catch (FdfsException e) {
                throw e;
            } catch (Exception e) {
                LOGGER.error("Unable to borrow buffer from pool", e);
                throw new RuntimeException("Unable to borrow buffer from pool", e);
            }
            return conn;
        }

        public FdfsConnectionPool getPool() {
            return pool;
        }

        public void setPool(FdfsConnectionPool pool) {
            this.pool = pool;
        }

        public void dumpPoolInfo(InetSocketAddress address) {
            LOGGER.debug("==============Dump Pool Info================");
            LOGGER.debug("Active connections {}", pool.getNumActive(address));
            LOGGER.debug("Idle connections {}", pool.getNumIdle(address));
            LOGGER.debug("Total connections borrowed {}", pool.getBorrowedCount());
            LOGGER.debug("Total connections returned {}", pool.getReturnedCount());
            LOGGER.debug("Total connections destroyed {}", pool.getDestroyedCount());
        }

    }
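
ConnectionManager.execute follows a borrow, execute, return pattern: the connection is borrowed from the pool, the command runs on it, and the finally block hands it back even when the command throws. The sketch below shows the same shape with a tiny single-threaded pool. Conn and TinyPool are hypothetical; unlike FdfsConnectionPool, which is keyed by InetSocketAddress (as the borrowObject(address) and returnObject(address, conn) calls in ConnectionManager show), it keeps one unkeyed queue and never validates or closes connections.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Function;

// Sketch of the borrow -> execute -> return shape; NOT FdfsConnectionPool.
public class BorrowReturnDemo {

    /** Hypothetical connection type. */
    static final class Conn {
        final int id;

        Conn(int id) {
            this.id = id;
        }
    }

    /** Single-threaded, unkeyed pool that creates connections on demand. */
    static final class TinyPool {
        private final Deque<Conn> idle = new ArrayDeque<>();
        private int created;
        private int returned;

        Conn borrow() {
            Conn c = idle.poll();
            return c != null ? c : new Conn(++created);
        }

        void giveBack(Conn c) {
            returned++;
            idle.push(c);
        }

        int idleCount() {
            return idle.size();
        }

        int returnedCount() {
            return returned;
        }
    }

    final TinyPool pool = new TinyPool();

    /** Borrow a connection, run the command, and always return the connection. */
    <T> T execute(Function<Conn, T> command) {
        Conn conn = pool.borrow();
        try {
            return command.apply(conn);
        } finally {
            // Runs on success and on exception, like the finally block in ConnectionManager.execute.
            pool.giveBack(conn);
        }
    }

    public static void main(String[] args) {
        BorrowReturnDemo demo = new BorrowReturnDemo();
        System.out.println("result: " + demo.execute(c -> "used conn " + c.id));
        try {
            demo.execute(c -> {
                throw new IllegalStateException("command failed on conn " + c.id);
            });
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        // Both calls reused the same connection and both returned it.
        System.out.println("returned=" + demo.pool.returnedCount() + ", idle=" + demo.pool.idleCount()); // prints returned=2, idle=1
    }
}
```

If the return call were placed after command.apply instead of in finally, a failing command would leak its connection, and a real pool would eventually run out of connections.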

DefaultGenerateStorageClient

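Key points:

- Create the package com.github.tobato.fastdfs.service in your own project;

- Because the bean name has changed, the injection point must name the new bean explicitly, e.g. @Resource(name = "FastDFSConnectionManager").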

    package com.github.tobato.fastdfs.service; // [changed] same package name as in fastdfs-client

    import java.io.InputStream;
    import java.util.Set;

    import javax.annotation.Resource;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.context.annotation.Primary;
    import org.springframework.stereotype.Component;

    import com.github.tobato.fastdfs.conn.ConnectionManager;
    import com.github.tobato.fastdfs.domain.FileInfo;
    import com.github.tobato.fastdfs.domain.MateData;
    import com.github.tobato.fastdfs.domain.StorageNode;
    import com.github.tobato.fastdfs.domain.StorageNodeInfo;
    import com.github.tobato.fastdfs.domain.StorePath;
    import com.github.tobato.fastdfs.proto.storage.DownloadCallback;
    import com.github.tobato.fastdfs.proto.storage.StorageDeleteFileCommand;
    import com.github.tobato.fastdfs.proto.storage.StorageDownloadCommand;
    import com.github.tobato.fastdfs.proto.storage.StorageGetMetadataCommand;
    import com.github.tobato.fastdfs.proto.storage.StorageQueryFileInfoCommand;
    import com.github.tobato.fastdfs.proto.storage.StorageSetMetadataCommand;
    import com.github.tobato.fastdfs.proto.storage.StorageUploadFileCommand;
    import com.github.tobato.fastdfs.proto.storage.StorageUploadSlaveFileCommand;
    import com.github.tobato.fastdfs.proto.storage.enums.StorageMetdataSetType;

    /**
     * Basic storage client operations
     *
     * @author tobato
     *
     */
    @Component
    @Primary
    public class DefaultGenerateStorageClient implements GenerateStorageClient {

        /** trackerClient */
        @Resource
        protected TrackerClient trackerClient;

        /** connectManager */
        @Resource(name = "FastDFSConnectionManager") // [changed] inject the renamed bean by name
        protected ConnectionManager connectionManager;

        /** Logger */
        protected static Logger LOGGER = LoggerFactory.getLogger(DefaultGenerateStorageClient.class);

        /**
         * Upload a file that does not support resumable upload
         */
        @Override
        public StorePath uploadFile(String groupName, InputStream inputStream, long fileSize, String fileExtName) {
            StorageNode client = trackerClient.getStoreStorage(groupName);
            StorageUploadFileCommand command = new StorageUploadFileCommand(client.getStoreIndex(), inputStream,
                    fileExtName, fileSize, false);
            return connectionManager.executeFdfsCmd(client.getInetSocketAddress(), command);
        }

        /**
         * Upload a slave file
         */
        @Override
        public StorePath uploadSlaveFile(String groupName, String masterFilename, InputStream inputStream, long fileSize,
                String prefixName, String fileExtName) {
            StorageNodeInfo client = trackerClient.getUpdateStorage(groupName, masterFilename);
            StorageUploadSlaveFileCommand command = new StorageUploadSlaveFileCommand(inputStream, fileSize, masterFilename,
                    prefixName, fileExtName);
            return connectionManager.executeFdfsCmd(client.getInetSocketAddress(), command);
        }

        /**
         * Get metadata
         */
        @Override
        public Set<MateData> getMetadata(String groupName, String path) {
            StorageNodeInfo client = trackerClient.getFetchStorage(groupName, path);
            StorageGetMetadataCommand command = new StorageGetMetadataCommand(groupName, path);
            return connectionManager.executeFdfsCmd(client.getInetSocketAddress(), command);
        }

        /**
         * Overwrite metadata
         */
        @Override
        public void overwriteMetadata(String groupName, String path, Set<MateData> metaDataSet) {
            StorageNodeInfo client = trackerClient.getUpdateStorage(groupName, path);
            StorageSetMetadataCommand command = new StorageSetMetadataCommand(groupName, path, metaDataSet,
                    StorageMetdataSetType.STORAGE_SET_METADATA_FLAG_OVERWRITE);
            connectionManager.executeFdfsCmd(client.getInetSocketAddress(), command);
        }

        /**
         * Merge metadata
         */
        @Override
        public void mergeMetadata(String groupName, String path, Set<MateData> metaDataSet) {
            StorageNodeInfo client = trackerClient.getUpdateStorage(groupName, path);
            StorageSetMetadataCommand command = new StorageSetMetadataCommand(groupName, path, metaDataSet,
                    StorageMetdataSetType.STORAGE_SET_METADATA_FLAG_MERGE);
            connectionManager.executeFdfsCmd(client.getInetSocketAddress(), command);
        }

        /**
         * Query file information
         */
        @Override
        public FileInfo queryFileInfo(String groupName, String path) {
            StorageNodeInfo client = trackerClient.getFetchStorage(groupName, path);
            StorageQueryFileInfoCommand command = new StorageQueryFileInfoCommand(groupName, path);
            return connectionManager.executeFdfsCmd(client.getInetSocketAddress(), command);
        }

        /**
         * Delete a file
         */
        @Override
        public void deleteFile(String groupName, String path) {
            StorageNodeInfo client = trackerClient.getUpdateStorage(groupName, path);
            StorageDeleteFileCommand command = new StorageDeleteFileCommand(groupName, path);
            connectionManager.executeFdfsCmd(client.getInetSocketAddress(), command);
        }

        /**
         * Download the whole file
         */
        @Override
        public <T> T downloadFile(String groupName, String path, DownloadCallback<T> callback) {
            long fileOffset = 0;
            long fileSize = 0;
            return downloadFile(groupName, path, fileOffset, fileSize, callback);
        }

        /**
         * Download part of a file
         */
        @Override
        public <T> T downloadFile(String groupName, String path, long fileOffset, long fileSize,
                DownloadCallback<T> callback) {
            StorageNodeInfo client = trackerClient.getFetchStorage(groupName, path);
            // [fixed] pass fileOffset/fileSize through; the copied source passed 0, 0 and ignored them
            StorageDownloadCommand<T> command = new StorageDownloadCommand<T>(groupName, path, fileOffset, fileSize,
                    callback);
            return connectionManager.executeFdfsCmd(client.getInetSocketAddress(), command);
        }

        public void setTrackerClientService(TrackerClient trackerClientService) {
            this.trackerClient = trackerClientService;
        }

        public void setConnectionManager(ConnectionManager connectionManager) {
            this.connectionManager = connectionManager;
        }

    }
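
To check that both shadow copies are really the ones in use, you can print where each class was loaded from. The helper below is a small sketch based on getProtectionDomain().getCodeSource(); for a shadowed class the location should be the project's compiled-classes directory (or BOOT-INF/classes inside a Spring Boot jar) rather than the fastdfs-client jar. JDK classes from the bootstrap loader usually have no code source, which the helper reports as <bootstrap/unknown>. The class name WhereLoaded and that marker string are made up for this example.

```java
import java.net.URL;
import java.security.CodeSource;

public class WhereLoaded {

    // Returns the classpath entry a class was loaded from, or a marker when the
    // class has no code source (typical for JDK classes from the bootstrap loader).
    static String whereLoadedFrom(Class<?> type) {
        CodeSource source = type.getProtectionDomain().getCodeSource();
        URL location = source == null ? null : source.getLocation();
        return location == null ? "<bootstrap/unknown>" : location.toString();
    }

    public static void main(String[] args) throws ClassNotFoundException {
        // Run with the application's classpath and pass the shadowed class, e.g.
        // com.github.tobato.fastdfs.conn.ConnectionManager
        String name = args.length > 0 ? args[0] : "java.lang.String";
        System.out.println(name + " -> " + whereLoadedFrom(Class.forName(name)));
    }
}
```

Inside the application, calling whereLoadedFrom(ConnectionManager.class) and whereLoadedFrom(DefaultGenerateStorageClient.class) once at startup and logging the result gives the same check without a separate run.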