最简单的VSLAM/BA

首先

引自 https://www.cnblogs.com/Jessica-jie/p/7739775.html

明确输入

输入为某个初始位姿(可以是轮速计+IMU积分、GPS定位给的、纯IMU积分给的),连续的图像,真值点云(对比用)

明确输出

相机的位姿

关键点

BA、三角化、特征提取和匹配。

其次

目标拆解

三角化:输入(相邻两帧图像的关联像素点、与其初始位姿) 输出相机坐标系下的3D点

特征提取:任意方式即可(superpoint ORB SIFT 等等)

特征匹配:以上descrip(描述子-特征计算表述值)的匹配

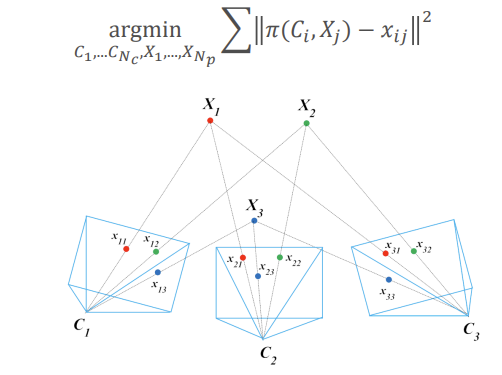

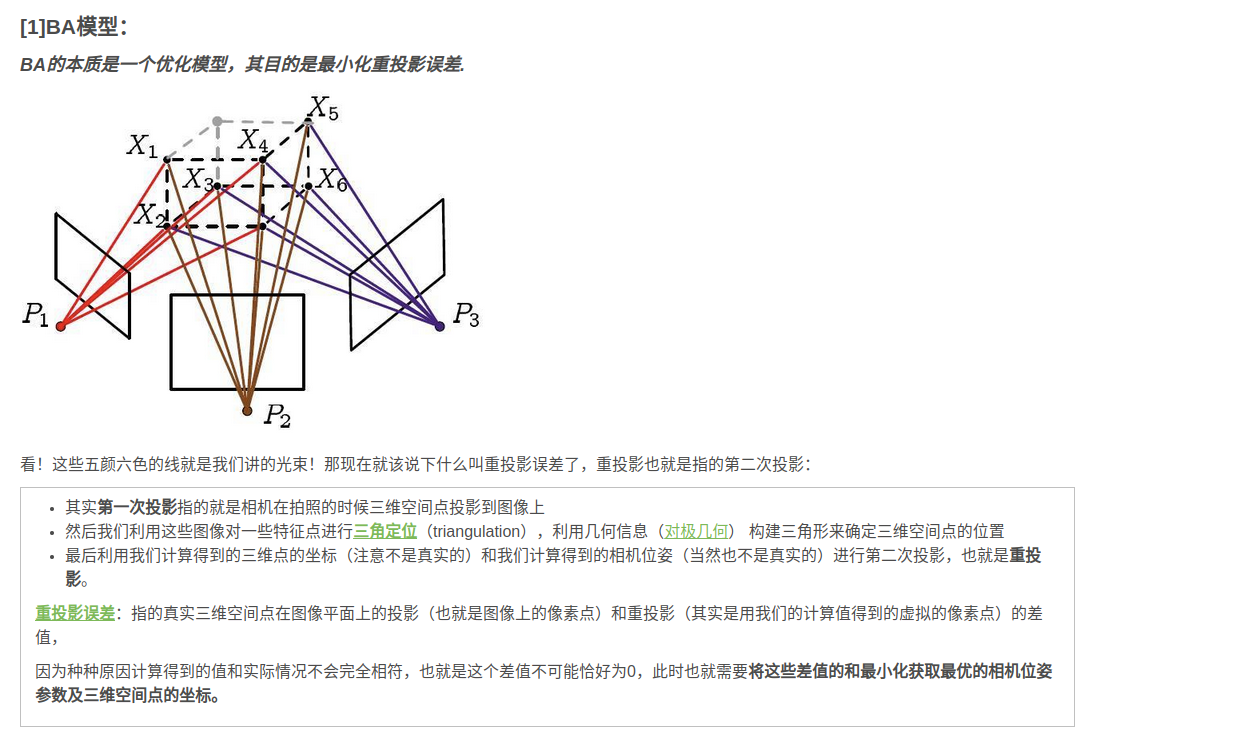

BA:这个最难需要的输入最多,BA的目标就是得到更准确的3D点/位姿。目标是求解某个准确的3D点,首先需要第一帧观测到此和后面几帧观测到的二维像素特征点(对应图像上的2D点) ,然后你还需要某一帧(一般是接下来的第二帧、这个不影响、因为我们优化的就是这个不准确值) 和第一帧三角化的3D点,最后需要这几帧的位姿(如果有位姿容器直接记录于第几帧观测到即可)。(3(3D的xyz) + 2(px py)*n + 6(x y z r p y)*n)* m

特征点管理:这个同样很重要,因为没有看懂其他代码的。。我随便写的,大概就是观测到1、2两帧后就完成初始化->得到地图点mpsa(map points all)记录第一帧的3D点、第几帧观测到的、二维像素坐标,然后2、3两帧继续推断地图点mpsn(map points now),然后用mpsn的descrip(描述子)与mpsa的descrip(描述子)匹配,匹配上的就不再重复加入 但是记录mpsa匹配点在第几帧观测到的还会附带更新观测到这个点的二维像素坐标,没配上的直接塞到mpsa中,然后重复直到到达滑窗最大值。过了滑窗/mpsa数量达到一定值后开始删除旧的。

三角化代码:参考VINS

void TriangulatePoint(Eigen::Matrix<double, 3, 4> &proj0, Eigen::Matrix<double, 3, 4> &proj1,

cv::Point2f &point0, cv::Point2f &point1, Eigen::Vector3d &point_3d) {

Eigen::Matrix4d design_matrix = Eigen::Matrix4d::Zero();

design_matrix.row(0) = point0.x * proj0.row(2) - proj0.row(0);

design_matrix.row(1) = point0.y * proj0.row(2) - proj0.row(1);

design_matrix.row(2) = point1.x * proj1.row(2) - proj1.row(0);

design_matrix.row(3) = point1.y * proj1.row(2) - proj1.row(1);

Eigen::Vector4d triangulated_point;

triangulated_point =

design_matrix.jacobiSvd(Eigen::ComputeFullV).matrixV().rightCols<1>();

point_3d(0) = triangulated_point(0) / triangulated_point(3);

point_3d(1) = triangulated_point(1) / triangulated_point(3);

point_3d(2) = triangulated_point(2) / triangulated_point(3);

}

//pro设为3*4方便计算 与4*4 = 3*3旋转矩阵 + 3*1位移 没区别特征提取随便采用的SIFT 匹配描述子采用的余弦值大小

Frame GetFrameKeypoint(cv::Mat im, int frame_id) {

//undistort pic

cv::Ptr<cv::SIFT> detector = cv::SIFT::create(500);

std::vector<cv::KeyPoint> keyPoint;

cv::Mat descriptors;

detector->detectAndCompute(im, cv::Mat(), keyPoint, descriptors); //descriptors size[128 x 500] cols width 128 rows height 500

std::vector<std::vector<std::pair<std::size_t, cv::KeyPoint>>> keyPointv;

keyPointv.resize(ngridcells);

std::vector<Keypoint> vgridkps;

Frame frame;

for(std::size_t i{0U}; i < keyPoint.size(); i++) {

keyPointv[GetPointCell(keyPoint[i].pt)].push_back(std::make_pair(i, keyPoint[i]));

}

int kpid{0};

for(std::size_t i{0U}; i < keyPointv.size(); i++) {

if(keyPointv[i].size() > 1) {

std::sort(keyPointv[i].begin(), keyPointv[i].end(), compare_response);

if(keyPointv[i][0].second.response > 0.04){

Keypoint kps;

kps.lmid = kpid;

kps.px = keyPointv[i][0].second.pt;

kps.desc = descriptors.row(static_cast<int>(keyPointv[i][0].first));

vgridkps.push_back(kps);

kpid ++;

// cv::circle(im, keyPointv[i][0].second.pt, 1, cv::Scalar(255, 0, 0), cv::FILLED);

}

}

}

std::cout << "get keypoints" << kpid << std::endl;

frame.kfid_ = frame_id;

frame.vgridkps_ = vgridkps;

// vgrid 得到在某个gird的keypoint

return frame;

}

double DescriptorDistance(const Eigen::Matrix<double, 1, 128> &a, const Eigen::Matrix<double, 1, 128> &b){

Eigen::Matrix<double, 1, 128> a_n{a/a.norm()};

Eigen::Matrix<double, 1, 128> b_n{b/b.norm()};

Eigen::Matrix<double, 128, 1> an_t{a_n.transpose()};

return b_n*an_t;

}BA 同样参考VINS

struct PointReprojectionError{

public:

// observation is undistored

PointReprojectionError(cv::Point2f observed_in, Eigen::Matrix3d camera_K_in, Eigen::Vector4d camera_D_in, Eigen::Matrix4d Trans_in) :

observed(observed_in), camera_K(camera_K_in), camera_D(camera_D_in), Trans(Trans_in) {}

template<typename T>

bool operator()(const T* point3d, T* residuals) const {

Eigen::Matrix<T, 3, 3> camera_R;

camera_R << T(Trans(0, 0)), T(Trans(0, 1)), T(Trans(0, 2)),

T(Trans(1, 0)), T(Trans(1, 1)), T(Trans(1, 2)),

T(Trans(2, 0)), T(Trans(2, 1)), T(Trans(2, 2));

Eigen::Matrix<T, 3, 1> point3d_V{point3d[0], point3d[1], point3d[2]};

point3d_V = camera_R*point3d_V;

point3d_V.x() += T(Trans(0, 3));

point3d_V.y() += T(Trans(1, 3));

point3d_V.z() += T(Trans(2, 3));

T fx = (T)camera_K(0, 0);

T fy = (T)camera_K(1, 1);

T cx = (T)camera_K(0, 2);

T cy = (T)camera_K(1, 2);

T keypoint2d_x = fx * point3d_V[0]/point3d_V[2] + cx;

T keypoint2d_y = fy * point3d_V[1]/point3d_V[2] + cy;

residuals[0] = pow(keypoint2d_x - T(observed.x), 2);

residuals[1] = pow(keypoint2d_y - T(observed.y), 2);

return true;

}

static ceres::CostFunction* Create(cv::Point2f observed_in, Eigen::Matrix3d camera_K_in, Eigen::Vector4d camera_D_in, Eigen::Matrix4d Trans_in) {

return (new ceres::AutoDiffCostFunction<PointReprojectionError, 2, 3>(new PointReprojectionError(observed_in, camera_K_in, camera_D_in, Trans_in)));

}

private:

cv::Point2f observed;

Eigen::Matrix3d camera_K;

Eigen::Vector4d camera_D;

Eigen::Matrix4d Trans;

};特征点管理 参考的OV2SLAM(真的很想入门这个SLAM 大概是kitti排名单目第一的水平)

首先需三个struct 特征点Kepoint 帧Frame 地图点Mappoint

Keypoint 需要记录二维像素坐标、描述子、是否和Map点关联上了(retracked)、第几个特征点(id)

Frame 需要记录所有Keypoint、是第几帧

Mappoint 需要记录第几个地图点(id)、3D点坐标、被哪些帧看到了(set_kfid)、在某一帧内是第几个特征点(map_kf_desc)

用以下容器索引

或建立以下三个Struct

struct Keypoint {

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

int id;

cv::Point2f px;

int scale;

cv::Mat desc;

bool is_retracked;

Keypoint() : id(-1), scale(0), is_retracked(false)

{}

};class Frame {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

Frame() {};

Frame(const Frame &F) {

}

Frame& operator=(const Frame& F){

kfid_ = F.kfid_;

vgridkps_ = F.vgridkps_;

return *this;

}

std::vector<Keypoint> vgridkps_; //记录所有提取到的特征点

int kfid_; //记录是第几帧

};class MapPoint {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

MapPoint() {}

MapPoint(const MapPoint& mp){

id_ = mp.id_;

set_kfids_ = mp.set_kfids_;

ptxyz_ = mp.ptxyz_;

kfid_ = mp.kfid_;

invdepth_ = mp.invdepth_;

desc_ = mp.desc_;

map_kf_desc_ = mp.map_kf_desc_;

}

// MapPoint id

int id_;

// Set of observed KF ids

std::set<int> set_kfids_;

// 3D position

Eigen::Vector3d ptxyz_;

// Anchored position

int kfid_;

double invdepth_;

// Mean desc and list of descs

cv::Mat desc_;

std::unordered_map<int, cv::Mat> map_kf_desc_;

};首先我有两个vector容器 记录了到过当前的GPS坐标点inspos 图像picv->frame_all

关联关系:3D点:Mappoint.ptxyz_ 被哪些帧看到了:set_kfids_ 帧对应的二维坐标:frame_all[set_kfids_].vgridkps[map_kf_desc_].px翻译成人话就是 所有图像提取的过特征的帧里面 对应的mappoint保存的特征点/描述子 ID(这两者是一一对应的) 对应的二维像素点

void GetPoints(std::vector<std::pair<double, cv::Mat>> picv, std::vector<ins> ins_data,

const cv::Mat& K1, const cv::Mat& D1, Eigen::Matrix4d extrinsic) {

int nlmid{0};

int mpsid{0};

while(pcur < picv.size() - 1){

std::cout << pcur << "pari \n";

if(first_frame) {

std::unordered_map<int, MapPoint> map_pssigle;

Frame first_fr;

GetMapPoints(picv, ins_data, first_fr, K1, D1, extrinsic, map_pssigle);

map_pkfs.insert({pcur, first_fr});

for(std::unordered_map<int, MapPoint>::iterator its = map_pssigle.begin(); its != map_pssigle.end(); its++) {

map_psall.insert({mpsid, its->second});

mpkps.insert({mpsid, first_fr.vgridkps_[its->second.map_kf_desc_.begin()->first]});

mpsid ++;

}

set_kfids.emplace(pcur);

first_frame = false;

std::cout << "init \n";

} else {

// match to map

cv::Mat img{picv[pcur].second.clone()};

std::unordered_map<int, MapPoint> map_pssigle;

Frame fr;

GetMapPoints(picv, ins_data, fr, K1, D1, extrinsic, map_pssigle);

std::cout << "get Frame and Mappoints \n";

map_pkfs.insert({pcur, fr});

std::cout << "track begin \n";

int match_size{0};

for(std::unordered_map<int, MapPoint>::iterator ita = map_psall.begin(); ita != map_psall.end(); ita++){

double bestDist{0.0};

std::size_t bestIdx{0};

Eigen::Matrix<double, 1, 128> descriptorsmap;

// std::cout << ita->first << " ";

cv::cv2eigen(ita->second.desc_, descriptorsmap);

for(std::unordered_map<int, MapPoint>::iterator its = map_pssigle.begin(); its != map_pssigle.end(); its++) {

Eigen::Matrix<double, 1, 128> descriptorskp;

cv::cv2eigen(its->second.desc_, descriptorskp);

double dist = DescriptorDistance(descriptorsmap, descriptorskp);

if(dist > bestDist){

bestDist = dist;

bestIdx = its->first;

}

}

if(bestDist > 0.90) { //matched //cv匹配

Eigen::Matrix4d posecur;

InstoPose(ins_data[pcur], posecur);

Eigen::Vector3d points3d;

set_kfids.emplace(pcur);

map_psall[ita->first].set_kfids_.emplace(pcur);

map_psall[ita->first].map_kf_desc_.insert({bestIdx, map_pssigle.at(bestIdx).desc_});

map_psall[ita->first].desc_ = map_pssigle.at(bestIdx).desc_;

map_pssigle.at(bestIdx).isobs_ = true;

// 存n个frame id

// 存n个 keypoints id

// 存一个descripters

// 存一个point3d

// 加入新观测

// if( 0.9 * secdist < bestdist ) {

// bestid = -1;

// }

//

match_size ++;

cv::circle(img, fr.vgridkps_[bestIdx].px, 5, cv::Scalar(255, 0, 0), cv::FILLED);

} else {

// map_psall.erase(ita->first);

}

}

std::cout << match_size << " points tracked\n";

for(std::unordered_map<int, MapPoint>::iterator its = map_pssigle.begin(); its != map_pssigle.end(); its++) {

if(map_pssigle.at(its->first).isobs_ == false){

map_psall.insert({mpsid, its->second});

mpkps.insert({mpsid, fr.vgridkps_[its->second.map_kf_desc_.begin()->first]});

mpsid ++;

}

}

cv::imwrite("./" + std::to_string(pcur) + ".jpeg", img);

}

pcur ++;

}串起来

callback(picin, posin){

inspos = slerpins2pos(posin, picin);

vector<pair<mat, pos>> picinsv;

picinsv.push_back({getframe(pic), inspos});

pcur = 0;

if(first_frame && picinsv.size > 2){

mpsa = triangulate(picinsv[pcur]);

pcur++;

}else {

match2map();

if(winsize > n){

pose,3Dpoints = BA();

mpsa.erase(pcur - n);

}

}

}