OS: Ubuntu 18.04

GPU: RTX 2060 Super (8 GB VRAM)

1.0 CUDA Version

1.1 CUDA Toolkit and Corresponding Driver Versions

https://docs.nvidia.com/cuda/cuda-toolkit-release-notes/index.html#id4

Use this table to find a CUDA version that is compatible with your NVIDIA driver.
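Below is a minimal Python sketch of the check that this table supports: it compares the installed driver version against the minimum driver required by a toolkit. Only the CUDA 10.2 row is filled in (CUDA 10.2.89 on Linux needs driver >= 440.33, taken from the release-notes table). The names `MIN_LINUX_DRIVER`, `version_tuple` and `driver_supports` are hypothetical and only serve as illustration.

```python
# Minimum Linux driver per CUDA toolkit, copied from the release-notes table.
# Only CUDA 10.2 is filled in here; add further rows from the table as needed.
MIN_LINUX_DRIVER = {"10.2": "440.33"}


def version_tuple(version: str) -> tuple:
    """Turn a dotted version such as '470.161.03' into (470, 161, 3)."""
    return tuple(int(part) for part in version.strip().split("."))


def driver_supports(driver_version: str, cuda_version: str) -> bool:
    """Return True if the installed driver meets the toolkit's minimum driver version."""
    minimum = MIN_LINUX_DRIVER[cuda_version]
    return version_tuple(driver_version) >= version_tuple(minimum)


print(driver_supports("470.161.03", "10.2"))  # True
print(driver_supports("418.87.01", "10.2"))   # False
```

The sketch compares integer tuples instead of raw strings. A plain string comparison would get the order wrong, for example by ranking "99.0" above "440.33".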

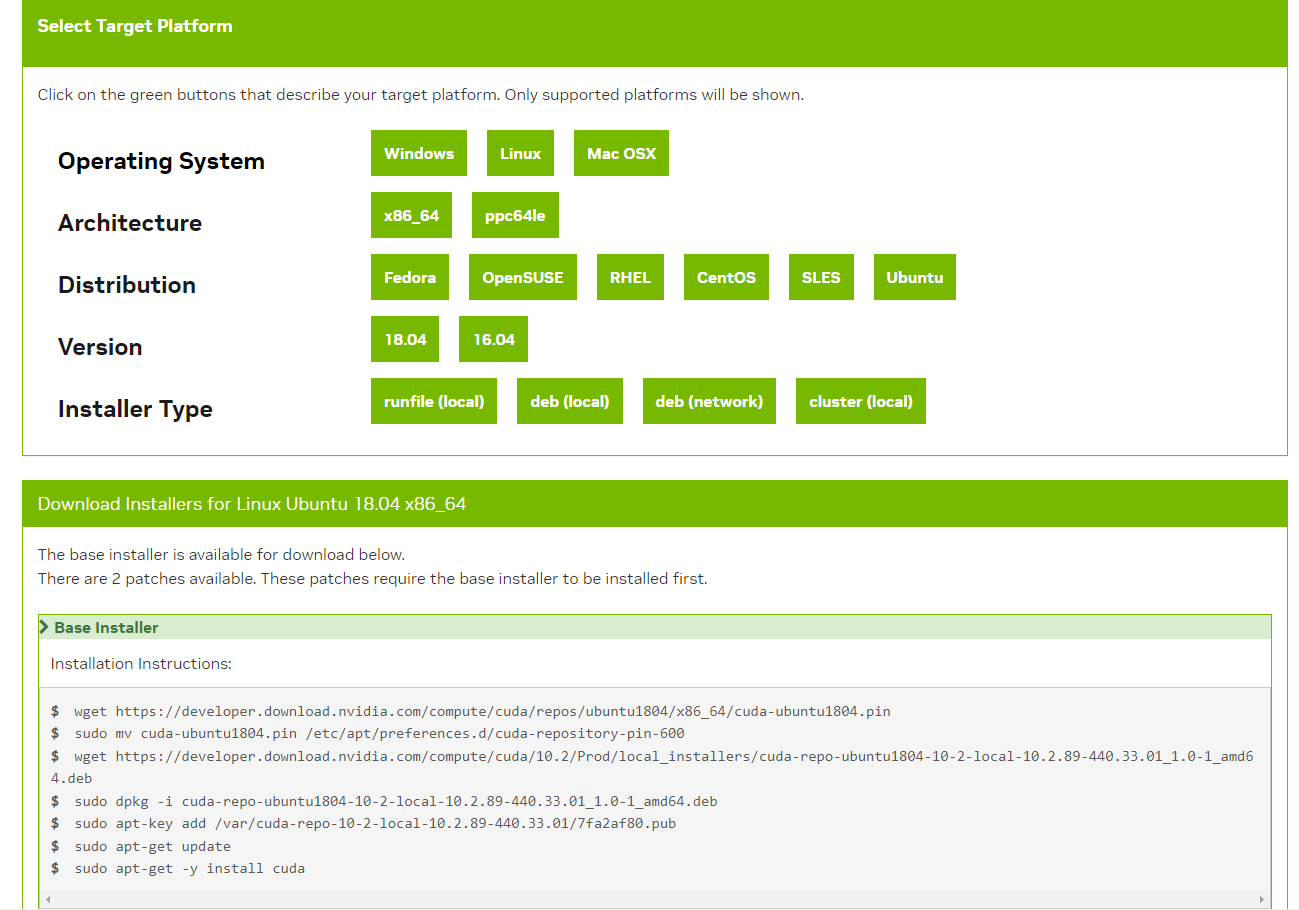

1.2 CUDA Toolkit 10.2 Download

https://developer.nvidia.com/cuda-10.2-download-archive?target_os=Linux&target_arch=x86_64&target_distro=Ubuntu&target_version=1804&target_type=deblocal

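Select your system (Linux, x86_64, Ubuntu, 18.04) and run the commands shown under Installation Instructions. The local .deb installer is recommended because it is more stable.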

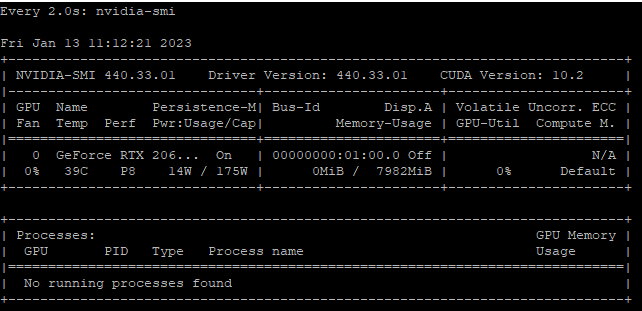

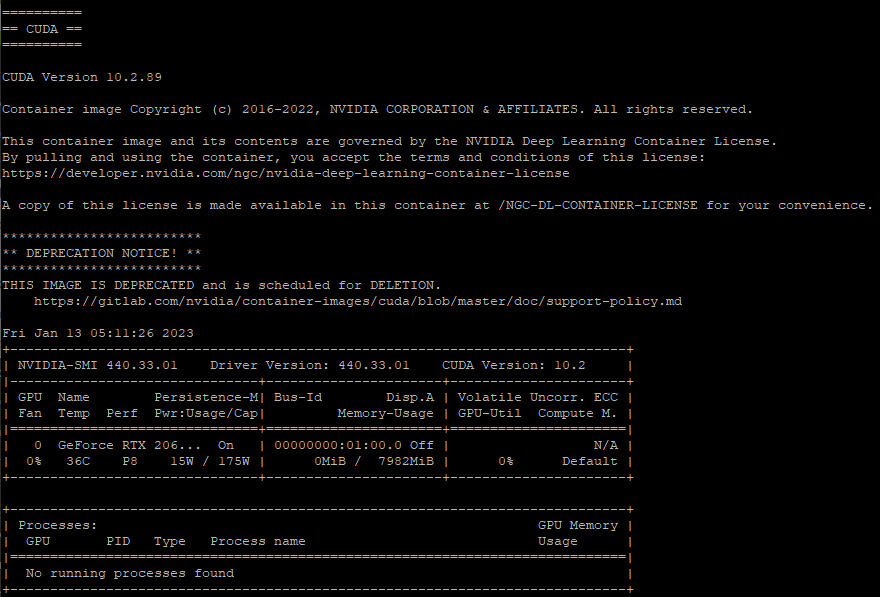

1.3 Verify the installation

nvidia-smi

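If the installation succeeded, nvidia-smi prints a full status table for the GPU. The "CUDA Version" in that table is the highest version the driver supports, not necessarily the installed toolkit. To confirm the toolkit version, run /usr/local/cuda/bin/nvcc --version.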
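For scripts, nvidia-smi also provides a machine-readable query mode. The sketch below is an illustrative helper, not part of the original guide. It asks nvidia-smi for the GPU name, driver version and total memory as CSV, then parses each row. It assumes the only field that might contain a comma is the GPU name.

```python
import subprocess


def parse_gpu_query(csv_text: str) -> list:
    """Parse rows of 'name, driver_version, memory.total' from nvidia-smi CSV output."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # rsplit keeps any comma inside the GPU name attached to the name field
        name, driver, memory = (field.strip() for field in line.rsplit(",", 2))
        gpus.append({"name": name, "driver_version": driver, "memory_total": memory})
    return gpus


def query_gpus() -> list:
    """Run nvidia-smi in query mode and return one dict per GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=name,driver_version,memory.total",
            "--format=csv,noheader",
        ],
        check=True,
        capture_output=True,
        text=True,
    )
    return parse_gpu_query(result.stdout)
```

On the host, call `query_gpus()` and read the `driver_version` of each entry.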

2.0 Install cuDNN acceleration

2.1 Register for an NVIDIA Developer account

https://developer.nvidia.com/cudnn

Opening the link prompts you to register for an NVIDIA Developer account.

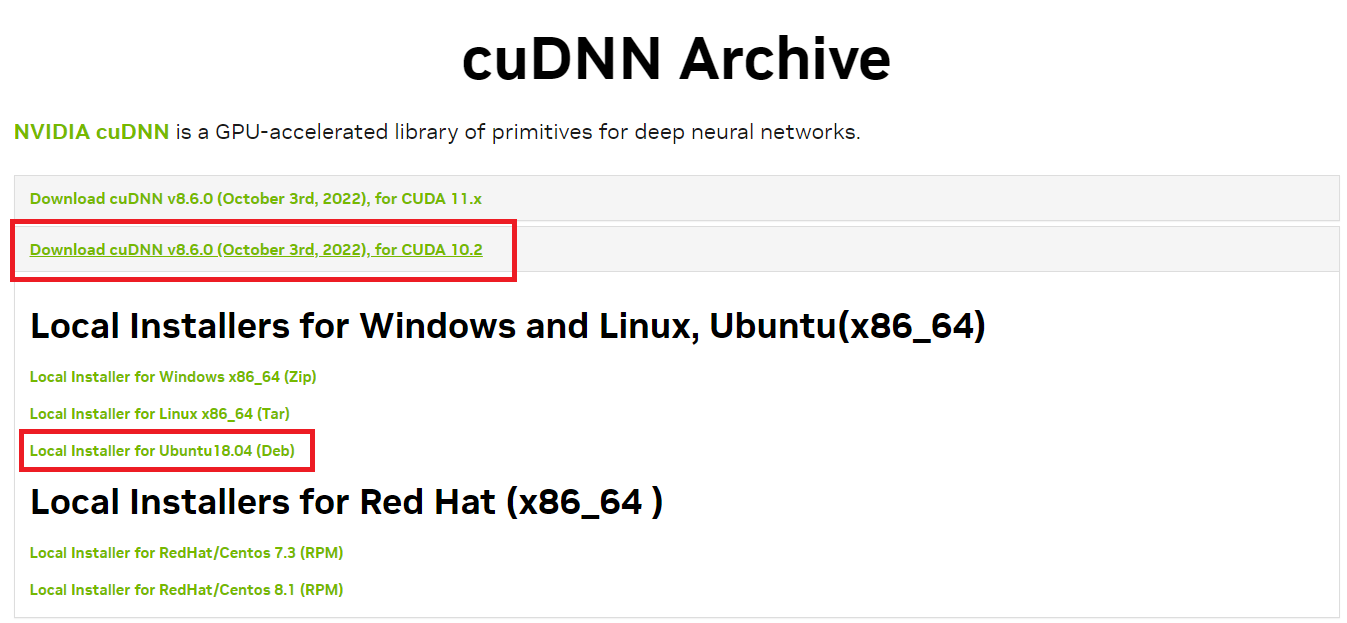

2.2 Download NVIDIA cuDNN

https://developer.nvidia.com/rdp/cudnn-archive

Select cuDNN v8.6.0 for CUDA 10.2 and download the .deb installer.

2.3 Install cuDNN

https://docs.nvidia.com/deeplearning/cudnn/install-guide/index.html#installlinux-deb

Install the downloaded package locally by following the Debian installation steps in the guide.

2.4 Verify the installation

https://docs.nvidia.com/deeplearning/cudnn/install-guide/index.html#verify

If cuDNN is installed correctly, building and running the mnistCUDNN sample described there prints the message Test passed!
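The guide prescribes the mnistCUDNN sample as the check. A lighter, complementary option is to read the version macros from the installed header. This sketch assumes a cuDNN 8 .deb install, where `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL` are defined in `cudnn_version.h`. The path `/usr/include/cudnn_version.h` is an assumption, so adjust it if your headers live elsewhere. The helper names are hypothetical.

```python
import re
from pathlib import Path

# Assumed header location for a cuDNN 8 .deb install; adjust if yours differs.
CUDNN_HEADER = Path("/usr/include/cudnn_version.h")


def parse_cudnn_version(header_text: str) -> str:
    """Extract 'major.minor.patch' from the CUDNN_* #define lines of the header."""
    parts = []
    for key in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"#define\s+{key}\s+(\d+)", header_text)
        if match is None:
            raise ValueError(f"{key} not found in header")
        parts.append(match.group(1))
    return ".".join(parts)


def installed_cudnn_version(header: Path = CUDNN_HEADER) -> str:
    """Read the header from disk and return the cuDNN version string."""
    return parse_cudnn_version(header.read_text())
```

For the package selected in 2.2, `installed_cudnn_version()` should start with "8.6".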

3.0 Install libnvidia-container

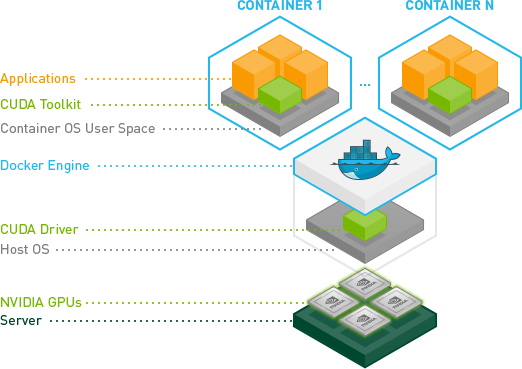

3.1 Why libnvidia-container?

https://github.com/NVIDIA/nvidia-docker

To run CUDA inside a Docker container, you need libnvidia-container.

3.2 Install libnvidia-container

https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#setting-up-nvidia-container-toolkit

distribution=$(. /etc/os-release;echo $ID$VERSION_ID) \
      && curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
      && curl -s -L https://nvidia.github.io/libnvidia-container/$distribution/libnvidia-container.list | \
            sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
            sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

This adds NVIDIA's package repository and its GPG signing key.
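The `distribution` variable in the command above is simply the OS `ID` followed by `VERSION_ID` from /etc/os-release. The simplified Python equivalent below shows which value ends up in the repository URL. It only strips double quotes and does not implement full shell quoting rules. The function name is hypothetical.

```python
def distribution_from_os_release(text: str) -> str:
    """Mimic `. /etc/os-release; echo $ID$VERSION_ID` for simple KEY=value files."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        # skip blank lines, comments and anything that is not KEY=value
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        fields[key] = value.strip('"')
    return fields.get("ID", "") + fields.get("VERSION_ID", "")
```

On Ubuntu 18.04 (`ID=ubuntu`, `VERSION_ID="18.04"`), this yields `ubuntu18.04`, so the repository list is fetched from `.../libnvidia-container/ubuntu18.04/libnvidia-container.list`. To try it on a real system, call `distribution_from_os_release(open("/etc/os-release").read())`.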

sudo apt-get update

sudo apt-get install -y nvidia-container-toolkit

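This installs nvidia-container-toolkit. Afterwards, restart the Docker daemon (sudo systemctl restart docker) so the change takes effect.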

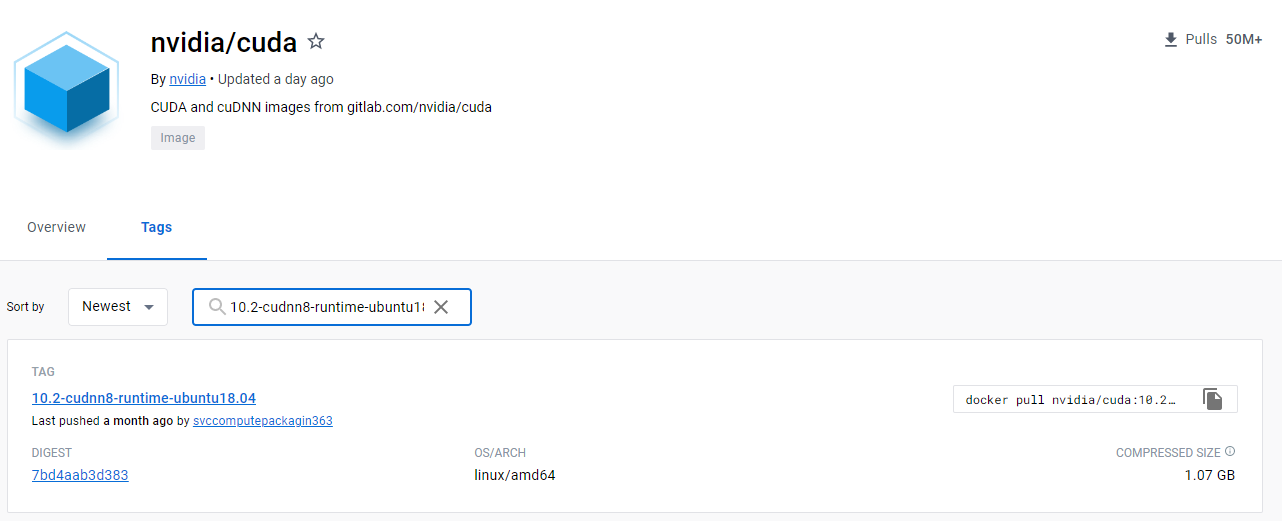

3.3 Verify the installation

https://hub.docker.com/r/nvidia/cuda/tags?page=1&name=10.2-cudnn8-runtime-ubuntu18.04

sudo docker run --rm --gpus all nvidia/cuda:10.2-cudnn8-runtime-ubuntu18.04 nvidia-smi

This pulls the Docker image and runs nvidia-smi inside the container.

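On success, nvidia-smi prints the GPU status table from inside the container, which shows that the Docker container can use the GPU with CUDA 10.2.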

4.0 Install diffusers

https://github.com/huggingface/diffusers

diffusers is a pipeline library for Stable Diffusion (SD) models. It is the library that the official SD model card recommends.

4.1 Edit the Dockerfile

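The current convention is not to declare every dependency explicitly in the Dockerfile and to install the libraries later via setup.py instead. This guide follows the official Dockerfile in that respect.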

~/diffusers/docker/diffusers-pytorch-cuda/Dockerfile

FROM nvidia/cuda:10.2-cudnn8-runtime-ubuntu18.04
LABEL maintainer="Hugging Face"
LABEL repository="diffusers"

ENV DEBIAN_FRONTEND=noninteractive

RUN apt update && \
    apt install -y bash \
                   build-essential \
                   git \
                   git-lfs \
                   curl \
                   ca-certificates \
                   libsndfile1-dev \
                   python3.8 \
                   python3-pip \
                   python3.8-venv \
                   python3-venv && \
    rm -rf /var/lib/apt/lists

# link libcudart.so.10.2 to libcudart.so.10.1
RUN ln -s /usr/local/cuda-10.2/targets/x86_64-linux/lib/libcudart.so.10.2 /usr/lib/x86_64-linux-gnu/libcudart.so.10.1

# link python3 to python3.8
RUN rm /usr/bin/python3 && \
    ln -s /usr/bin/python3.8 /usr/bin/python3

# make sure to use venv
RUN python3 -m venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

# pre-install the heavy dependencies (these can later be overridden by the deps from setup.py)
RUN python3 -m pip install --no-cache-dir --upgrade pip && \
    python3 -m pip install --no-cache-dir \
        torch==1.10.1+cu102 \
        torchvision==0.11.2+cu102 \
        torchaudio==0.10.1 \
        -f https://download.pytorch.org/whl/cu102/torch_stable.html && \
    python3 -m pip install --no-cache-dir \
        accelerate \
        datasets \
        hf-doc-builder \
        huggingface-hub \
        diffusers \
        librosa \
        modelcards \
        numpy \
        scipy \
        tensorflow-gpu==2.3.0 \
        tensorboard \
        transformers \
        jupyterlab \
        flask

CMD ["/bin/bash"]

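Changes made to the official Dockerfile so that it runs on CUDA 10.2:

1. Change the base image to nvidia/cuda:10.2-cudnn8-runtime-ubuntu18.04.

2. Also install python3-venv, otherwise creating the venv fails.

3. Link libcudart.so.10.2 to libcudart.so.10.1. tensorflow-gpu 2.3.0 is built against CUDA 10.1 and looks for libcudart.so.10.1, which the CUDA 10.2 image does not provide.

4. Link python3 to python3.8. The default python3 on Ubuntu 18.04 is Python 3.6, which is too old for diffusers.

5. Additionally install commonly used libraries: diffusers, tensorflow-gpu, jupyterlab and flask.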
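One quick way to confirm these changes took effect is to run a short script inside the built container. The sketch below is a hypothetical helper, not part of the author's project. It reports four things:

- the interpreter version, which should be 3.8 after the symlink;
- the CUDA version PyTorch was built with;
- whether PyTorch can reach the GPU;
- how many GPUs TensorFlow lists.

Any framework that is not installed is skipped.

```python
import importlib.util
import sys


def check_environment() -> dict:
    """Report the Python version and what PyTorch / TensorFlow can see, if installed."""
    report = {
        "python": sys.version_info[:2],
        "torch_cuda": None,
        "torch_gpu": False,
        "tf_gpus": 0,
    }
    if importlib.util.find_spec("torch") is not None:
        import torch

        report["torch_cuda"] = torch.version.cuda  # "10.2" for a +cu102 wheel
        report["torch_gpu"] = torch.cuda.is_available()
    if importlib.util.find_spec("tensorflow") is not None:
        import tensorflow as tf

        report["tf_gpus"] = len(tf.config.list_physical_devices("GPU"))
    return report
```

To use it, save the function in a file in the mounted app folder, add `print(check_environment())`, and run the file with python3 inside the container started with `--gpus all`.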

cd ~/PycharmProjects/diffusers/app/outputs

sudo docker build -t diffusers/cuda/v3:10.2-cudnn8-runtime-ubuntu18.04 .

Build the Docker image.

4.2 Run diffusers

https://huggingface.co/runwayml/stable-diffusion-v1-5

from diffusers import StableDiffusionPipeline

import torch

model_id = "runwayml/stable-diffusion-v1-5"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")

Demo code from the model card.

Notes:

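import os

size_base = 64

# set this before PyTorch first uses CUDA; the allocator reads it when CUDA is initialized
os.environ["PYTORCH_CUDA_ALLOC_CONF"] = f'max_split_size_mb:{size_base}'

img_width, img_height = size_base*11, size_base*11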

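The image width and height must be multiples of 8 (here size_base*11 = 704). The model easily runs out of GPU memory, so set the size explicitly and pass it to the pipeline, e.g. pipe(prompt, height=img_height, width=img_width). The max_split_size_mb option stops PyTorch's caching allocator from splitting blocks larger than that size, which reduces memory fragmentation.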
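When the size comes from user input, rounding it to a valid value avoids the pipeline's error for sizes that are not divisible by 8. The minimal sketch below uses hypothetical helper names. It rounds down, since smaller images also use less memory, and builds the `width`/`height` keyword arguments for the pipeline call. It leaves an existing `PYTORCH_CUDA_ALLOC_CONF` untouched and only sets it when it is absent.

```python
import os

SIZE_BASE = 64  # same base value as size_base in the notes above


def snap_to_multiple_of_8(value: int) -> int:
    """Round a requested side length down to a multiple of 8, never below 8."""
    return max(8, value - value % 8)


def generation_kwargs(width: int, height: int) -> dict:
    """Build width/height keyword arguments that pass the divisible-by-8 check."""
    return {
        "width": snap_to_multiple_of_8(width),
        "height": snap_to_multiple_of_8(height),
    }


# Keep an existing setting if present; otherwise apply the allocator option.
# This must run before PyTorch first initializes CUDA.
os.environ.setdefault("PYTORCH_CUDA_ALLOC_CONF", f"max_split_size_mb:{SIZE_BASE}")

kwargs = generation_kwargs(SIZE_BASE * 11, SIZE_BASE * 11)
print(kwargs)  # {'width': 704, 'height': 704}
# With a loaded pipeline: image = pipe(prompt, **kwargs).images[0]
```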

sudo docker run --name diffusers.002 --rm --gpus all -t -v ~/PycharmProjects/diffusers/app:/app diffusers/cuda/v3:10.2-cudnn8-runtime-ubuntu18.04 python3 /app/main.py >> ~/PycharmProjects/diffusers/log/diffusers_001_`date +\%Y\%m\%d_\%H\%M\%S`.log 2>&1

Run the Docker container.
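To launch the same job from Python, for example from a scheduler, the command can be rebuilt as an argument list. This is a hypothetical launcher, not part of the author's repository. The paths, image tag and container name are copied from the command above. sudo is kept because the original command uses it, so it may prompt for a password when run non-interactively.

```python
import subprocess
from datetime import datetime
from pathlib import Path

# Paths and names copied from the shell command; adjust them to your own layout.
APP_DIR = Path.home() / "PycharmProjects/diffusers/app"
LOG_DIR = Path.home() / "PycharmProjects/diffusers/log"
IMAGE = "diffusers/cuda/v3:10.2-cudnn8-runtime-ubuntu18.04"


def docker_run_args(container_name: str) -> list:
    """argv for `docker run` with GPU access and the app folder mounted at /app."""
    return [
        "sudo", "docker", "run", "--name", container_name, "--rm",
        "--gpus", "all", "-t",
        "-v", f"{APP_DIR}:/app",
        IMAGE, "python3", "/app/main.py",
    ]


def log_path(now: datetime) -> Path:
    """Same naming scheme as `date +%Y%m%d_%H%M%S` in the shell version."""
    return LOG_DIR / f"diffusers_001_{now:%Y%m%d_%H%M%S}.log"


def run(container_name: str = "diffusers.002") -> int:
    """Run the container, appending stdout and stderr to a timestamped log file."""
    path = log_path(datetime.now())
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a") as log:
        return subprocess.run(
            docker_run_args(container_name), stdout=log, stderr=subprocess.STDOUT
        ).returncode
```

Passing an argument list instead of a shell string avoids the `\%` escaping in the original. That escaping is only needed when the command runs from crontab.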

Project Code: https://github.com/kenny-chen/ai.diffusers

5.0 Result