Scraping proxy IP addresses with Python

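This article uses the Xici proxy site (西刺代理) as an example and shows how to scrape domestic (mainland China) HTTP proxy IPs and ports with Python and store them in a CSV file.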

Fetching the page

We fetch the page with urllib.request, a submodule of the standard-library urllib package.

    import urllib.request

    # First page of Xici's domestic HTTP proxy list
    url = 'https://www.xicidaili.com/wt'
    user_agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36'

    # Request headers (user_agent: the User-Agent string sent to the server)
    headers = {
        'User-Agent': user_agent
    }

    # Fetch the page and decode the response body as UTF-8
    req = urllib.request.Request(url, headers=headers)
    page = urllib.request.urlopen(req).read().decode('utf-8')
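
The request above has no timeout and no error handling, so a slow or blocked response would hang or crash the script. A minimal sketch of a more defensive fetch follows. The helper names `build_request` and `fetch_html` and the 10-second timeout are assumptions for illustration, not part of the original code.

```python
import urllib.request

USER_AGENT = (
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36'
)


def build_request(url):
    """Build a Request that carries a browser-like User-Agent header."""
    return urllib.request.Request(url, headers={'User-Agent': USER_AGENT})


def fetch_html(url, timeout=10):
    """Return the decoded page body, or None if the request fails."""
    try:
        with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
            return resp.read().decode('utf-8')
    except OSError as exc:
        # URLError, HTTPError and socket timeouts are all subclasses of OSError.
        print(f'Request to {url} failed: {exc}')
        return None
```

Returning `None` on failure lets the caller decide whether to skip the page or retry, instead of aborting the whole crawl.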

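Parsing the page

We parse the HTML with BeautifulSoup and use the regular expression module re to match the information we need.

- Looking at Xici's HTML structure, we can see that the IPs and ports we want are all inside `<td>` tags.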

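    import re
    from bs4 import BeautifulSoup

    # Parse the HTML with BeautifulSoup (the lxml parser must be installed)
    soup = BeautifulSoup(page, 'lxml')

    # Regular expressions, written as raw strings so that \d is not read as a string escape
    # Match IP addresses
    ips = re.findall(r'td>(\d+\.\d+\.\d+\.\d+)</td', str(soup))
    # Match ports
    ports = re.findall(r'td>(\d+)</td', str(soup))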

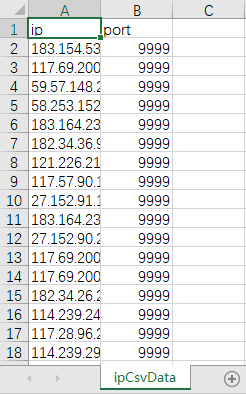
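
Matching IPs and ports with two independent `re.findall` calls relies on both lists having the same length and order. As a sketch of a stricter alternative, the pattern below captures the IP and the port from adjacent `<td>` cells in a single match. The sample HTML is a made-up fragment that assumes the port cell directly follows the IP cell; it is not copied from the real site.

```python
import re

# Hypothetical fragment imitating the table layout described above.
SAMPLE_HTML = """
<tr>
  <td class="country"><img src="cn.png"/></td>
  <td>10.0.0.1</td>
  <td>8080</td>
  <td>HTTP</td>
</tr>
<tr>
  <td class="country"><img src="cn.png"/></td>
  <td>10.0.0.2</td>
  <td>3128</td>
  <td>HTTP</td>
</tr>
"""

# One match per row: an IPv4-looking cell immediately followed by a numeric cell.
ROW_PATTERN = re.compile(r'<td>(\d+\.\d+\.\d+\.\d+)</td>\s*<td>(\d+)</td>')


def extract_proxies(html):
    """Return a list of (ip, port) tuples found in the given HTML text."""
    return ROW_PATTERN.findall(html)


print(extract_proxies(SAMPLE_HTML))
# → [('10.0.0.1', '8080'), ('10.0.0.2', '3128')]
```

Because each tuple comes from one match, an IP can never be paired with a port from a different row.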

Saving to a CSV file

Finally, we store the extracted data in a CSV file:

    import csv

    # Rows for the CSV file: one [ip, port] pair per proxy.
    # zip stops at the shorter list, so a length mismatch cannot raise IndexError.
    csvdata = [[ip, port] for ip, port in zip(ips, ports)]

    # Header row of the CSV file
    headers_title = ['ip', 'port']

    # Path of the output CSV file
    csvPath = 'ipCsvData.csv'

    # Open in 'w' (write) mode; newline='' avoids blank lines between rows on Windows
    with open(csvPath, 'w', newline='') as f:
        f_csv = csv.writer(f)
        f_csv.writerow(headers_title)
        f_csv.writerows(csvdata)
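
To check what the writer produces without touching the disk, the same header-plus-rows write can be pointed at an in-memory buffer. The sketch below does that; the function name `write_proxies` and the sample values are assumptions for illustration.

```python
import csv
import io


def write_proxies(f, ips, ports):
    """Write an 'ip,port' header and one row per (ip, port) pair to f."""
    writer = csv.writer(f)
    writer.writerow(['ip', 'port'])
    # zip pairs the lists element by element and stops at the shorter one.
    writer.writerows(zip(ips, ports))


# newline='' lets the csv module control line endings itself.
buffer = io.StringIO(newline='')
write_proxies(buffer, ['10.0.0.1', '10.0.0.2'], ['8080', '3128'])
print(buffer.getvalue())
```

The same function works unchanged with a real file opened as `open(path, 'w', newline='')`.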

Complete code

    import urllib.request
    import csv
    import re
    import os
    from bs4 import BeautifulSoup

    pageUrl = 'https://www.xicidaili.com/wt'
    pageIndex = 1
    # Fetch the first 20 pages
    pageCount = 20


    # Crawl one page and append its proxies to the CSV file.
    # pageUrl: address of the page to crawl, e.g. https://www.xicidaili.com/wt
    def crawlerPage(pageUrl):
        url = pageUrl
        user_agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36'
        headers = {
            'User-Agent': user_agent
        }

        # Fetch the page
        req = urllib.request.Request(url, headers=headers)
        page = urllib.request.urlopen(req).read().decode('utf-8')

        # Parse the HTML with BeautifulSoup
        soup = BeautifulSoup(page, 'lxml')

        # Regular expressions (raw strings)
        # Match IP addresses
        ips = re.findall(r'td>(\d+\.\d+\.\d+\.\d+)</td', str(soup))
        # Match ports
        ports = re.findall(r'td>(\d+)</td', str(soup))

        # Rows for the CSV file: one [ip, port] pair per proxy
        csvdata = [[ip, port] for ip, port in zip(ips, ports)]
        headers_title = ['ip', 'port']

        # Write to the CSV file
        csvPath = 'ipCsvData.csv'
        # If the CSV file already exists, append to it; otherwise create it and write the header first
        if os.path.exists(csvPath):
            with open(csvPath, 'a+', newline='') as f:
                f_csv = csv.writer(f)
                f_csv.writerows(csvdata)
        else:
            with open(csvPath, 'w', newline='') as f:
                f_csv = csv.writer(f)
                f_csv.writerow(headers_title)
                f_csv.writerows(csvdata)


    # Page 1 lives at the base URL; page N (N > 1) lives at <base URL>/N
    while pageIndex <= pageCount:
        if pageIndex == 1:
            crawlerPage(pageUrl)
            print('Page 1 get success!')
        else:
            crawlerPage(pageUrl + "/" + str(pageIndex))
            print('Page ' + str(pageIndex) + ' get success!')
        pageIndex += 1
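
The driver loop special-cases page 1 and sends all requests back to back. One way to tidy this is a small generator for the page URLs, sketched here under the assumption (taken from the loop above) that page 1 lives at the bare base URL and page N at `<base>/N`. The names `page_urls` and `crawl_all` are hypothetical, and the `time.sleep` pause with its one-second default is an addition to reduce load on the server, not something the original code does.

```python
import time


def page_urls(base_url, page_count):
    """Yield the URL of each page from 1 to page_count."""
    for page in range(1, page_count + 1):
        yield base_url if page == 1 else base_url + '/' + str(page)


def crawl_all(base_url, page_count, handle_page, delay=1.0):
    """Call handle_page(url) for every page, pausing between requests."""
    for number, url in enumerate(page_urls(base_url, page_count), start=1):
        handle_page(url)
        print('Page ' + str(number) + ' get success!')
        if number < page_count:
            time.sleep(delay)


print(list(page_urls('https://www.xicidaili.com/wt', 3)))
```

Passing the page handler in as a parameter means a call such as `crawl_all(pageUrl, pageCount, crawlerPage)` would reuse the function defined above without modifying it.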