每日总结

Mapreduce实例——排序

依赖:

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>3.2.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-app</artifactId>

<version>3.2.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>3.2.0</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.30</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.2.0</version>

</dependency>

实验代码:

package mapreduce;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class OneSort {

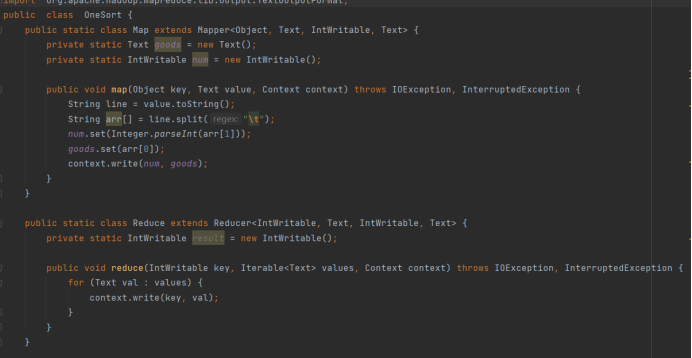

public static class Map extends Mapper<Object, Text, IntWritable, Text> {

private static Text goods = new Text();

private static IntWritable num = new IntWritable();

public void map(Object key, Text value, Context context) throws IOException, InterruptedException {

String line = value.toString();

String arr[] = line.split("\t");

num.set(Integer.parseInt(arr[1]));

goods.set(arr[0]);

context.write(num, goods);

}

}

public static class Reduce extends Reducer<IntWritable, Text, IntWritable, Text> {

private static IntWritable result = new IntWritable();

public void reduce(IntWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

for (Text val : values) {

context.write(key, val);

}

}

}

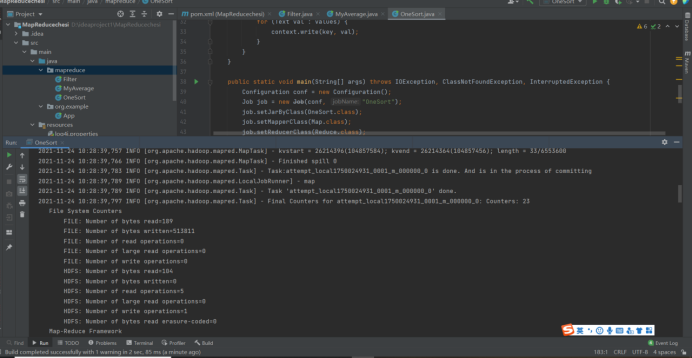

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf = new Configuration();

Job job = new Job(conf, "OneSort");

job.setJarByClass(OneSort.class);

job.setMapperClass(Map.class);

job.setReducerClass(Reduce.class);

job.setOutputKeyClass(IntWritable.class);

job.setOutputValueClass(Text.class);

job.setInputFormatClass(TextInputFormat.class);

job.setOutputFormatClass(TextOutputFormat.class);

Path in = new Path("hdfs://hadoop102:8020/mymapreduce2/in/goods_visit1");

Path out = new Path("hdfs://hadoop102:8020/mymapreduce2/out2");

FileInputFormat.addInputPath(job, in);

FileOutputFormat.setOutputPath(job, out);

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· 阿里巴巴 QwQ-32B真的超越了 DeepSeek R-1吗?

· 如何调用 DeepSeek 的自然语言处理 API 接口并集成到在线客服系统

· 【译】Visual Studio 中新的强大生产力特性

· 2025年我用 Compose 写了一个 Todo App

2020-12-01 每日总结62