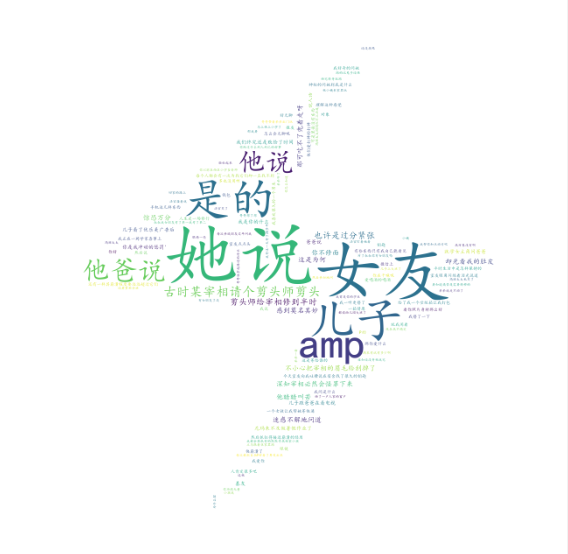

爬虫的使用(文字)+词频统计+词云

http://www.haha56.net/xiaohua/gushi/

以上网址自选两个,选择其中一个网址爬取文字并做词频和词云分析,选择另一个网址爬取图片内容。

import requests

import re

import jieba

import wordcloud

from imageio import imread

request = requests.get('http://www.haha56.net/xiaohua/gushi/')

request.encoding='gb2312'

data = request.text

link = re.findall('<a href="(.*?.html)" target="_blank">',data)

for i in link:

if i == 'http://www.haha56.net/a/2016/01/12159.html':

link_res = requests.get(i)

link_res.encoding='gb2312'

link_content = link_res.text

link_joke1 = re.findall('【1】(.*?) </div>',link_content)

for j in range(len(link_joke1)):

link_joke1[j]=link_joke1[j].replace('“','')

link_joke1[j] = link_joke1[j].replace('”', '')

elif i == 'http://www.haha56.net/a/2016/12/14140.html':

link_res = requests.get(i)

link_res.encoding = 'gb2312'

link_content = link_res.text

link_joke2 = re.findall('''<div>\n(.*?) </div>\n<div>''', link_content)

for j in range(len(link_joke2)):

link_joke2[j]=link_joke2[j].replace('—','')

link_joke2[j] = link_joke2[j].replace('\u3000\u3000', '')

link_joke2[j] = link_joke2[j].replace('“', '')

link_joke2[j] = link_joke2[j].replace('”', '')

link_joke1.extend(link_joke2)

#词频统计

strjoke = str(link_joke1)

joke = jieba.lcut(strjoke)

wordcount = {}

for i in joke:

if i in [' ',',','。',"'",':',"?",',',"!"]:

continue

if i in wordcount :

wordcount[i] += 1

else:

wordcount[i] =1

list = list(wordcount.items())

def fun(i):

return i[1]

list.sort(key=fun,reverse=True)

for j in list[:10]:

print(f'{j[0]: >5} {j[1]:>5}')

#txt

f = open(r'C:\Users\cgc\PycharmProjects\untitled\cgc\duanzi.txt', 'w', encoding='utf8')

f.write(strjoke)

f.close()

#词云

f = open(r'C:\Users\cgc\PycharmProjects\untitled\cgc\duanzi.txt', 'r', encoding='utf8')

mask = imread(r"C:\Users\cgc\PycharmProjects\untitled\cgc\timg.png")

data = f.read()

w = wordcloud.WordCloud(font_path=r'C:\Windows\Fonts\simkai.ttf', mask=mask, width=1000, height=700,

background_color="black")

w.generate(data)

w.to_file('duanzi.png')

效果图: