[CBAM] 2018-ECCV-CBAM: Convolutional block attention module (paper reading notes)

CBAM

2018-ECCV-CBAM: Convolutional block attention module

Source: ChenBong, cnblogs (博客园)

- Institute: KAIST, Lunit, Adobe

- Author: Sanghyun Woo, Jongchan Park, Joon-Young Lee, In So Kweon

- GitHub:

- Citation: 1600+

Introduction

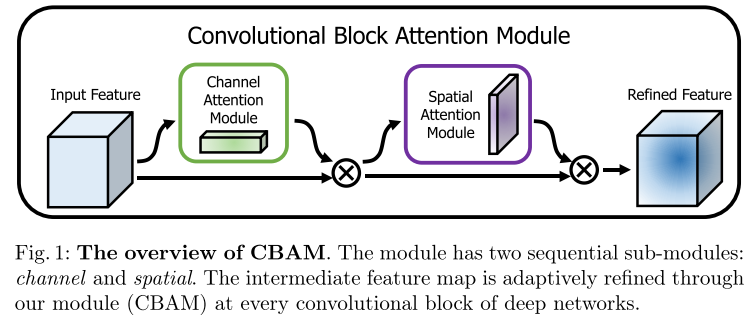

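The paper proposes an attention module that works along both the channel and the spatial dimension. It can be embedded into any CNN and significantly improves model performance at a small extra computational cost.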

Motivation

- Human vision focuses on the important parts of an image rather than on every pixel

Contribution

- A simple and efficient attention module (CBAM) that can be embedded into any CNN architecture

Method

Feature map: \(\mathbf{F} \in \mathbb{R}^{C \times H \times W}\)

1D channel attention map: \(\mathbf{M}_{\mathbf{c}} \in \mathbb{R}^{C \times 1 \times 1}\)

2D spatial attention map: \(\mathbf{M}_{\mathbf{s}} \in \mathbb{R}^{1 \times H \times W}\)

The feature map is first multiplied by the 1D channel attention map and then by the 2D spatial attention map, where \(\otimes\) is element-wise multiplication with the attention values broadcast to the shape of the feature map:

\(\mathbf{F}^{\prime}=\mathbf{M}_{\mathbf{c}}(\mathbf{F}) \otimes \mathbf{F}\)

\(\mathbf{F}^{\prime \prime}=\mathbf{M}_{\mathbf{s}}\left(\mathbf{F}^{\prime}\right) \otimes \mathbf{F}^{\prime}\)

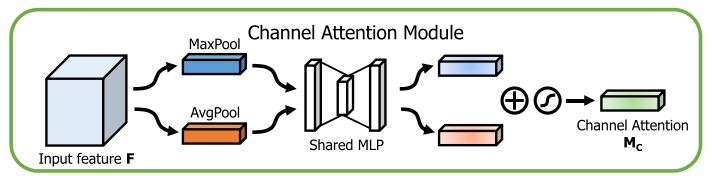
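The two equations above can be sketched with NumPy broadcasting. This is only a minimal illustration, not the authors' implementation: `apply_cbam`, `toy_channel_att` and `toy_spatial_att` are hypothetical names, and the toy attention functions are parameter-free stand-ins for the learned modules described in the following sections.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def apply_cbam(F, channel_att, spatial_att):
    """Refine F of shape (C, H, W): channel attention first, then spatial.

    channel_att: callable, (C, H, W) -> (C, 1, 1)
    spatial_att: callable, (C, H, W) -> (1, H, W)
    """
    F1 = channel_att(F) * F      # F'  = M_c(F)  (x) F,  broadcast over H and W
    F2 = spatial_att(F1) * F1    # F'' = M_s(F') (x) F', broadcast over C
    return F2

# Hypothetical stand-ins with no learned parameters (assumption, for shape checks only)
def toy_channel_att(F):
    return sigmoid(F.mean(axis=(1, 2), keepdims=True))   # (C, 1, 1)

def toy_spatial_att(F):
    return sigmoid(F.mean(axis=0, keepdims=True))         # (1, H, W)

rng = np.random.default_rng(0)
F = rng.standard_normal((8, 5, 5))
out = apply_cbam(F, toy_channel_att, toy_spatial_att)
print(out.shape)
```

Note that the spatial map is computed from \(\mathbf{F}^{\prime}\), not from \(\mathbf{F}\), so the order of the two steps matters. The output has the same shape as the input, which is why the module can be dropped into an existing network.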

Channel attention module

\(\begin{aligned} \mathbf{M}_{\mathbf{c}}(\mathbf{F}) &=\sigma(\operatorname{MLP}(\operatorname{AvgPool}(\mathbf{F}))+\operatorname{MLP}(\operatorname{MaxPool}(\mathbf{F}))) \\ &=\sigma\left(\mathbf{W}_{\mathbf{1}}\left(\mathbf{W}_{\mathbf{0}}\left(\mathbf{F}_{\mathbf{avg}}^{\mathbf{c}}\right)\right)+\mathbf{W}_{\mathbf{1}}\left(\mathbf{W}_{\mathbf{0}}\left(\mathbf{F}_{\mathbf{max}}^{\mathbf{c}}\right)\right)\right) \end{aligned}\)

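where \(\mathbf{W_0}\) and \(\mathbf{W_1}\) are the weights of the two-layer shared MLP, which is applied to both the average-pooled and the max-pooled descriptor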

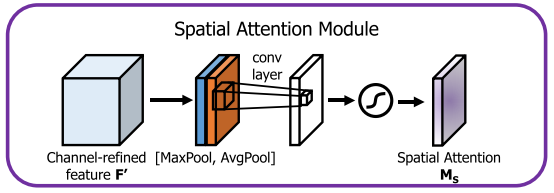
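A minimal NumPy sketch of the channel attention formula, under a few assumptions that these notes do not state: the pooling is global over the spatial dimensions, the hidden layer has size `C // r` with a reduction ratio `r`, a ReLU follows \(\mathbf{W_0}\), and there are no bias terms. The random weights are placeholders for learned parameters, and `channel_attention` is a hypothetical name.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(F, W0, W1):
    """F: (C, H, W), W0: (C // r, C), W1: (C, C // r). Returns M_c(F): (C, 1, 1)."""
    f_avg = F.mean(axis=(1, 2))   # global average pooling -> (C,)
    f_max = F.max(axis=(1, 2))    # global max pooling     -> (C,)

    def mlp(v):
        # Shared two-layer MLP; the ReLU on the hidden layer is an assumption here
        return W1 @ np.maximum(W0 @ v, 0.0)

    # The two MLP outputs are summed before the sigmoid, as in the formula
    return sigmoid(mlp(f_avg) + mlp(f_max)).reshape(-1, 1, 1)

C, H, W, r = 16, 7, 7, 4          # illustrative sizes, not taken from the paper
rng = np.random.default_rng(0)
F = rng.standard_normal((C, H, W))
W0 = 0.1 * rng.standard_normal((C // r, C))   # placeholder for learned weights
W1 = 0.1 * rng.standard_normal((C, C // r))   # placeholder for learned weights

Mc = channel_attention(F, W0, W1)
F_refined = Mc * F                # one weight per channel, broadcast over H and W
print(Mc.shape, F_refined.shape)
```

Because the same `W0` and `W1` are used for both descriptors, adding max pooling on top of average pooling adds no parameters.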

Spatial attention module

\(\begin{aligned} \mathbf{M}_{\mathbf{s}}(\mathbf{F}) &=\sigma\left(f^{7 \times 7}([\operatorname{AvgPool}(\mathbf{F}) ; \operatorname{MaxPool}(\mathbf{F})])\right) \\ &=\sigma\left(f^{7 \times 7}\left(\left[\mathbf{F}_{\mathbf{a v g}}^{\mathbf{s}} ; \mathbf{F}_{\mathbf{m a x}}^{\mathbf{s}}\right]\right)\right) \end{aligned}\)

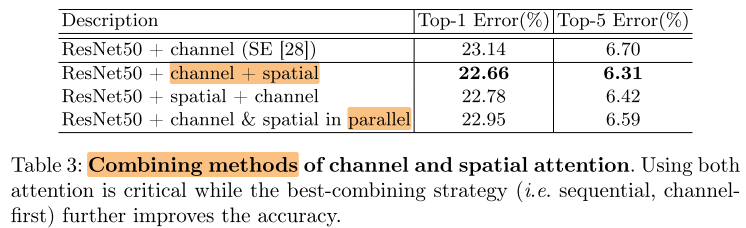
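The spatial attention formula can be sketched the same way. Assumptions not stated in these notes: both poolings run along the channel axis, the \(7 \times 7\) convolution uses zero padding so that the output keeps the \(H \times W\) size, and there is no bias. The explicit loop is written for readability rather than speed, and `spatial_attention` is a hypothetical name.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def spatial_attention(F, kernel):
    """F: (C, H, W), kernel: (2, k, k) with odd k. Returns M_s(F): (1, H, W)."""
    # Pool along the channel axis and stack the two maps -> (2, H, W)
    pooled = np.stack([F.mean(axis=0), F.max(axis=0)])
    k = kernel.shape[-1]
    p = k // 2
    # Zero-pad only the spatial dimensions so the output stays H x W
    padded = np.pad(pooled, ((0, 0), (p, p), (p, p)))
    _, H, W = pooled.shape
    out = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            # Cross-correlation of the k x k window over both pooled maps
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None, :, :]

C, H, W = 16, 9, 9                # illustrative sizes
rng = np.random.default_rng(0)
F = rng.standard_normal((C, H, W))
kernel = 0.1 * rng.standard_normal((2, 7, 7))   # placeholder for learned weights

Ms = spatial_attention(F, kernel)
F_refined = Ms * F                # one weight per location, broadcast over channels
print(Ms.shape, F_refined.shape)
```

The convolution only sees two input channels regardless of `C`, so this module stays cheap even for wide feature maps.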

Arrangement of attention modules

Three ways to combine the two modules: in parallel, channel first, or spatial first

Of these, channel first works better

Experiments

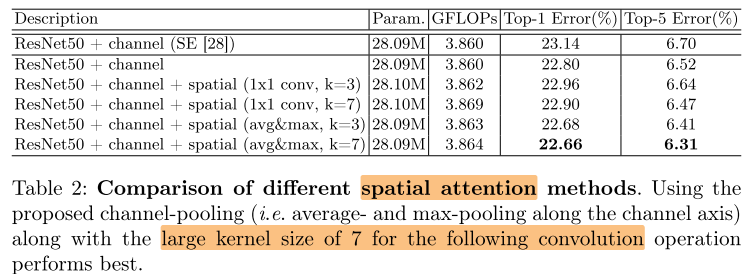

Ablation studies

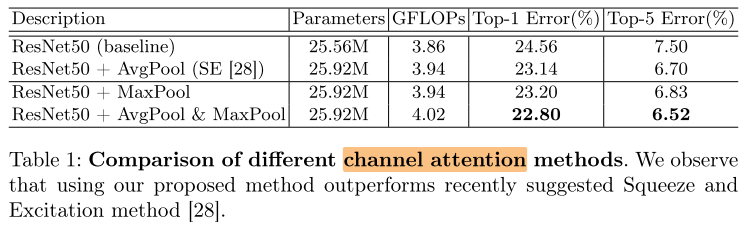

Channel attention

Spatial attention

Arrangement

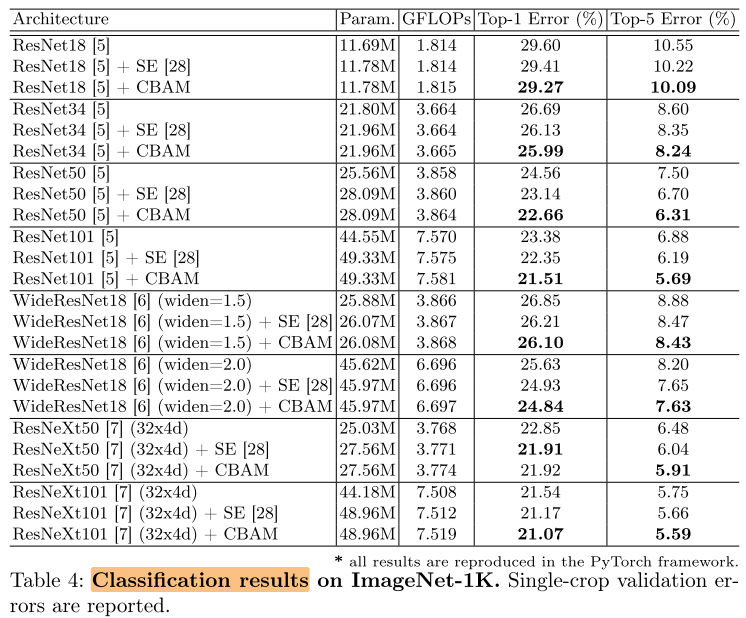

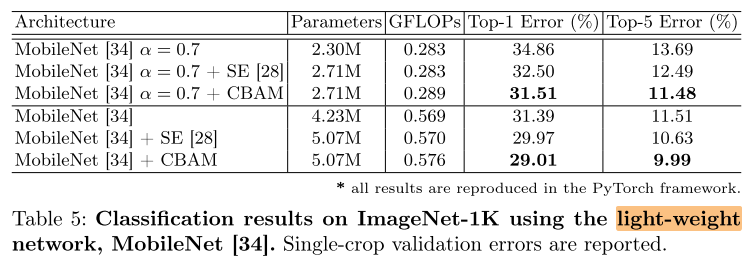

Main results

Image Classification on ImageNet

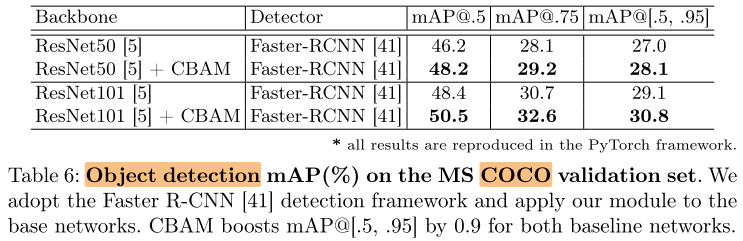

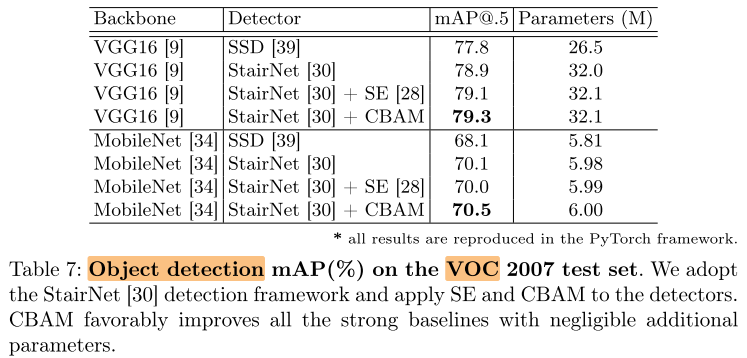

Object detection on COCO and VOC

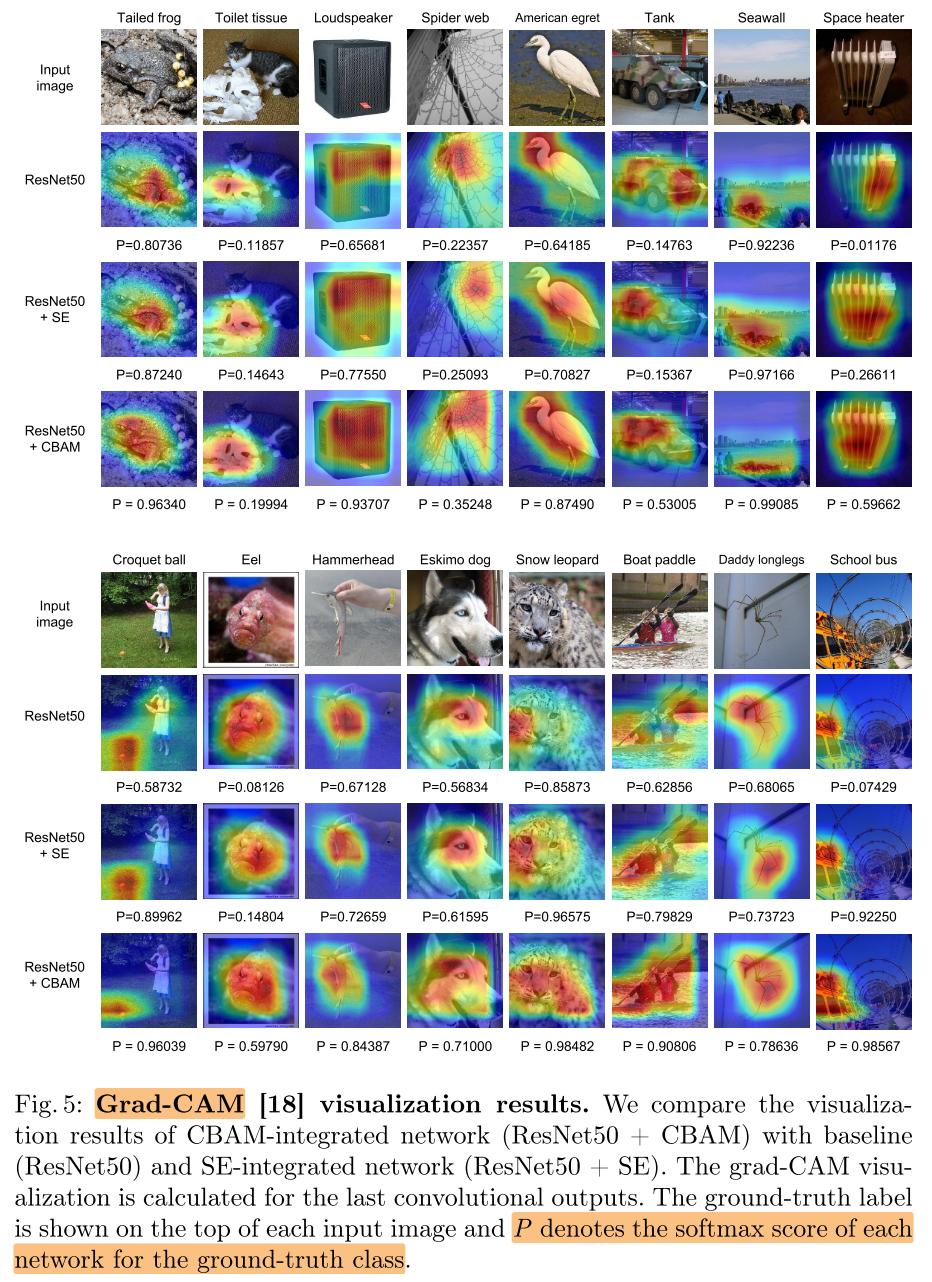

Attention Visualization (Grad-CAM)

Conclusion

Summary

pros:

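- The method is simple and uniform: (AvgPool + MaxPool) followed by an MLP or a Conv

- It works well (a gain of nearly 2 points on ResNet-50) and is a general-purpose module, independent of architecture and task

- The attention visualizations are well drawn, and the softmax scores improve noticeably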

To Read

Reference

A long read on feature visualization techniques (CAM) https://zhuanlan.zhihu.com/p/269702192

CAM and Grad-CAM https://bindog.github.io/blog/2018/02/10/model-explanation/