故障分析:网络闪断引发的ServiceStack.Redis死锁问题

背景说明

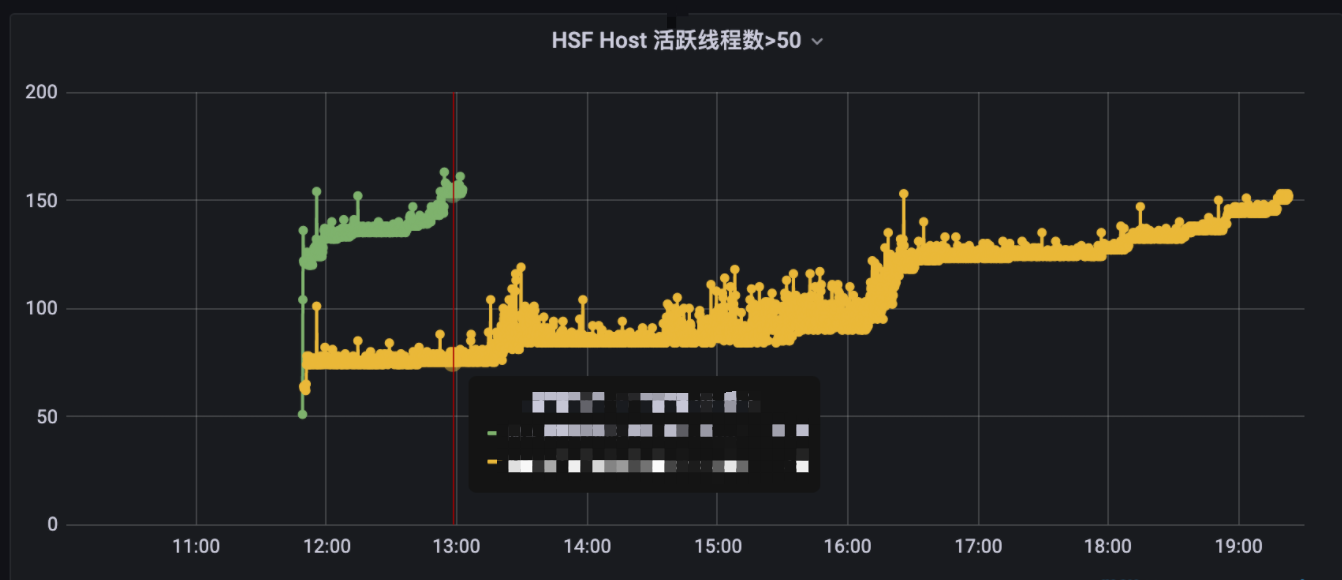

某天生产环境发生进程的活跃线程数过高的预警问题,且一天两个节点分别出现相同预警。此程序近一年没出现过此类预警,事出必有因,本文就记录下此次根因分析的过程。

监控看到的线程数变化情况:

初步的分析和发现

异常的进程在重启时分别保留了dump,这是进行下一步windbg分析的前提。

查看线程明细和异常情况:!threads

0:000> !threads

PDB symbol for clr.dll not loaded

ThreadCount: 297

UnstartedThread: 0

BackgroundThread: 228

PendingThread: 0

DeadThread: 60

Hosted Runtime: no

Lock

ID OSID ThreadOBJ State GC Mode GC Alloc Context Domain Count Apt Exception

0 1 11744 000002bb7332f750 2026020 Preemptive 000002BC775885C0:000002BC7758A518 000002bb73302f40 0 STA

10 2 e988 000002bb733dd610 2b220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Finalizer)

.....省略.....

97 428 2cf40 000002bb05df9240 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

98 410 1c3d8 000002bb05ee44d0 602b221 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA ServiceStack.Redis.RedisException 000002bbf5127940

99 420 29718 000002bb05ee0e20 202b220 Preemptive 000002BE75FE6398:000002BE75FE76F8 000002bb73302f40 0 MTA

100 442 2e76c 000002bb05edd770 2b020 Preemptive 000002BBF5967E88:000002BBF5969E20 000002bb73302f40 1 MTA

101 421 108ec 000002bb05edeee0 2b020 Preemptive 000002BB75A3A208:000002BB75A3C1A0 000002bb73302f40 1 MTA

102 403 2d260 000002bb05eeb230 2b020 Preemptive 000002BDF5580228:000002BDF55821C0 000002bb73302f40 1 MTA

103 472 f008 000002bb06c28950 2b220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA

104 395 4f64 000002bb06b45b80 202b220 Preemptive 000002BBF6213018:000002BBF62144D0 000002bb73302f40 0 MTA

105 398 21be8 000002bb06b44be0 2b020 Preemptive 000002BE758DB828:000002BE758DD7C0 000002bb73302f40 1 MTA

117 355 113d4 000002bb06302a70 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

115 392 2852c 000002bb06a167d0 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

111 399 8b4c 000002bb0633e6c0 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

106 318 3de0 000002bb06b43c40 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

110 333 20530 000002bb06300b30 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

116 329 2458 000002bb06a16fa0 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

109 343 1b5f8 000002bb05cd5910 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

112 344 26bdc 000002bb06a17770 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 3 MTA (Threadpool Worker) ServiceStack.Redis.RedisException 000002bc751983b8

107 320 1a508 000002bb0633ee90 3029220 Preemptive 0000000000000000:0000000000000000 000002bb73302f40 0 MTA (Threadpool Worker)

.....省略.....最后一列可以看到98号、112号线程又异常,分别查看:

0:000> ~98s

0:098> !pe

Exception object: 000002bbf5127940

Exception type: ServiceStack.Redis.RedisException

Message: Host:10.201.107.18,Port:26379 Exceeded timeout of 00:00:03

InnerException: System.Net.Sockets.SocketException, Use !PrintException 000002bef5278f08 to see more.

StackTrace (generated):

SP IP Function

000000D8D77FC260 00007FFB92F54063 UNKNOWN!ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)+0x2b3

000000D8D77FE510 00007FFB92F596C4 UNKNOWN!ServiceStack.Redis.RedisNativeClient.SendExpectMultiData(Byte[][])+0xd4

000000D8D77FE580 00007FFB92F595B5 UNKNOWN!ServiceStack.Redis.RedisNativeClient.SentinelGetMasterAddrByName(System.String)+0x85

000000D8D77FE5C0 00007FFB92F594F3 UNKNOWN!ServiceStack.Redis.RedisSentinelWorker.GetMasterHostInternal(System.String)+0x13

000000D8D77FE5F0 00007FFB92F5940D UNKNOWN!ServiceStack.Redis.RedisSentinelWorker.GetSentinelInfo()+0x1d

000000D8D77FE640 00007FFB92F5937B UNKNOWN!ServiceStack.Redis.RedisSentinel.GetSentinelInfo()+0x4b

000000D8D77FE6A0 00007FFB9394441A UNKNOWN!ServiceStack.Redis.RedisSentinel.ResetClients()+0x2a

000000D8D77FE740 00007FFB93944395 UNKNOWN!ServiceStack.Redis.RedisSentinelWorker.SentinelMessageReceived(System.String, System.String)+0x1d5

000000D8D77FE7A0 00007FFB93944127 UNKNOWN!ServiceStack.Redis.RedisPubSubServer+<>c__DisplayClass98_0.<RunLoop>b__0(System.String, System.String)+0x267

000000D8D77FE810 00007FFB92F5E455 UNKNOWN!ServiceStack.Redis.RedisSubscription.ParseSubscriptionResults(Byte[][])+0x2c5

000000D8D77FE8A0 00007FFB92F5D681 UNKNOWN!ServiceStack.Redis.RedisSubscription.SubscribeToChannelsMatching(System.String[])+0x51

000000D8D77FE8D0 00007FFB92F5CFC5 UNKNOWN!ServiceStack.Redis.RedisPubSubServer.RunLoop()+0x1d5

0:098> !PrintException 000002bef5278f08

Exception object: 000002bef5278f08

Exception type: System.Net.Sockets.SocketException

Message: 由于连接方在一段时间后没有正确答复或连接的主机没有反应,连接尝试失败。

InnerException: <none>

StackTrace (generated):

SP IP Function

000000D8D77FE3C0 00007FFBEE35C842 System_ni!System.Net.Sockets.NetworkStream.Read(Byte[], Int32, Int32)+0xe2

StackTraceString: <none>

HResult: 80004005

0:000> ~112s

0:112> !pe

Exception object: 000002bc751983b8

Exception type: ServiceStack.Redis.RedisException

Message: Host:10.201.107.18,Port:26379 Exceeded timeout of 00:00:03

InnerException: ServiceStack.Redis.RedisRetryableException, Use !PrintException 000002bcf51e92e0 to see more.

StackTrace (generated):

SP IP Function

000000F42BCFBBD0 00007FFB92F54063 UNKNOWN!ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)+0x2b3

000000F42BCFDE80 00007FFB92F596C4 UNKNOWN!ServiceStack.Redis.RedisNativeClient.SendExpectMultiData(Byte[][])+0xd4

000000F42BCFDEF0 00007FFB92F595B5 UNKNOWN!ServiceStack.Redis.RedisNativeClient.SentinelGetMasterAddrByName(System.String)+0x85

000000F42BCFDF30 00007FFB92F594F3 UNKNOWN!ServiceStack.Redis.RedisSentinelWorker.GetMasterHostInternal(System.String)+0x13

000000F42BCFDF60 00007FFB92F5F17B UNKNOWN!ServiceStack.Redis.RedisSentinelWorker.GetMasterHost(System.String)+0x1b

StackTraceString: <none>

HResult: 80131500

0:112> !pe 000002bcf51e92e0

Exception object: 000002bcf51e92e0

Exception type: ServiceStack.Redis.RedisRetryableException

Message: Socket is not connected

InnerException: <none>

StackTrace (generated):

SP IP Function

000000F42BCFDE20 00007FFB92F53F13 UNKNOWN!ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)+0x163

StackTraceString: <none>

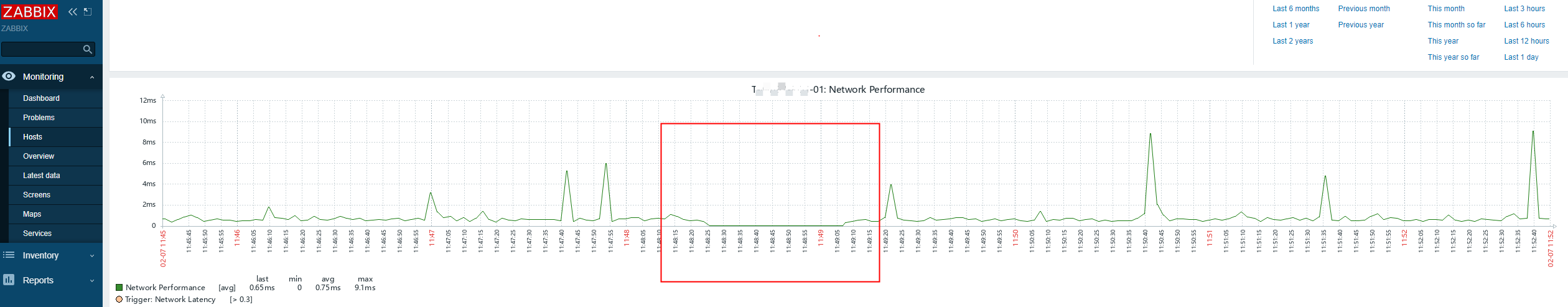

HResult: 80131500异常显示Socket连接失败,于是查看故障期间的网络情况,得到了第一个有价值的发现:网络有闪断!

进一步的分析和发现

按说网络闪断后,故障应该自动恢复,不至于造成线程数持续高位。既然有那么多活跃线程,那就看看线程都在干什么?

0:000> ~*e !clrstack

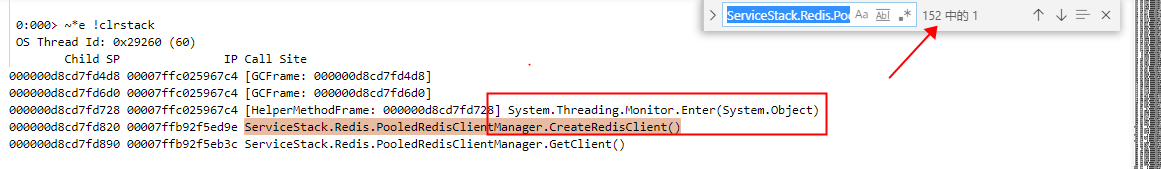

因涉及公司源码信息,不能展示全部堆栈。不过通过堆栈得到的结论是几乎全部线程都卡在获取Redis Client步骤处:ServiceStack.Redis.PooledRedisClientManager.CreateRedisClient(),且在等待加锁的操作。

既然是线程等待加锁,那么就查看dump中锁的情况:

0:036> !syncblk

Index SyncBlock MonitorHeld Recursion Owning Thread Info SyncBlock Owner

510 000002bb0513d4a8 79 1 000002bb073568c0 f9e8 177 000002be7520adb0 System.Object

530 000002bb0513a1f8 219 1 000002bb06a17770 26bdc 112 000002bd74f07d30 System.Object

567 000002bb0513e148 5 1 000002bb06ce6f40 24ce0 113 000002be74f320b8 System.Object

1343 000002bb0513c2b8 7 1 000002bb06a17770 26bdc 112 000002bd7515b158 ServiceStack.Redis.RedisSentinelWorker

-----------------------------

Total 1369

CCW 3

RCW 2

ComClassFactory 0

Free 507根据输出内容,可以得到如下结论:

- 绝大多数线程分别在等待000002be7520adb0、000002bd74f07d30对象的释放;

- 这两个锁对象当前分别被177和112号线程占用。

于是通过!clrstack查看这两个线程的堆栈:

OS Thread Id: 0x26bdc (112)

Child SP IP Call Site

000000f42bcf96a8 00007ffc025967c4 [HelperMethodFrame_1OBJ: 000000f42bcf96a8] System.Threading.Thread.AbortInternal()

000000f42bcf97a0 00007ffbf06a912a System.Threading.Thread.Abort()

000000f42bcf97e0 00007ffb92f5c8e9 ServiceStack.Redis.RedisPubSubServer.KillBgThreadIfExists()

000000f42bcf9820 00007ffb938dc511 ServiceStack.Redis.RedisPubSubServer.Dispose()

000000f42bcf9870 00007ffb92f57dcb ServiceStack.DisposableExtensions.Dispose(System.Collections.Generic.IEnumerable`1<System.IDisposable>, ServiceStack.Logging.ILog)

000000f42bcf98d0 00007ffb92f59223 ServiceStack.Redis.RedisSentinel.GetNextSentinel()

000000f42bcf9950 00007ffb93945d77 ServiceStack.Redis.RedisSentinel.OnSentinelError(System.Exception)

000000f42bcf9990 00007ffb92f5f1b8 ServiceStack.Redis.RedisSentinelWorker.GetMasterHost(System.String)

000000f42bcfbae8 00007ffbf225a626 [HelperMethodFrame: 000000f42bcfbae8]

000000f42bcfbbd0 00007ffb92f54063 ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)

000000f42bcfdd38 00007ffbf225a626 [HelperMethodFrame: 000000f42bcfdd38]

000000f42bcfde20 00007ffb92f53f13 ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)

000000f42bcfde80 00007ffb92f596c4 ServiceStack.Redis.RedisNativeClient.SendExpectMultiData(Byte[][])

000000f42bcfdef0 00007ffb92f595b5 ServiceStack.Redis.RedisNativeClient.SentinelGetMasterAddrByName(System.String)

000000f42bcfdf30 00007ffb92f594f3 ServiceStack.Redis.RedisSentinelWorker.GetMasterHostInternal(System.String)

000000f42bcfdf60 00007ffb92f5f17b ServiceStack.Redis.RedisSentinelWorker.GetMasterHost(System.String)

000000f42bcfdfa0 00007ffb92f5f067 ServiceStack.Redis.RedisSentinel.GetMaster()

000000f42bcfe020 00007ffb92f5efc6 ServiceStack.Redis.RedisSentinelResolver.GetReadWriteHost(Int32)

000000f42bcfe060 00007ffb92f5ef70 ServiceStack.Redis.RedisSentinelResolver.CreateMasterClient(Int32)

000000f42bcfe090 00007ffb92f5ee0d ServiceStack.Redis.PooledRedisClientManager.CreateRedisClient()

000000f42bcfe100 00007ffb92f5eb3c ServiceStack.Redis.PooledRedisClientManager.GetClient()

....省略....

OS Thread Id: 0xf9e8 (177)

Child SP IP Call Site

0000014977dfccf8 00007ffc025967c4 [GCFrame: 0000014977dfccf8]

0000014977dfcef0 00007ffc025967c4 [GCFrame: 0000014977dfcef0]

0000014977dfcf48 00007ffc025967c4 [HelperMethodFrame: 0000014977dfcf48] System.Threading.Monitor.Enter(System.Object)

0000014977dfd040 00007ffb92f5f059 ServiceStack.Redis.RedisSentinel.GetMaster()

0000014977dfd0c0 00007ffb92f5efc6 ServiceStack.Redis.RedisSentinelResolver.GetReadWriteHost(Int32)

0000014977dfd100 00007ffb92f5ef70 ServiceStack.Redis.RedisSentinelResolver.CreateMasterClient(Int32)

0000014977dfd130 00007ffb92f5ee0d ServiceStack.Redis.PooledRedisClientManager.CreateRedisClient()

0000014977dfd1a0 00007ffb92f5eb3c ServiceStack.Redis.PooledRedisClientManager.GetClient()

....省略....又能得到以下结论:

- 112号线程在执行线程中止操作。

- 177线程跟之前看到的绝大多数线程操作一样,是在等待锁释放。

看到这里就有了点奇怪的味道:别忘了177号本身就是持有了锁对象000002be7520adb0,且有39个线程等着用呢。极有可能会发生死锁了。于是自然就要查看177号等待的锁对象是什么。方法如下:

0:098> ~177s

ntdll!NtWaitForMultipleObjects+0x14:

00007ffc`025967c4 c3 ret

0:177> !clrstack -l

OS Thread Id: 0xf9e8 (177)

Child SP IP Call Site

0000014977dfccf8 00007ffc025967c4 [GCFrame: 0000014977dfccf8]

0000014977dfcef0 00007ffc025967c4 [GCFrame: 0000014977dfcef0]

0000014977dfcf48 00007ffc025967c4 [HelperMethodFrame: 0000014977dfcf48] System.Threading.Monitor.Enter(System.Object)

0000014977dfd040 00007ffb92f5f059 ServiceStack.Redis.RedisSentinel.GetMaster()

LOCALS:

<CLR reg> = 0x000002bd7515b158

0x0000014977dfd080 = 0x000002bd7515b158

<no data>

<no data>

<no data>

0x0000014977dfd078 = 0x0000000000000000

....省略....上面输出的是堆栈以及局部变量信息。既然要给目标对象加锁,那么目标对象自然是要存到局部变量中的。 于是可以判断000002bd7515b158就是锁对象的地址,且根据前面syncblk的输出,可以确认此锁对象当前被112线程持有。而112线程的堆栈表明它并没有在等待加锁,所以从现有信息判断没有构成死锁。

确定是发生了死锁

至此,根据已知的所有信息,可以归纳出:因为112线程持有的2个锁对象,绝大多数线程都被阻塞。此时就要重点分析112号线程的情况了。前面的分析过程表明,112号线程在执行线程中止操作,即Abort()。于是查看此被中止的线程有什么线索没有:

0:112> !clrstack -a

OS Thread Id: 0x26bdc (112)

Child SP IP Call Site

000000f42bcf96a8 00007ffc025967c4 [HelperMethodFrame_1OBJ: 000000f42bcf96a8] System.Threading.Thread.AbortInternal()

000000f42bcf97a0 00007ffbf06a912a System.Threading.Thread.Abort()

PARAMETERS:

this = <no data>

000000f42bcf97e0 00007ffb92f5c8e9 ServiceStack.Redis.RedisPubSubServer.KillBgThreadIfExists()

PARAMETERS:

this (<CLR reg>) = 0x000002be7520a900

000000f42bcf9820 00007ffb938dc511 ServiceStack.Redis.RedisPubSubServer.Dispose()

PARAMETERS:

this (0x000000f42bcf9870) = 0x000002be7520a900

LOCALS:

<no data>

<no data>

....省略....

0:177> !do2 000002be7520a900

0x000002be7520a900 ServiceStack.Redis.RedisPubSubServer

[statics]

0000 startedAt : 000002be7511e4e8 (System.Diagnostics.Stopwatch)

0008 heartbeatTimer : NULL

0010 <OnInit>k__BackingField : NULL

0018 <OnStart>k__BackingField : NULL

0020 <OnHeartbeatSent>k__BackingField : NULL

0028 <OnHeartbeatReceived>k__BackingField : NULL

0030 <OnStop>k__BackingField : NULL

0038 <OnDispose>k__BackingField : NULL

0040 <OnMessage>k__BackingField : 000002be7520abe0 (System.Action<System.String,System.String>)

0048 <OnControlCommand>k__BackingField : NULL

0050 <OnUnSubscribe>k__BackingField : NULL

0058 <OnError>k__BackingField : NULL

0060 <OnFailover>k__BackingField : NULL

0068 rand : 000002be7520aa30 (System.Random)

0070 lastExMsg : NULL

0078 bgThread : 000002be7511e550 (System.Threading.Thread)

0080 <ClientsManager>k__BackingField : 000002be7520a7d0 (ServiceStack.Redis.BasicRedisClientManager)

0088 <Channels>k__BackingField : 000002be7520aa18 (System.String[]) [Length: 0]

0090 <ChannelsMatching>k__BackingField : 000002be7520abc0 (System.String[]) [Length: 1]

0098 masterClient : 000002bef51d8288 (ServiceStack.Redis.RedisClient)

00a0 lastHeartbeatTicks : 637793659924009545 (System.Int64)

00a8 timesStarted : 1 (System.Int64)

00b0 noOfErrors : 0 (System.Int64)

00b8 bgThreadCount : 1 (System.Int64)

00c0 doOperation : 1 (System.Int32)

00c4 noOfContinuousErrors : 0 (System.Int32)

00c8 status : -1 (System.Int32)

00cc autoRestart : 0 (System.Int32)

00d0 <IsSentinelSubscription>k__BackingField : True (System.Boolean)

00d8 serverTimeAtStart : 000002be7520a9e0 2022/2/2 2:33:12 (System.DateTime)

00e0 HeartbeatInterval : 000002be7520a9e8 (System.Nullable<System.TimeSpan>)

00f0 HeartbeatTimeout : 000002be7520a9f8 00:00:30 (System.TimeSpan)

00f8 <WaitBeforeNextRestart>k__BackingField : 000002be7520aa00 (System.Nullable<System.TimeSpan>)

0:177> !do2 000002be7511e550

0x000002be7511e550 System.Threading.Thread

[statics]

0000 m_Context : 000002bdf4f025d8 (System.Runtime.Remoting.Contexts.Context)

0008 m_ExecutionContext : 000002be7511e7b0 (System.Threading.ExecutionContext)

0010 m_Name : 000002be7511e618 "RedisPubSubServer 1" [19] (System.String)

0018 m_Delegate : NULL

0020 m_CurrentCulture : NULL

0028 m_CurrentUICulture : NULL

0030 m_ThreadStartArg : NULL

0038 DONT_USE_InternalThread : 000002bb05ee44d0 (System.IntPtr)

0040 m_Priority : 2 (System.Int32)

0044 m_ManagedThreadId : 410 (System.Int32)

0048 m_ExecutionContextBelongsToOuterScope : False (System.Boolean)

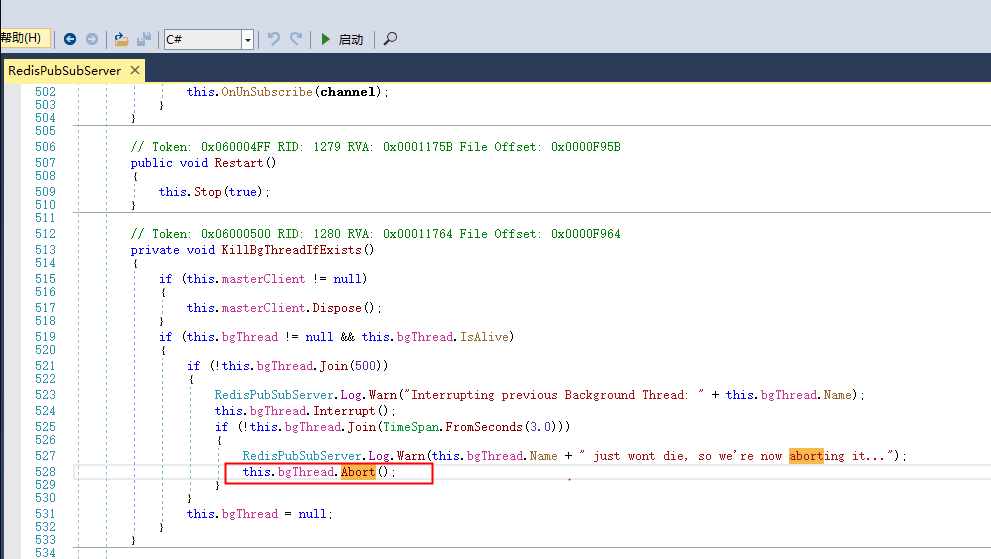

结合上述dump信息和反编译的程序源码,他们之间的联系是:112线程执行了RedisPubSubServer.KillBgThreadIfExists()方法,方法内执行了this.bgThread.Abort()。

查看dump信息得到RedisPubSubServer对象为000002be7520a900,bgThread对象为000002be7511e550,bgThread的托管线程号是410,根据!threads内容查找对应关系即98号线程。

于是得到的结论:112要中止的是98号线程。看到这里,又出现了一点怪味道:因为前面的分析结论是绝大多数线程都被112线程的锁对象阻塞了。那么98号呢?赶紧看看98号的堆栈:

0:098> !clrstack -l

OS Thread Id: 0x1c3d8 (98)

Child SP IP Call Site

000000d8d77f87a8 00007ffc025967c4 [GCFrame: 000000d8d77f87a8]

000000d8d77f89a0 00007ffc025967c4 [GCFrame: 000000d8d77f89a0]

000000d8d77f89f8 00007ffc025967c4 [HelperMethodFrame: 000000d8d77f89f8] System.Threading.Monitor.Enter(System.Object)

000000d8d77f8af0 00007ffb92f59371 ServiceStack.Redis.RedisSentinel.GetSentinelInfo()

LOCALS:

<CLR reg> = 0x000002bd7515b158

0x000000d8d77f8b28 = 0x000002bd7515b158

<no data>

0x000000d8d77f8b20 = 0x0000000000000000

000000d8d77f8b50 00007ffb9394441a ServiceStack.Redis.RedisSentinel.ResetClients()

LOCALS:

<no data>

<no data>

<no data>

....省略....果然,98号也是在等锁对象000002bd7515b158的释放,而这个对象是被112占用的!

重述一下前面分析得到的现状:

- 112正在中止98号线程

- 98号线程在等112的锁对象释放

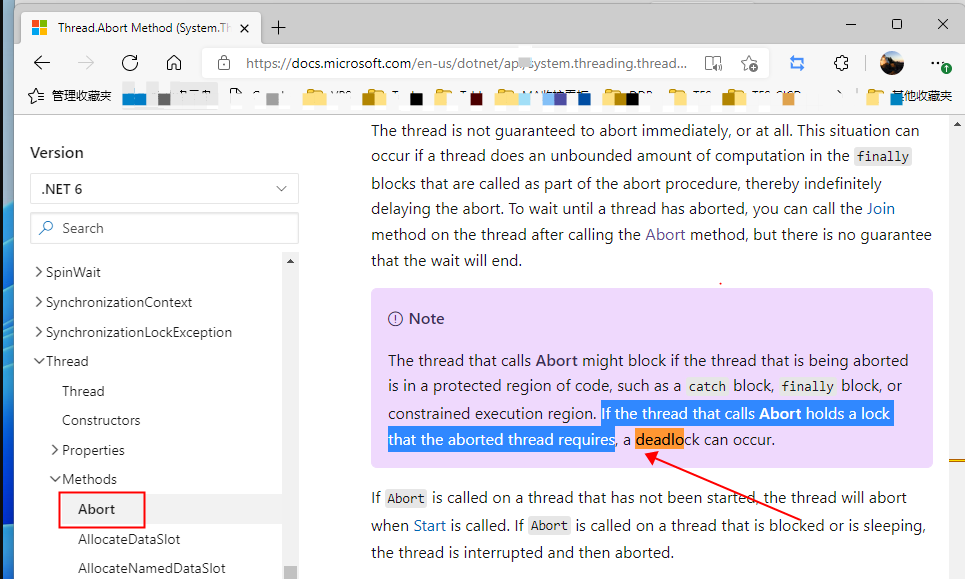

这个味道一看就像是死锁,但是之前确实没有验证过这种情况。那么自然Google一下,于是....真相大白:

同时还查询了死锁的112线程的执行时间,方法是通过!clrstack -a查看堆栈的入参和局部变量,结合业务系统的日志,验证了死锁发生的时间正是网络闪断的时间点。

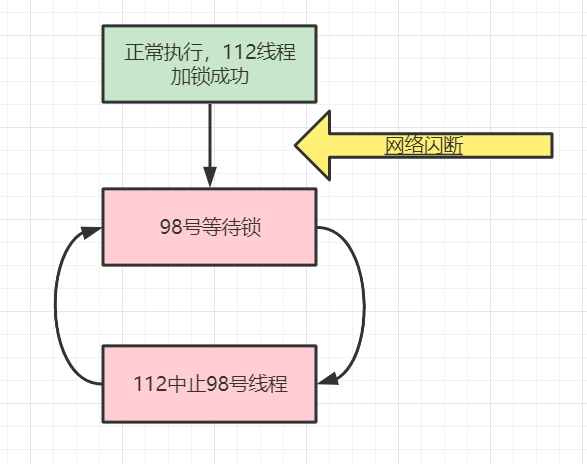

死锁的产生原因

既然确定是发生了死锁,下面就要分析死锁产生的逻辑关系了。

结合堆栈与ServiceStack.Redis源码,98号线程的执行逻辑是:

- 进程运行,启动了RedisPubSubServer.RunLoop();

- 网络闪断,需要复位客户端连接:RedisSentinel.ResetClients();

- RedisSentinel.GetSentinelInfo()内部又需要加锁等待;

OS Thread Id: 0x1c3d8 (98)

Child SP IP Call Site

000000d8d77f87a8 00007ffc025967c4 [GCFrame: 000000d8d77f87a8]

000000d8d77f89a0 00007ffc025967c4 [GCFrame: 000000d8d77f89a0]

000000d8d77f89f8 00007ffc025967c4 [HelperMethodFrame: 000000d8d77f89f8] System.Threading.Monitor.Enter(System.Object)

000000d8d77f8af0 00007ffb92f59371 ServiceStack.Redis.RedisSentinel.GetSentinelInfo()

000000d8d77f8b50 00007ffb9394441a ServiceStack.Redis.RedisSentinel.ResetClients()

000000d8d77f8bf0 00007ffb93944395 ServiceStack.Redis.RedisSentinelWorker.SentinelMessageReceived(System.String, System.String)

000000d8d77f8c50 00007ffb93944127 ServiceStack.Redis.RedisPubSubServer+<>c__DisplayClass98_0.<RunLoop>b__0(System.String, System.String)

000000d8d77f8cc0 00007ffb92f5e455 ServiceStack.Redis.RedisSubscription.ParseSubscriptionResults(Byte[][])

000000d8d77f8d50 00007ffb938dcd72 ServiceStack.Redis.RedisSubscription.UnSubscribeFromAllChannelsMatchingAnyPatterns()

000000d8d77f8d90 00007ffb92f5d0f9 ServiceStack.Redis.RedisPubSubServer.RunLoop()

000000d8d77fc178 00007ffbf225a626 [HelperMethodFrame: 000000d8d77fc178]

000000d8d77fc260 00007ffb92f54063 ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)

000000d8d77fe3c8 00007ffbf225a626 [HelperMethodFrame: 000000d8d77fe3c8]

000000d8d77fe4b0 00007ffb92f53f13 ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)

000000d8d77fe510 00007ffb92f596c4 ServiceStack.Redis.RedisNativeClient.SendExpectMultiData(Byte[][])

000000d8d77fe580 00007ffb92f595b5 ServiceStack.Redis.RedisNativeClient.SentinelGetMasterAddrByName(System.String)

000000d8d77fe5c0 00007ffb92f594f3 ServiceStack.Redis.RedisSentinelWorker.GetMasterHostInternal(System.String)

000000d8d77fe5f0 00007ffb92f5940d ServiceStack.Redis.RedisSentinelWorker.GetSentinelInfo()

000000d8d77fe640 00007ffb92f5937b ServiceStack.Redis.RedisSentinel.GetSentinelInfo()

000000d8d77fe6a0 00007ffb9394441a ServiceStack.Redis.RedisSentinel.ResetClients()

000000d8d77fe740 00007ffb93944395 ServiceStack.Redis.RedisSentinelWorker.SentinelMessageReceived(System.String, System.String)

000000d8d77fe7a0 00007ffb93944127 ServiceStack.Redis.RedisPubSubServer+<>c__DisplayClass98_0.<RunLoop>b__0(System.String, System.String)

000000d8d77fe810 00007ffb92f5e455 ServiceStack.Redis.RedisSubscription.ParseSubscriptionResults(Byte[][])

000000d8d77fe8a0 00007ffb92f5d681 ServiceStack.Redis.RedisSubscription.SubscribeToChannelsMatching(System.String[])

000000d8d77fe8d0 00007ffb92f5cfc5 ServiceStack.Redis.RedisPubSubServer.RunLoop()

000000d8d77fe940 00007ffbeff6df12 System.Threading.ExecutionContext.RunInternal(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object, Boolean)

000000d8d77fea10 00007ffbeff6dd95 System.Threading.ExecutionContext.Run(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object, Boolean)

000000d8d77fea40 00007ffbeff6dd65 System.Threading.ExecutionContext.Run(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object)

000000d8d77fea90 00007ffbf0013e85 System.Threading.ThreadHelper.ThreadStart()

000000d8d77fece0 00007ffbf2216913 [GCFrame: 000000d8d77fece0]

000000d8d77ff040 00007ffbf2216913 [DebuggerU2MCatchHandlerFrame: 000000d8d77ff040]112号线程的执行逻辑是:

- 业务调用获取redis 客户端对象实例,调用到PooledRedisClientManager.GetClient()

- 网络闪断,引发异常,触发OnSentinelError事件

- OnSentinelError事件处理程序中执行Dispose()操作

- Dispose()操作包含了对现有线程bgThread的中止操作

OS Thread Id: 0x26bdc (112)

Child SP IP Call Site

000000f42bcf96a8 00007ffc025967c4 [HelperMethodFrame_1OBJ: 000000f42bcf96a8] System.Threading.Thread.AbortInternal()

000000f42bcf97a0 00007ffbf06a912a System.Threading.Thread.Abort()

000000f42bcf97e0 00007ffb92f5c8e9 ServiceStack.Redis.RedisPubSubServer.KillBgThreadIfExists()

000000f42bcf9820 00007ffb938dc511 ServiceStack.Redis.RedisPubSubServer.Dispose()

000000f42bcf9870 00007ffb92f57dcb ServiceStack.DisposableExtensions.Dispose(System.Collections.Generic.IEnumerable`1<System.IDisposable>, ServiceStack.Logging.ILog)

000000f42bcf98d0 00007ffb92f59223 ServiceStack.Redis.RedisSentinel.GetNextSentinel()

000000f42bcf9950 00007ffb93945d77 ServiceStack.Redis.RedisSentinel.OnSentinelError(System.Exception)

000000f42bcf9990 00007ffb92f5f1b8 ServiceStack.Redis.RedisSentinelWorker.GetMasterHost(System.String)

000000f42bcfbae8 00007ffbf225a626 [HelperMethodFrame: 000000f42bcfbae8]

000000f42bcfbbd0 00007ffb92f54063 ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)

000000f42bcfdd38 00007ffbf225a626 [HelperMethodFrame: 000000f42bcfdd38]

000000f42bcfde20 00007ffb92f53f13 ServiceStack.Redis.RedisNativeClient.SendReceive[[System.__Canon, mscorlib]](Byte[][], System.Func`1<System.__Canon>, System.Action`1<System.Func`1<System.__Canon>>, Boolean)

000000f42bcfde80 00007ffb92f596c4 ServiceStack.Redis.RedisNativeClient.SendExpectMultiData(Byte[][])

000000f42bcfdef0 00007ffb92f595b5 ServiceStack.Redis.RedisNativeClient.SentinelGetMasterAddrByName(System.String)

000000f42bcfdf30 00007ffb92f594f3 ServiceStack.Redis.RedisSentinelWorker.GetMasterHostInternal(System.String)

000000f42bcfdf60 00007ffb92f5f17b ServiceStack.Redis.RedisSentinelWorker.GetMasterHost(System.String)

000000f42bcfdfa0 00007ffb92f5f067 ServiceStack.Redis.RedisSentinel.GetMaster()

000000f42bcfe020 00007ffb92f5efc6 ServiceStack.Redis.RedisSentinelResolver.GetReadWriteHost(Int32)

000000f42bcfe060 00007ffb92f5ef70 ServiceStack.Redis.RedisSentinelResolver.CreateMasterClient(Int32)

000000f42bcfe090 00007ffb92f5ee0d ServiceStack.Redis.PooledRedisClientManager.CreateRedisClient()

000000f42bcfe100 00007ffb92f5eb3c ServiceStack.Redis.PooledRedisClientManager.GetClient()

....省略....上述过程简化后的逻辑示意图:

确定死锁原因后,简单搜索可找到官方论坛也有一个相同的反馈:https://forums.servicestack.net/t/application-appears-to-be-deadlocked-after-sentinel-failover/5782,并且官方做了相应的修复。

总结

根据文章的分析思路和步骤看起来比较顺畅,这是因为我已经按照关联关系重新梳理并整合了,实际上整个过程确实花费了一些时间。

但是得益于对windbg的理解和熟悉,以及对ServiceStack.Redis的阅读把握,最终将此故障的根因确定下来。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 周边上新:园子的第一款马克杯温暖上架

· Open-Sora 2.0 重磅开源!

· 分享 3 个 .NET 开源的文件压缩处理库,助力快速实现文件压缩解压功能!

· Ollama——大语言模型本地部署的极速利器

· DeepSeek如何颠覆传统软件测试?测试工程师会被淘汰吗?