16 HBase Case Study

Basic Operations

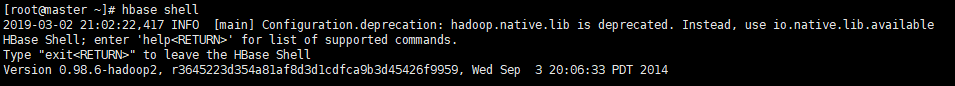

- Enter the interactive shell

hbase shell

- Create a table with two column families

create 'm_table','meta_data','action'

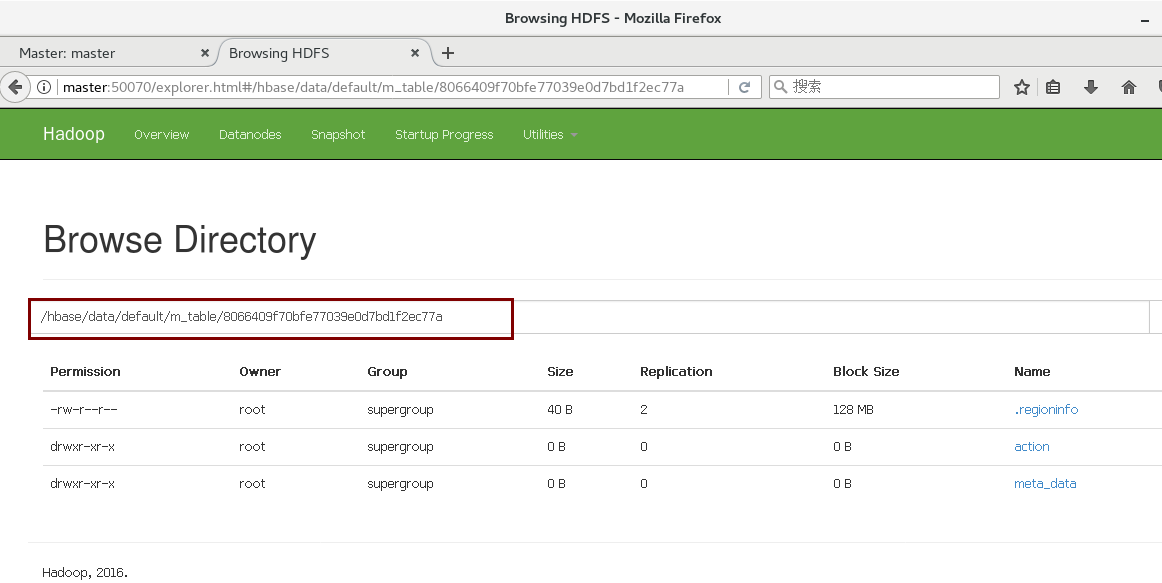

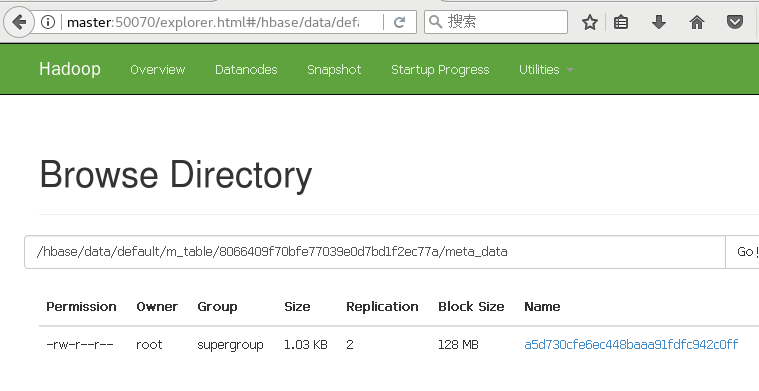

- View the table's files in the HDFS web UI (port 50070)

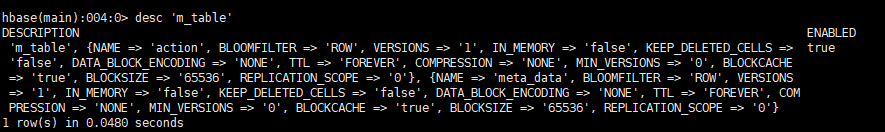

- Show the table structure

desc 'm_table'

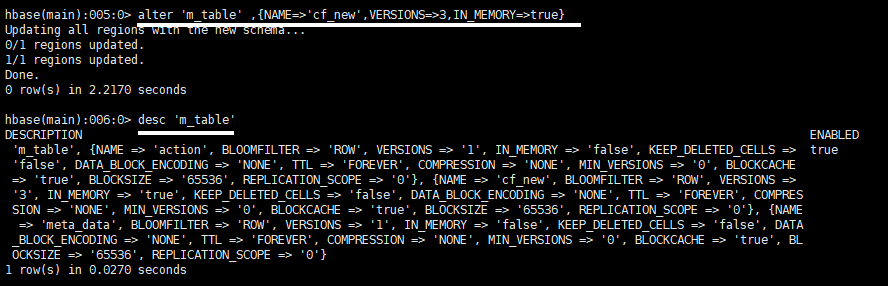

- Add another column family

alter 'm_table',{NAME=>'cf_new',VERSIONS=>3,IN_MEMORY=>true}

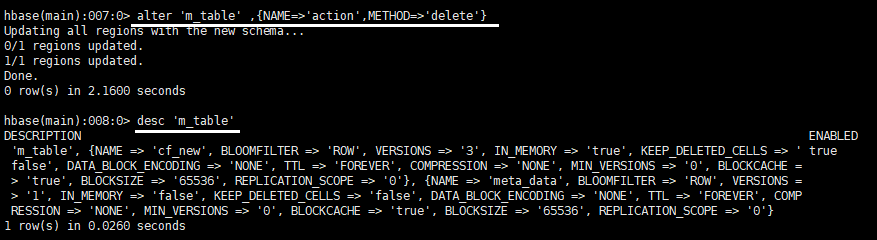

- Delete a column family

alter 'm_table',{NAME=>'action',METHOD=>'delete'}

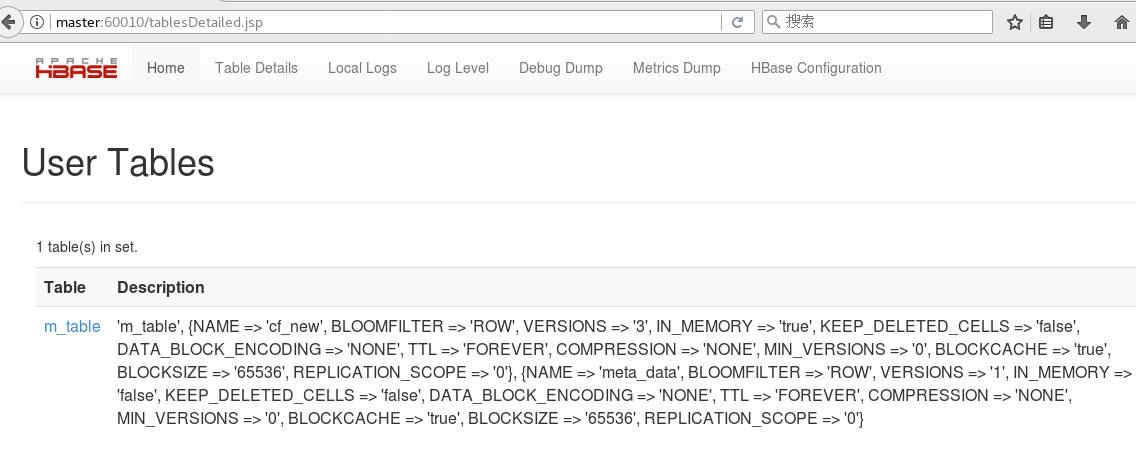

- Check the table in the HBase Master web UI (port 60010)

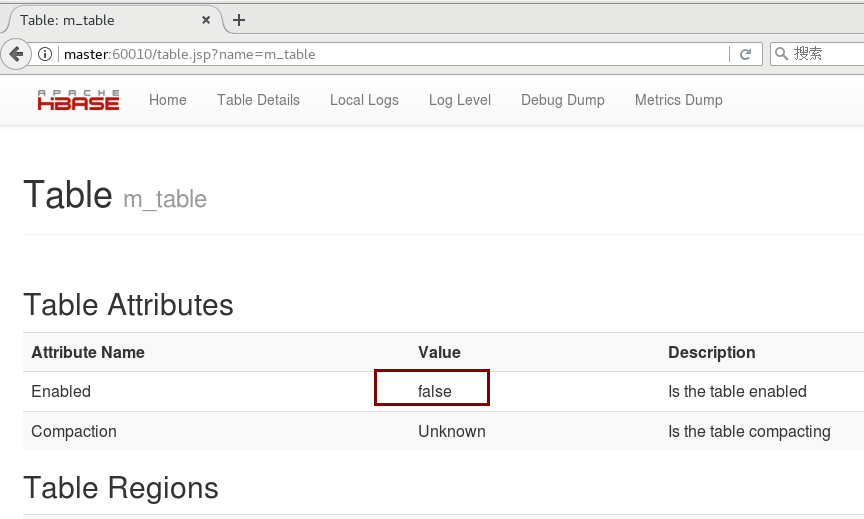

- A table must be disabled (Enabled set to false) before it can be dropped

disable 'm_table'

- Drop the table

drop 'm_table'

- Write data (re-create m_table with the create command above if it was dropped)

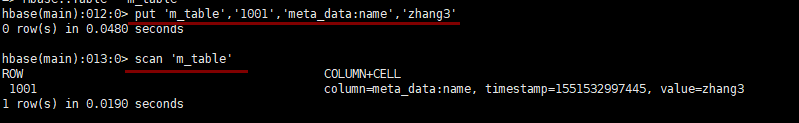

put 'm_table','1001','meta_data:name','zhang3'

- Scan the table

scan 'm_table'

- Write more data

put 'm_table','1001','meta_data:age','18'

- No new data file shows up in HDFS yet, because recent writes are still held in memory (the MemStore)

- Flush the in-memory data to HDFS

flush 'm_table'

- Insert another row of data

put 'm_table','1002','meta_data:name','li4'

put 'm_table','1002','meta_data:age','22'

put 'm_table','1002','meta_data:gender','man'

-

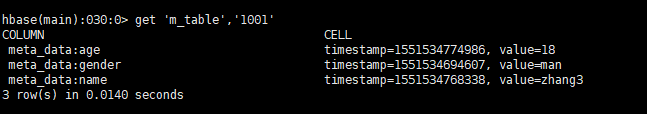

读一条数据

get 'm_table','1001'

- Versions: keep up to 3 versions of each cell in meta_data

alter 'm_table',{NAME=>'meta_data',VERSIONS=>3}

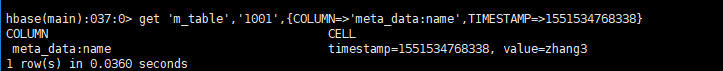

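put 'm_table','1001','meta_data:name','wangwu'

- Fetch the value from before the update by its timestamp (the timestamp below comes from the earlier output; use your own)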

get 'm_table','1001',{COLUMN=>'meta_data:name',TIMESTAMP=>1551534768338}

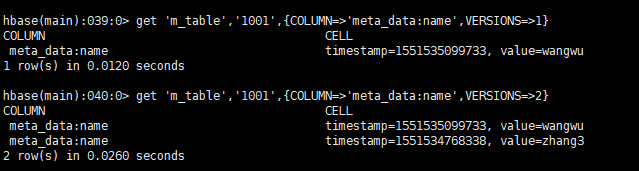

- Look up timestamps by version count: VERSIONS=>1 returns only the newest version, together with its timestamp

get 'm_table','1001',{COLUMN=>'meta_data:name',VERSIONS=>1}

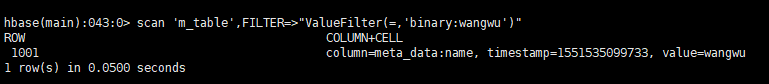
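A minimal sketch of what VERSIONS=>3, TIMESTAMP and VERSIONS=>1 do, modeled in plain Python. VersionedCell is a hypothetical class for illustration, not an HBase API. Each put stores a new timestamped version, reads return the newest versions first, and only the newest max_versions are kept. In HBase itself, versions beyond the limit are hidden from reads right away and physically removed later during compaction; the sketch simply deletes them.

```python
# Toy model of one HBase cell; VersionedCell is hypothetical, not an HBase class.
class VersionedCell:
    def __init__(self, max_versions):
        self.max_versions = max_versions  # like VERSIONS=>3 on the column family
        self.versions = {}                # timestamp -> value

    def put(self, ts, value):
        self.versions[ts] = value
        # Drop everything older than the newest max_versions timestamps
        for old_ts in sorted(self.versions, reverse=True)[self.max_versions:]:
            del self.versions[old_ts]

    def get(self, versions=1, timestamp=None):
        """Return [(ts, value), ...], newest first."""
        if timestamp is not None:
            # TIMESTAMP=>ts: only the version written at exactly that timestamp
            if timestamp in self.versions:
                return [(timestamp, self.versions[timestamp])]
            return []
        newest = sorted(self.versions, reverse=True)[:versions]
        return [(ts, self.versions[ts]) for ts in newest]


cell = VersionedCell(max_versions=3)
cell.put(100, "zhang3")
cell.put(200, "wangwu")
print(cell.get())               # [(200, 'wangwu')]
print(cell.get(timestamp=100))  # [(100, 'zhang3')]
print(cell.get(versions=3))     # [(200, 'wangwu'), (100, 'zhang3')]
```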

- Look up records by value (when the rowkey is unknown)

scan 'm_table',FILTER=>"ValueFilter(=,'binary:wangwu')"

- Fuzzy lookup by value (substring match)

scan 'm_table',FILTER=>"ValueFilter(=,'substring:ang')"

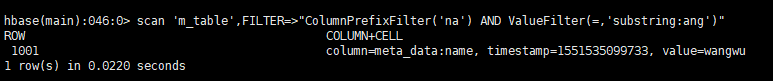

- Multiple conditions (column name starts with na and the value contains ang)

scan 'm_table',FILTER=>"ColumnPrefixFilter('na') AND ValueFilter(=,'substring:ang')"

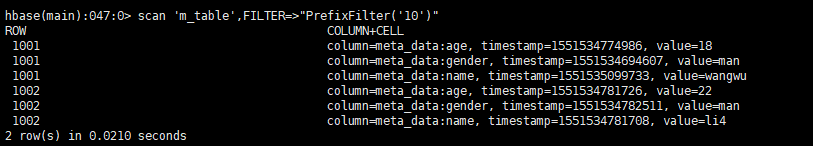
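ValueFilter and ColumnPrefixFilter work cell by cell: a scan returns only the cells that pass, and a row with no passing cell disappears from the output. The sketch below models that on a plain dict holding sample data that mirrors the puts above (latest versions only). keep_cells, value_filter and column_prefix_filter are hypothetical helpers, not HBase APIs. It also reflects that SubstringComparator compares case-insensitively, whereas binary requires an exact match.

```python
# Toy model of the shell filters above; a table is {rowkey: {"family:qualifier": value}}.
# keep_cells, value_filter and column_prefix_filter are hypothetical, not HBase APIs.


def keep_cells(table, predicate):
    """Keep the cells for which predicate(column, value) is true; drop rows left empty."""
    result = {}
    for rowkey, cells in table.items():
        kept = {col: val for col, val in cells.items() if predicate(col, val)}
        if kept:
            result[rowkey] = kept
    return result


def value_filter(table, comparator):
    """ValueFilter(=, 'binary:x') or ValueFilter(=, 'substring:x')."""
    kind, operand = comparator.split(":", 1)
    if kind == "binary":
        def match(value):
            return value == operand  # exact match on the whole value
    elif kind == "substring":
        def match(value):
            return operand.lower() in value.lower()  # SubstringComparator ignores case
    else:
        raise ValueError("unsupported comparator: " + kind)
    return keep_cells(table, lambda col, val: match(val))


def column_prefix_filter(table, prefix):
    """ColumnPrefixFilter('x'): the qualifier (after 'family:') must start with x."""
    return keep_cells(table, lambda col, val: col.split(":", 1)[1].startswith(prefix))


# Sample data mirroring the puts above (latest versions only)
m_table = {
    "1001": {"meta_data:name": "wangwu", "meta_data:age": "18"},
    "1002": {"meta_data:name": "li4", "meta_data:age": "22", "meta_data:gender": "man"},
}

print(value_filter(m_table, "binary:wangwu"))  # {'1001': {'meta_data:name': 'wangwu'}}
# "A AND B": a cell survives only if it passes both filters
both = value_filter(column_prefix_filter(m_table, "na"), "substring:ang")
print(both)  # {'1001': {'meta_data:name': 'wangwu'}}
```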

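- Query by rowkey prefix (PrefixFilter matches the beginning of the rowkey, so with the rowkeys 1001 and 1002 used here, the prefix 'na' below returns no rows)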

scan 'm_table',FILTER=>"PrefixFilter('na')"

- Range scan (STARTROW is inclusive)

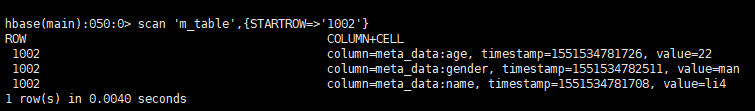

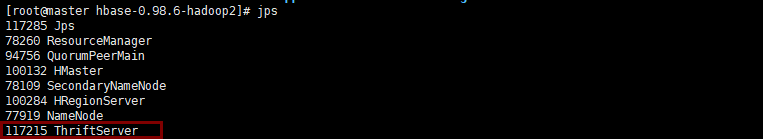

scan 'm_table',{STARTROW=>'1002'}

- Range plus filter

scan 'm_table',{STARTROW=>'1002',FILTER=>"ColumnPrefixFilter('na')"}
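Rowkeys are compared as raw bytes in lexicographic order, so a range scan does not follow numeric order. The sketch below models STARTROW (inclusive), STOPROW (exclusive) and PrefixFilter on a sorted list of byte strings. scan_range is a hypothetical helper, and the rowkeys 101, 1010 and 2 are made-up examples added to show the ordering pitfall.

```python
# Toy model of rowkey range scans; scan_range is a hypothetical helper, not an HBase API.


def scan_range(rowkeys, start_row=b"", stop_row=None, prefix=None):
    """Return rowkeys in scan order: start_row inclusive, stop_row exclusive."""
    result = []
    for key in sorted(rowkeys):  # bytes sort lexicographically, as HBase rowkeys do
        if key < start_row:
            continue
        if stop_row is not None and key >= stop_row:
            break  # everything after the stop row is skipped
        if prefix is not None and not key.startswith(prefix):
            continue
        result.append(key)
    return result


# 1001 and 1002 come from the puts above; the other keys are made-up examples
keys = [b"1001", b"1002", b"101", b"2", b"1010"]
print(scan_range(keys, start_row=b"1002"))                 # [b'1002', b'101', b'1010', b'2']
print(scan_range(keys, start_row=b"1002", stop_row=b"2"))  # [b'1002', b'101', b'1010']
print(scan_range(keys, prefix=b"na"))                      # []
```

Because a PrefixFilter on its own still starts reading at the first row of the table, it is commonly paired with a STARTROW equal to the prefix.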

- Regex filtering on the rowkey

put 'm_table','user|4001','meta_data:name','zhao6'

import org.apache.hadoop.hbase.filter.RegexStringComparator
import org.apache.hadoop.hbase.filter.CompareFilter
import org.apache.hadoop.hbase.filter.SubstringComparator
import org.apache.hadoop.hbase.filter.RowFilter

scan 'm_table',{FILTER=>RowFilter.new(CompareFilter::CompareOp.valueOf('EQUAL'),RegexStringComparator.new('^user\|\d+$'))}
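The rowkey regex can be checked locally before running it in the shell. RegexStringComparator performs a find() over the rowkey, which Python's re.search mirrors, so the ^ and $ anchors are what force a whole-key match; the | must be escaped because an unescaped | means alternation. regex_row_filter and the sample rowkeys are hypothetical. Java and Python regex syntax agree for this pattern but are not identical in general.

```python
import re

# The rowkey pattern from the shell command above; \| matches a literal '|'
ROW_PATTERN = re.compile(r"^user\|\d+$")


def regex_row_filter(rowkeys, pattern=ROW_PATTERN):
    """Keep the rowkeys where pattern.search() finds a match (like Matcher.find())."""
    return [key for key in rowkeys if pattern.search(key)]


rowkeys = ["1001", "1002", "user|4001", "user|abc", "xuser|4001"]
print(regex_row_filter(rowkeys))  # ['user|4001']
# Without the backslash, '|' means "or", so every sample key matches
print(regex_row_filter(rowkeys, re.compile(r"^user|\d+$")))
```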

- Count the rows in the table

count 'm_table'

-

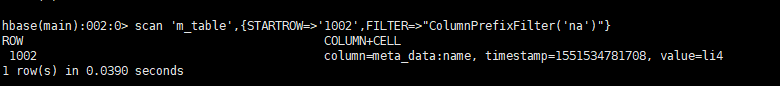

清空表

truncate 'm_table'

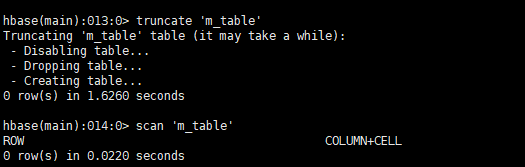

Operating HBase from Python

1. Install Thrift

Download thrift-0.8.0.tar.gz. Building it requires gcc, g++ and the Python development headers, installed with yum install gcc, yum install gcc-c++ and yum install python-devel.

Extract the archive, then build and install it:

./configure
make
make install

2. Start the Thrift server

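From the HBase installation directory, run ./bin/hbase-daemon.sh start thrift. The Thrift server listens on port 9090 by default, which is the port the scripts below connect to.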

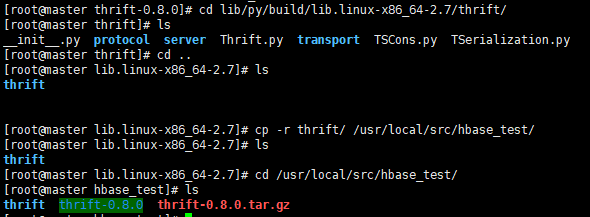
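Before running the Python scripts, it can help to confirm that the Thrift server is reachable. A minimal sketch using only the Python 3 standard library; thrift_port_open is a hypothetical helper, and its defaults assume the host name master and port 9090 used by the scripts below. It only checks that something accepts TCP connections on that port, not that it speaks the HBase Thrift protocol.

```python
import socket


def thrift_port_open(host="master", port=9090, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host name not resolvable
        return False
```

Calling thrift_port_open() should return True once hbase-daemon.sh start thrift has succeeded.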

3. Copy the Thrift Python module for development

The thrift module is under lib/py/build/lib.linux-x86_64-2.7 in the Thrift source tree. Copy it into the test directory:

cp -r thrift/ /usr/local/src/hbase_test/

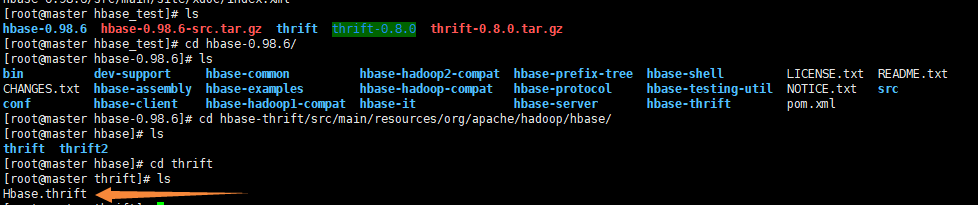

4. Download the source package matching your HBase version (here hbase-0.98.6-src.tar), upload it to the server and extract it

Change into the directory:

cd hbase-thrift/src/main/resources/org/apache/hadoop/hbase/

Locate the Hbase.thrift file.

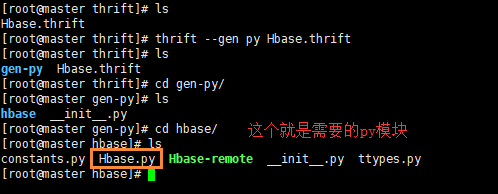

Generate the Python bindings:

thrift --gen py Hbase.thrift

Copy the generated hbase module (thrift writes it under gen-py/) into the test directory:

cp -r hbase/ /usr/local/src/hbase_test/

5. Development

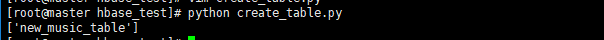

5.1 Create a table with Python: create_table.py

from thrift import Thrift

from thrift.transport import TSocket

from thrift.transport import TTransport

from thrift.protocol import TBinaryProtocol

from hbase import Hbase

from hbase.ttypes import *

transport = TSocket.TSocket('master', 9090)

transport = TTransport.TBufferedTransport(transport)

protocol = TBinaryProtocol.TBinaryProtocol(transport)

client = Hbase.Client(protocol)

transport.open()

#==============================

base_info_contents = ColumnDescriptor(name='meta-data', maxVersions=1)

other_info_contents = ColumnDescriptor(name='flags', maxVersions=1)

client.createTable('new_music_table', [base_info_contents, other_info_contents])

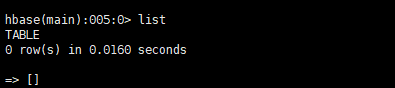

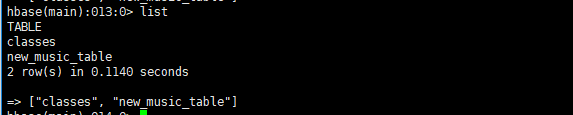

print client.getTableNames()查询一下有哪些表

Run the script to create the table: python create_table.py

5.2 Insert some data: insert_data.py

from thrift import Thrift

from thrift.transport import TSocket

from thrift.transport import TTransport

from thrift.protocol import TBinaryProtocol

from hbase import Hbase

from hbase.ttypes import *

transport = TSocket.TSocket('master', 9090)

transport = TTransport.TBufferedTransport(transport)

protocol = TBinaryProtocol.TBinaryProtocol(transport)

client = Hbase.Client(protocol)

transport.open()

tableName = 'new_music_table'

rowKey = '1100'

mutations = [Mutation(column="meta-data:name", value="wangqingshui"), \

Mutation(column="meta-data:tag", value="pop"), \

Mutation(column="flags:is_valid", value="TRUE")]

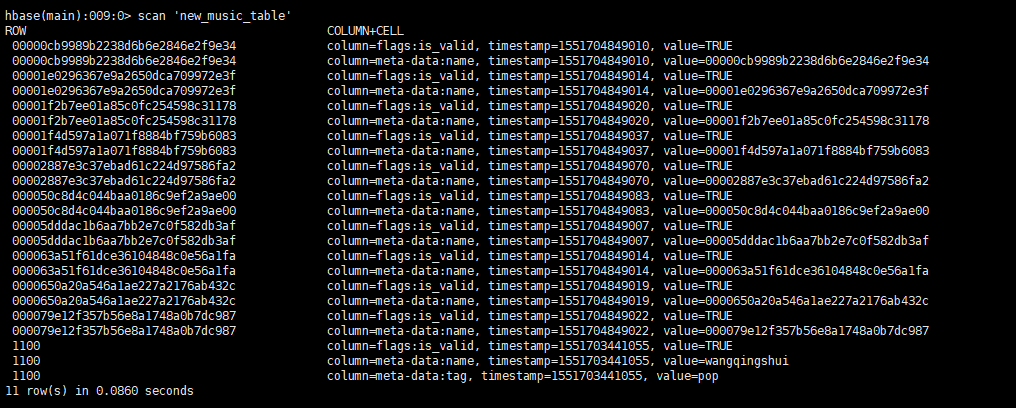

client.mutateRow(tableName, rowKey, mutations, None)执行脚本

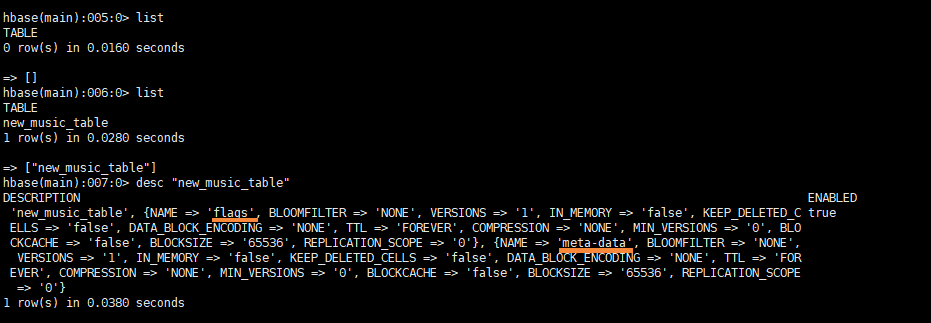

5.3 Read one row: get_one_line.py

from thrift import Thrift
from thrift.transport import TSocket
from thrift.transport import TTransport
from thrift.protocol import TBinaryProtocol
from hbase import Hbase
from hbase.ttypes import *

transport = TSocket.TSocket('master', 9090)
transport = TTransport.TBufferedTransport(transport)
protocol = TBinaryProtocol.TBinaryProtocol(transport)
client = Hbase.Client(protocol)
transport.open()

tableName = 'new_music_table'
rowKey = '1100'

result = client.getRow(tableName, rowKey, None)  # list of TRowResult, empty if the row is missing
for r in result:
    print 'the row is ', r.row
    print 'the name is ', r.columns.get('meta-data:name').value
    print 'the flag is ', r.columns.get('flags:is_valid').value

transport.close()

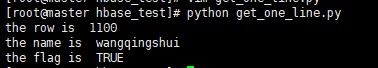

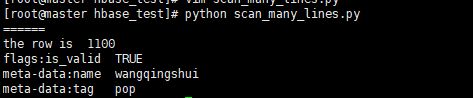

5.4 Scan multiple rows: scan_many_lines.py

from thrift import Thrift
from thrift.transport import TSocket
from thrift.transport import TTransport
from thrift.protocol import TBinaryProtocol
from hbase import Hbase
from hbase.ttypes import *

transport = TSocket.TSocket('master', 9090)
transport = TTransport.TBufferedTransport(transport)
protocol = TBinaryProtocol.TBinaryProtocol(transport)
client = Hbase.Client(protocol)
transport.open()

tableName = 'new_music_table'
scan = TScan()  # empty scan: the whole table

scanner_id = client.scannerOpenWithScan(tableName, scan, None)
result = client.scannerGetList(scanner_id, 10)  # fetch at most 10 rows
for r in result:
    print '======'
    print 'the row is ', r.row
    for k, v in r.columns.items():
        print "\t".join([k, v.value])

client.scannerClose(scanner_id)  # release the server-side scanner
transport.close()
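scan_many_lines.py reads only the first 10 rows. To read the whole table, keep calling scannerGetList until it returns an empty list, then close the scanner. A sketch of a generator for that, assuming the Thrift1 client generated from Hbase.thrift above; scan_all is a hypothetical name, and the code runs under both Python 2 and Python 3.

```python
def scan_all(client, table_name, scan, batch_size=10):
    """Yield every TRowResult of a scan, fetching batch_size rows per round trip.

    client is an Hbase.Client from the generated Thrift bindings; scan is a TScan.
    """
    scanner_id = client.scannerOpenWithScan(table_name, scan, None)
    try:
        while True:
            rows = client.scannerGetList(scanner_id, batch_size)
            if not rows:  # an empty list means the scanner is exhausted
                break
            for row in rows:
                yield row
    finally:
        client.scannerClose(scanner_id)  # always release the server-side scanner
```

In scan_many_lines.py, the fixed scannerGetList call could then be replaced by a loop such as for r in scan_all(client, tableName, TScan()).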

5.5 Batch insert with MapReduce

压缩2个模块文件

tar -zcvf hbase.tgz hbase

tar -zcvf thrift.tgz thrift

mkdir hbase_batch_insert

mv hbase.tgz hbase_batch_insert

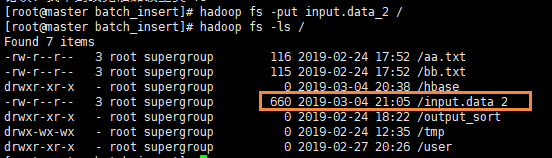

mv thrift.tgz hbase_batch_insert新建文件input.data_2,上传hdfs

00000cb9989b2238d6b6e2846e2f9e34 00000cb9989b2238d6b6e2846e2f9e34
00001e0296367e9a2650dca709972e3f 00001e0296367e9a2650dca709972e3f
00001f2b7ee01a85c0fc254598c31178 00001f2b7ee01a85c0fc254598c31178
00001f4d597a1a071f8884bf759b6083 00001f4d597a1a071f8884bf759b6083
00002887e3c37ebad61c224d97586fa2 00002887e3c37ebad61c224d97586fa2
000050c8d4c044baa0186c9ef2a9ae00 000050c8d4c044baa0186c9ef2a9ae00
00005dddac1b6aa7bb2e7c0f582db3af 00005dddac1b6aa7bb2e7c0f582db3af
000063a51f61dce36104848c0e56a1fa 000063a51f61dce36104848c0e56a1fa
0000650a20a546a1ae227a2176ab432c 0000650a20a546a1ae227a2176ab432c
000079e12f357b56e8a1748a0b7dc987 000079e12f357b56e8a1748a0b7dc987

map.py:

#!/usr/bin/python
import os
import sys

os.system('tar xvzf hbase.tgz > /dev/null')   # unpack the modules shipped with -file
os.system('tar xvzf thrift.tgz > /dev/null')

reload(sys)
sys.setdefaultencoding('utf-8')
sys.path.append("./")  # make the unpacked hbase and thrift modules importable

from thrift import Thrift
from thrift.transport import TSocket
from thrift.transport import TTransport
from thrift.protocol import TBinaryProtocol
from hbase import Hbase
from hbase.ttypes import *

transport = TSocket.TSocket('master', 9090)
transport = TTransport.TBufferedTransport(transport)
protocol = TBinaryProtocol.TBinaryProtocol(transport)
client = Hbase.Client(protocol)
transport.open()

tableName = 'new_music_table'

def mapper_func():
    for line in sys.stdin:
        ss = line.strip().split('\t')  # expected format: rowkey<TAB>value
        if len(ss) != 2:
            continue  # skip malformed lines
        key = ss[0].strip()
        val = ss[1].strip()
        rowKey = key
        mutations = [Mutation(column="meta-data:name", value=val),
                     Mutation(column="flags:is_valid", value="TRUE")]
        client.mutateRow(tableName, rowKey, mutations, None)

if __name__ == "__main__":
    module = sys.modules[__name__]
    func = getattr(module, sys.argv[1])  # e.g. "python map.py mapper_func"
    args = sys.argv[2:]
    func(*args)
    transport.close()

run.sh:

HADOOP_CMD="/usr/local/src/hadoop-2.6.5/bin/hadoop"
STREAM_JAR_PATH="/usr/local/src/hadoop-2.6.5/share/hadoop/tools/lib/hadoop-streaming-2.6.5.jar"

INPUT_FILE_PATH_1="/input.data_2"
OUTPUT_PATH="/output_hbase"

$HADOOP_CMD fs -rm -r -skipTrash $OUTPUT_PATH

# Step 1.
$HADOOP_CMD jar $STREAM_JAR_PATH \
    -input $INPUT_FILE_PATH_1 \
    -output $OUTPUT_PATH \
    -mapper "python map.py mapper_func" \
    -file ./map.py \
    -file "./hbase.tgz" \
    -file "./thrift.tgz"

Run the script with bash run.sh, then query the table.

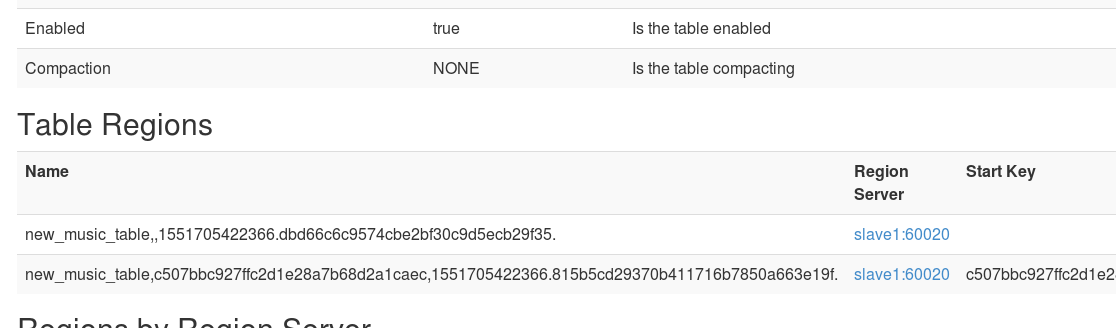

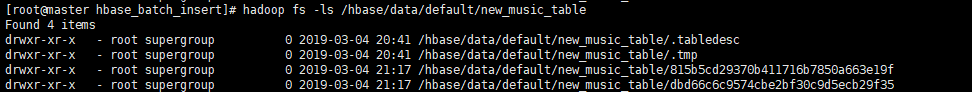

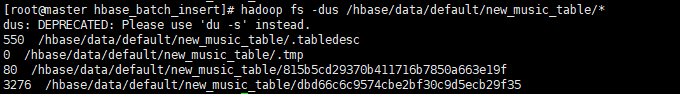

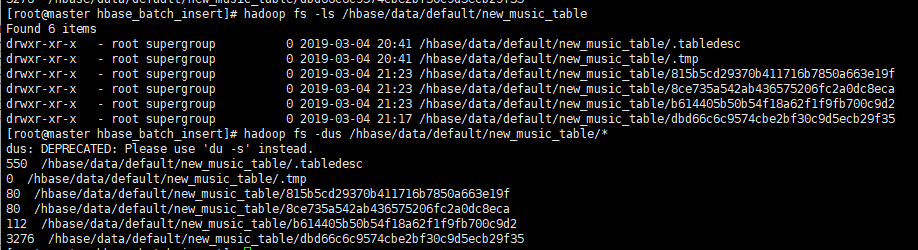
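The parsing in mapper_func can be pulled out into a pure function so that it can be tested locally without Hadoop or HBase. A sketch under that assumption; parse_line and parse_lines are hypothetical names that mirror the mapper's logic. It also shows that a line whose fields are separated by spaces rather than a tab is silently skipped, so check the separator in input.data_2 if the table stays empty.

```python
def parse_line(line):
    """Return (rowkey, {column: value}) for a valid line, or None.

    Same rules as mapper_func: exactly two tab-separated fields; the first is
    the rowkey, the second goes to meta-data:name, and flags:is_valid is TRUE.
    """
    fields = line.strip().split('\t')
    if len(fields) != 2:
        return None
    rowkey, value = fields[0].strip(), fields[1].strip()
    return rowkey, {"meta-data:name": value, "flags:is_valid": "TRUE"}


def parse_lines(lines):
    """Yield the parsed records, skipping malformed lines as the mapper does."""
    for line in lines:
        record = parse_line(line)
        if record is not None:
            yield record


sample = ["00000cb9989b2238d6b6e2846e2f9e34\t00000cb9989b2238d6b6e2846e2f9e34\n",
          "00001e0296367e9a2650dca709972e3f 00001e0296367e9a2650dca709972e3f\n"]
print(len(list(parse_lines(sample))))  # 1: the space-separated line is skipped
```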

Flush the data with flush 'new_music_table', then check the result in the web UI.

Split a region at a given rowkey:

hbase(main):011:0> split 'new_music_table','c507bbc927ffc2d1e28a7b68d2a1caec'

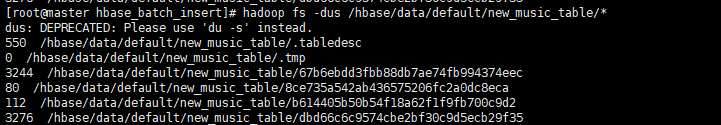

Split again:

hbase(main):012:0> split 'new_music_table','dbd66c6c9574cbe2bf30c9d5ecb29f35'

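Merge two regions. merge_region takes the encoded names of the two regions (shown in the web UI), and the trailing true forces the merge even if the regions are not adjacent:

hbase(main):013:0> merge_region 'dbd66c6c9574cbe2bf30c9d5ecb29f35','8ce735a542ab436575206fc2a0dc8eca',true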
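Each region holds a contiguous, half-open range of rowkeys, and every split point becomes the start key of a new region. Assuming new_music_table had a single region before the two split commands above, the sketch below finds which of the three resulting regions a rowkey lands in by binary search over the sorted split keys. region_for is a hypothetical helper for illustration; HBase itself locates regions through the meta table.

```python
import bisect


def region_for(rowkey, split_keys):
    """Return the index of the region that holds rowkey.

    split_keys are the sorted region boundaries: region i covers
    [split_keys[i-1], split_keys[i]), the first region starts at the empty key
    and the last one runs to the end of the table.
    """
    return bisect.bisect_right(split_keys, rowkey)


splits = [b"c507bbc927ffc2d1e28a7b68d2a1caec", b"dbd66c6c9574cbe2bf30c9d5ecb29f35"]
print(region_for(b"00000cb9989b2238d6b6e2846e2f9e34", splits))  # 0
print(region_for(b"c507bbc927ffc2d1e28a7b68d2a1caec", splits))  # 1 (a split key starts the next region)
print(region_for(b"ffff", splits))                               # 2
```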

Operating HBase from Java

pom.xml

<dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-it</artifactId>
    <version>1.2.2</version>
</dependency>

Simple CRUD

HabseDemo.java

package com.hadoop;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.*;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.util.Bytes;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class HabseDemo {

    public static final String TABLENAME = "new_music_table";
    public static final String COLUMNFAMILY = "meta-data";
    public static Configuration conf = HBaseConfiguration.create();
    // Table administration: create, disable and drop tables
    private static HBaseAdmin hBaseAdmin;
    // Data access: put, get and delete rows
    private static HTable table;

    @Before
    public void init() throws Exception {
        conf.set("hbase.master", "192.168.74.10:60000");
        conf.set("hbase.zookeeper.quorum", "192.168.74.10,192.168.74.11,192.168.74.12");
        hBaseAdmin = new HBaseAdmin(conf);
        table = new HTable(conf, TABLENAME);
    }

    @After
    public void destroy() throws IOException {
        if (hBaseAdmin != null) {
            hBaseAdmin.close();
        }
        if (table != null) {
            table.close();
        }
    }

    /**
     * Create the table with a single column family.
     */
    @Test
    public void createTable() throws IOException {
        HTableDescriptor desc = new HTableDescriptor(TableName.valueOf(TABLENAME));
        HColumnDescriptor family = new HColumnDescriptor(Bytes.toBytes(COLUMNFAMILY));
        desc.addFamily(family);
        hBaseAdmin.createTable(desc);
        System.out.println("Created table " + TABLENAME + " with column family " + COLUMNFAMILY);
    }

    @Test
    public void addtest() {
        try {
            addOneRecord(TABLENAME, "ip=192.168.74.200-001", COLUMNFAMILY, "ip", "192.168.1.201");
            addOneRecord(TABLENAME, "ip=192.168.74.200-001", COLUMNFAMILY, "name", "zhangsan");
            addOneRecord(TABLENAME, "ip=192.168.74.200-001", COLUMNFAMILY, "age", "15");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Write one cell.
     *
     * @param tableName table name (only used in the log message)
     * @param rowKey    row key
     * @param family    column family
     * @param qualifier column qualifier
     * @param value     cell value
     * @throws IOException if the put fails
     */
    public static void addOneRecord(String tableName, String rowKey, String family, String qualifier, String value)
            throws IOException {
        Put put = new Put(Bytes.toBytes(rowKey));
        put.addColumn(Bytes.toBytes(family), Bytes.toBytes(qualifier), Bytes.toBytes(value));
        table.put(put);
        System.out.println("insert record " + rowKey + " to table " + tableName + " success");
    }

    @Test
    public void getByRow() {
        try {
            selectRowKey(TABLENAME, "ip=192.168.74.200-001");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Read a single row and print all of its cells.
     *
     * @param tableName table name
     * @param rowKey    row key
     * @throws IOException if the get fails
     */
    public static void selectRowKey(String tableName, String rowKey) throws IOException {
        Get g = new Get(Bytes.toBytes(rowKey));
        Result rs = table.get(g);
        for (Cell kv : rs.rawCells()) {
            System.out.println("--------------------" + Bytes.toString(CellUtil.cloneRow(kv)) + "----------------------------");
            System.out.println("Column Family: " + Bytes.toString(CellUtil.cloneFamily(kv)));
            System.out.println("Column :" + Bytes.toString(CellUtil.cloneQualifier(kv)));
            System.out.println("value : " + Bytes.toString(CellUtil.cloneValue(kv)));
        }
    }

    @Test
    public void DeleteByRow() {
        try {
            delOneRecord(TABLENAME, "ip=192.168.74.200-001");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Delete a whole row.
     *
     * @param tableName table name (only used in the log message)
     * @param rowKey    row key
     * @throws IOException if the delete fails
     */
    public static void delOneRecord(String tableName, String rowKey) throws IOException {
        // Reuse the table opened in init() instead of opening (and leaking) a second HTable
        List<Delete> list = new ArrayList<Delete>();
        Delete delete = new Delete(Bytes.toBytes(rowKey));
        list.add(delete);
        table.delete(list);
        System.out.println("delete record " + rowKey + " from table " + tableName + " success!");
    }
}

Multi-condition query

HbaseScanManyRecords.java

import java.io.IOException;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

import org.apache.derby.iapi.types.DataValueDescriptor;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.Cell;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HConstants;

import org.apache.hadoop.hbase.KeyValue;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.filter.BinaryComparator;

import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;

import org.apache.hadoop.hbase.filter.Filter;

import org.apache.hadoop.hbase.filter.FilterList;

import org.apache.hadoop.hbase.filter.RowFilter;

import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;

import org.apache.hadoop.hbase.util.Bytes;

import org.apache.hadoop.hbase.TableName;

import org.dmg.pmml.False;

public class HbaseScanManyRecords {

private static final DataValueDescriptor CellUtil = null;

public static Configuration conf = HBaseConfiguration.create();

public static Connection connection = null;

public static Table table = null;

public static void getManyRecords() throws IOException {

connection = ConnectionFactory.createConnection(conf);

table = connection.getTable(TableName.valueOf("new_music_table"));

Scan scan = new Scan();

scan.setCaching(100);

ResultScanner scanner = table.getScanner(scan);

for (Result result : scanner) {

System.out.print("=================");

for (KeyValue kv : result.raw()) {

System.out.print(new String(kv.getRow()) + " ");

System.out.print(new String(kv.getFamily()) + ":");

System.out.print(new String(kv.getQualifier()) + " ");

System.out.print(kv.getTimestamp() + " ");

System.out.println(new String(kv.getValue()));

}

}

scanner.close();

table.close();

connection.close();

}

/*

public static void getManyRecordsWithFilter(String tableName, String rowKey) throws IOException {

table = new HTable(conf, tableName);

Scan scan = new Scan();

scan.setCaching(100);

// scan.setStartRow(Bytes.toBytes("ip=10.11.1.2-996"));

// scan.setStopRow(Bytes.toBytes("ip=10.11.1.2-997"));

Filter filter = new RowFilter(CompareOp.EQUAL, new BinaryComparator(Bytes.toBytes(rowKey)));

scan.setFilter(filter);

ResultScanner scanner = table.getScanner(scan);

for (Result result : scanner) {

for (KeyValue kv : result.raw()) {

System.out.print(new String(kv.getRow()) + " ");

System.out.print(new String(kv.getFamily()) + ":");

System.out.print(new String(kv.getQualifier()) + " ");

System.out.print(kv.getTimestamp() + " ");

System.out.println(new String(kv.getValue()));

}

}

}

*/

public static void getManyRecordsWithFilter(String tableName, ArrayList<String> rowKeyList) throws IOException {

table = new HTable(conf, tableName);

Scan scan = new Scan();

scan.setCaching(100);

List<Filter> filters = new ArrayList<Filter>();

for(int i = 0; i < rowKeyList.size(); i++) {

filters.add(new RowFilter(CompareOp.EQUAL, new BinaryComparator(Bytes.toBytes(rowKeyList.get(i)))));

}

FilterList filerList = new FilterList(FilterList.Operator.MUST_PASS_ONE, filters);

scan.setFilter(filerList);

ResultScanner scanner = table.getScanner(scan);

for (Result result : scanner) {

System.out.println("===============");

for (KeyValue kv : result.raw()) {

System.out.print(new String(kv.getRow()) + " ");

System.out.print(new String(kv.getFamily()) + ":");

System.out.print(new String(kv.getQualifier()) + " ");

System.out.print(kv.getTimestamp() + " ");

System.out.println(new String(kv.getValue()));

}

}

}

public static void main(String[] args) throws IOException {

conf.set("hbase.master", "192.168.74.10:60000");

conf.set("hbase.zookeeper.quorum", "192.168.74.10,192.168.74.11,192.168.74.12");

//conf.setLong("hbase.client.scanner.caching", 100);

// TODO Auto-generated method stub

try {

//getManyRecords();

//getManyRecordsWithFilter("user_action_table", "1001");

ArrayList<String> whiteRowKeyList =new ArrayList<>();

whiteRowKeyList.add("ip=192.168.74.200-001");

whiteRowKeyList.add("1100");

getManyRecordsWithFilter("new_music_table", whiteRowKeyList);

//getManyRecords(TableName);

} catch (Exception e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

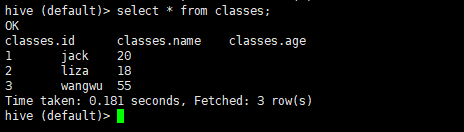

}hive&&Hbase

First create an HBase table:

create 'classes','user'

Insert data:

put 'classes','001','user:name','jack'
put 'classes','001','user:age','20'
put 'classes','002','user:name','liza'
put 'classes','002','user:age','18'

Then create the Hive table:

create external table classes(id int, name string, age int)
STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,user:name,user:age")
TBLPROPERTIES("hbase.table.name" = "classes");

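Add another row in HBase. Because the external Hive table reads the HBase table directly, the new row is visible from Hive without reloading anything: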

put 'classes','003','user:name','wangwu'
put 'classes','003','user:age','55'
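The hbase.columns.mapping entries are matched to the Hive columns by position: :key feeds id, user:name feeds name, and user:age feeds age. A simplified Python model of that lookup, assuming string-encoded cells as written by the shell puts above. to_hive_row is hypothetical, and treating missing or unparsable cells as NULL (None) is an approximation of Hive's behavior, not its actual code.

```python
# Simplified model of hbase.columns.mapping; to_hive_row is hypothetical, not Hive code.
MAPPING = [":key", "user:name", "user:age"]  # from SERDEPROPERTIES, in Hive column order
HIVE_TYPES = [int, str, int]                 # id int, name string, age int


def to_hive_row(rowkey, cells, mapping=MAPPING, types=HIVE_TYPES):
    """Map one HBase row to a Hive tuple; missing or unparsable cells become None (NULL)."""
    row = []
    for spec, cast in zip(mapping, types):
        raw = rowkey if spec == ":key" else cells.get(spec)
        try:
            row.append(None if raw is None else cast(raw))
        except ValueError:
            row.append(None)
    return tuple(row)


print(to_hive_row("003", {"user:name": "wangwu", "user:age": "55"}))  # (3, 'wangwu', 55)
```

For instance, the rowkey '003' is parsed into the int column id, so it comes out as 3 rather than '003'.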