- pom.xml

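- Note: if running the topology fails with java.lang.ClassNotFoundException: backtype.storm.topology.IRichSpout, comment out the scope element of the storm-core dependency. With provided scope, storm-core is left off the classpath when the topology is run locally (for example from the IDE). Restore the scope before packaging for a cluster, because the cluster already supplies storm-core.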

- Note: if running fails with java.lang.NoSuchMethodError: com.lmax.disruptor.RingBuffer., declare the LMAX Disruptor version explicitly, as in the disruptor dependency below.

```xml
<dependency>
    <groupId>org.apache.storm</groupId>
    <artifactId>storm-core</artifactId>
    <version>0.9.3</version>
    <!-- comment out when running locally, restore when packaging for a cluster (see the note above) -->
    <scope>provided</scope>
</dependency>
<dependency>
    <groupId>com.lmax</groupId>
    <artifactId>disruptor</artifactId>
    <version>3.2.0</version>
</dependency>
```
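When you hit one of the two errors above, first check what is actually on the runtime classpath. The sketch below uses only the JDK and is not part of Storm. ClasspathCheck is a hypothetical helper name. It reports whether a class can be loaded and which jar supplied it. For example, you can use it to see which disruptor jar provides com.lmax.disruptor.RingBuffer.

```java
import java.security.CodeSource;

// Hypothetical diagnostic helper (plain JDK): is a class loadable, and where did it come from?
class ClasspathCheck {

    // True if the class can be found on the current classpath (it is not initialized).
    static boolean isPresent(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    // Location (usually a jar URL) the class was loaded from, or a short marker otherwise.
    static String locate(String className) {
        try {
            Class<?> c = Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            CodeSource source = c.getProtectionDomain().getCodeSource();
            // JDK classes loaded by the bootstrap loader have no code source.
            return source == null ? "(JDK / bootstrap)" : String.valueOf(source.getLocation());
        } catch (ClassNotFoundException e) {
            return "(not found)";
        }
    }

    public static void main(String[] args) {
        System.out.println(locate("backtype.storm.topology.IRichSpout"));
        System.out.println(locate("com.lmax.disruptor.RingBuffer"));
    }
}
```

If "(not found)" is printed for IRichSpout during a local run, the provided scope is the likely cause. If RingBuffer resolves to an unexpected jar, a transitive dependency is probably pulling in a different disruptor version.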

```java
import backtype.storm.spout.SpoutOutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichSpout;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Values;

import java.util.List;
import java.util.Map;

public class WcSpout extends BaseRichSpout {

    private Map map;
    private TopologyContext topologyContext;
    private SpoutOutputCollector spoutOutputCollector;

    int i = 0;

    // Called once when the spout task starts; keep the collector for emitting tuples later.
    @Override
    public void open(Map map, TopologyContext topologyContext, SpoutOutputCollector spoutOutputCollector) {
        this.map = map;
        this.topologyContext = topologyContext;
        this.spoutOutputCollector = spoutOutputCollector;
    }

    // nextTuple() and declareOutputFields() are cut off in the original notes.
}
```

```java
import backtype.storm.task.OutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichBolt;
import backtype.storm.tuple.Tuple;

import java.util.Map;

public class WcBolt extends BaseRichBolt {

    private Map map;
    private TopologyContext topologyContext;
    private OutputCollector outputCollector;

    int sum = 0;

    // Called once when the bolt task starts; keep the collector for emitting tuples later.
    @Override
    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
        this.map = map;
        this.topologyContext = topologyContext;
        this.outputCollector = outputCollector;
    }

    // execute() and declareOutputFields() are cut off in the original notes.
}
```

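```java
import backtype.storm.Config;
import backtype.storm.LocalCluster;
import backtype.storm.topology.TopologyBuilder;

public class Test {

    public static void main(String[] args) {
        TopologyBuilder tb = new TopologyBuilder();
        // The rest of main() (wiring the spout and bolt, submitting the topology) is cut off in the original notes.
    }
}
```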

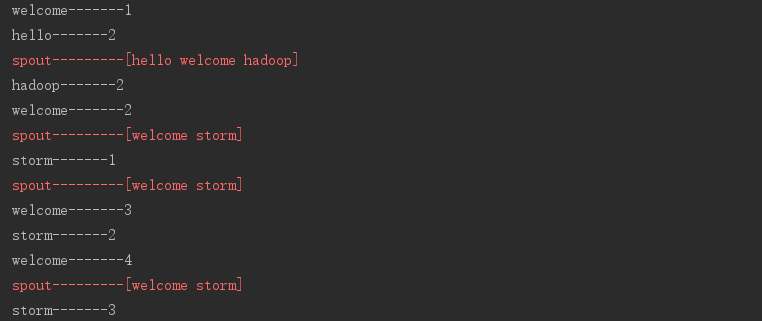

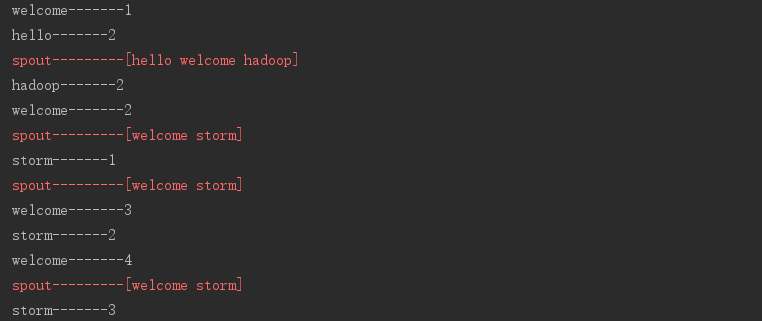

- Test results
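The demo1 spout and bolt are cut off before their nextTuple() and execute() methods. The counters i and sum suggest a running total: the spout emits an increasing number and the bolt adds it up. That reading is an assumption, not something the notes confirm. The plain-Java sketch below runs that assumed flow without Storm, so the expected output is easy to check. All names in it are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java simulation (no Storm) of an assumed spout -> bolt running-sum flow.
class RunningSumSketch {

    // Stands in for the spout: each nextTuple() call emits the next integer, starting at 1.
    static class NumberSource {
        private int i = 0;

        int nextTuple() {
            return ++i;
        }
    }

    // Stands in for the bolt: execute() adds each incoming number to a running total.
    static class SumSink {
        private int sum = 0;

        int execute(int value) {
            sum += value;
            return sum;
        }
    }

    // Pushes n tuples from source to sink and records the total after each one.
    static List<Integer> run(int n) {
        NumberSource source = new NumberSource();
        SumSink sink = new SumSink();
        List<Integer> totals = new ArrayList<>();
        for (int k = 0; k < n; k++) {
            totals.add(sink.execute(source.nextTuple()));
        }
        return totals;
    }

    public static void main(String[] args) {
        System.out.println(run(5)); // [1, 3, 6, 10, 15]
    }
}
```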

demo2: WordCount (word-counting) example

```java
import backtype.storm.spout.SpoutOutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichSpout;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Values;
import backtype.storm.utils.Utils;

import java.util.List;
import java.util.Map;
import java.util.Random;

public class WcSpout extends BaseRichSpout {

    private SpoutOutputCollector spoutOutputCollector;

    // Sample sentences the spout picks from at random.
    String[] text = {
            "hello welcome hadoop",
            "hello hadoop",
            "welcome storm"
    };

    Random r = new Random();

    @Override
    public void open(Map map, TopologyContext topologyContext, SpoutOutputCollector spoutOutputCollector) {
        this.spoutOutputCollector = spoutOutputCollector;
    }

    // nextTuple() and declareOutputFields() are cut off in the original notes.
}
```

```java
import backtype.storm.task.OutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichBolt;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Tuple;
import backtype.storm.tuple.Values;

import java.util.List;
import java.util.Map;

public class WcSplitBolt extends BaseRichBolt {

    private OutputCollector outputCollector;

    // Keep the collector so execute() can emit one tuple per word.
    @Override
    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
        this.outputCollector = outputCollector;
    }

    // execute() and declareOutputFields() are cut off in the original notes.
}
```
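WcSplitBolt is cut off before execute(). Two things point to the split step: the spout's sample sentences, and WcCountBolt reading a field named "w". Together they suggest the bolt splits each line on spaces and emits one tuple per word. Here is a plain-Java sketch of that step, under that assumption. SplitSketch is a hypothetical name and no Storm API is used.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of the assumed split step: one input line -> one value per word.
class SplitSketch {

    static List<String> split(String line) {
        List<String> words = new ArrayList<>();
        // Trim first, then split on runs of whitespace; skip the empty token a blank line yields.
        for (String w : line.trim().split("\\s+")) {
            if (!w.isEmpty()) {
                words.add(w);
            }
        }
        return words;
    }
}
```

Inside the real bolt, each word would then be emitted with something like outputCollector.emit(new Values(w)). declareOutputFields would declare new Fields("w") so the field name matches what WcCountBolt reads.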

```java
import backtype.storm.task.OutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichBolt;
import backtype.storm.tuple.Tuple;

import java.util.HashMap;
import java.util.Map;

public class WcCountBolt extends BaseRichBolt {

    // Word counts held in this task's memory. When the bolt runs with more than one task,
    // the same word must always reach the same task (fields grouping on "w") for counts to be correct.
    private Map<String, Integer> map = new HashMap<>();

    @Override
    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
        // Nothing to set up: this bolt only prints and never emits downstream.
    }

    @Override
    public void execute(Tuple tuple) {
        // Read the word emitted by the split bolt and increment its count.
        String w = tuple.getStringByField("w");
        Integer count = 1;
        if (map.containsKey(w)) {
            count = map.get(w) + 1;
        }
        map.put(w, count);
        System.out.println(w + "-------" + count);
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
        // Terminal bolt: declares no output fields.
    }
}
```
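WcCountBolt's execute() does a read-increment-write on a HashMap. The sketch below runs the same counting logic in plain Java over one pass of WcSpout's three sample sentences, which shows the counts to expect. WordCountSketch is a hypothetical name and the code does not use Storm. In the real topology the spout keeps picking sentences at random, so the printed counts keep growing.

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java sketch of demo2's split + count logic (no Storm involved).
class WordCountSketch {

    // Counts words across all lines; Map.merge replaces WcCountBolt's containsKey/get/put sequence.
    static Map<String, Integer> count(String... lines) {
        Map<String, Integer> counts = new HashMap<>();
        for (String line : lines) {
            for (String w : line.trim().split("\\s+")) {
                if (!w.isEmpty()) {
                    counts.merge(w, 1, Integer::sum);
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = count("hello welcome hadoop", "hello hadoop", "welcome storm");
        System.out.println(counts.get("hello") + " " + counts.get("storm")); // 2 1
    }
}
```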

```java
import backtype.storm.Config;
import backtype.storm.LocalCluster;
import backtype.storm.StormSubmitter;
import backtype.storm.generated.AlreadyAliveException;
import backtype.storm.generated.InvalidTopologyException;
import backtype.storm.topology.TopologyBuilder;
import backtype.storm.tuple.Fields;

public class Test {

    public static void main(String[] args) {
        TopologyBuilder tb = new TopologyBuilder();
        tb.setSpout("WcSpout", new WcSpout());
        // The rest of main() (the bolts, their groupings, and topology submission) is cut off in the original notes.
    }
}
```

- Test results

Stream groupings (i.e., strategies for distributing tuples among tasks)

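- 1.Shuffle Grouping
  - Random grouping: tuples in the stream are distributed randomly, so each bolt task receives roughly the same number of tuples.
  - In effect this works like round-robin and spreads the load evenly.
- 2.Fields Grouping
  - Grouping by field. For example, if the stream is grouped by the "user-id" field, tuples with the same "user-id" always go to the same task of the bolt, while tuples with different "user-id" values may go to different tasks.
- 3.All Grouping
  - Broadcast: every tuple is replicated to all tasks of the subscribing bolt.
- 4.Global Grouping
  - Global grouping: the entire stream goes to a single task of the bolt, the one with the lowest task id.
- 5.None Grouping
  - No grouping: you do not care how the stream is grouped. Currently this has exactly the same effect as shuffle grouping. The intended difference, planned for future Storm versions where possible, is that Storm would run a bolt with none grouping in the same thread as the bolt or spout it subscribes to.
- 6.Direct Grouping
  - Direct grouping is a special grouping in which the producer of a tuple decides which task of the consumer processes it. It can only be used on streams declared as direct streams, and such tuples must be emitted with the emitDirect method. The producer can get the task ids of its consumers from the TopologyContext, or from OutputCollector.emit, which returns the ids of the tasks the tuple was sent to.
- 7.Local or shuffle grouping
  - Local or random grouping: if the target bolt has one or more tasks in the same worker process as the source task, tuples are shuffled among those in-process tasks only. Otherwise it behaves like a normal shuffle grouping.
- 8.customGrouping
  - Custom grouping: you implement the grouping yourself (through Storm's CustomStreamGrouping interface), much like writing your own Partitioner in MapReduce.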
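The following sketch makes the task-selection rules for shuffle, fields, all and global grouping concrete. It is a plain-Java simulation with a hypothetical class name, not Storm's implementation. It assumes two things:

- Fields grouping picks a task by hashing the field value modulo the number of tasks.
- Shuffle grouping cycles through a shuffled task list.

Both assumptions match the behavior described above, but Storm's exact hashing and shuffling code may differ between versions.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Objects;
import java.util.Random;

// Plain-Java simulation of how several groupings choose target task(s) for one tuple.
class GroupingSketch {

    private final List<Integer> taskIds;
    private final List<Integer> shuffled;
    private int next = 0;

    GroupingSketch(List<Integer> taskIds, long seed) {
        this.taskIds = new ArrayList<>(taskIds);
        this.shuffled = new ArrayList<>(taskIds);
        Collections.shuffle(shuffled, new Random(seed));
    }

    // Shuffle grouping: walk a shuffled task list round-robin, keeping load even.
    int shuffle() {
        int task = shuffled.get(next);
        next = (next + 1) % shuffled.size();
        return task;
    }

    // Fields grouping: equal field values always map to the same task.
    int fields(Object fieldValue) {
        int index = Math.floorMod(Objects.hashCode(fieldValue), taskIds.size());
        return taskIds.get(index);
    }

    // All grouping: every task receives a copy.
    List<Integer> all() {
        return new ArrayList<>(taskIds);
    }

    // Global grouping: the task with the lowest id receives everything.
    int global() {
        return Collections.min(taskIds);
    }
}
```

In an actual topology the grouping is chosen when a bolt is declared. For example, tb.setBolt("WcCountBolt", new WcCountBolt(), 3).fieldsGrouping("WcSplitBolt", new Fields("w")) would route each word to a fixed counting task. The component ids in that call are hypothetical.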

demo3: stream grouping example
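Each record in this demo's sample data has a domain, a session id and a date-time, separated by whitespace. The notes do not show demo3's code. The sketch below assumes the demo counts visits per session id, the kind of work a fields-grouped bolt would do once each task owns a subset of session ids. It is plain Java and SessionLogSketch is a hypothetical name.

```java
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch: parse "domain sessionId date time" records and count them per session id.
class SessionLogSketch {

    // One parsed record; date and time are kept together as text.
    static final class Visit {
        final String domain;
        final String sessionId;
        final String timestamp;

        Visit(String domain, String sessionId, String timestamp) {
            this.domain = domain;
            this.sessionId = sessionId;
            this.timestamp = timestamp;
        }
    }

    // Splits on whitespace (spaces or tabs); date and time are separate tokens, rejoined here.
    static Visit parse(String line) {
        String[] parts = line.trim().split("\\s+");
        if (parts.length < 4) {
            throw new IllegalArgumentException("incomplete record: " + line);
        }
        return new Visit(parts[0], parts[1], parts[2] + " " + parts[3]);
    }

    // Counts records per session id; TreeMap keeps the keys sorted for readable output.
    static Map<String, Integer> countBySession(String... lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            counts.merge(parse(line).sessionId, 1, Integer::sum);
        }
        return counts;
    }
}
```

parse() rejects lines with fewer than four tokens rather than guessing the missing fields.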

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 12:40:49

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 09:40:49

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 08:40:51

www.taobao.com VVVYH6Y4V4SFXZ56JIPDPB4V678 2017-02-21 12:40:49

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 08:40:51

www.taobao.com VVVYH6Y4V4SFXZ56JIPDPB4V678 2017-02-21 08:40:52

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 12:40:49

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 09:40:49

www.taobao.com ABYH6Y4V4SCVXTG6DPB4VH9U123 2017-02-21 08:40:53

www.taobao.com ABYH6Y4V4SCVXTG6DPB4VH9U123 2017-02-21 09:40:49

www.taobao.com ABYH6Y4V4SCVXTG6DPB4VH9U123 2017-02-21 10:40:49

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 12:40:49

www.taobao.com ABYH6Y4V4SCVXTG6DPB4VH9U123 2017-02-21 08:40:50

www.taobao.com CYYH6Y2345GHI899OFG4V9U567 2017-02-21 11:40:49

www.taobao.com VVVYH6Y4V4SFXZ56JIPDPB4V678 2017-02-21 11:40:49

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 08:40:50

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 08:40:53

www.taobao.com VVVYH6Y4V4SFXZ56JIPDPB4V678 2017-02-21 09:40:49

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 09:40:49

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 08:40:52

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 11:40:49

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 08:40:51

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 08:40:53

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 08:40:53

www.taobao.com VVVYH6Y4V4SFXZ56JIPDPB4V678 2017-02-21 08:40:50

www.taobao.com CYYH6Y2345GHI899OFG4V9U567 2017-02-21 08:40:53

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 12:40:49

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 11:40:49

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 08:40:50

www.taobao.com XXYH6YCGFJYERTT834R52FDXV9U34 2017-02-21 08:40:53

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 08:40:52

www.taobao.com CYYH6Y2345GHI899OFG4V9U567 2017-02-21 08:40:51

www.taobao.com ABYH6Y4V4SCVXTG6DPB4VH9U123 2017-02-21 10:40:49

www.taobao.com BBYH61456FGHHJ7JL89RG5VV9UYU7 2017-02-21 09:40:49

www.taobao.com