0 WordCount with Spark

Let's look at the result first:

pom.xml:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.chenjj</groupId>
  <artifactId>sparkDemo</artifactId>
  <version>1.0-SNAPSHOT</version>

  <properties>
    <spark.version>2.2.0</spark.version>
  </properties>

  <repositories>
    <repository>
      <id>nexus-aliyun</id>
      <name>Nexus aliyun</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public</url>
    </repository>
  </repositories>

  <dependencies>
    <!-- https://mvnrepository.com/artifact/org.apache.spark/spark-core_2.11 -->
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.11</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <!-- Scala suffix (_2.11) and version must match spark-core, otherwise the classpath mixes Scala 2.10 and 2.11 -->
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-mllib_2.11</artifactId>
      <version>${spark.version}</version>
    </dependency>
  </dependencies>

  <build>
    <plugins>
      <!-- compiles the Scala sources under src/main/scala during mvn package -->
      <plugin>
        <groupId>net.alchim31.maven</groupId>
        <artifactId>scala-maven-plugin</artifactId>
        <version>3.2.2</version>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <artifactId>maven-assembly-plugin</artifactId>
        <version>2.3</version>
        <configuration>
          <classifier>dist</classifier>
          <appendAssemblyId>true</appendAssemblyId>
          <descriptorRefs>
            <descriptorRef>jar-with-dependencies</descriptorRef>
          </descriptorRefs>
        </configuration>
        <executions>
          <execution>
            <id>make-assembly</id>
            <phase>package</phase>
            <goals>
              <goal>single</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>

1. Create a file named aa.txt in the project directory with the following content:

我们 都是 好人
我们 好人 你们 他们
好人 坏人 他们

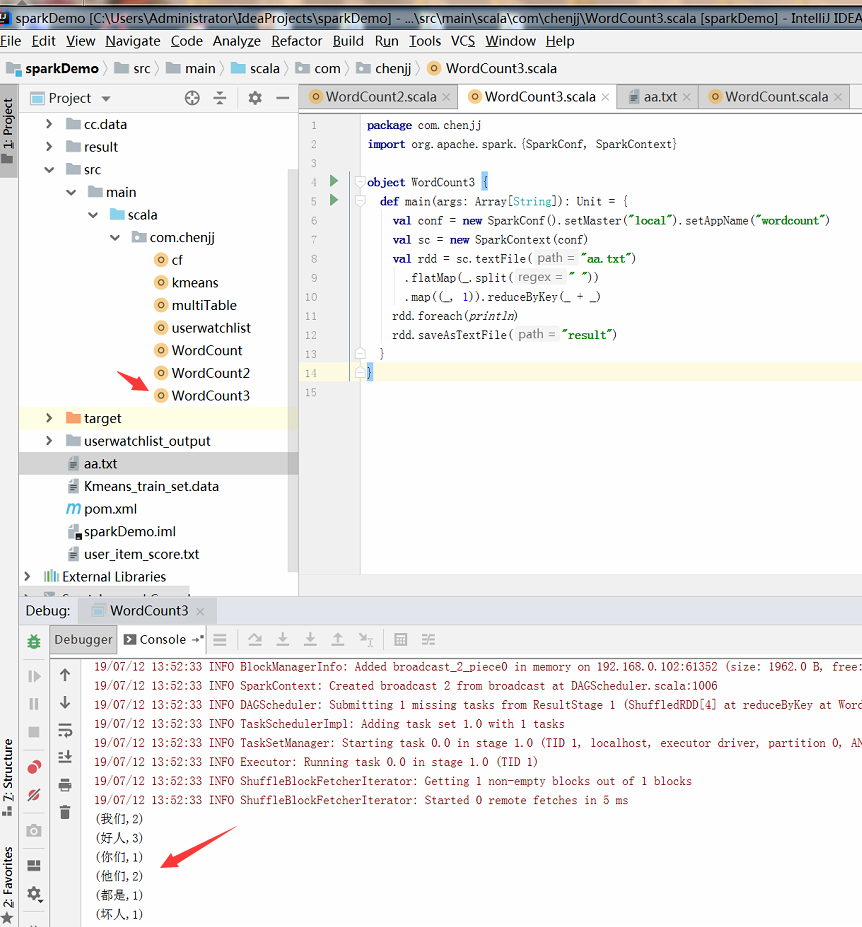
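To know in advance what the Spark job in step 2 should produce for this input, the same flatMap / map / reduceByKey chain can be mimicked with ordinary Scala collections. This is a minimal sketch, not Spark code: the object name ExpectedWordCount is hypothetical, and groupBy plus reduce stands in for reduceByKey. For the three lines above the counts should be 好人 3, 我们 2, 他们 2, and 都是, 你们, 坏人 1 each.

```scala
// Plain Scala collections only; no Spark dependency is needed to run this.
object ExpectedWordCount {
  // The three lines of aa.txt shown above.
  val lines: Seq[String] = Seq(
    "我们 都是 好人",
    "我们 好人 你们 他们",
    "好人 坏人 他们"
  )

  // Mirrors flatMap(_.split(" ")) -> map((_, 1)) -> reduceByKey(_ + _).
  def countWords(input: Seq[String]): Map[String, Int] =
    input
      .flatMap(_.split(" "))   // one element per word
      .map((_, 1))             // pair each word with 1
      .groupBy(_._1)           // group the pairs by word (the key)
      .map { case (word, pairs) => word -> pairs.map(_._2).reduce(_ + _) } // add up the 1s per key

  def main(args: Array[String]): Unit =
    // Sort by descending count, then by word, so the printout is deterministic.
    countWords(lines).toSeq.sortBy { case (w, n) => (-n, w) }.foreach(println)
}
```

Unlike reduceByKey, this version pulls every pair into one collection before summing, which is fine for a three-line file but is exactly what Spark avoids on large data.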

2. Scala version: WordCount3.scala

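package com.chenjj

import org.apache.spark.{SparkConf, SparkContext}

object WordCount3 {

  def main(args: Array[String]): Unit = {
    // SparkConf sets the application name and the master (run mode);
    // "local" runs Spark inside this JVM with a single worker thread
    val conf = new SparkConf().setMaster("local").setAppName("wordcount")

    // SparkContext is the entry point through which the program talks to Spark
    val sc = new SparkContext(conf)

    val rdd = sc.textFile("aa.txt")   // read aa.txt line by line
      .flatMap(_.split(" "))          // split every line into words
      .map((_, 1))                    // pair each word with a count of 1
      .reduceByKey(_ + _)             // sum the counts for each word

    // In local mode this prints to the console; on a cluster it prints in the executor logs
    rdd.foreach(println)

    // Writes part-* files into the "result" directory; fails if that directory already exists
    rdd.saveAsTextFile("result")

    sc.stop()
  }
}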
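Spark's saveAsTextFile refuses to write into an output directory that already exists, so running WordCount3 a second time fails with a FileAlreadyExistsException unless result is removed first. A minimal sketch of a cleanup helper using only java.nio follows; the name OutputDirCleaner is hypothetical, and it assumes the output lives on the local file system (it would not work for an HDFS path).

```scala
import java.io.IOException
import java.nio.file.{FileVisitResult, Files, Path, Paths, SimpleFileVisitor}
import java.nio.file.attribute.BasicFileAttributes

object OutputDirCleaner {
  // Recursively deletes `dir` if it exists; does nothing when it is missing.
  def deleteIfExists(dir: Path): Unit =
    if (Files.exists(dir)) {
      Files.walkFileTree(dir, new SimpleFileVisitor[Path] {
        override def visitFile(file: Path, attrs: BasicFileAttributes): FileVisitResult = {
          Files.delete(file) // delete each file (e.g. part-00000, _SUCCESS)
          FileVisitResult.CONTINUE
        }
        override def postVisitDirectory(d: Path, e: IOException): FileVisitResult = {
          if (e != null) throw e
          Files.delete(d) // the directory is empty once its children are gone
          FileVisitResult.CONTINUE
        }
      })
    }

  def main(args: Array[String]): Unit =
    // Hypothetical usage: call this before rdd.saveAsTextFile("result").
    deleteIfExists(Paths.get("result"))
}
```

Deleting output automatically is convenient for local experiments; on shared data it is safer to write to a new, timestamped directory instead.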

3. Output of the run: