yolov5

Reference: https://zhuanlan.zhihu.com/p/501798155

Downloading and using the source code

Download the source archive and the weights file (yolov5s.pt) from the release page

https://github.com/ultralytics/yolov5/tags

https://github.com/ultralytics/yolov5/releases/tag/v5.0

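Installing the third-party libraries required for YOLOv5 training

1. Install Anaconda and check its version with conda -V, then change into the yolov5 directory: cd [path_to_yolov5]

2. Create a virtual environment: conda create -n yolov5GPU python=3.7 -y

3. Activate the virtual environment: conda activate yolov5GPU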

4. pip install -r requirements.txt

5. Copy xx.pt into the yolov5 folder

6. Open the yolov5 source in PyCharm, then go to File->Settings->Project:yolov5-5.0->Python Interpreter->Add->Conda Environment->Existing environment (D:\ProgramData\Anaconda3\envs\yolov5\python.exe). After saving, the bottom-right corner shows Python3.7(yolo5)

7. Running detect.py in PyCharm fails with AttributeError: 'Upsample' object has no attribute 'recompute_scale_factor'

Open D:\ProgramData\Anaconda3\envs\yolov5\lib\site-packages\torch\nn\modules\upsampling.py at line 155, edit forward as follows, and save

    def forward(self, input: Tensor) -> Tensor:
        # return F.interpolate(input, self.size, self.scale_factor, self.mode, self.align_corners,
        #                      recompute_scale_factor=self.recompute_scale_factor)
        return F.interpolate(input, self.size, self.scale_factor, self.mode, self.align_corners)
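Editing a file inside site-packages changes PyTorch for every project that uses this environment. A less invasive alternative, sketched here, is to add the missing attribute to the model's Upsample modules after the weights are loaded. The helper name `add_missing_attribute` is hypothetical, and the commented usage assumes a `model` variable holding the loaded network, as in detect.py; check this against your own checkout before relying on it.

```python
def add_missing_attribute(modules, target_type, name, default=None):
    """Give every instance of target_type that lacks `name` a default value.

    Returns the number of objects that were patched.
    """
    patched = 0
    for module in modules:
        if isinstance(module, target_type) and not hasattr(module, name):
            setattr(module, name, default)
            patched += 1
    return patched


# Assumed usage, after the model has been loaded (requires PyTorch):
#   import torch.nn as nn
#   add_missing_attribute(model.modules(), nn.Upsample, "recompute_scale_factor")
```

Objects that already carry the attribute are left untouched, so the call is safe to repeat.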

Errors raised during training

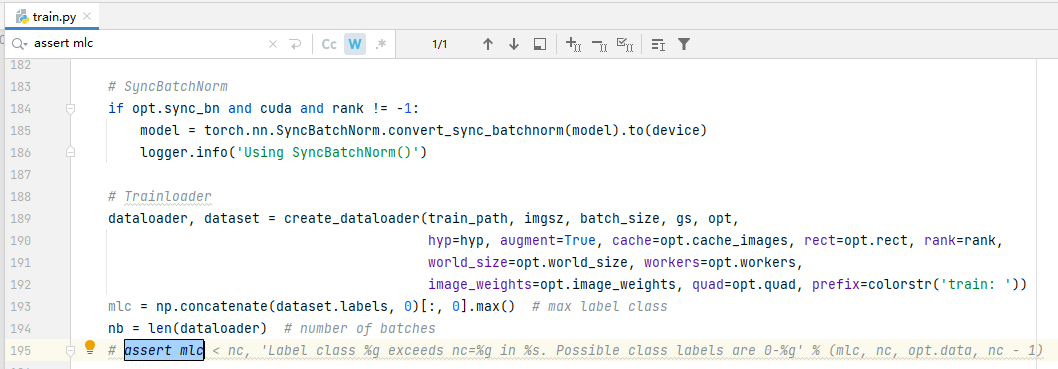

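Problem 1: AssertionError: Label class 1 exceeds nc=1 in data/fire.yaml. Possible class labels are 0-0

Fix: comment out this line in train.py: #assert mlc < nc, 'Label class %g exceeds nc=%g in %s. Possible class labels are 0-%g' % (mlc, nc, opt.data, nc - 1)

The assertion fires because at least one label file contains class index 1, while nc=1 only allows index 0. Commenting it out only silences the check; correcting the class indices in the label files (or setting nc to match them) is the safer fix.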
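The assertion in Problem 1 is triggered when a label file contains a class index that is not below nc. Before disabling the check, it may help to find the offending lines. The following is a minimal sketch using only the standard library; the function name and the example folder are assumptions, and it expects YOLO-style label files whose first field on each line is the class index.

```python
from pathlib import Path


def find_out_of_range_labels(label_dir, nc):
    """Return (file name, line number, class id) for labels outside 0..nc-1."""
    problems = []
    for txt in sorted(Path(label_dir).glob("*.txt")):
        if txt.name == "classes.txt":  # labelimg's class list, not a label file
            continue
        for line_no, line in enumerate(txt.read_text().splitlines(), start=1):
            fields = line.split()
            if not fields:
                continue  # skip blank lines
            class_id = int(float(fields[0]))
            if not 0 <= class_id < nc:
                problems.append((txt.name, line_no, class_id))
    return problems


# Assumed usage (hypothetical folder, adjust to your dataset):
#   for name, line_no, class_id in find_out_of_range_labels(r"G:\yolov\datasets\fire\labels\train", nc=1):
#       print(name, line_no, class_id)
```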

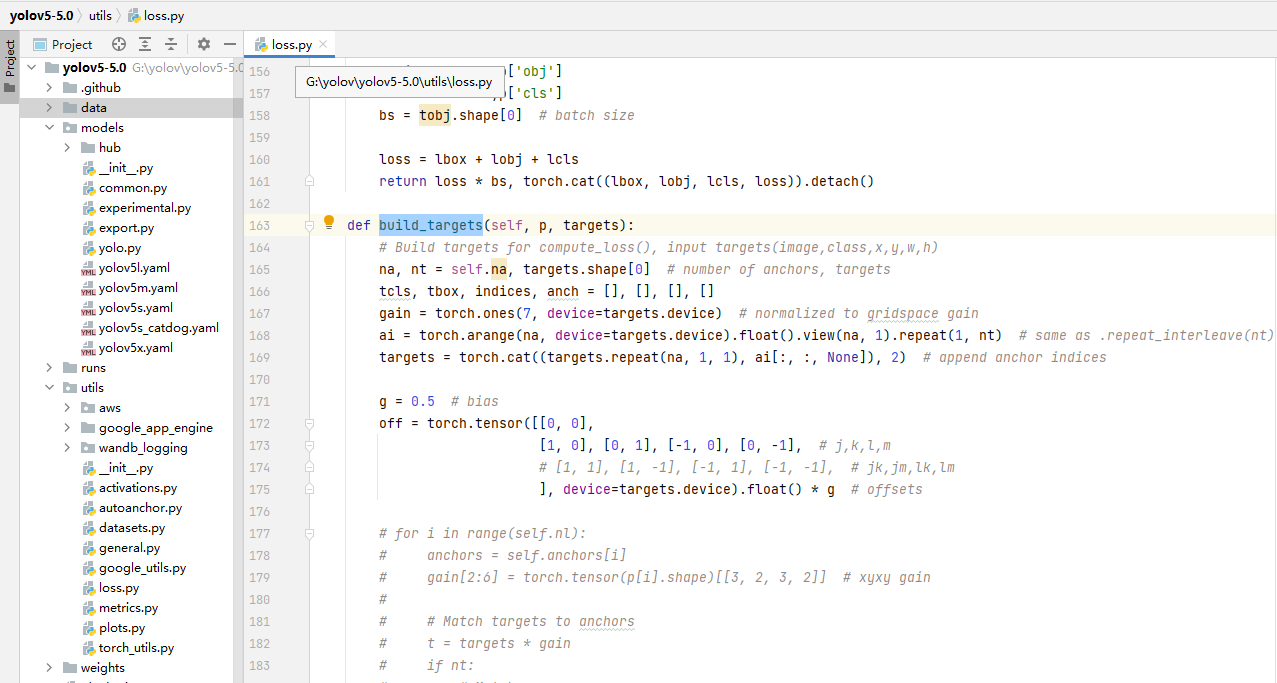

Problem 2:

    Traceback (most recent call last):
      File "G:/yolov/yolov5-5.0/train.py", line 543, in <module>
        train(hyp, opt, device, tb_writer)
      File "G:/yolov/yolov5-5.0/train.py", line 304, in train
        loss, loss_items = compute_loss(pred, targets.to(device)) # loss scaled by batch_size
      File "G:\yolov\yolov5-5.0\utils\loss.py", line 117, in __call__
        tcls, tbox, indices, anchors = self.build_targets(p, targets) # targets
      File "G:\yolov\yolov5-5.0\utils\loss.py", line 211, in build_targets
        indices.append((b, a, gj.clamp_(0, gain[3] - 1), gi.clamp_(0, gain[2] - 1))) # image, anchor, grid indices
    RuntimeError: result type Float can't be cast to the desired output type __int64

Fix: in loss.py (line 1136), change the per-layer loop of build_targets so that it reads:

    for i in range(self.nl):
        anchors, shape = self.anchors[i], p[i].shape
        gain[2:6] = torch.tensor(shape)[[3, 2, 3, 2]]  # xyxy gain

        # Match targets to anchors
        t = targets * gain  # shape(3,n,7)
        if nt:
            # Matches
            r = t[..., 4:6] / anchors[:, None]  # wh ratio
            j = torch.max(r, 1 / r).max(2)[0] < self.hyp['anchor_t']  # compare
            # j = wh_iou(anchors, t[:, 4:6]) > model.hyp['iou_t']  # iou(3,n)=wh_iou(anchors(3,2), gwh(n,2))
            t = t[j]  # filter

            # Offsets
            gxy = t[:, 2:4]  # grid xy
            gxi = gain[[2, 3]] - gxy  # inverse
            j, k = ((gxy % 1 < g) & (gxy > 1)).T
            l, m = ((gxi % 1 < g) & (gxi > 1)).T
            j = torch.stack((torch.ones_like(j), j, k, l, m))
            t = t.repeat((5, 1, 1))[j]
            offsets = (torch.zeros_like(gxy)[None] + off[:, None])[j]
        else:
            t = targets[0]
            offsets = 0

        # Define
        bc, gxy, gwh, a = t.chunk(4, 1)  # (image, class), grid xy, grid wh, anchors
        a, (b, c) = a.long().view(-1), bc.long().T  # anchors, image, class
        gij = (gxy - offsets).long()
        gi, gj = gij.T  # grid indices

        # Append
        indices.append((b, a, gj.clamp_(0, shape[2] - 1), gi.clamp_(0, shape[3] - 1)))  # image, anchor, grid
        tbox.append(torch.cat((gxy - gij, gwh), 1))  # box
        anch.append(anchors[a])  # anchors
        tcls.append(c)  # class

    return tcls, tbox, indices, anch

Testing:

conda activate yolov5GPU

python detect.py --weights yolov5s.pt --source data/images/bus.jpg # results are saved in runs/detect

conda activate yolov5GPU

python detect.py --weights yolov5s.pt --source test.mp4
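When several images or videos need to be checked, the two detect.py commands can be driven from a short script. This is a sketch under stated assumptions: the helper names are hypothetical, only the `--weights` and `--source` flags shown in these notes are used, and the script is assumed to run from the yolov5 folder inside the activated environment.

```python
import subprocess
import sys


def build_detect_command(source, weights="yolov5s.pt"):
    """Build the detect.py command line for one image or video source."""
    # sys.executable keeps the call inside the currently active interpreter.
    return [sys.executable, "detect.py", "--weights", weights, "--source", source]


def run_detect(sources, weights="yolov5s.pt", cwd="."):
    """Run detect.py once per source; cwd is assumed to be the yolov5 folder."""
    for source in sources:
        subprocess.run(build_detect_command(source, weights), cwd=cwd, check=True)


# Assumed usage:
#   run_detect(["data/images/bus.jpg", "test.mp4"])
```

Using `sys.executable` rather than a bare `python` avoids accidentally picking up an interpreter outside the conda environment.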

Training your own model

1. Collect the dataset (images/train/001.jpg)

    dataset                # dataset name, for example fire
    ├── images
    │   ├── train
    │   │   └── xx.jpg
    │   └── val
    │       └── xx.jpg
    └── labels
        ├── train
        │   └── xx.txt
        └── val
            └── xx.txt
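The folder layout above can be created and checked with a few lines of Python. This is a minimal sketch: the function names are hypothetical, and pairing images with labels by identical file stem is an assumption based on the xx.jpg / xx.txt naming shown in the tree.

```python
from pathlib import Path

SPLITS = ("train", "val")


def make_dataset_skeleton(root):
    """Create images/{train,val} and labels/{train,val} under root."""
    for kind in ("images", "labels"):
        for split in SPLITS:
            (Path(root) / kind / split).mkdir(parents=True, exist_ok=True)


def images_without_labels(root, split):
    """Return names of images in a split that have no same-named .txt label."""
    image_dir = Path(root) / "images" / split
    label_dir = Path(root) / "labels" / split
    return sorted(
        img.name
        for img in image_dir.iterdir()
        if img.is_file() and not (label_dir / (img.stem + ".txt")).exists()
    )
```

An image without a label file is not necessarily a mistake, since it may be an intentional background sample, but an unexpected entry in this list usually points to a skipped annotation.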

2. Annotate the dataset (labels/train/001.txt). YOLOv5 detection can be used for semi-automatic annotation, with labelimg used to verify the results

Installing labelimg

pip install labelimg -i https://pypi.tuna.tsinghua.edu.cn/simple

Check that smoke/labels/train contains a classes.txt file, and enter the class name smoke in it

From cmd, run: labelimg

"Open Dir" smoke\images\train

"Change Save Dir" smoke\labels\train
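Each line of a YOLO label file has the form `class x_center y_center width height`, with the four box values normalized to the 0-1 range by the image size. For semi-automatic annotation, boxes given in pixels have to be converted into that form before labelimg can display them. The sketch below shows the arithmetic; the function name is hypothetical and the input box is assumed to be (left, top, right, bottom) in pixels.

```python
def to_yolo_line(class_id, box, image_width, image_height):
    """Convert a pixel box (left, top, right, bottom) to a YOLO label line."""
    left, top, right, bottom = box
    x_center = (left + right) / 2 / image_width
    y_center = (top + bottom) / 2 / image_height
    width = (right - left) / image_width
    height = (bottom - top) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


print(to_yolo_line(0, (0, 0, 100, 50), image_width=200, image_height=100))
# prints: 0 0.250000 0.250000 0.500000 0.500000
```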

3. Train on the dataset (result: weights/best.pt). Do not use Chinese characters in the yaml file

yolov5-5.0/data/fire.yaml

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

path: G:\yolov\datasets\fire # dataset root dir

train: G:\yolov\datasets\fire\images\train # train images (relative to 'path')

val: G:\yolov\datasets\fire\images\val # val images (relative to 'path')

test: # test images (optional)

# Classes

nc: 1 # number of classes

names: ['fire'] # class names

yolov5-5.0/data/catdog.yaml

path: ../datasets/catdog

train: ../datasets/catdog/images/train

val: ../datasets/catdog/images/val

# Classes

nc: 2

names: ['cat', 'dog']
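A mismatch between nc and names, or a mistyped folder, is easier to catch before training starts. The following sketch checks a dataset config that has already been loaded into a dict. The function name is hypothetical, and it only handles the case where train and val are directories; the file and list forms mentioned in the yaml comment are not covered. Relative paths are resolved against the current working directory.

```python
from pathlib import Path


def check_dataset_config(cfg):
    """Return a list of human-readable problems found in a dataset config dict."""
    problems = []
    names = cfg.get("names", [])
    if cfg.get("nc") != len(names):
        problems.append(f"nc={cfg.get('nc')} but {len(names)} class names are listed")
    for key in ("train", "val"):
        path = cfg.get(key)
        if not path:
            problems.append(f"'{key}' is missing")
        elif not Path(path).is_dir():
            problems.append(f"'{key}' directory not found: {path}")
    return problems


# Assumed usage with PyYAML (listed in requirements.txt):
#   import yaml
#   with open("data/catdog.yaml", encoding="utf-8") as f:
#       print(check_dataset_config(yaml.safe_load(f)))
```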

yolov5-5.0/models/yolov5s_catdog.yaml

Set nc: 2
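The nc edit can also be scripted, which helps when several model configs are derived from one base file. This is a sketch only: the function name is hypothetical, and the commented usage assumes yolov5s_catdog.yaml is produced from a copy of models/yolov5s.yaml, which these notes do not state explicitly.

```python
import re


def set_nc(yaml_text, nc):
    """Return yaml_text with the value of the top-level `nc:` key replaced."""
    new_text, count = re.subn(r"(?m)^nc:\s*\d+", f"nc: {nc}", yaml_text, count=1)
    if count == 0:
        raise ValueError("no top-level 'nc:' line found")
    return new_text


# Assumed usage:
#   from pathlib import Path
#   src = Path("models/yolov5s.yaml").read_text()
#   Path("models/yolov5s_catdog.yaml").write_text(set_nc(src, 2))
```

Only the number is replaced, so any trailing comment on the nc line is preserved.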

python train.py --weights yolov5s.pt --data data/fire.yaml --workers 1 --batch-size 8

python train.py --img 640 --batch 16 --epochs 300 --data ./data/catdog.yaml --cfg ./models/yolov5s_catdog.yaml --weights ./weights/yolov5s.pt --device 0
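Each training run writes its weights into a new folder under runs/train (exp, exp2, and so on), so the newest best.pt has to be located before it can be passed to detect.py. The helper below is a small sketch for that; its name is hypothetical, and choosing the newest file by modification time is an assumption.

```python
from pathlib import Path


def latest_best_weights(runs_dir="runs/train"):
    """Return the most recently modified best.pt under runs_dir, or None."""
    candidates = list(Path(runs_dir).glob("*/weights/best.pt"))
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)


# Assumed usage:
#   print(latest_best_weights())
```

The returned path can then be given to detect.py through `--weights`.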

4. Test the model: object detection (objname=people; position=box_l, box_t, box_r, box_b; confidence=0.6; name=29)
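The parameters in step 4 can be read as an object name, a box given by its left, top, right, and bottom edges, and a confidence threshold of 0.6. Under that reading, which is an assumption, filtering detection results could look like the sketch below. The `Detection` class and `keep_confident` function are hypothetical, and the meaning of `name=29` is left uninterpreted.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    objname: str
    box_l: float
    box_t: float
    box_r: float
    box_b: float
    confidence: float


def keep_confident(detections, objname, min_confidence=0.6):
    """Keep detections of one class whose confidence reaches the threshold."""
    return [
        d for d in detections
        if d.objname == objname and d.confidence >= min_confidence
    ]
```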

