How a kubebuilder Operator Runs


Updates

- 2023/12/18: code walkthrough updated to v0.16.0; added the section on watches

Overview

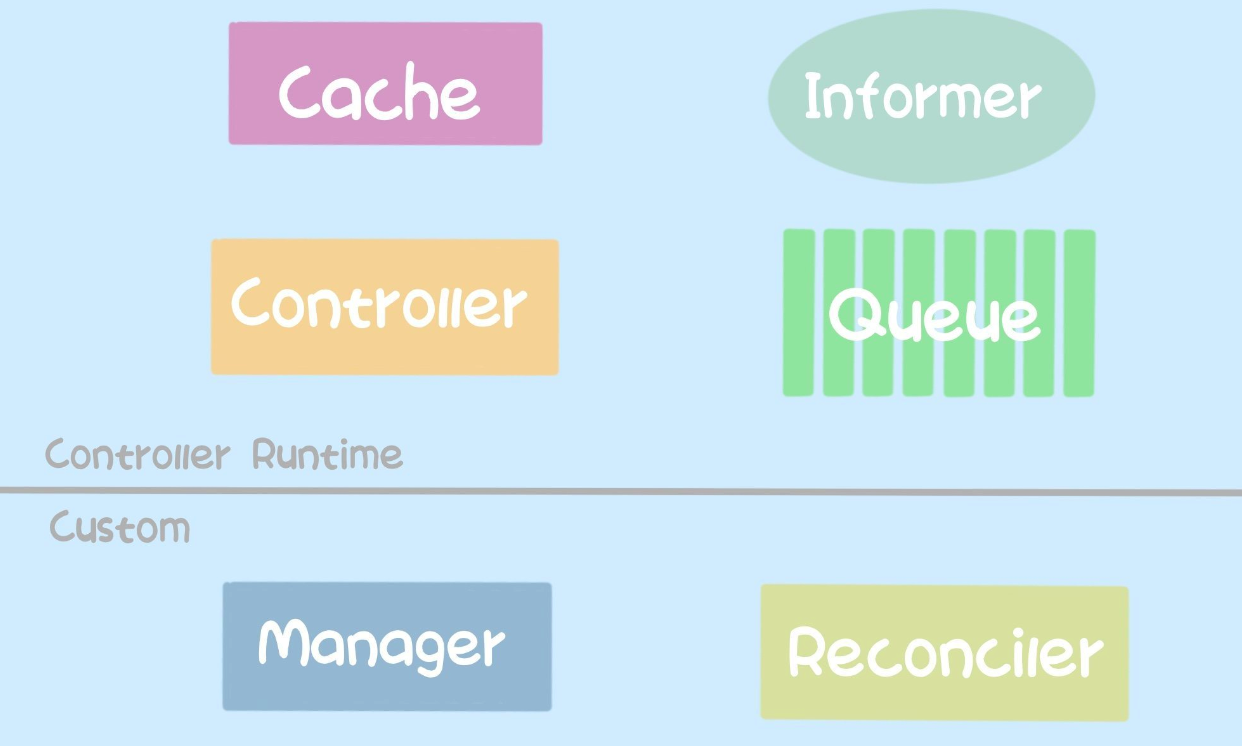

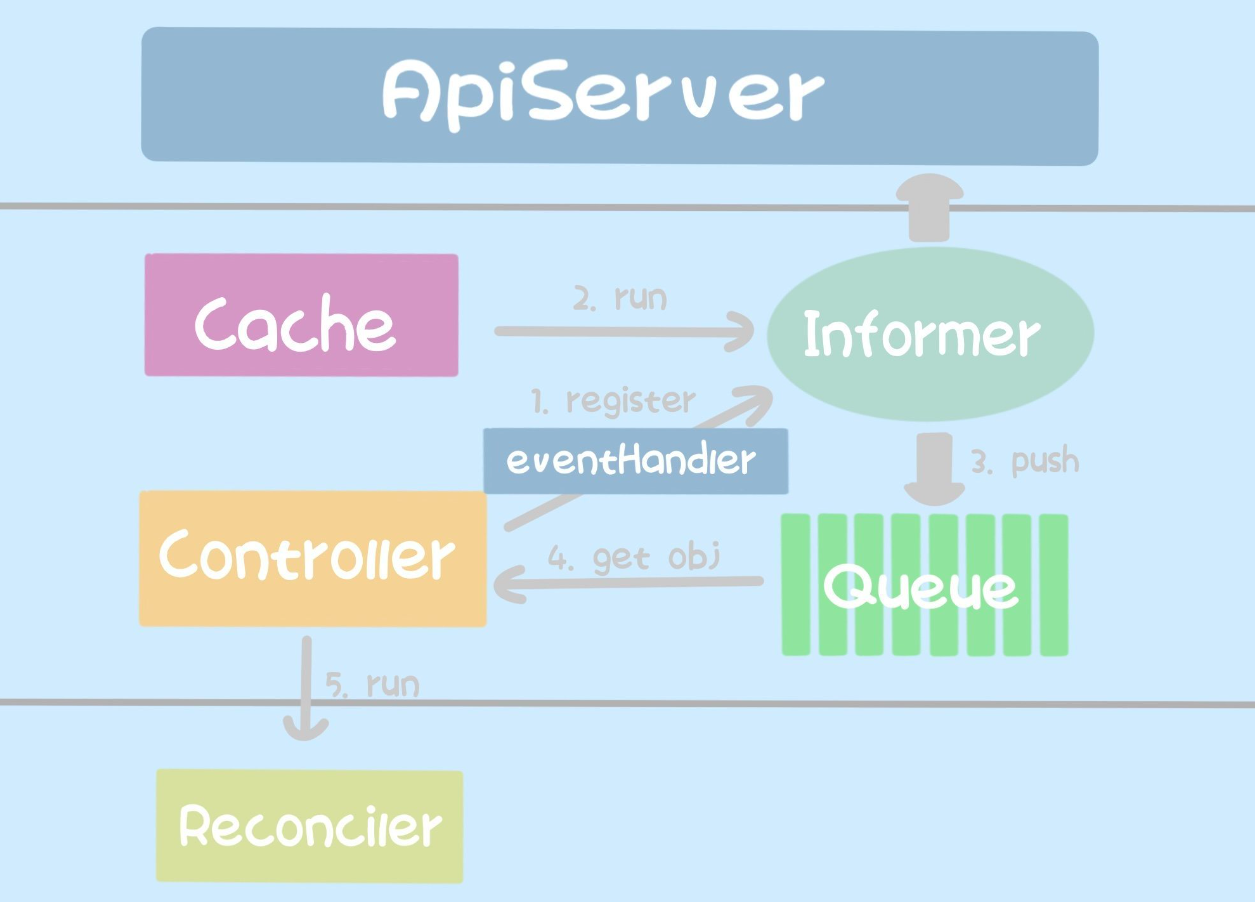

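The diagrams above show kubebuilder's architecture. The outermost layer is driven by a component called the Manager, which contains several components. The Cache holds the mapping from GVK to informer and uses informers to cache Kubernetes objects. The Controller buffers what the informers deliver in a workqueue (more precisely, the objects' namespace/name keys), then takes items off the workqueue and passes them to the Reconciler for processing.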

This article does not cover how to use kubebuilder. If you need that, see the following three articles:

- Kubernetes Operator for Beginners — What, Why, How

- Advanced Kubernetes Operators Development

- Advanced Kubernetes Operator Development with Finalizer, Informer, and Webhook

The controller-runtime version used here is v0.16.0.

The following passage is quoted from "Controller Runtime 的四种使用姿势" (Four Ways to Use Controller Runtime):

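The components involved are the Manager and Reconciler created by the user, and the Cache and Controller started by Controller Runtime itself. On the user side, the Manager must be created during initialization and is used to start the Controller Runtime components. The Reconciler is a component the user supplies to implement their own business logic.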

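On the controller-runtime side, the Cache is, as the name suggests, a cache. It sets up Informers that connect to the API server to watch resources, and the watched objects are pushed into a queue. The Controller does two things: it registers eventHandlers with the Informers, and it takes items from the queue and runs the user's Reconciler.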

The overall workflow on the controller-runtime side is as follows:

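First, the Controller registers an eventHandler for a specific resource with an Informer. Then the Cache starts the Informer, which sends requests to the API server and establishes a connection. When the Informer detects a change to a resource, it uses the eventHandler registered by the Controller to decide whether to push the item into the queue. When an item is pushed into the queue, the Controller takes it out and runs the user's Reconciler.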
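To make the four steps concrete, here is a toy, stdlib-only Go model of that flow. Informer, Controller, Object and the filter argument are hypothetical stand-ins, not controller-runtime types. The filter plays the role of the eventHandler plus predicates that decide what gets enqueued, and a plain slice stands in for the workqueue.

```go
package main

// Toy model: register handler -> informer sees a change -> handler decides
// whether to enqueue -> controller pops the key and calls Reconcile.
// All types here are hypothetical stand-ins, not controller-runtime types.

// Request carries only the object's key, like reconcile.Request.
type Request struct{ Namespace, Name string }

// Object is a minimal stand-in for a Kubernetes object.
type Object struct {
	Namespace, Name string
	Labels          map[string]string
}

// Informer fans every observed change out to all registered handlers.
type Informer struct{ handlers []func(Object) }

func (i *Informer) AddEventHandler(h func(Object)) { i.handlers = append(i.handlers, h) }

// Observe simulates the informer receiving a change from the API server.
func (i *Informer) Observe(obj Object) {
	for _, h := range i.handlers {
		h(obj)
	}
}

// Controller owns a queue and the user's reconcile function.
type Controller struct {
	queue     []Request
	Reconcile func(Request)
}

// Watch registers an event handler on the informer (step 1). The filter
// stands in for the eventHandler/predicates deciding what to enqueue.
func (c *Controller) Watch(inf *Informer, filter func(Object) bool) {
	inf.AddEventHandler(func(obj Object) {
		if filter(obj) {
			c.queue = append(c.queue, Request{obj.Namespace, obj.Name})
		}
	})
}

// ProcessAll pops queued keys and hands each one to Reconcile; it returns
// how many requests were reconciled.
func (c *Controller) ProcessAll() int {
	n := 0
	for len(c.queue) > 0 {
		req := c.queue[0]
		c.queue = c.queue[1:]
		c.Reconcile(req)
		n++
	}
	return n
}
```

Only the key travels through the queue. The object itself never reaches Reconcile, which is why the Reconciler later has to read it back.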

The example code below was generated by following: Building your own kubernetes CRDs

Managers

The manager runs controllers and webhooks, and it sets up shared dependencies such as clients, caches, and schemes.

How kubebuilder handles it

kubebuilder automatically creates the Manager in main.go:

```go
mgr, err := ctrl.NewManager(ctrl.GetConfigOrDie(), ctrl.Options{
	Scheme:                 scheme,
	MetricsBindAddress:     metricsAddr,
	Port:                   9443,
	HealthProbeBindAddress: probeAddr,
	LeaderElection:         enableLeaderElection,
	LeaderElectionID:       "3b9f5c61.com.bolingcavalry",
})
```

Controllers are started by calling Manager.Start.

Controllers

A controller uses events to trigger reconcile requests. You can create a controller with controller.New, and manager.Start starts it.

```go
func New(name string, mgr manager.Manager, options Options) (Controller, error) {
	c, err := NewUnmanaged(name, mgr, options)
	if err != nil {
		return nil, err
	}

	// Add the controller as a Manager components
	return c, mgr.Add(c) // register the controller with the manager
}
```

How kubebuilder handles it

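kubebuilder generates a SetupWithManager method in the controller file, and main.go calls it at startup. Its Complete call creates the controller and adds it to the manager, as described below: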

```go
func (r *GuestbookReconciler) SetupWithManager(mgr ctrl.Manager) error {
	return ctrl.NewControllerManagedBy(mgr).
		For(&webappv1.Guestbook{}).
		Complete(r)
}
```

main.go calls Manager.Start to start the controllers:

```go
if err := mgr.Start(ctrl.SetupSignalHandler()); err != nil {
	setupLog.Error(err, "problem running manager")
	os.Exit(1)
}
```

Reconcilers

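At the core of a Controller is its implementation of the Reconciler interface. A Reconciler receives a reconcile request that contains an object's name and namespace. Reconcile compares the current state of the object, and of the resources it owns, with the desired state, and makes adjustments accordingly.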

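A Controller typically triggers reconcile in response to cluster events, such as creating, updating, or deleting Kubernetes objects. It can also respond to external events, such as GitHub Webhooks or polling external resources.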

Note: the request passed to the Reconciler contains only the object's name and namespace, with no other information about the object. You therefore have to fetch the object with a Kubernetes client. The relevant type definitions are:

```go
// Request contains the information necessary to reconcile a Kubernetes object. This includes the
// information to uniquely identify the object - its Name and Namespace. It does NOT contain information about
// any specific Event or the object contents itself.
type Request struct {
	// NamespacedName is the name and namespace of the object to reconcile.
	types.NamespacedName
}

// NamespacedName comprises a resource name, with a mandatory namespace,
// rendered as "<namespace>/<name>". Being a type captures intent and
// helps make sure that UIDs, namespaced names and non-namespaced names
// do not get conflated in code. For most use cases, namespace and name
// will already have been format validated at the API entry point, so we
// don't do that here. Where that's not the case (e.g. in testing),
// consider using NamespacedNameOrDie() in testing.go in this package.
type NamespacedName struct {
	Namespace string
	Name      string
}
```
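Here is a minimal sketch of what "fetch the object yourself" means. It assumes a plain map stands in for the client/cache, and Store, Guestbook and Reconcile here are hypothetical. In a real Reconciler the lookup would be r.Get(ctx, req.NamespacedName, &obj). A NotFound error usually means the object was deleted, which is why client.IgnoreNotFound is commonly used.

```go
package main

import "fmt"

// NamespacedName mirrors types.NamespacedName: just the object's key.
type NamespacedName struct{ Namespace, Name string }

// Guestbook is a hypothetical object kept in a toy store.
type Guestbook struct {
	Key  NamespacedName
	Size int
}

// Store is a stand-in for the client/cache a real Reconciler reads from.
type Store map[NamespacedName]Guestbook

// Reconcile receives only the key; everything else must be read from the
// store. A missing object is treated as deleted: nothing to do.
func Reconcile(s Store, req NamespacedName) string {
	gb, ok := s[req]
	if !ok {
		return fmt.Sprintf("%s/%s not found, ignoring", req.Namespace, req.Name)
	}
	return fmt.Sprintf("%s/%s wants size %d", req.Namespace, req.Name, gb.Size)
}
```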

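The doc comment of the Reconciler interface (below) gives an example of its processing logic:

- Read an object and all the Pods it owns

- Observe that the object's spec asks for 5 replicas while only 1 Pod replica actually exists

- Create 4 Pods and set their OwnerReferences to the object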

```go
/*
Reconciler implements a Kubernetes API for a specific Resource by Creating, Updating or Deleting Kubernetes
objects, or by making changes to systems external to the cluster (e.g. cloudproviders, github, etc).

reconcile implementations compare the state specified in an object by a user against the actual cluster state,
and then perform operations to make the actual cluster state reflect the state specified by the user.

Typically, reconcile is triggered by a Controller in response to cluster Events (e.g. Creating, Updating,
Deleting Kubernetes objects) or external Events (GitHub Webhooks, polling external sources, etc).

Example reconcile Logic:

* Read an object and all the Pods it owns.
* Observe that the object spec specifies 5 replicas but actual cluster contains only 1 Pod replica.
* Create 4 Pods and set their OwnerReferences to the object.

reconcile may be implemented as either a type:

	type reconcile struct {}

	func (reconcile) reconcile(controller.Request) (controller.Result, error) {
		// Implement business logic of reading and writing objects here
		return controller.Result{}, nil
	}

Or as a function:

	controller.Func(func(o controller.Request) (controller.Result, error) {
		// Implement business logic of reading and writing objects here
		return controller.Result{}, nil
	})

Reconciliation is level-based, meaning action isn't driven off changes in individual Events, but instead is
driven by actual cluster state read from the apiserver or a local cache.
For example if responding to a Pod Delete Event, the Request won't contain that a Pod was deleted,
instead the reconcile function observes this when reading the cluster state and seeing the Pod as missing.
*/
type Reconciler interface {
	// Reconcile performs a full reconciliation for the object referred to by the Request.
	// The Controller will requeue the Request to be processed again if an error is non-nil or
	// Result.Requeue is true, otherwise upon completion it will remove the work from the queue.
	Reconcile(context.Context, Request) (Result, error)
}
```
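The replica example from the doc comment can be written as a level-based calculation: compare desired and observed state, then emit only the difference. This is a stdlib-only sketch. Owner, Pod and PodsToCreate are hypothetical, and OwnerUID stands in for a single OwnerReference. The generated names are illustrative only; real controllers often set metadata.generateName instead.

```go
package main

import "fmt"

// Pod is a hypothetical, stripped-down Pod with one owner reference.
type Pod struct {
	Name     string
	OwnerUID string
}

// Owner stands in for the custom object that declares desired replicas.
type Owner struct {
	UID      string
	Name     string
	Replicas int
}

// PodsToCreate counts only the Pods that point back at the owner (what
// OwnerReferences make possible) and returns the missing ones.
// It never looks at which event triggered it: the result depends only on
// the current state, which is what "level-based" means.
func PodsToCreate(owner Owner, existing []Pod) []Pod {
	owned := 0
	for _, p := range existing {
		if p.OwnerUID == owner.UID {
			owned++
		}
	}
	var out []Pod
	for i := owned; i < owner.Replicas; i++ {
		out = append(out, Pod{Name: fmt.Sprintf("%s-%d", owner.Name, i), OwnerUID: owner.UID})
	}
	return out
}
```

Running it again after the Pods exist returns nothing, so repeated reconciles converge instead of piling up Pods.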

Re-running the Reconciler

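Besides running in response to events, Reconcile can also be re-run on demand, and arranging this in your implementation of Reconcile(context.Context, Request) (Result, error) is simple. One way is to set Result.Requeue to true in the return value. The request is then put back into the queue through the rate limiter, so Reconcile runs again after a backoff delay rather than on a fixed period. The other way is to set Result.RequeueAfter to a duration, and Reconcile is called again once that duration has elapsed. The requeue logic lives in the reconcileHandler method. For RequeueAfter, it first calls Queue.Forget(obj), which resets the rate limiter's backoff tracking for the item, and then re-adds the request with Queue.AddAfter. For Requeue, and for non-terminal errors, it re-adds the request with Queue.AddRateLimited. The relevant cases are: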

```go
switch {
case err != nil:
	if errors.Is(err, reconcile.TerminalError(nil)) {
		ctrlmetrics.TerminalReconcileErrors.WithLabelValues(c.Name).Inc()
	} else {
		c.Queue.AddRateLimited(req)
	}
	...
case result.RequeueAfter > 0: // requeue after the given delay
	log.V(5).Info(fmt.Sprintf("Reconcile done, requeueing after %s", result.RequeueAfter))
	// The result.RequeueAfter request will be lost, if it is returned
	// along with a non-nil error. But this is intended as
	// We need to drive to stable reconcile loops before queuing due
	// to result.RequestAfter
	c.Queue.Forget(obj)
	c.Queue.AddAfter(req, result.RequeueAfter)
	ctrlmetrics.ReconcileTotal.WithLabelValues(c.Name, labelRequeueAfter).Inc()
case result.Requeue: // requeue through the rate limiter (no fixed period)
	log.V(5).Info("Reconcile done, requeueing")
	c.Queue.AddRateLimited(req)
	ctrlmetrics.ReconcileTotal.WithLabelValues(c.Name, labelRequeue).Inc()
...
}
```

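The combined effect of Requeue, RequeueAfter and the returned error is shown below. An error wrapped with reconcile.TerminalError is the exception to the first row: it is counted in a metric but not requeued.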

| Requeue | RequeueAfter | Error | Result |
|---|---|---|---|
| any | any | !nil | Requeue with rate limiting. |
| true | 0 | nil | Requeue with rate limiting. |
| any | >0 | nil | Requeue after specified RequeueAfter. |
| false | 0 | nil | Do not requeue. |

How kubebuilder handles it

kubebuilder generates a template that implements the Reconciler interface in guestbook_controller.go:

```go
func (r *GuestbookReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
	_ = log.FromContext(ctx)

	// TODO(user): your logic here

	return ctrl.Result{}, nil
}
```

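So how is the Reconciler associated with the controller? As mentioned above, kubebuilder creates the controller and adds it to the manager through Complete, which is called from SetupWithManager. Complete takes a reconcile.Reconciler as its argument, and this is the entry point where the controller and the Reconciler get linked: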

```go
// Complete builds the Application Controller.
func (blder *Builder) Complete(r reconcile.Reconciler) error {
	_, err := blder.Build(r)
	return err
}
```

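From there, the Reconciler is passed along Builder.Build --> Builder.doController --> newController and finally reaches the controller constructor controller.New, which assigns it to the Controller.Do field. The controller built by controller.New (through NewUnmanaged) is shown below. Note that MakeQueue is set to a function that creates a workqueue. New events are buffered in that workqueue and later passed to Reconcile.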

```go
// Create controller with dependencies set
return &controller.Controller{
	Do: options.Reconciler,
	MakeQueue: func() workqueue.RateLimitingInterface {
		return workqueue.NewRateLimitingQueueWithConfig(options.RateLimiter, workqueue.RateLimitingQueueConfig{
			Name: name,
		})
	},
	MaxConcurrentReconciles: options.MaxConcurrentReconciles,
	CacheSyncTimeout:        options.CacheSyncTimeout,
	Name:                    name,
	LogConstructor:          options.LogConstructor,
	RecoverPanic:            options.RecoverPanic,
	LeaderElected:           options.NeedLeaderElection,
}, nil
```

As mentioned above, the controller invokes the Reconciler in response to events. How are those events delivered?

Look at the Controller's Start method, which Manager.Start calls. It uses processNextWorkItem to handle the events in the workqueue:

```go
func (c *Controller) Start(ctx context.Context) error {
	...
	c.Queue = c.MakeQueue() // create the workqueue via MakeQueue
	...
	wg := &sync.WaitGroup{}
	err := func() error {
		...
		for _, watch := range c.startWatches { // start watching the objects
			...
			if err := watch.src.Start(ctx, watch.handler, c.Queue, watch.predicates...); err != nil {
				return err
			}
		}
		...
		wg.Add(c.MaxConcurrentReconciles)
		for i := 0; i < c.MaxConcurrentReconciles; i++ { // process events concurrently
			go func() {
				defer wg.Done()
				for c.processNextWorkItem(ctx) {
				}
			}()
		}
		...
	}()
	...
}
```

processNextWorkItem follows the same pattern as the usual client-go workqueue loop: it takes an event object from the workqueue and passes it to reconcileHandler.

```go
func (c *Controller) processNextWorkItem(ctx context.Context) bool {
	obj, shutdown := c.Queue.Get() // take an object from the workqueue
	if shutdown {
		// Stop working
		return false
	}

	defer c.Queue.Done(obj)

	ctrlmetrics.ActiveWorkers.WithLabelValues(c.Name).Add(1)
	defer ctrlmetrics.ActiveWorkers.WithLabelValues(c.Name).Add(-1)

	c.reconcileHandler(ctx, obj)
	return true
}
```

From there, the call chain Controller.reconcileHandler --> Controller.Reconcile --> Controller.Do.Reconcile finally delivers the event to Reconcile. Your own Reconcile implementation was assigned to the controller's Do field.

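To sum up: kubebuilder first hands the Reconciler to the controller through SetupWithManager. When the Manager starts, it calls Controller.Start to start the controller. The controller then keeps taking objects from its workqueue and passing them to Reconcile.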

Where Controller Events Come From

The sections above covered how the controller handles events. But where do the events in the workqueue come from?

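Go back to Builder.Complete --> Builder.Build. As shown above, doController initializes the controller and links the Reconciler to it. Next comes doWatch, which registers the object types to watch together with their eventHandlers (handler.EnqueueRequestForObject for the For() object). It then starts watching those resources through the controller's Watch method.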

```go
func (blder *Builder) Build(r reconcile.Reconciler) (controller.Controller, error) {
	...
	// Set the ControllerManagedBy
	if err := blder.doController(r); err != nil { // initialize the controller
		return nil, err
	}

	// Set the Watch
	if err := blder.doWatch(); err != nil {
		return nil, err
	}

	return blder.ctrl, nil
}

func (blder *Builder) doWatch() error {
	// Reconcile type
	if blder.forInput.object != nil { // watch the object registered via For()
		obj, err := blder.project(blder.forInput.object, blder.forInput.objectProjection) // apply the projection (e.g. metadata-only)
		if err != nil {
			return err
		}
		src := source.Kind(blder.mgr.GetCache(), obj) // build the source for this resource type
		hdler := &handler.EnqueueRequestForObject{}    // create the eventHandler
		allPredicates := append(blder.globalPredicates, blder.forInput.predicates...)
		if err := blder.ctrl.Watch(src, hdler, allPredicates...); err != nil { // start watching the resource
			return err
		}
	}
	...
	for _, own := range blder.ownsInput { // watch objects registered via Owns()
		...
		if err := blder.ctrl.Watch(src, hdler, allPredicates...); err != nil { // start watching the resource
			return err
		}
		...
	}
	...
	for _, w := range blder.watchesInput { // watch objects registered via Watches()
		...
		if err := blder.ctrl.Watch(w.src, w.eventHandler, allPredicates...); err != nil { // start watching the resource
			return err
		}
		...
	}
	...
}
```

Watched objects come from three sources:

- For() and Owns(): objects registered with the For and Owns calls, for example:

```go
_ = ctrl.NewControllerManagedBy(mgr).
	For(&appsv1.ReplicaSet{}). // ReplicaSet is the Application API
	Owns(&corev1.Pod{}).       // ReplicaSet owns Pods created by it.
	Complete(&ReplicaSetReconciler{Client: manager.GetClient()})
```

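- Watches(): objects registered with Watches. Since controller-runtime v0.15, Watches takes the watched object directly instead of a source.Kind; use WatchesRawSource for a custom source. Here myNodeFilterFunc is a user-defined handler.MapFunc (not shown):

```go
_ = ctrl.NewControllerManagedBy(mgr).
	Watches(
		&v1core.Node{},
		handler.EnqueueRequestsFromMapFunc(myNodeFilterFunc),
		builder.WithPredicates(predicate.ResourceVersionChangedPredicate{}),
	).
	Complete(r)
```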
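For Watches, EnqueueRequestsFromMapFunc turns an event on one object, for example a Node, into reconcile requests for other objects. The sketch below models that idea with the standard library only. MapFunc here takes a string instead of the real (context.Context, client.Object) arguments, and the placement table in the usage example is hypothetical. In this sketch, duplicate requests produced for a single event are enqueued once.

```go
package main

// Request carries only a namespace/name key, like reconcile.Request.
type Request struct{ Namespace, Name string }

// MapFunc mirrors the idea of handler.MapFunc: one watched object in,
// zero or more reconcile requests out (simplified signature).
type MapFunc func(obj string) []Request

// EnqueueFromMapFunc returns an event callback that enqueues every
// request the map function produces, skipping duplicates per event.
func EnqueueFromMapFunc(fn MapFunc, enqueue func(Request)) func(obj string) {
	return func(obj string) {
		seen := map[Request]bool{}
		for _, r := range fn(obj) {
			if !seen[r] {
				seen[r] = true
				enqueue(r)
			}
		}
	}
}
```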

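Next, look at Controller.Watch. If the controller has already started, it calls src.Start directly, where src is the source built by source.Kind. That call ties together evthdler (&handler.EnqueueRequestForObject{}) and c.Queue, which supplies the Reconciler's requests. If the controller has not started yet, the watch is stored in c.startWatches and started later in Controller.Start, as shown earlier.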

```go
func (c *Controller) Watch(src source.Source, evthdler handler.EventHandler, prct ...predicate.Predicate) error {
	...
	return src.Start(c.ctx, evthdler, c.Queue, prct...)
}
```

Kind.Start picks the appropriate informer based on ks.Type and adds the event handler internal.EventHandler to it.

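If no Cache is specified, the Manager creates one by default during initialization. The Cache holds a mapping from GVK to cache.SharedIndexInformer. ks.cache.GetInformer extracts the object's GVK and returns the informer for it, creating the informer on first use. The informers in the Cache are started when Manager.Start runs.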

```go
func (ks *Kind) Start(ctx context.Context, handler handler.EventHandler, queue workqueue.RateLimitingInterface,
	prct ...predicate.Predicate) error {
	...
	go func() {
		...
		if err := wait.PollImmediateUntilWithContext(ctx, 10*time.Second, func(ctx context.Context) (bool, error) {
			// Lookup the Informer from the Cache and add an EventHandler which populates the Queue
			i, lastErr = ks.cache.GetInformer(ctx, ks.Type)
			...
			return true, nil
		}); err != nil {
			...
		}
		...
		_, err := i.AddEventHandler(NewEventHandler(ctx, queue, handler, prct).HandlerFuncs())
		...
	}()

	return nil
}
```
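Because the Cache keys informers by GVK, controllers that watch the same kind share a single informer, and each one just adds its own event handler. A stdlib-only sketch of that lookup follows. GVK mirrors schema.GroupVersionKind, while Informer and InformerCache are hypothetical simplifications with no list/watch and no indexers.

```go
package main

import "sync"

// GVK mirrors schema.GroupVersionKind.
type GVK struct{ Group, Version, Kind string }

// Informer is a hypothetical placeholder for a SharedIndexInformer: it
// only keeps handlers and fans events out to them.
type Informer struct {
	GVK      GVK
	mu       sync.Mutex
	handlers []func(key string)
}

func (i *Informer) AddEventHandler(h func(key string)) {
	i.mu.Lock()
	defer i.mu.Unlock()
	i.handlers = append(i.handlers, h)
}

// Emit delivers one observed change to every registered handler.
func (i *Informer) Emit(key string) {
	i.mu.Lock()
	hs := append([]func(string){}, i.handlers...)
	i.mu.Unlock()
	for _, h := range hs {
		h(key)
	}
}

// InformerCache models the gvk -> informer map: the first GetInformer for
// a GVK creates the informer, later calls reuse it.
type InformerCache struct {
	mu        sync.Mutex
	informers map[GVK]*Informer
}

func (c *InformerCache) GetInformer(gvk GVK) *Informer {
	c.mu.Lock()
	defer c.mu.Unlock()
	if c.informers == nil {
		c.informers = map[GVK]*Informer{}
	}
	if inf, ok := c.informers[gvk]; ok {
		return inf
	}
	inf := &Informer{GVK: gvk}
	c.informers[gvk] = inf
	return inf
}
```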

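NewEventHandler returns an internal.EventHandler. Its HandlerFuncs() method wraps OnAdd/OnUpdate/OnDelete in a cache.ResourceEventHandlerFuncs, which implements the ResourceEventHandler interface that SharedIndexInformer.AddEventHandler expects: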

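```go
// client-go v0.27+ signature; cache.ResourceEventHandlerFuncs adapts a
// plain AddFunc(obj) to OnAdd(obj, isInInitialList).
type ResourceEventHandler interface {
	OnAdd(obj interface{}, isInInitialList bool)
	OnUpdate(oldObj, newObj interface{})
	OnDelete(obj interface{})
}
```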

Here is how EventHandler adds an object observed by OnAdd to the queue:

```go
func (e EventHandler) OnAdd(obj interface{}) {
	... // build c, an event.CreateEvent wrapping obj, and check the predicates
	e.handler.Create(ctx, c, e.queue)
}
```

The e.handler above is the EnqueueRequestForObject eventHandler registered in doWatch. EnqueueRequestForObject.Create extracts the object's name and namespace and adds them to the queue:

```go
func (e *EnqueueRequestForObject) Create(ctx context.Context, evt event.CreateEvent, q workqueue.RateLimitingInterface) {
	...
	q.Add(reconcile.Request{NamespacedName: types.NamespacedName{
		Name:      evt.Object.GetName(),
		Namespace: evt.Object.GetNamespace(),
	}})
}
```
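Putting the adapter and the handler together: the informer calls OnAdd/OnUpdate/OnDelete, the adapter filters the event through predicates, and EnqueueRequestForObject enqueues only the namespace/name. This stdlib-only sketch simplifies heavily. EventAdapter, Object and Predicate are hypothetical, and only updates are filtered here, while the real adapter checks predicates for every event type. The resourceVersion check imitates ResourceVersionChangedPredicate, and a slice replaces the deduplicating workqueue.

```go
package main

// Object is a hypothetical stand-in carrying only what this sketch needs.
type Object struct{ Namespace, Name, ResourceVersion string }

// Request carries only the key, like reconcile.Request.
type Request struct{ Namespace, Name string }

// Predicate decides whether an update event should be enqueued.
type Predicate func(oldObj, newObj Object) bool

// EventAdapter models internal.EventHandler + EnqueueRequestForObject:
// informer callbacks in, namespace/name keys out.
type EventAdapter struct {
	Queue      []Request
	Predicates []Predicate
}

func (e *EventAdapter) enqueue(o Object) {
	e.Queue = append(e.Queue, Request{o.Namespace, o.Name})
}

func (e *EventAdapter) OnAdd(o Object) { e.enqueue(o) }

func (e *EventAdapter) OnUpdate(oldObj, newObj Object) {
	for _, p := range e.Predicates {
		if !p(oldObj, newObj) {
			return // filtered out, e.g. a resync with no real change
		}
	}
	e.enqueue(newObj)
}

func (e *EventAdapter) OnDelete(o Object) { e.enqueue(o) }
```

Whatever the event type, the queue receives the same kind of item, a bare key. This is why Reconcile cannot tell a create from a delete.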

That ties the whole kubebuilder flow together.

Differences from Using client-go

client-go

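When you need to work with Kubernetes resources, you usually write CRUD logic with client-go. Alternatively, you use the informer mechanism to watch for resource changes and handle them in OnAdd, OnUpdate, and OnDelete.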

kubebuilder Operator

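As the walkthrough above shows, an Operator generally deals with two things: the object and the resources it owns. Reconcilers hold the core logic, but they receive only the resource's name and namespace. They do not know which operation (create, update, or delete) happened, nor anything else about the resource. The point is that whenever a resource changes, its state is brought in line with the object's desired state.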

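kubebuilder, through controller-runtime, also provides a client library for CRUD operations on Kubernetes resources. In this case, however, the author recommends using client-go directly. For reference, the controller-runtime client is used like this: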

```go
package main

import (
	"context"

	corev1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/apis/meta/v1/unstructured"
	"k8s.io/apimachinery/pkg/runtime/schema"
	"sigs.k8s.io/controller-runtime/pkg/client"
)

var c client.Client

func main() {
	// Using a typed object.
	pod := &corev1.Pod{}
	// c is a created client.
	_ = c.Get(context.Background(), client.ObjectKey{
		Namespace: "namespace",
		Name:      "name",
	}, pod)

	// Using a unstructured object.
	u := &unstructured.Unstructured{}
	u.SetGroupVersionKind(schema.GroupVersionKind{
		Group:   "apps",
		Kind:    "Deployment",
		Version: "v1",
	})
	_ = c.Get(context.Background(), client.ObjectKey{
		Namespace: "namespace",
		Name:      "name",
	}, u)
}
```

This article is from cnblogs, author: charlieroro. Please credit the original link when reposting: https://www.cnblogs.com/charlieroro/p/15960829.html