Go Crawlers: Working with Images

1. Scraping images with a regular expression and downloading them synchronously

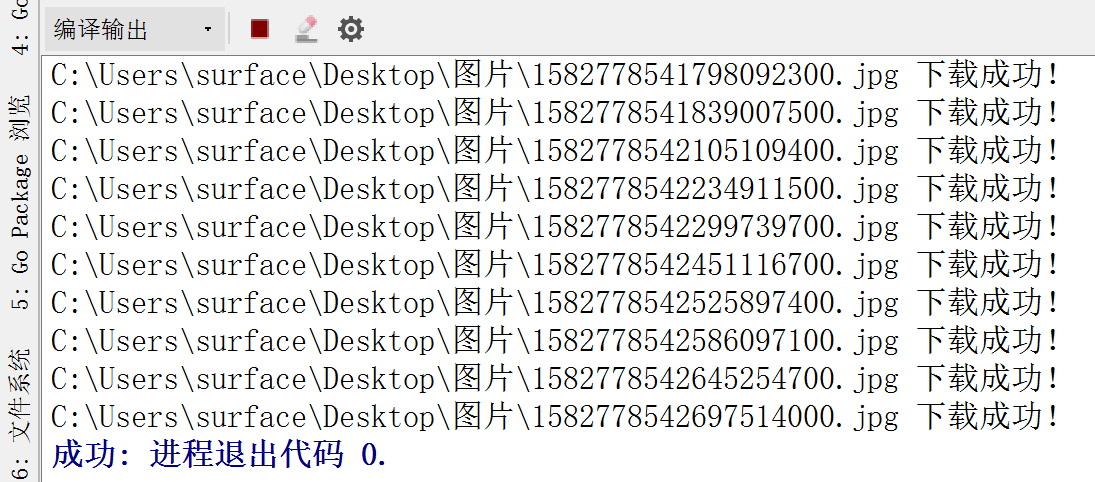

效果:

Code:

    package main

    import (
    	"fmt"
    	"io"
    	"net/http"
    	"os"
    	"regexp"
    	"strconv"
    	"time"
    )

    // Example of a tag to match:
    // <img src="https://7799520.oss-cn-hangzhou.aliyuncs.com/v2/img/woman3.jpg">
    var reImg = `<img[\s\S]+?src="([\s\S]+?)"`

    // GetHtml fetches the full HTML content of a page.
    func GetHtml(url string) (string, error) {
    	resp, err := http.Get(url)
    	if err != nil {
    		return "", err
    	}
    	defer resp.Body.Close()

    	body, err := io.ReadAll(resp.Body)
    	if err != nil {
    		return "", err
    	}
    	return string(body), nil
    }

    // GetPageImgurls returns every image link found on the page.
    func GetPageImgurls(url string) []string {
    	html, err := GetHtml(url)
    	if err != nil {
    		fmt.Println("failed to fetch page:", err)
    		return nil
    	}

    	re := regexp.MustCompile(reImg)
    	rets := re.FindAllStringSubmatch(html, -1)
    	fmt.Println("images found:", len(rets))

    	imgUrls := make([]string, 0, len(rets))
    	for _, ret := range rets {
    		imgUrls = append(imgUrls, ret[1]) // ret[1] is the captured src value
    	}
    	return imgUrls
    }

    // DownloadImg downloads a single image synchronously.
    func DownloadImg(url string) {
    	resp, err := http.Get(url)
    	if err != nil {
    		fmt.Println(url, "download failed:", err)
    		return
    	}
    	defer resp.Body.Close()

    	data, err := io.ReadAll(resp.Body)
    	if err != nil {
    		fmt.Println(url, "download failed:", err)
    		return
    	}

    	// Name the file after the current timestamp in nanoseconds.
    	// The target directory must already exist.
    	filename := `C:\Users\surface\Desktop\图片\` + strconv.FormatInt(time.Now().UnixNano(), 10) + ".jpg"

    	if err := os.WriteFile(filename, data, 0644); err != nil {
    		fmt.Println(filename, "download failed:", err)
    	} else {
    		fmt.Println(filename, "downloaded successfully!")
    	}
    }

    func main() {
    	imgUrls := GetPageImgurls("http://www.7799520.com/")
    	for _, iu := range imgUrls {
    		DownloadImg(iu) // synchronous download
    	}
    }
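To see what the `reImg` pattern actually captures without hitting the network, the following is a minimal sketch that runs the same pattern against an in-memory HTML snippet. The helper name `extractImgURLs` and the `example.com` URLs are made up for illustration; they are not part of the original program.

```go
package main

import (
	"fmt"
	"regexp"
)

// reImg mirrors the pattern from the article: after each "<img" it lazily
// skips ahead to the next src="..." and captures the attribute value.
var reImg = regexp.MustCompile(`<img[\s\S]+?src="([\s\S]+?)"`)

// extractImgURLs returns every captured src value found in html.
// (Hypothetical helper, introduced for this sketch only.)
func extractImgURLs(html string) []string {
	matches := reImg.FindAllStringSubmatch(html, -1)
	urls := make([]string, 0, len(matches))
	for _, m := range matches {
		urls = append(urls, m[1]) // m[0] is the whole match, m[1] the capture group
	}
	return urls
}

func main() {
	// Made-up sample markup; the URLs are placeholders.
	sample := `<div>
<img src="https://example.com/a.jpg">
<img class="avatar" src="https://example.com/b.png" alt="b">
<p>no image here</p>
</div>`

	for i, u := range extractImgURLs(sample) {
		fmt.Println(i, u)
	}
	// Prints:
	// 0 https://example.com/a.jpg
	// 1 https://example.com/b.png
}
```

Two caveats with this pattern: it only recognizes double-quoted `src` values, and because `[\s\S]` also matches `>` and newlines, an `<img>` tag that has no `src` attribute can cause the match to run on into a later tag. For pages you do not control, an HTML parser is likely to be more robust than a regular expression.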

2. Scraping images with a regular expression and downloading them asynchronously

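Code: the first draft of this program did not run successfully. The overall structure was sound, but two adjustments were needed. `downloadWG.Add(1)` has to be called before each goroutine starts, otherwise `Done` panics with a negative WaitGroup counter. `downloadWG.Wait()` has to be called once in `main` after all downloads have been launched, because waiting inside `DownloadImgAsync` would make the downloads run one at a time.

    package main

    import (
    	"fmt"
    	"io"
    	"net/http"
    	"os"
    	"regexp"
    	"strconv"
    	"sync"
    	"time"
    )

    var (
    	ChSem      = make(chan int, 5) // semaphore: at most 5 concurrent downloads
    	downloadWG sync.WaitGroup      // waits for all downloads to finish

    	// Example of a tag to match:
    	// <img src="https://7799520.oss-cn-hangzhou.aliyuncs.com/v2/img/woman3.jpg">
    	reImg = `<img[\s\S]+?src="([\s\S]+?)"`
    )

    // GetHtml fetches the full HTML content of a page.
    func GetHtml(url string) (string, error) {
    	resp, err := http.Get(url)
    	if err != nil {
    		return "", err
    	}
    	defer resp.Body.Close()

    	body, err := io.ReadAll(resp.Body)
    	if err != nil {
    		return "", err
    	}
    	return string(body), nil
    }

    // GetPageImgurls returns every image link found on the page.
    func GetPageImgurls(url string) []string {
    	html, err := GetHtml(url)
    	if err != nil {
    		fmt.Println("failed to fetch page:", err)
    		return nil
    	}

    	re := regexp.MustCompile(reImg)
    	rets := re.FindAllStringSubmatch(html, -1)
    	fmt.Println("images found:", len(rets))

    	imgUrls := make([]string, 0, len(rets))
    	for _, ret := range rets {
    		imgUrls = append(imgUrls, ret[1]) // ret[1] is the captured src value
    	}
    	return imgUrls
    }

    // DownloadImg downloads a single image and writes it to disk.
    func DownloadImg(url string) {
    	resp, err := http.Get(url)
    	if err != nil {
    		fmt.Println(url, "download failed:", err)
    		return
    	}
    	defer resp.Body.Close()

    	data, err := io.ReadAll(resp.Body)
    	if err != nil {
    		fmt.Println(url, "download failed:", err)
    		return
    	}

    	// Name the file after the current timestamp in nanoseconds.
    	// The target directory must already exist.
    	filename := `C:\Users\surface\Desktop\图片\` + strconv.FormatInt(time.Now().UnixNano(), 10) + ".jpg"

    	if err := os.WriteFile(filename, data, 0644); err != nil {
    		fmt.Println(filename, "download failed:", err)
    	} else {
    		fmt.Println(filename, "downloaded successfully!")
    	}
    }

    // DownloadImgAsync downloads a single image in its own goroutine.
    func DownloadImgAsync(url string) {
    	downloadWG.Add(1) // register the task before the goroutine starts
    	go func() {
    		defer downloadWG.Done()
    		ChSem <- 123 // acquire a slot; blocks while 5 downloads are running
    		DownloadImg(url)
    		<-ChSem // release the slot
    	}()
    }

    func main() {
    	imgUrls := GetPageImgurls("http://www.7799520.com/")
    	for _, iu := range imgUrls {
    		DownloadImgAsync(iu)
    	}
    	downloadWG.Wait() // block until every download has finished
    }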
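The concurrency pattern behind the asynchronous version (a buffered channel acting as a semaphore, plus a `sync.WaitGroup`) can be tried in isolation. The following is a minimal sketch in which the download is replaced by a short sleep; the function name `runBounded` and the peak-concurrency bookkeeping are assumptions added for illustration, not part of the original program.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// runBounded calls work(i) for every i in [0, n), with at most limit calls
// running at the same time. It returns the highest concurrency it observed.
// (Hypothetical helper, introduced for this sketch only.)
func runBounded(n, limit int, work func(i int)) int64 {
	sem := make(chan struct{}, limit) // buffered channel used as a semaphore
	var wg sync.WaitGroup
	var running, peak int64

	for i := 0; i < n; i++ {
		wg.Add(1) // register the task BEFORE starting its goroutine
		go func(i int) {
			defer wg.Done()
			sem <- struct{}{}        // acquire a slot; blocks while limit tasks run
			defer func() { <-sem }() // release the slot when the task ends

			// Track how many tasks are inside the semaphore right now.
			cur := atomic.AddInt64(&running, 1)
			for {
				old := atomic.LoadInt64(&peak)
				if cur <= old || atomic.CompareAndSwapInt64(&peak, old, cur) {
					break
				}
			}
			work(i)
			atomic.AddInt64(&running, -1)
		}(i)
	}

	wg.Wait() // wait once, after every task has been launched
	return atomic.LoadInt64(&peak)
}

func main() {
	peak := runBounded(20, 5, func(i int) {
		time.Sleep(10 * time.Millisecond) // stand-in for an HTTP download
	})
	fmt.Println("peak concurrency:", peak) // never exceeds the limit of 5
}
```

The two details that matter are the position of `wg.Add(1)` (before the `go` statement, so the counter can never go negative) and the position of `wg.Wait()` (outside the launcher, so all tasks are started before anything blocks).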

That's all.