Deploying an HTTP service on k8s

1. Build the http.server image and push it to Docker Hub. The image I pushed is chengfengsunce/httpserver:0.0.1.

2. Create the pods through a Deployment. deployment.yaml is as follows:

```json
{
  "apiVersion": "apps/v1",
  "kind": "Deployment",
  "metadata": {
    "labels": {
      "app": "httpserver"
    },
    "name": "httpserver"
  },
  "spec": {
    "progressDeadlineSeconds": 600,
    "replicas": 3,
    "revisionHistoryLimit": 10,
    "selector": {
      "matchLabels": {
        "app": "httpserver"
      }
    },
    "strategy": {
      "rollingUpdate": {
        "maxSurge": "25%",
        "maxUnavailable": "25%"
      },
      "type": "RollingUpdate"
    },
    "template": {
      "metadata": {
        "creationTimestamp": null,
        "labels": {
          "app": "httpserver"
        }
      },
      "spec": {
        "containers": [
          {
            "image": "chengfengsunce/httpserver:0.0.1",
            "imagePullPolicy": "IfNotPresent",
            "livenessProbe": {
              "failureThreshold": 3,
              "httpGet": {
                "path": "/healthz",
                "port": 8080,
                "scheme": "HTTP"
              },
              "initialDelaySeconds": 5,
              "periodSeconds": 10,
              "successThreshold": 1,
              "timeoutSeconds": 1
            },
            "name": "httpserver",
            "readinessProbe": {
              "failureThreshold": 3,
              "httpGet": {
                "path": "/healthz",
                "port": 8080,
                "scheme": "HTTP"
              },
              "initialDelaySeconds": 5,
              "periodSeconds": 10,
              "successThreshold": 1,
              "timeoutSeconds": 1
            },
            "resources": {
              "limits": {
                "cpu": "200m",
                "memory": "100Mi"
              },
              "requests": {
                "cpu": "20m",
                "memory": "20Mi"
              }
            },
            "terminationMessagePath": "/dev/termination-log",
            "terminationMessagePolicy": "File"
          }
        ],
        "dnsPolicy": "ClusterFirst",
        "imagePullSecrets": [
          {
            "name": "cloudnative"
          }
        ],
        "restartPolicy": "Always",
        "schedulerName": "default-scheduler",
        "securityContext": {},
        "terminationGracePeriodSeconds": 30
      }
    }
  }
}
```
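With `replicas: 3` and both `maxSurge` and `maxUnavailable` set to `25%`, the percentages have to be turned into whole pods. The Deployment controller rounds `maxSurge` up and `maxUnavailable` down, so this rollout may run at most one extra pod and should keep at least three pods available. The Go sketch below reproduces only that arithmetic; `rollingBounds` is a hypothetical helper, not a Kubernetes API.

```go
package main

import "fmt"

// rollingBounds converts percentage maxSurge/maxUnavailable into pod counts.
// Hypothetical helper mirroring the Deployment controller's rounding:
// maxSurge rounds up, maxUnavailable rounds down.
func rollingBounds(replicas, surgePct, unavailablePct int) (surge, unavailable int) {
	surge = (replicas*surgePct + 99) / 100        // ceil(replicas * surgePct / 100)
	unavailable = replicas * unavailablePct / 100 // floor(replicas * unavailablePct / 100)
	// If both round to 0 the rollout could never make progress,
	// so the controller falls back to allowing 1 unavailable pod.
	if surge == 0 && unavailable == 0 {
		unavailable = 1
	}
	return surge, unavailable
}

func main() {
	replicas := 3 // spec.replicas in deployment.yaml
	surge, unavailable := rollingBounds(replicas, 25, 25)
	fmt.Printf("maxSurge=%d maxUnavailable=%d\n", surge, unavailable)
	fmt.Printf("during a rollout: at most %d pods, at least %d available\n",
		replicas+surge, replicas-unavailable)
}
```

For 3 replicas this gives a surge of 1 and 0 unavailable, so a new pod must become ready before an old one is removed.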

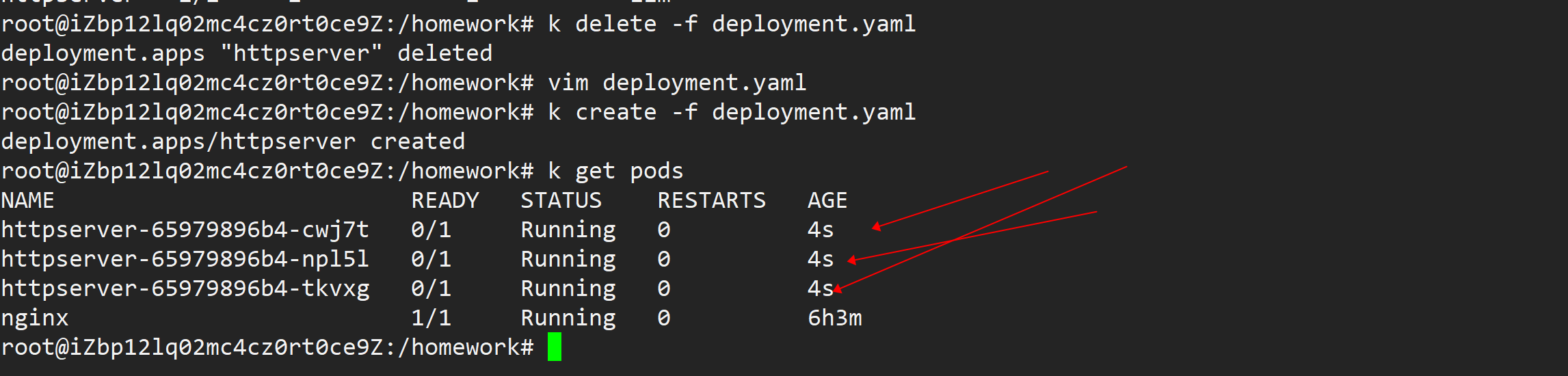
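The `resources` block sets requests (`20m` CPU, `20Mi` memory) lower than limits (`200m` CPU, i.e. 0.2 core, and `100Mi` memory). The scheduler places the pod based on its requests, while the limits are enforced at runtime (CPU is throttled; exceeding the memory limit gets the container OOM-killed). Because requests and limits differ, Kubernetes assigns this pod the `Burstable` QoS class. The sketch below applies the QoS rules to a single container; the `Resources` type and `qosClass` function are hypothetical, and quantities are compared as plain strings, so `1` and `1000m` would wrongly count as different.

```go
package main

import "fmt"

// Resources is a hypothetical, simplified view of one container's
// requests and limits, with quantities kept as strings such as "200m" or "100Mi".
type Resources struct {
	Requests map[string]string
	Limits   map[string]string
}

// qosClass applies the Kubernetes QoS rules to a single-container pod,
// looking only at cpu and memory.
func qosClass(r Resources) string {
	if len(r.Requests) == 0 && len(r.Limits) == 0 {
		return "BestEffort" // nothing requested or limited
	}
	for _, name := range []string{"cpu", "memory"} {
		limit, hasLimit := r.Limits[name]
		if !hasLimit {
			return "Burstable" // Guaranteed needs a limit for both cpu and memory
		}
		request, hasRequest := r.Requests[name]
		if !hasRequest {
			request = limit // an unset request defaults to the limit
		}
		if request != limit { // string comparison: "1" vs "1000m" would differ
			return "Burstable"
		}
	}
	return "Guaranteed"
}

func main() {
	fromYAML := Resources{
		Requests: map[string]string{"cpu": "20m", "memory": "20Mi"},
		Limits:   map[string]string{"cpu": "200m", "memory": "100Mi"},
	}
	fmt.Println(qosClass(fromYAML)) // Burstable
}
```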

Deploy it with the YAML above by running `kubectl create -f deployment.yaml`.

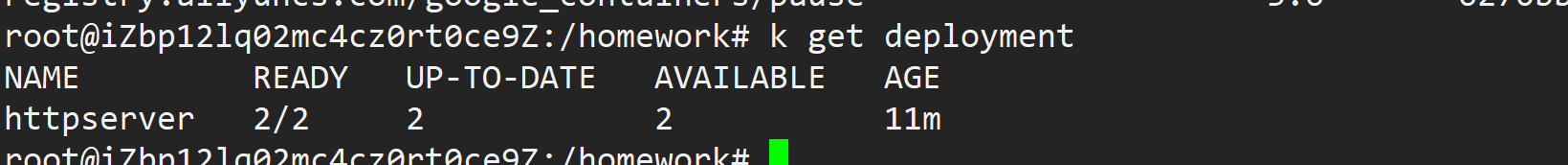

Once `kubectl create -f <file>.yaml` has run, check the Deployment (`k` here is shorthand for `kubectl`):

```
root@iZbp12lq02mc4cz0rt0ce9Z:/homework# k get deployment
```
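The READY column that `get deployment` reports counts pods whose readiness probe passes. Both probes in deployment.yaml call `GET /healthz` on port 8080: the first check runs after `initialDelaySeconds: 5`, then every `periodSeconds: 10`, and after `failureThreshold: 3` consecutive failures a failing liveness probe makes the kubelet restart the container, while a failing readiness probe takes the pod out of the Service endpoints. Below is a rough timing model in Go that ignores `timeoutSeconds` and scheduling jitter; `ProbeConfig` and `failedAt` are hypothetical names.

```go
package main

import "fmt"

// ProbeConfig holds the probe fields from deployment.yaml (hypothetical type).
type ProbeConfig struct {
	InitialDelaySeconds int
	PeriodSeconds       int
	FailureThreshold    int
}

// failedAt returns the approximate time (seconds after container start) at which
// the probe is declared failed: the FailureThreshold-th consecutive failure.
// results[i] is the outcome of probe i (true = HTTP status 200-399).
// With successThreshold 1, a single success resets the failure count.
func failedAt(cfg ProbeConfig, results []bool) (int, bool) {
	consecutive := 0
	for i, ok := range results {
		if ok {
			consecutive = 0
			continue
		}
		consecutive++
		if consecutive >= cfg.FailureThreshold {
			return cfg.InitialDelaySeconds + i*cfg.PeriodSeconds, true
		}
	}
	return 0, false
}

func main() {
	cfg := ProbeConfig{InitialDelaySeconds: 5, PeriodSeconds: 10, FailureThreshold: 3}
	// Healthy once, then /healthz starts failing.
	if t, failed := failedAt(cfg, []bool{true, false, false, false}); failed {
		fmt.Printf("probe declared failed at ~%ds\n", t) // ~35s
	}
}
```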

3. Deploy a Service as the proxy

```yaml
apiVersion: v1
kind: Service              # resource type
metadata:
  name: product-server     # name, similar to a service name defined in docker-compose
spec:
  ports:
    - name: psvc
      port: 80             # Service port, reachable only inside the cluster, not from outside
      targetPort: 80       # container port
    - name: grpc
      port: 81
      targetPort: 81
  selector:
    app: product-server    # label matched against the pod labels from the Deployment; the relationship is many-to-many
  type: ClusterIP          # internal network only
```
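A Service finds its pods through `selector`: a pod is selected when every key/value pair in the selector also appears in the pod's labels, and any extra pod labels are ignored. That is also why the relationship is many-to-many: one pod can carry labels matched by several Services, and one Service selects many pods. A Go sketch of that equality-based rule follows (`selectorMatches` is a hypothetical helper). By the same rule, `app: product-server` would not select pods labeled `app: httpserver` from the Deployment in step 2.

```go
package main

import "fmt"

// selectorMatches reports whether a Service selector selects a pod.
// Every selector key must exist on the pod with the same value;
// extra pod labels do not matter.
func selectorMatches(selector, podLabels map[string]string) bool {
	// A Service with no selector gets no endpoints automatically.
	if len(selector) == 0 {
		return false
	}
	for key, want := range selector {
		if got, ok := podLabels[key]; !ok || got != want {
			return false
		}
	}
	return true
}

func main() {
	selector := map[string]string{"app": "product-server"}
	pod := map[string]string{"app": "product-server", "pod-template-hash": "599cfd85cc"}
	fmt.Println(selectorMatches(selector, pod))                                   // true
	fmt.Println(selectorMatches(selector, map[string]string{"app": "httpserver"})) // false
}
```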

Create it with `kubectl create -f <file>.yaml`, then check the Service:

```
root@kubernetes-master:/usr/local/k8s-test01/product# kubectl get service
NAME             TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                          AGE
kubernetes       ClusterIP      10.96.0.1       <none>        443/TCP                          3d15h
mssql            LoadBalancer   10.104.37.195   <pending>     1433:31227/TCP                   26h
product-server   ClusterIP      10.107.6.57     <none>        80/TCP,81/TCP                    6h10m
rabmq            NodePort       10.102.48.159   <none>        15672:31567/TCP,5672:30567/TCP   13h
root@kubernetes-master:/usr/local/k8s-test01/product#
```

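The Deployment and Service definitions can live in a single YAML file, separated by `---`; they are kept in separate files here only to make the steps easier to follow.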

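That's it: productservice is deployed. However, it cannot be reached from outside the cluster (the Service is of type ClusterIP), so we need an entry gateway. Here we start with ingress-nginx-controller.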

Deploying ingress

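1. Install ingress-nginx. I used 0.30.0, the latest version at the time. Annoyingly, the download URL was blocked, so I ended up finding the install manifest in the project's GitHub repository; it is really just a single YAML file.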

```yaml
# other ...
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          # ......
```

Install ingress-nginx with `kubectl create -f ingress-nginx.yaml`, then check it:

```
root@kubernetes-master:/usr/local/k8s-test01/product# kubectl get pods -n ingress-nginx
NAME                                        READY   STATUS    RESTARTS   AGE
nginx-ingress-controller-77db54fc46-tx6pf   1/1     Running   5          40h
root@kubernetes-master:/usr/local/k8s-test01/product#
```

Next, configure and publish the Ingress for the ingress-nginx gateway.

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: nginx-web
  annotations: # extra settings; authentication/authorization can be configured here
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  # routing rules
  rules:
    # host name: must be a domain name; map it in the hosts file on the host machine
    - host: product.com
      http:
        paths:
          - path: /
            backend:
              # name of the backend Service, matching the Service deployed above
              serviceName: product-server
              # port of the backend Service, matching the Service deployed above
              servicePort: 80
          - path: /grpc
            backend:
              # name of the backend Service, matching the Service deployed above
              serviceName: product-server
              # port of the backend Service, matching the Service deployed above
              servicePort: 81
```
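Conceptually, the controller first matches the request's `Host` header against `host`, then picks the path rule with the longest matching prefix, so `/grpc/...` goes to port 81 and everything else under `/` goes to port 80. The Go sketch below models that with element-wise prefix matching as in `pathType: Prefix` (`/grpc` matches `/grpc/x` but not `/grpcx`). It is a simplified model, not ingress-nginx's implementation; it ignores the `rewrite-target` annotation, and `Backend`, `Rule`, and `route` are hypothetical names.

```go
package main

import (
	"fmt"
	"strings"
)

// Backend is the Service name and port an Ingress path forwards to.
type Backend struct {
	Service string
	Port    int
}

// Rule is one host entry with its path -> backend table.
type Rule struct {
	Host  string
	Paths map[string]Backend
}

// matchesPrefix does element-wise prefix matching:
// "/grpc" matches "/grpc" and "/grpc/x" but not "/grpcx"; "/" matches everything.
func matchesPrefix(prefix, path string) bool {
	p := strings.TrimSuffix(prefix, "/")
	return path == p || strings.HasPrefix(path, p+"/")
}

// route picks the backend for a request: exact host match, then longest matching path.
func route(rules []Rule, host, path string) (Backend, bool) {
	for _, r := range rules {
		if r.Host != host {
			continue
		}
		var best Backend
		bestLen := -1
		for prefix, b := range r.Paths {
			if matchesPrefix(prefix, path) && len(prefix) > bestLen {
				best, bestLen = b, len(prefix)
			}
		}
		return best, bestLen >= 0
	}
	return Backend{}, false
}

func main() {
	rules := []Rule{{
		Host: "product.com",
		Paths: map[string]Backend{
			"/":     {Service: "product-server", Port: 80},
			"/grpc": {Service: "product-server", Port: 81},
		},
	}}
	for _, path := range []string{"/api/values", "/grpc/Ping"} {
		b, ok := route(rules, "product.com", path)
		fmt.Println(path, "->", b.Service, b.Port, ok)
	}
}
```

Choosing the longest prefix (rather than the first listed rule) is what keeps the catch-all `/` from swallowing `/grpc` traffic.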

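Deploy it with `kubectl create -f productservice-ingress.yaml`, then check the Ingress. (The `networking.k8s.io/v1beta1` Ingress API was removed in Kubernetes 1.22; on newer clusters use `networking.k8s.io/v1`, where every path needs a `pathType` and the backend is written as `service.name` and `service.port.number`.)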

```
root@kubernetes-master:/usr/local/k8s-test01/product# kubectl get ingress
NAME        CLASS    HOSTS         ADDRESS   PORTS   AGE
nginx-web   <none>   product.com             80      8h
```

I won't paste the mssql and rabbitmq deployments here. Next, let's visit the product.com domain to check whether the deployment works.

Let's try a GET endpoint and see whether it responds (see the screenshot).

That completes the service deployment. A brief summary:

1. This is a test environment, so neither the master nor ingress is highly available; I'll set that up and update this post when I have time.

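2. External requests hit the ingress-nginx domain; in production this domain would certainly resolve to a public address. Ingress handles authentication and authorization, and legitimate requests are routed by path to the corresponding backend Service. If one ingress node goes down, keepalived moves the VIP to another (backup) node, which gives ingress high availability.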

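3. The Service proxy forwards each request to a container in one of the pods whose labels match its selector (in kube-proxy's iptables mode, the default on Linux, the pick is random). If a container exits, the kubelet restarts it in place (with `restartPolicy: Always` this happens regardless of the exit code); if a pod is deleted or its node fails, the Deployment, through its ReplicaSet, starts a replacement pod. Let's run an experiment: delete one pod and see what happens.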
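On the "random" part of point 3: in kube-proxy's iptables mode, a Service with n ready endpoints becomes a chain of rules in which rule i (counting from 0) matches with probability 1/(n-i), and the last rule always matches. Chaining those conditional probabilities gives every endpoint the same 1/n share. The Go sketch below only does that arithmetic; it does not generate real iptables rules, and both function names are hypothetical.

```go
package main

import "fmt"

// ruleProbabilities returns the match probability of each rule in the chain
// for n endpoints: rule i matches with probability 1/(n-i), so the last is 1.
func ruleProbabilities(n int) []float64 {
	probs := make([]float64, n)
	for i := range probs {
		probs[i] = 1 / float64(n-i)
	}
	return probs
}

// endpointShares gives the overall chance each endpoint is picked:
// rule i is reached only if every earlier rule missed.
func endpointShares(probs []float64) []float64 {
	shares := make([]float64, len(probs))
	reach := 1.0 // probability that evaluation gets to rule i
	for i, p := range probs {
		shares[i] = reach * p
		reach *= 1 - p
	}
	return shares
}

func main() {
	probs := ruleProbabilities(3)
	fmt.Printf("rule probabilities: %.4f\n", probs)
	fmt.Printf("endpoint shares:    %.4f\n", endpointShares(probs))
}
```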

```
root@kubernetes-master:/usr/local/k8s-test01/product# kubectl get pods
NAME                              READY   STATUS    RESTARTS   AGE
mssql-59bd4dc6df-xzxc2            1/1     Running   5          27h
product-server-599cfd85cc-2q7zx   1/1     Running   0          6s
product-server-599cfd85cc-ppmhx   1/1     Running   0          6h51m
rabmq-7c9748f876-9msjg            1/1     Running   0          14h
rabmq-7c9748f876-lggh6            1/1     Running   0          14h
```

We first list all pods in the `default` namespace, then delete one and see whether a new one comes up. Run the delete command:

```
kubectl delete pods product-server-599cfd85cc-ppmhx
```

Then check the pods again right away. Be quick, or the old pod will have finished terminating:

```
root@kubernetes-master:/usr/local/k8s-test01/product# kubectl get pods
NAME                              READY   STATUS        RESTARTS   AGE
mssql-59bd4dc6df-xzxc2            1/1     Running       5          27h
product-server-599cfd85cc-2q7zx   1/1     Running       0          2m8s
product-server-599cfd85cc-9s497   1/1     Running       0          13s
product-server-599cfd85cc-ppmhx   0/1     Terminating   0          6h53m
rabmq-7c9748f876-9msjg            1/1     Running       0          14h
rabmq-7c9748f876-lggh6            1/1     Running       0          14h
```

See? The ppmhx pod is terminating, and a new pod (9s497) was created immediately.
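What happened is the ReplicaSet behind the Deployment reconciling: a pod marked for deletion (the `Terminating` one) no longer counts as active, so the controller sees one pod fewer than desired and creates a replacement without waiting for the old pod to disappear. Below is a minimal Go sketch of that counting step using the product-server pods from the output (two replicas, judging by the listing). The `Pod` type and `podsToCreate` are hypothetical and leave out everything else the real controller does.

```go
package main

import "fmt"

// Pod is a minimal, hypothetical view of a pod for this sketch.
type Pod struct {
	Name        string
	Terminating bool // true once deletion has started (deletionTimestamp is set)
}

// podsToCreate returns how many pods must be created to reach the desired count.
// Terminating pods are not counted as active.
func podsToCreate(desired int, pods []Pod) int {
	active := 0
	for _, p := range pods {
		if !p.Terminating {
			active++
		}
	}
	if missing := desired - active; missing > 0 {
		return missing
	}
	return 0
}

func main() {
	// product-server pods right after `kubectl delete pods ...-ppmhx`.
	afterDelete := []Pod{
		{Name: "product-server-599cfd85cc-2q7zx"},
		{Name: "product-server-599cfd85cc-ppmhx", Terminating: true},
	}
	fmt.Println("to create:", podsToCreate(2, afterDelete)) // to create: 1
}
```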

Programmers of the world, unite!
