docker:搭建ELK 开源日志分析系统

ELK 是由三部分组成的一套日志分析系统,

Elasticsearch: 基于json分析搜索引擎,Elasticsearch是个开源分布式搜索引擎,它的特点有:分布式,零配置,自动发现,索引自动分片,

索引副本机制,restful风格接口,多数据源,自动搜索负载等。

Logstash:动态数据收集管道,Logstash是一个完全开源的工具,它可以对你的日志进行收集、分析,并将其存储供以后使用

Kibana:可视化视图,将elasticsearh所收集的data通过视图展现。kibana 是一个开源和免费的工具,它可以为 Logstash 和

ElasticSearch 提供的日志分析友好的 Web 界面,可以帮助您汇总、分析和搜索重要数据日志。

一、使用docker集成镜像

安装docker

elk集成镜像包 名字是 sebp/elk

1.安装 docke、启动

yum install docke

service docker start

2.下载 sebp/elk

docker pull sebp/elk

无法下载、报错 :

unauthorized: authentication required

这是国外网络的问题

解决1 用网易镜像

vim /etc/docker/daemon.json 这个json文件不存在的,不需要担心,直接编辑

把下面的贴进去,保存,重启即可

{

"registry-mirrors": [ "http://hub-mirror.c.163.com"]

}

# service docker restart

没效果,还是下不来

解决2 用阿里云镜像加速

注册一个阿里云账号,登陆

到这里复制自己的加速地址

然后

安装/升级你的Docker客户端

- 推荐安装

1.10.0以上版本的Docker客户端,参考文档 docker-ce

如何配置镜像加速器

-

针对Docker客户端版本大于1.10.0的用户

您可以通过修改daemon配置文件

/etc/docker/daemon.json来使用加速器:sudo mkdir -p /etc/docker sudo tee /etc/docker/daemon.json <<-'EOF' { "registry-mirrors": ["https://34sii7qf.mirror.aliyuncs.com"] } EOF sudo systemctl daemon-reload sudo systemctl restart docker

下载:

# docker pull sebp/elk

……

617ab16bcfa1: Pull complete

Digest: sha256:b6b8dd20b1a9aaf47b11fe9c66395a462f5e65c50dcff725e9bf83576d4ed241

Status: Downloaded newer image for docker.io/sebp/elk:latest

查看镜像:

[root@bogon docker]# docker images

REPOSITORY TAG IMAGEID CREATED SIZE

docker.io/sebp/elk latest 4b52312ebe8d 12 days ago 1.15 GB

3.启动镜像

# docker run -p 5601:5601 -p 9200:9200 -p 5044:5044 -it --name elk sebp/elk

报错:

Couln't start Elasticsearch. Exiting.

Elasticsearch log follows below.

原因:

waiting for Elasticsearch to be up (xx/30) counter goes up to 30 and the container exits with Couln't start Elasticsearch. Exiting. and Elasticsearch's logs are dumped, then read the recommendations in the logs and consider that they must be applied.

In particular, in case (1) above, the message max virtual memory areas vm.max_map_count [65530] likely too low, increase to at least [262144]means that the host's limits on mmap counts must be set to at least 262144.

Docker至少得分配3GB的内存;

Elasticsearch至少需要单独2G的内存;

vm.max_map_count至少需要262144,修改vm.max_map_count 参数

解决:

# vi /etc/sysctl.conf

末尾添加一行

vm.max_map_count=262144

查看结果

# sysctl -p

vm.max_map_count = 262144

重新启动:

# docker run -p 5601:5601 -p 9200:9200 -p 5044:5044 -it --name elk sebp/elk

/usr/bin/docker-current: Error response from daemon: Conflict. The container name "/elk" is already in use by container ece053f704db663e03355a679e25ec732bd08668dae1a87a89567e0e7c950749. You have to remove (or rename) that container to be able to reuse that name..

See '/usr/bin/docker-current run --help'.

[root@bogon ~]# docker run -p 5601:5601 -p 9200:9200 -p 5044:5044 -it --name elk123 sebp/elk

/usr/bin/docker-current: Error response from daemon: Conflict. The container name "/elk123" is already in use by container caf492e3acff7506c5e70b896bd82a33d757816ca3f55911a2aa9aef4cd74670. You have to remove (or rename) that container to be able to reuse that name..

See '/usr/bin/docker-current run --help'.

[root@bogon ~]# docker run -p 5601:5601 -p 9200:9200 -p 5044:5044 -it --name elk1 sebp/elk

* Starting periodic command scheduler cron [ OK ]

* Starting Elasticsearch Server [ OK ]

waiting for Elasticsearch to be up (1/30)

waiting for Elasticsearch to be up (2/30)

waiting for Elasticsearch to be up (3/30)

waiting for Elasticsearch to be up (4/30)

waiting for Elasticsearch to be up (5/30)

waiting for Elasticsearch to be up (6/30)

waiting for Elasticsearch to be up (7/30)

waiting for Elasticsearch to be up (8/30)

waiting for Elasticsearch to be up (9/30)

waiting for Elasticsearch to be up (10/30)

Waiting for Elasticsearch cluster to respond (1/30)

logstash started.

* Starting Kibana5 [ OK ]

==> /var/log/elasticsearch/elasticsearch.log <==

[2018-04-03T06:58:14,192][INFO ][o.e.d.DiscoveryModule ] [JI2Uv6k] using discovery type [zen]

[2018-04-03T06:58:15,132][INFO ][o.e.n.Node ] initialized

[2018-04-03T06:58:15,132][INFO ][o.e.n.Node ] [JI2Uv6k] starting ...

[2018-04-03T06:58:15,358][INFO ][o.e.t.TransportService ] [JI2Uv6k] publish_address {172.17.0.2:9300}, bound_addresses {[::]:9300}

[2018-04-03T06:58:15,378][INFO ][o.e.b.BootstrapChecks ] [JI2Uv6k] bound or publishing to a non-loopback address, enforcing bootstrap checks

[2018-04-03T06:58:18,517][INFO ][o.e.c.s.MasterService ] [JI2Uv6k] zen-disco-elected-as-master ([0] nodes joined), reason: new_master {JI2Uv6k}{JI2Uv6kAQ1ymPZoVhL5AAg}{Rr9Wa_H-RKGaBC4IDpjAMA}{172.17.0.2}{172.17.0.2:9300}

[2018-04-03T06:58:18,529][INFO ][o.e.c.s.ClusterApplierService] [JI2Uv6k] new_master {JI2Uv6k}{JI2Uv6kAQ1ymPZoVhL5AAg}{Rr9Wa_H-RKGaBC4IDpjAMA}{172.17.0.2}{172.17.0.2:9300}, reason: apply cluster state (from master [master {JI2Uv6k}{JI2Uv6kAQ1ymPZoVhL5AAg}{Rr9Wa_H-RKGaBC4IDpjAMA}{172.17.0.2}{172.17.0.2:9300} committed version [1] source [zen-disco-elected-as-master ([0] nodes joined)]])

[2018-04-03T06:58:18,563][INFO ][o.e.h.n.Netty4HttpServerTransport] [JI2Uv6k] publish_address {172.17.0.2:9200}, bound_addresses {[::]:9200}

[2018-04-03T06:58:18,563][INFO ][o.e.n.Node ] [JI2Uv6k] started

[2018-04-03T06:58:18,577][INFO ][o.e.g.GatewayService ] [JI2Uv6k] recovered [0] indices into cluster_state

==> /var/log/logstash/logstash-plain.log <==

==> /var/log/kibana/kibana5.log <==

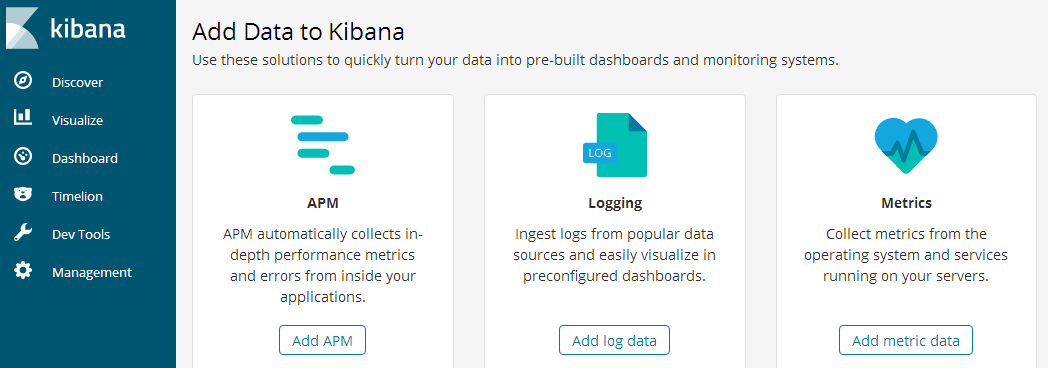

4.验证、测试

打开浏览器,输入:http://<your-host>:5601,看到如下界面说明安装成功

端口之间关系

5601 (Kibana web interface). 前台界面

9200 (Elasticsearch JSON interface) 搜索.

5044 (Logstash Beats interface, receives logs from Beats such as Filebea 日志传输

测试:

测试传递一条信息

1、使用命令:docker exec -it <container-name> /bin/bash 进入容器内

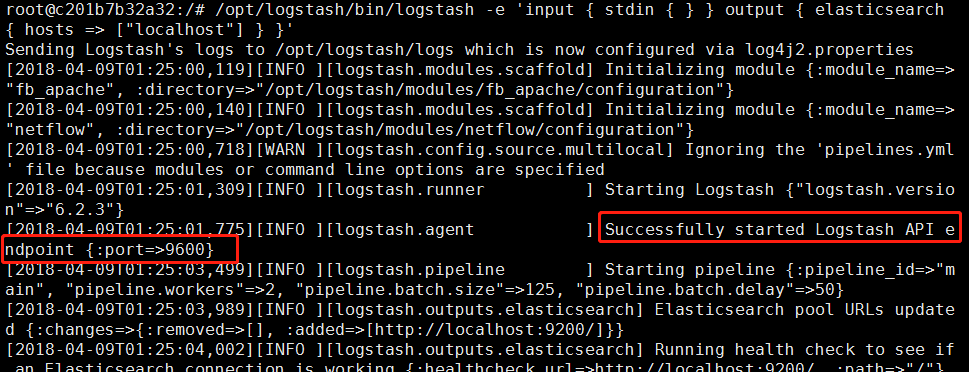

2、执行命令:/opt/logstash/bin/logstash -e 'input { stdin { } } output { elasticsearch { hosts => ["localhost"] } }'

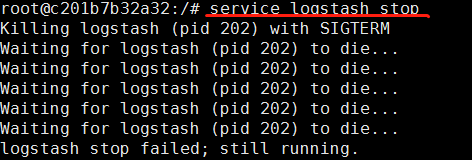

报错:如果看到这样的报错信息 Logstash could not be started because there is already another instance using the configured data directory.

If you wish to run multiple instances, you must change the "path.data" setting.

解决:请执行命令:service logstash stop 然后在执行就可以了。

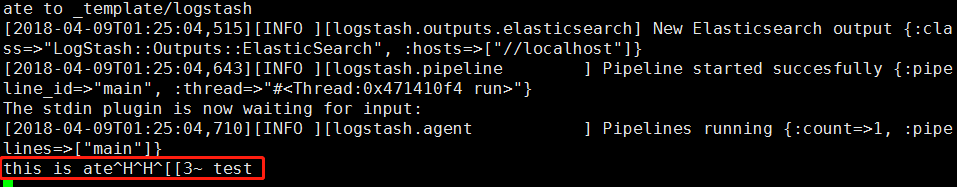

再次执行:看到:Successfully started Logstash API endpoint {:port=>9600} 即可

3.输入测试信息 :this is a test

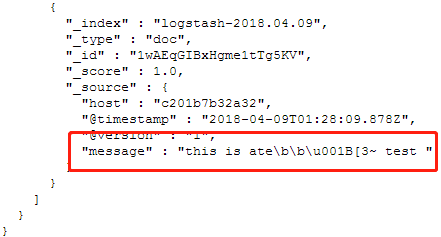

4、打开浏览器,输入:http://<your-host>:9200/_search?pretty 如图,就会看到我们刚刚输入的日志内容

浙公网安备 33010602011771号

浙公网安备 33010602011771号