k8s study notes: Pod resources

Standalone (self-managed) Pod resources

Resource manifest format

Top-level fields: apiVersion (group/version), kind, metadata (name, namespace, labels, annotations, ...), spec, status (read-only)

Pod resources:

spec.containers <[]object>

kubectl explain pods.spec.containers

- name <string>

image <string>

imagePullPolicy <string>

Always: always pull the image; Never: use only the local image and never pull; IfNotPresent: pull only if the image is not already present locally.

Image pull policy. One of Always, Never, IfNotPresent. Defaults to Always if :latest tag is specified, or IfNotPresent otherwise. Cannot be updated.

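In other words, if the image tag is latest (an image with no tag counts as :latest), the default is Always. For any other tag, the default is IfNotPresent.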
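The defaulting rule above can be modeled in a few lines of Python. This is a minimal sketch, not Kubernetes source code. default_pull_policy and image_tag are hypothetical helper names, and the tag parsing is simplified: it does not handle image digests (@sha256:...).

```python
from typing import Optional


def image_tag(image: str) -> str:
    """Return the tag of an image reference such as 'busybox:latest'.

    Simplified parsing: a ':' only starts a tag if it comes after the last '/',
    so a registry port like 'registry.local:5000/app' is not mistaken for a tag.
    """
    last_slash = image.rfind("/")
    last_colon = image.rfind(":")
    if last_colon > last_slash:
        return image[last_colon + 1:]
    return "latest"  # an image without a tag is treated as ':latest'


def default_pull_policy(image: str, explicit: Optional[str] = None) -> str:
    """Mimic how imagePullPolicy is filled in when the manifest omits it."""
    if explicit:
        return explicit  # a value written in the manifest always wins
    return "Always" if image_tag(image) == "latest" else "IfNotPresent"


if __name__ == "__main__":
    for img in ["busybox:latest", "busybox", "ikubernetes/myapp:v1"]:
        print(img, "->", default_pull_policy(img))
```

For example, the busybox container in pod-demo sets imagePullPolicy: IfNotPresent explicitly, so the :latest default of Always does not apply to it.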

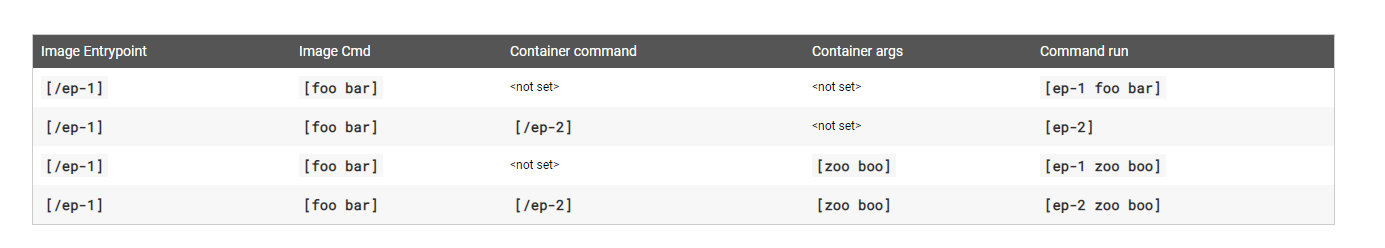

Overriding the image's default application:

command and args: see the official documentation for details:

https://kubernetes.io/docs/tasks/inject-data-application/define-command-argument-container/
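According to the linked documentation, command overrides the image's ENTRYPOINT and args overrides its CMD. The sketch below models how the final argv is chosen in the four documented cases. effective_argv is a hypothetical helper for illustration, and it does not model $(VAR) environment-variable expansion.

```python
from typing import List, Optional


def effective_argv(
    entrypoint: List[str],
    cmd: List[str],
    command: Optional[List[str]] = None,
    args: Optional[List[str]] = None,
) -> List[str]:
    """Combine the image's ENTRYPOINT/CMD with a container's command/args."""
    if command is None and args is None:
        return entrypoint + cmd        # nothing overridden: image defaults
    if args is None:
        return list(command)           # command only: image ENTRYPOINT and CMD ignored
    if command is None:
        return entrypoint + args       # args only: image ENTRYPOINT with the new args
    return command + args              # both given: image defaults ignored


if __name__ == "__main__":
    # Hypothetical image with no ENTRYPOINT and CMD ["sh"], overridden the way
    # the busybox container in pod-demo overrides it.
    print(effective_argv([], ["sh"], command=["/bin/sh", "-c", "sleep 3600"]))
```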

Labels:

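A label is a key/value pair. A valid label key has two segments separated by a slash (/): an optional prefix and a name. The name segment is required and must be at most 63 characters long. It must begin and end with an alphanumeric character, with alphanumerics, dashes (-), underscores (_), and dots (.) allowed in between.

The prefix is optional. If it is specified, it must be a DNS subdomain: a series of DNS labels separated by dots (.), no longer than 253 characters in total, followed by a slash (/). If the prefix is omitted, the label key is presumed to be private to the user.

Automated system components (for example kube-scheduler, kube-controller-manager, kube-apiserver, or kubectl) that add labels to end-user objects must specify a prefix. The kubernetes.io/ prefix is reserved for Kubernetes core components.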

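A valid label value must be 63 characters or fewer. It must be either empty or begin and end with an alphanumeric character, with dashes (-), underscores (_), dots (.), and alphanumerics allowed in between.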
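The key and value rules can be checked with regular expressions. This is a sketch written from the rules above, not Kubernetes' own validation code. It assumes the lowercase RFC 1123 form that Kubernetes uses for DNS subdomains, and it does not separately enforce the 63-character limit on each DNS label inside the prefix.

```python
import re

# Name part of a key (and a non-empty value): alphanumeric at both ends,
# alphanumerics, '-', '_' and '.' allowed in between.
_NAME = re.compile(r"([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9]")
# Optional key prefix: a lowercase DNS subdomain (DNS labels joined by '.').
_DNS_SUBDOMAIN = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*")


def is_valid_label_key(key: str) -> bool:
    """Check '[prefix/]name' against the label-key rules."""
    if key.count("/") > 1:
        return False
    prefix, slash, name = key.rpartition("/")
    if slash:  # a prefix was given, so it must be a valid DNS subdomain
        if not prefix or len(prefix) > 253 or not _DNS_SUBDOMAIN.fullmatch(prefix):
            return False
    return len(name) <= 63 and _NAME.fullmatch(name) is not None


def is_valid_label_value(value: str) -> bool:
    """A value is either empty or follows the same pattern as a key's name part."""
    return value == "" or (len(value) <= 63 and _NAME.fullmatch(value) is not None)


if __name__ == "__main__":
    for key in ["app", "kubernetes.io/hostname", "-app", "/app"]:
        print(key, is_valid_label_key(key))
```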

kubectl get pods --show-labels

Show the value of the app label in a separate column (empty for pods that do not have the label):

[root@k8s-master k8s]# kubectl get pods -L app

Several labels can be given, separated by commas:

[root@k8s-master k8s]# kubectl get pods -L app,run

Show only the pods that have the app label:

[root@k8s-master k8s]# kubectl get pods -l app --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod-demo 2/2 Running 0 3m33s app=myapp,tier=frontend

Adding a label:

[root@k8s-master k8s]# kubectl label pods pod-demo release=canary

pod/pod-demo labeled

[root@k8s-master k8s]# kubectl get pods -l app --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod-demo 2/2 Running 0 6m37s app=myapp,release=canary,tier=frontend

If the label already exists, kubectl reports an error:

[root@k8s-master k8s]# kubectl label pods pod-demo release=canary

error: 'release' already has a value (canary), and --overwrite is false

To change an existing value, add the --overwrite flag:

[root@k8s-master k8s]# kubectl label pods pod-demo release=canary --overwrite

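pod/pod-demo not labeled

(The new value is the same as the current one, so nothing changes and kubectl reports "not labeled".)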

Label selectors:

Equality-based: =, ==, !=

[root@k8s-master k8s]# kubectl get pods -l release=canary --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod-demo 2/2 Running 0 10m app=myapp,release=canary,tier=frontend

[root@k8s-master k8s]# kubectl get pods -l release=canary,app=myapp --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod-demo 2/2 Running 0 12m app=myapp,release=canary,tier=frontend

[root@k8s-master k8s]# kubectl get pods -l release!=canary

NAME READY STATUS RESTARTS AGE

myapp-9b4987d5-244dl 1/1 Running 0 7h16m

myapp-9b4987d5-4rn4q 1/1 Running 0 7h16m

myapp-9b4987d5-7xc9z 1/1 Running 0 7h16m

myapp-9b4987d5-8hppt 1/1 Running 0 7h16m

myapp-9b4987d5-clvtg 1/1 Running 0 7h16m

nginx-deploy-5b66f76f68-lv66h 1/1 Running 2 34d

pod-client 1/1

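Set-based:

KEY in (VALUE1,VALUE2,...): the key exists and its value is one of the listed values

KEY notin (VALUE1,VALUE2,...): the key's value is none of the listed values (objects without the key also match)

KEY: the key exists

!KEY: the key does not exist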

[root@k8s-master k8s]# kubectl get pods -l "release in (canary,beta,alpha)"

NAME READY STATUS RESTARTS AGE

pod-demo 2/2 Running 0 15m

[root@k8s-master k8s]# kubectl get pods -l "release notin (canary,beta,alpha)"

NAME READY STATUS RESTARTS AGE

myapp-9b4987d5-244dl 1/1 Running 0 7h18m

myapp-9b4987d5-4rn4q 1/1 Running 0 7h18m

myapp-9b4987d5-7xc9z 1/1 Running 0 7h18m

myapp-9b4987d5-8hppt 1/1 Running 0 7h18m

myapp-9b4987d5-clvtg 1/1 Running 0 7h18m

nginx-deploy-5b66f76f68-lv66h 1/1 Running 2 4d

pod-client 1/1 Running 0 6h31m
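The equality-based and set-based requirements above can be sketched as follows. This is a simplified model with hypothetical names (requirement_matches, selects), and each requirement is passed as a tuple rather than parsed from the command-line string. As described in the Kubernetes labels documentation, != and notin also select objects that do not have the key at all.

```python
from typing import Dict, Iterable, Sequence, Tuple

Requirement = Tuple[str, str, Sequence[str]]  # (key, operator, values)


def requirement_matches(labels: Dict[str, str], req: Requirement) -> bool:
    """Evaluate one requirement such as ('release', 'in', ['canary', 'beta'])."""
    key, op, values = req
    value = labels.get(key)  # None when the object has no such key
    if op in ("=", "=="):
        return value == values[0]
    if op == "!=":
        return value != values[0]         # objects without the key also match
    if op == "in":
        return value is not None and value in values
    if op == "notin":
        return value not in values        # objects without the key also match
    if op == "exists":                    # written as plain KEY on the command line
        return value is not None
    if op == "!exists":                   # written as !KEY on the command line
        return value is None
    raise ValueError(f"unsupported operator: {op}")


def selects(labels: Dict[str, str], requirements: Iterable[Requirement]) -> bool:
    """Comma-separated requirements are ANDed together."""
    return all(requirement_matches(labels, r) for r in requirements)


if __name__ == "__main__":
    pod_demo = {"app": "myapp", "release": "canary", "tier": "frontend"}
    print(selects(pod_demo, [("release", "=", ["canary"]), ("app", "=", ["myapp"])]))
```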

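Many resources support embedded fields that define the label selector they use:

matchLabels: specify key/value pairs directly

matchExpressions: define the selector with expressions of the form {key: "KEY", operator: "OPERATOR", values: [VAL1, VAL2, ...]}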

Reference:

https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#set-based-requirement

Operators:

In, NotIn: the values field must be a non-empty list;

Exists, DoesNotExist: the values field must be empty.
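A selector object combines matchLabels and matchExpressions with a logical AND. The sketch below models that evaluation together with the values rules just listed. It is illustrative only: validate_selector and selector_matches are hypothetical names, and the selector is represented as a plain dict that mirrors the YAML/JSON fields.

```python
from typing import Any, Dict

_OPERATORS = ("In", "NotIn", "Exists", "DoesNotExist")


def validate_selector(selector: Dict[str, Any]) -> None:
    """Raise ValueError if a matchExpressions entry breaks the values rules."""
    for expr in selector.get("matchExpressions", []):
        op, values = expr["operator"], expr.get("values", [])
        if op not in _OPERATORS:
            raise ValueError(f"unknown operator: {op}")
        if op in ("In", "NotIn") and not values:
            raise ValueError(f"{op} requires a non-empty values list")
        if op in ("Exists", "DoesNotExist") and values:
            raise ValueError(f"{op} requires an empty values list")


def selector_matches(selector: Dict[str, Any], labels: Dict[str, str]) -> bool:
    """matchLabels and every matchExpressions entry must all be satisfied (AND)."""
    validate_selector(selector)
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    for expr in selector.get("matchExpressions", []):
        key, op, values = expr["key"], expr["operator"], expr.get("values", [])
        present = key in labels
        if op == "In" and not (present and labels[key] in values):
            return False
        if op == "NotIn" and present and labels[key] in values:
            return False
        if op == "Exists" and not present:
            return False
        if op == "DoesNotExist" and present:
            return False
    return True
```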

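nodeSelector <map[string]string>: node label selector. The pod is scheduled only onto nodes whose labels include every key/value pair listed here.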

[root@k8s-master k8s]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master Ready master 37d v1.13.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-master,node-role.kubernetes.io/master=

k8s-node1 Ready <none> 37d v1.13.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-node1

k8s-node2 Ready <none> 37d v1.13.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-node2

[root@k8s-master k8s]# kubectl label nodes k8s-node1 disktype=ssd

node/k8s-node1 labeled

[root@k8s-master k8s]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master Ready master 37d v1.13.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=k8s-master,node-role.kubernetes.io/master=

k8s-node1 Ready <none> 37d v1.13.4 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/hostname=k8s-node1

k8s-node2 Ready <none> 37d v1.13.4 beta.kubernetes.io/arch=amd64,beta

vim pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels:
    app: myapp
    tier: frontend
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    ports:
    - name: http
      containerPort: 80
    - name: https
      containerPort: 443
  - name: busybox
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command:
    - "/bin/sh"
    - "-c"
    - "sleep 3600"
  nodeSelector:
    disktype: ssd

[root@k8s-master k8s]# kubectl delete -f pod-demo.yaml

pod "pod-demo" deleted

[root@k8s-master k8s]# kubectl create -f pod-demo.yaml

pod/pod-demo created

[root@k8s-master k8s]# kubectl get pods pod-demo -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-demo 2/2 Running 0 38s 10.244.2.22 k8s-node1 <none> <none>

kubectl describe pods pod-demo

The output includes:

Normal Scheduled 6m24s default-scheduler Successfully assigned default/pod-demo to k8s-node1
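Conceptually, nodeSelector narrows the candidate nodes to those whose labels contain every key/value pair in the selector. The sketch below models only that label check with a hypothetical eligible_nodes helper. The real scheduler also considers taints, resource requests, affinity, and more. The node labels are copied from the kubectl get nodes output above.

```python
from typing import Dict, List


def eligible_nodes(nodes: Dict[str, Dict[str, str]], node_selector: Dict[str, str]) -> List[str]:
    """Return the names of nodes whose labels satisfy every nodeSelector entry."""
    return [
        name
        for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in node_selector.items())
    ]


NODES = {
    "k8s-master": {
        "beta.kubernetes.io/arch": "amd64",
        "beta.kubernetes.io/os": "linux",
        "kubernetes.io/hostname": "k8s-master",
        "node-role.kubernetes.io/master": "",
    },
    "k8s-node1": {
        "beta.kubernetes.io/arch": "amd64",
        "beta.kubernetes.io/os": "linux",
        "disktype": "ssd",
        "kubernetes.io/hostname": "k8s-node1",
    },
    "k8s-node2": {
        "beta.kubernetes.io/arch": "amd64",
        "beta.kubernetes.io/os": "linux",
        "kubernetes.io/hostname": "k8s-node2",
    },
}

if __name__ == "__main__":
    print(eligible_nodes(NODES, {"disktype": "ssd"}))  # prints ['k8s-node1']
```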

nodeName <string>: binds the pod directly to the named node, bypassing the scheduler.

annotations:

Unlike labels, annotations cannot be used to select objects. They only attach "metadata" to an object.

vim pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels:
    app: myapp
    tier: frontend
  annotations:
    doudou/create-by: "cluster admin"
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    ports:
    - name: http
      containerPort: 80
    - name: https
      containerPort: 443
  - name: busybox
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command:
    - "/bin/sh"
    - "-c"
    - "sleep 3600"
  nodeSelector:
    disktype: ssd

Delete the pod, then create it again:

kubectl create -f pod-demo.yaml

[root@k8s-master k8s]# kubectl describe pods pod-demo

Name: pod-demo

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: k8s-node1/10.211.55.12

Start Time: Tue, 23 Apr 2019 00:28:33 +0800

Labels: app=myapp

tier=frontend

Annotations: doudou/create-by: cluster admin

Status: Running

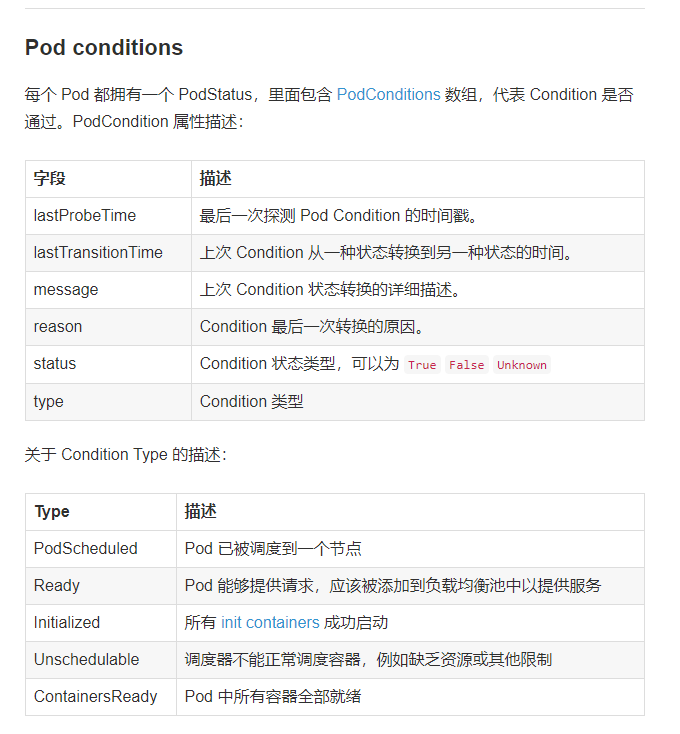

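Pod lifecycle:

Because pods represent processes running on nodes in the cluster, it is important to let those processes terminate gracefully when they are no longer needed, rather than killing them abruptly with a KILL signal that gives the application no chance to clean up. Users should be able to request deletion, know when the processes terminate, and be sure that the deletion eventually completes.

When a user requests deletion of a pod, the system records the intended grace period before the pod is allowed to be forcefully killed, and a TERM signal is sent to the main process in each container. Once the grace period has expired, the KILL signal is sent to those processes, and the pod is then deleted from the API server. If the kubelet or the container manager is restarted while waiting for processes to terminate, the termination is retried with the full grace period.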

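An example flow:

1. The user sends a command to delete the pod. The default grace period is 30 seconds.
2. The pod object in the API server is updated with the time after which the pod is considered "dead", along with the grace period.
3. Client commands list the pod as "Terminating".
4. At the same time as step 3, when the kubelet sees that the pod has been marked as terminating (because the time in step 2 has been set), it begins the pod shutdown process:
   - If the pod defines a preStop hook, the hook is invoked inside the container. If the preStop hook is still running after the grace period expires, step 2 is invoked again with a small (2-second) extended grace period.
   - The processes in the pod are sent the TERM signal.
5. At the same time as step 3, the pod is removed from the endpoints list of its Services and is no longer considered part of the set of running pods for replication controllers. Pods that shut down slowly may keep serving traffic forwarded by load balancers until they are taken out of rotation.
6. When the grace period expires, any processes still running in the pod are killed with SIGKILL.
7. The kubelet finishes deleting the pod in the API server by setting the grace period to 0 (immediate deletion). The pod disappears from the API and is no longer visible to clients.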
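The ordering of the steps above can be made concrete with a small timeline model. This is a simplified sketch under stated assumptions, not kubelet code: termination_timeline is a hypothetical helper, times are in seconds from the delete request, the preStop hook runs before SIGTERM, and the 2-second extension applies only when the hook is still running at the end of the grace period, as described in step 4.

```python
from typing import List, Optional, Tuple

Event = Tuple[float, str]


def termination_timeline(
    grace: float = 30.0,
    prestop: float = 0.0,
    exit_after_term: Optional[float] = None,
) -> List[Event]:
    """Model the events after a pod delete request (simplified).

    grace           -- grace period in seconds (default 30)
    prestop         -- how long the preStop hook runs (0 means no hook)
    exit_after_term -- seconds the process needs to exit after SIGTERM
                       (None means it ignores SIGTERM)
    """
    events: List[Event] = [(0.0, "marked Terminating, removed from Service endpoints")]
    if prestop > 0:
        events.append((0.0, "preStop hook starts"))
    if prestop > grace:
        # Hook still running when the grace period ends: one extra 2 seconds.
        term_at, kill_at = grace, grace + 2.0
    else:
        term_at, kill_at = prestop, grace
    events.append((term_at, "SIGTERM sent"))
    if exit_after_term is not None and term_at + exit_after_term < kill_at:
        events.append((term_at + exit_after_term, "process exited after SIGTERM"))
    else:
        events.append((kill_at, "SIGKILL sent"))
    return events


if __name__ == "__main__":
    for t, what in termination_timeline(grace=30, prestop=5, exit_after_term=3):
        print(f"{t:5.1f}s  {what}")
```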

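The default grace period is 30 seconds. kubectl delete supports the --grace-period=<seconds> option, which lets users set their own grace period. Setting it to 0 force-deletes the pod. With kubectl 1.5 and later, you must pass both --force and --grace-period=0 to force-delete a pod.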

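Force deletion of a pod means deleting it immediately from the cluster state and etcd. When a force deletion is performed, the API server does not wait for confirmation from the kubelet on the node where the pod was running. It removes the pod from the API immediately, so a new pod with the same name can be created right away. On the node, the pod is set to terminating at once, but it is still given a short grace period before being force-killed.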

Container probes

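A probe is a diagnostic performed periodically by the kubelet on a container. To perform a diagnostic, the kubelet calls a handler implemented by the container. There are three types of handlers:

ExecAction: executes a specified command inside the container. The diagnostic is considered successful if the command exits with status code 0.

TCPSocketAction: performs a TCP check against the container's IP address on a specified port. The diagnostic is considered successful if the port is open.

HTTPGetAction: performs an HTTP GET request against the container's IP address on a specified port and path. The diagnostic is considered successful if the response status code is greater than or equal to 200 and less than 400.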
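The three handler types can be approximated with the Python standard library. This is a rough sketch, not the kubelet's implementation. The function names are hypothetical, exec_probe runs the command on the local machine rather than inside a container, and http_get_probe does not follow redirects. Each check returns True on success, using the criteria above. The 1-second default timeout mirrors the probe default timeoutSeconds of 1.

```python
import http.client
import socket
import subprocess
from typing import Sequence


def exec_probe(argv: Sequence[str], timeout: float = 1.0) -> bool:
    """ExecAction: success if the command exits with status 0."""
    try:
        result = subprocess.run(list(argv), capture_output=True, timeout=timeout)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


def tcp_socket_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """TCPSocketAction: success if a TCP connection can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def http_get_probe(host: str, port: int, path: str = "/", timeout: float = 1.0) -> bool:
    """HTTPGetAction: success if 200 <= status < 400 (redirects are not followed)."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        status = conn.getresponse().status
    except (OSError, http.client.HTTPException):
        return False
    finally:
        conn.close()
    return 200 <= status < 400
```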

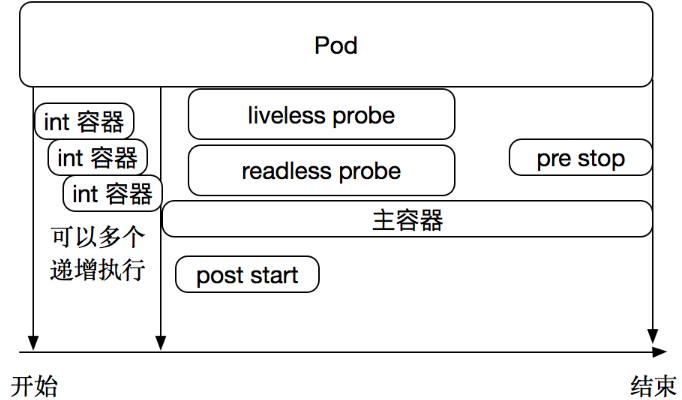

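Important behaviors in the pod lifecycle:

Init containers

Init containers run before the app containers start, and each one runs to completion. They are commonly used for initialization work such as preparing configuration. If a pod has several init containers, they run one at a time, in order, and each must succeed before the next one starts.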

apiVersion: v1
kind: Pod
metadata:
  name: init-demo
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    volumeMounts:
    - name: workdir
      mountPath: /usr/share/nginx/html
  # These containers are run during pod initialization
  initContainers:
  - name: install
    image: busybox
    command:
    - wget
    - "-O"
    - "/work-dir/index.html"
    - http://kubernetes.io
    volumeMounts:
    - name: workdir
      mountPath: "/work-dir"
  dnsPolicy: Default
  volumes:
  - name: workdir
    emptyDir: {}
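The ordering guarantee can be illustrated with a toy model. run_pod_startup is a hypothetical helper. Each init container is represented by a Python callable that returns an exit code, and restarts after a failure (which depend on restartPolicy) are left out.

```python
from typing import Callable, List, Sequence, Tuple

InitStep = Tuple[str, Callable[[], int]]  # (container name, callable returning an exit code)


def run_pod_startup(init_containers: Sequence[InitStep], app_containers: Sequence[str]) -> List[str]:
    """Run init containers one by one; start app containers only if all succeed."""
    log: List[str] = []
    for name, run in init_containers:
        code = run()
        log.append(f"init container {name} exited with {code}")
        if code != 0:
            # A failed init container blocks the app containers entirely.
            log.append("app containers not started")
            return log
    log.extend(f"app container {name} started" for name in app_containers)
    return log


if __name__ == "__main__":
    # Mirrors init-demo: the 'install' step succeeds, then nginx starts.
    print(run_pod_startup([("install", lambda: 0)], ["nginx"]))
```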

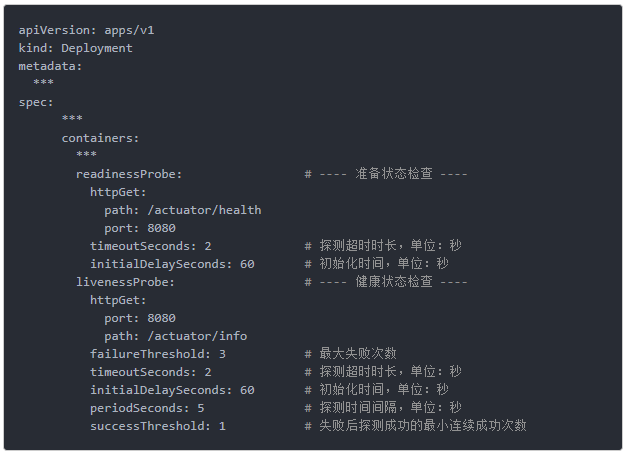

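Container probing

To make sure that a container really is running properly after deployment, Kubernetes provides two kinds of probes (each can use an exec, tcpSocket, or httpGet handler) to check container state: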


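livenessProbe: checks whether the application is healthy. If the probe fails, the kubelet kills the container, which is then restarted according to the pod's restartPolicy.

readinessProbe: checks whether the application has finished starting and is ready to serve requests. If the probe fails, the container is marked as not ready and the pod is removed from the endpoints of matching Services. The container is not restarted.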
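How repeated probe results turn into actions depends on failureThreshold and successThreshold. kubectl describe prints these as #failure and #success, and the defaults are 3 and 1. The sketch below is a simplified model with hypothetical function names. It ignores timing (initialDelaySeconds, periodSeconds) and simply replays a sequence of probe outcomes. Resetting the failure count after a restart is an assumption of this model.

```python
from typing import List, Sequence


def liveness_restarts(results: Sequence[bool], failure_threshold: int = 3) -> List[int]:
    """Return the indexes of probe results that would trigger a container restart."""
    restarts: List[int] = []
    consecutive_failures = 0
    for i, ok in enumerate(results):
        consecutive_failures = 0 if ok else consecutive_failures + 1
        if consecutive_failures >= failure_threshold:
            restarts.append(i)
            consecutive_failures = 0  # model assumption: restarted container starts fresh
    return restarts


def readiness_states(
    results: Sequence[bool], failure_threshold: int = 3, success_threshold: int = 1
) -> List[bool]:
    """Return the container's Ready state after each probe result."""
    states: List[bool] = []
    ready = False  # a container is not ready until its readiness probe succeeds
    successes = failures = 0
    for ok in results:
        if ok:
            successes, failures = successes + 1, 0
        else:
            successes, failures = 0, failures + 1
        if successes >= success_threshold:
            ready = True
        elif failures >= failure_threshold:
            ready = False
        states.append(ready)
    return states
```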

kubectl explain pods.spec.containers.livenessProbe

vim liveness-exec-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec-pod
  namespace: default
spec:
  containers:
  - name: liveness-exec-container
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","touch /tmp/healthy;sleep 30;rm -rf /tmp/healthy;sleep 3600"]
    livenessProbe:
      exec:
        command: ["test","-e","/tmp/healthy"]
      initialDelaySeconds: 1
      periodSeconds: 3

kubectl create -f liveness-exec-pod.yaml

[root@k8s-master k8s]# kubectl get pods liveness-exec-pod

NAME READY STATUS RESTARTS AGE

liveness-exec-pod 1/1 Running 3 4m4s

[root@k8s-master k8s]# kubectl describe pods liveness-exec-pod

Name: liveness-exec-pod

Containers:

liveness-exec-container:

Container ID: docker://8d09c9e59b7ab18cc777f7408b7ae889c4b8439d2481a3bb85b0b5dcde166e44

Command:

/bin/sh

-c

touch /tmp/healthy;sleep 30;rm -rf /tmp/healthy;sleep 3600

State: Running

Started: Tue, 23 Apr 2019 18:05:46 +0800

Last State: Terminated

Reason: Error

Exit Code: 137

Started: Tue, 23 Apr 2019 18:04:37 +0800

Finished: Tue, 23 Apr 2019 18:05:46 +0800

Ready: True

Restart Count: 2

Liveness: exec [test -e /tmp/healthy] delay=1s timeout=1s period=3s #success=1 #failure=3

vim liveness-httpget-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-httpget-pod
  namespace: default
spec:
  containers:
  - name: liveness-httpget-container
    image: ikubernetes/myapp:v1
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 1
      periodSeconds: 3

kubectl exec -it liveness-httpget-pod -- /bin/sh

/ # rm -rf /usr/share/nginx/html/index.html

[root@k8s-master k8s]# kubectl get pod liveness-httpget-pod

NAME READY STATUS RESTARTS AGE

liveness-httpget-pod 1/1 Running 1 93s

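The container restarts only once: the restarted container is created fresh from the image, so index.html exists again and the probe passes.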

View the details:

kubectl describe pod liveness-httpget-pod

Name: liveness-httpget-pod

Containers:

liveness-httpget-container:

Last State: Terminated

Ready: True

Restart Count: 1

Liveness: http-get http://:http/index.html delay=1s timeout=1s period=3s #success=1 #failure=3

Normal Killing 93s kubelet, k8s-node1 Killing container with id docker://liveness-httpget-container:Container failed liveness probe. Container will be killed and recreated.

kubectl explain pods.spec.containers.readinessProbe

vim readiness-httpget-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: readiness-httpget-pod
  namespace: default
spec:
  containers:
  - name: readiness-httpget-container
    image: ikubernetes/myapp:v1
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    readinessProbe:
      httpGet:
        port: http
        path: /index.html

[root@k8s-master k8s]# kubectl get pods readiness-httpget-pod

NAME READY STATUS RESTARTS AGE

readiness-httpget-pod 1/1 Running 0 59s

[root@k8s-master ~]# kubectl exec -it readiness-httpget-pod -- /bin/sh

/ # rm -rf /usr/share/nginx/html/index.html

[root@k8s-master k8s]# kubectl get pods readiness-httpget-pod

NAME READY STATUS RESTARTS AGE

readiness-httpget-pod 0/1 Running 0 60s

[root@k8s-master k8s]# kubectl describe pods readiness-httpget-pod

Name: readiness-httpget-pod

readiness-httpget-container:

Ready: False

Restart Count: 0

Readiness: http-get http://:http/index.html delay=0s timeout=1s period=10s #success=1 #failure=3

Warning Unhealthy 0s (x15 over 10m) kubelet, k8s-node1 Readiness probe failed: HTTP probe failed with statuscode: 404

[root@k8s-master ~]# kubectl exec -it readiness-httpget-pod -- /bin/sh

/ # echo "test" >> /usr/share/nginx/html/index.html

[root@k8s-master k8s]# kubectl get pods readiness-httpget-pod

NAME READY STATUS RESTARTS AGE

readiness-httpget-pod 1/1 Running 0 2m41s

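The pod is ready again (1/1). RESTARTS is still 0, because a failing readiness probe only marks the container as not ready and does not restart it.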

kubectl explain pods.spec.containers.lifecycle

kubectl explain pods.spec.containers.lifecycle.postStart

kubectl explain pods.spec.containers.lifecycle.preStop

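Container lifecycle hooks watch for specific events in a container's lifecycle and run the registered handler when the event occurs. Two hooks are supported:

postStart: runs immediately after the container is created. It runs asynchronously, so there is no guarantee about its ordering relative to the container's ENTRYPOINT. If the hook fails, the container is killed, and the restartPolicy decides whether it is restarted.

preStop: runs before the container is terminated and is commonly used for cleanup. If the hook fails, the container is still killed.

Hook handlers can be implemented in two ways:

exec: run a command inside the container

httpGet: send an HTTP GET request to a specified URL

Example with postStart and preStop hooks: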

apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-demo
spec:
  containers:
  - name: lifecycle-demo-container
    image: nginx
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]
      preStop:
        exec:
          command: ["/usr/sbin/nginx","-s","quit"]

vim poststart-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: poststart-pod
  namespace: default
spec:
  containers:
  - name: busybox-httpd
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    lifecycle:
      postStart:
        exec:
          command: ['mkdir','-p','/data/web/html']
    command: ["/bin/httpd"]
    args: ["-f","-h /data/web/html"]

[root@k8s-master k8s]# kubectl get pods poststart-pod

NAME READY STATUS RESTARTS AGE

poststart-pod 0/1 PostStartHookError: command 'mkdir -p /data/web/html' exited with 126: 2 18s

[root@k8s-master k8s]# kubectl get pods poststart-pod

NAME READY STATUS RESTARTS AGE

poststart-pod 0/1 PostStartHookError: comman exited with 126: 4 103s

kubectl describe pod poststart-pod

Warning FailedPostStartHook 6s (x4 over 53s) kubelet, k8s-node1 Exec lifecycle hook ([mkdir -p /data/web/html]) for Container "busybox-httpd" in Pod "poststart-pod_default(6c37ff00-65bd-11e9-b018-001c42baaf43)" failed - error: command 'mkdir -p /data/web/html' exited with 126: , message: "cannot exec in a stopped state: unknown\r\n"

vim poststart-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: poststart-pod
  namespace: default
spec:
  containers:
  - name: busybox-httpd
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    lifecycle:
      postStart:
        exec:
          command: ['mkdir','-p','/data/web/html']
    command: ['/bin/sh','-c','sleep 3600']

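Note: the postStart command and the container's own command must not depend on each other, because their ordering is not guaranteed. The error above is misleading. The container's command actually ran before the postStart hook, and the container had already stopped by the time the hook ran, so the hook failed with "cannot exec in a stopped state".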

restartPolicy <string>

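Always: restart the container whenever it exits (the default).

OnFailure: restart the container only when it exits with a failure (non-zero exit code).

Never: never restart the container after it exits. (Note: restarts happen locally on the node where the pod is running. The pod is not rescheduled to another node.)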
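The three policies can be summarized in code, together with the exponential back-off the kubelet applies between restarts (documented as 10s, 20s, 40s, ..., capped at five minutes). should_restart and backoff_delays are hypothetical helpers for illustration. The real kubelet also resets the back-off after a container has run without problems for 10 minutes, which this sketch does not model.

```python
from typing import List


def should_restart(policy: str, exit_code: int) -> bool:
    """Decide whether an exited container is restarted (on the same node)."""
    if policy == "Always":
        return True
    if policy == "OnFailure":
        return exit_code != 0
    if policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {policy}")


def backoff_delays(restarts: int, base: int = 10, cap: int = 300) -> List[int]:
    """Delay in seconds before each successive restart: doubles, capped at 5 minutes."""
    return [min(base * 2 ** i, cap) for i in range(restarts)]


if __name__ == "__main__":
    # Exit code 137 (128 + 9, killed by SIGKILL) appears in the liveness-exec output above.
    print(should_restart("OnFailure", 137), backoff_delays(7))
```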

References:

https://blog.csdn.net/Dou_Hua_Hua/article/details/108164617

https://www.jianshu.com/p/91625e7a8259?utm_source=oschina-app

https://blog.csdn.net/horsefoot/article/details/52324830

https://www.cnblogs.com/linuxk/p/9569618.html
