Ceph installation notes

Configure the package repositories (Ceph release: luminous)

[root@ceph-node1 ~]# yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

[root@ceph-node1 ~]# yum makecache

[root@ceph-node1 ~]# yum update -y

[root@localhost yum.repos.d]# yum install -y yum-plugin-priorities

[root@localhost yum.repos.d]# systemctl stop firewalld && systemctl disable firewalld

[root@ceph001 yum.repos.d]# cat ceph.repo

[ceph]
name=Ceph packages for $basearch
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/$basearch
enabled=1
gpgcheck=0

[ceph-noarch]
name=Ceph noarch packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch
enabled=1
gpgcheck=0

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS
enabled=0
gpgcheck=0
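If you prefer to generate the repo file instead of typing it on each node, the shell function below is a minimal sketch that writes the same three sections for a given release from the Aliyun mirror. The function name write_ceph_repo is hypothetical, and the priority=1 lines are borrowed from the repo file used later in these notes (they only take effect with yum-plugin-priorities installed).

```shell
# write_ceph_repo PATH [RELEASE]   (hypothetical helper, not part of Ceph)
# Writes a yum repo file pointing at the Aliyun Ceph mirror for RELEASE
# (default: luminous). \$basearch is escaped so that yum, not the shell,
# expands it.
write_ceph_repo() {
  repo_file=$1
  release=${2:-luminous}
  base="http://mirrors.aliyun.com/ceph/rpm-${release}/el7"
  cat > "$repo_file" <<EOF
[ceph]
name=Ceph packages for \$basearch
baseurl=${base}/\$basearch
enabled=1
gpgcheck=0
priority=1

[ceph-noarch]
name=Ceph noarch packages
baseurl=${base}/noarch
enabled=1
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=${base}/SRPMS
enabled=0
gpgcheck=0
priority=1
EOF
}
```

Usage, as root on each node: `write_ceph_repo /etc/yum.repos.d/ceph.repo luminous && yum makecache`.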

Make the hostnames resolvable so the nodes can reach each other by name

[root@ceph001 etc]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
172.16.16.160 ceph001
172.16.16.161 ceph002
172.16.16.162 ceph003
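The same three entries have to exist on every node. A minimal, idempotent sketch: the hypothetical function add_ceph_hosts appends each address/name pair listed above only when the name is not already in the file, so running it twice does not create duplicate lines.

```shell
# add_ceph_hosts HOSTS_FILE   (hypothetical helper)
# Appends the Ceph node entries to HOSTS_FILE, skipping any name that is
# already present so repeated runs are harmless.
add_ceph_hosts() {
  hosts_file=$1
  while read -r ip name; do
    if ! grep -qw "$name" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done <<'EOF'
172.16.16.160 ceph001
172.16.16.161 ceph002
172.16.16.162 ceph003
EOF
}
```

Usage: run `add_ceph_hosts /etc/hosts` as root on ceph001, ceph002 and ceph003, then confirm with `ping -c 1 ceph002`.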

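Configure the yum repositories (all three nodes). Unlike the luminous file above, this repo file points at the jewel mirror (rpm-jewel); every node must install the same release, so keep only one of the two.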

[root@ceph003 ~]# yum install epel-release -y

[root@ceph001 yum.repos.d]# cat /etc/yum.repos.d/ceph.repo

[ceph]
name=ceph
baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/
gpgcheck=0
priority=1

[ceph-noarch]
name=cephnoarch
baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/SRPMS
gpgcheck=0
priority=1

Time synchronization (all three nodes)

[root@ceph002 ~]# yum install chrony -y

Create a deployment user and give it passwordless sudo to root (all three nodes)

[root@ceph001 sudoers.d]# useradd ceph-admin

[root@ceph001 sudoers.d]# echo "khb123" |passwd --stdin ceph-admin

[root@ceph001 sudoers.d]# cat ceph-admin

ceph-admin ALL = (root) NOPASSWD:ALL

[root@ceph001 sudoers.d]# chmod 0440 /etc/sudoers.d/ceph-admin
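The sudoers drop-in above can also be written in one step. This is a sketch with a hypothetical function name; it writes the same line that cat shows above and sets mode 0440, the mode recommended for sudoers files.

```shell
# grant_nopasswd_sudo USER [DIR]   (hypothetical helper)
# Writes "USER ALL = (root) NOPASSWD:ALL" to DIR/USER (DIR defaults to
# /etc/sudoers.d) and makes the file read-only for owner and group.
grant_nopasswd_sudo() {
  user=$1
  dir=${2:-/etc/sudoers.d}
  file="$dir/$user"
  echo "$user ALL = (root) NOPASSWD:ALL" > "$file"
  chmod 0440 "$file"
}
```

Usage, as root on every node: `grant_nopasswd_sudo ceph-admin`. Validate the result with `visudo -cf /etc/sudoers.d/ceph-admin` before logging out, because a syntax error in any sudoers.d file stops sudo from working.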

Deploy the cluster with ceph-deploy

Set up passwordless SSH login

[root@ceph001 ~]# su - ceph-admin

[ceph-admin@ceph001 ~]$ ssh-keygen

[ceph-admin@ceph001 ~]$ ssh-copy-id ceph-admin@ceph001

[ceph-admin@ceph001 ~]$ ssh-copy-id ceph-admin@ceph002

[ceph-admin@ceph001 ~]$ ssh-copy-id ceph-admin@ceph003

[ceph-admin@ceph001 ~]$ sudo yum install ceph-deploy python2-pip

Create a cluster directory to hold the keyrings and other files that ceph-deploy generates

[ceph-admin@ceph001 ~]$ mkdir my-cluster

[ceph-admin@ceph001 ~]$ cd my-cluster/

[ceph-admin@ceph001 my-cluster]$ ceph-deploy new ceph001 ceph002 ceph003

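Configure the networks: add the public network (client and monitor traffic) and cluster network (OSD replication traffic) lines to the ceph.conf generated by ceph-deploy new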

[ceph-admin@ceph001 my-cluster]$ cat ceph.conf

[global]
fsid = 5165ea31-8664-408d-8648-e9cc5494da2e
mon_initial_members = ceph001, ceph002, ceph003
mon_host = 172.16.16.160,172.16.16.161,172.16.16.162
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
public network = 172.16.16.0/24
cluster network = 10.16.16.0/24
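The monitor addresses in mon_host must belong to the public network, while the cluster network (10.16.16.0/24 here) carries OSD replication, recovery and heartbeat traffic. A small POSIX shell sketch to check the first condition before deploying the monitors; ip_to_int and ip_in_cidr are hypothetical helpers written for these notes.

```shell
# ip_to_int A.B.C.D -> prints the address as a 32-bit integer.
# The body runs in a subshell so the IFS change does not leak out.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# ip_in_cidr IP NET/BITS -> exit status 0 if IP lies inside the network.
ip_in_cidr() (
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "$net") & $mask )) ]
)

# Check the mon_host addresses from ceph.conf against the public network.
for ip in 172.16.16.160 172.16.16.161 172.16.16.162; do
  ip_in_cidr "$ip" 172.16.16.0/24 || echo "$ip is outside the public network"
done
```

With the addresses above the loop prints nothing; any line it prints points at a mon_host entry that needs fixing.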

Install the Ceph packages (all three nodes)

[ceph-admin@ceph001 ~]$ sudo yum install ceph ceph-radosgw -y

Deploy the initial monitors and gather all keys

[ceph-admin@ceph001 ~]$ cd my-cluster/

[ceph-admin@ceph001 my-cluster]$ ceph-deploy mon create-initial

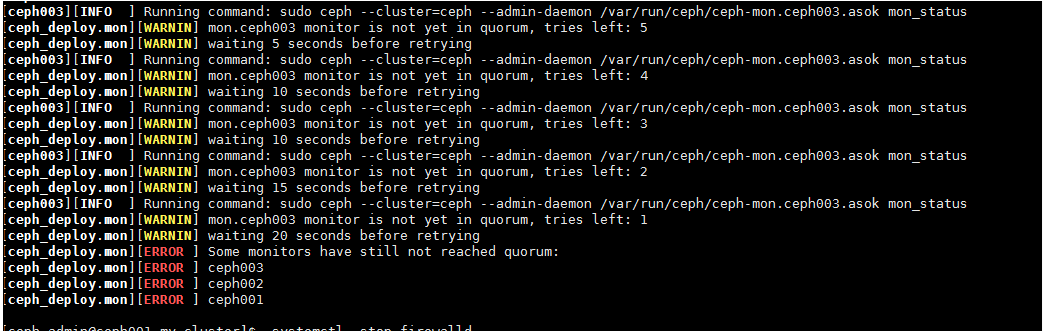

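If this fails with the error shown above, the cause is usually that firewalld is still running. Stop and disable it: # systemctl stop firewalld && systemctl disable firewalld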

Push the configuration file and admin keyring to every node

[ceph-admin@ceph001 my-cluster]$ ceph-deploy admin ceph001 ceph002 ceph003

Create the OSDs (shown for /dev/sdd on ceph001; repeat for each data disk on each node)

[ceph-admin@ceph001 my-cluster]$ ceph-deploy osd create ceph001:/dev/sdd
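The tree below shows three OSDs per host, so the command above has to be repeated for every data disk on every node. A sketch that only prints the commands for review; the disk names sdb and sdc are hypothetical (only /dev/sdd appears in these notes), and the HOST:DISK form follows the ceph-deploy 1.5.x syntax used above. ceph-deploy 2.x expects `ceph-deploy osd create --data /dev/sdd ceph001` instead.

```shell
# print_osd_create_cmds "DISK..." HOST...   (hypothetical helper)
# Prints one "ceph-deploy osd create HOST:/dev/DISK" line per host/disk
# pair so the list can be reviewed before anything is executed.
print_osd_create_cmds() {
  disks=$1
  shift
  for host in "$@"; do
    for disk in $disks; do
      echo "ceph-deploy osd create ${host}:/dev/${disk}"
    done
  done
}
```

Usage, from ~/my-cluster: `print_osd_create_cmds "sdb sdc sdd" ceph001 ceph002 ceph003`; once the list looks right, append `| sh` to run it.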

Check the current cluster layout (OSD tree)

[ceph-admin@ceph002 /]$ ceph osd tree

ID WEIGHT  TYPE NAME        UP/DOWN REWEIGHT PRIMARY-AFFINITY
-1 2.15181 root default
-2 0.71727     host ceph001
 0 0.23909         osd.0         up  1.00000          1.00000
 1 0.23909         osd.1         up  1.00000          1.00000
 2 0.23909         osd.2         up  1.00000          1.00000
-3 0.86366     host ceph002
 3 0.28789         osd.3         up  1.00000          1.00000
 4 0.28789         osd.4         up  1.00000          1.00000
 5 0.28789         osd.5         up  1.00000          1.00000
-4 0.57088     host ceph003
 6 0.19029         osd.6         up  1.00000          1.00000
 7 0.19029         osd.7         up  1.00000          1.00000
 8 0.19029         osd.8         up  1.00000          1.00000

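Create a client user for RBD with read access to the monitors and read/write/execute access to the rbd pool

[ceph-admin@ceph001 ~]$ ceph auth get-or-create client.rbd mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=rbd'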

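Delete a pool (the pool name is given twice as a safety check; on luminous the monitors also refuse unless mon_allow_pool_delete is set to true)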

[root@ceph001 ~]# ceph osd pool rm ceph-external ceph-external --yes-i-really-really-mean-it

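Create a 10 GiB RBD image named rbd1 in the default rbd pool, with only the layering feature enabled, and show its details

[root@ceph002 .ssh]# rbd create rbd1 --image-feature layering --size 10G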

[root@ceph002 .ssh]# rbd info rbd1

Show the cluster's total and available capacity

ceph df

1. Warning: too few PGs per OSD (21 < min 30)

[ceph-admin@ceph002 /]$ ceph -s

health HEALTH_WARN

too few PGs per OSD (21 < min 30)

Fix: increase pg_num first, then pgp_num, on the rbd pool:

[ceph-admin@ceph002 /]$ ceph osd pool set rbd pg_num 128

[ceph-admin@ceph002 /]$ ceph osd pool set rbd pgp_num 128
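Where 21 comes from, as a worked check: the warning compares the number of PG copies per OSD (pg_num times the replica count, summed over pools, divided by the number of OSDs) against the minimum of 30. Assuming rbd was the only pool, with 64 PGs and size 3 (assumed values, but they reproduce the reported 21 on these 9 OSDs), raising pg_num to 128 lifts the ratio above the minimum. pgs_per_osd is a hypothetical helper.

```shell
# pgs_per_osd PG_NUM SIZE NUM_OSDS   (hypothetical helper)
# Every PG is stored SIZE times, so the pool places PG_NUM * SIZE PG copies
# on NUM_OSDS OSDs; shell arithmetic uses integer division.
pgs_per_osd() {
  echo $(( $1 * $2 / $3 ))
}

pgs_per_osd 64 3 9    # before the change: 21
pgs_per_osd 128 3 9   # after the change: 42
```

Keep pgp_num equal to pg_num so data is actually rebalanced onto the new PGs; on jewel and luminous pg_num can only be increased, never decreased.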

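2. Check the ownership and permissions of the files and directories under /etc/ceph/ on each node (ceph-admin must be able to read the admin keyring)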

[root@ceph001 ~]# chown -R ceph-admin:ceph-admin /etc/ceph/